I am not going to "promote" them here, it would be rude.

But it's not rude to question Steve's capabilities, without justifying one's remarks? Not many reviewers have examined CPU scaling with a 3090, but there's absolutely nothing wrong with providing links to such testing. Here's one to begin with:

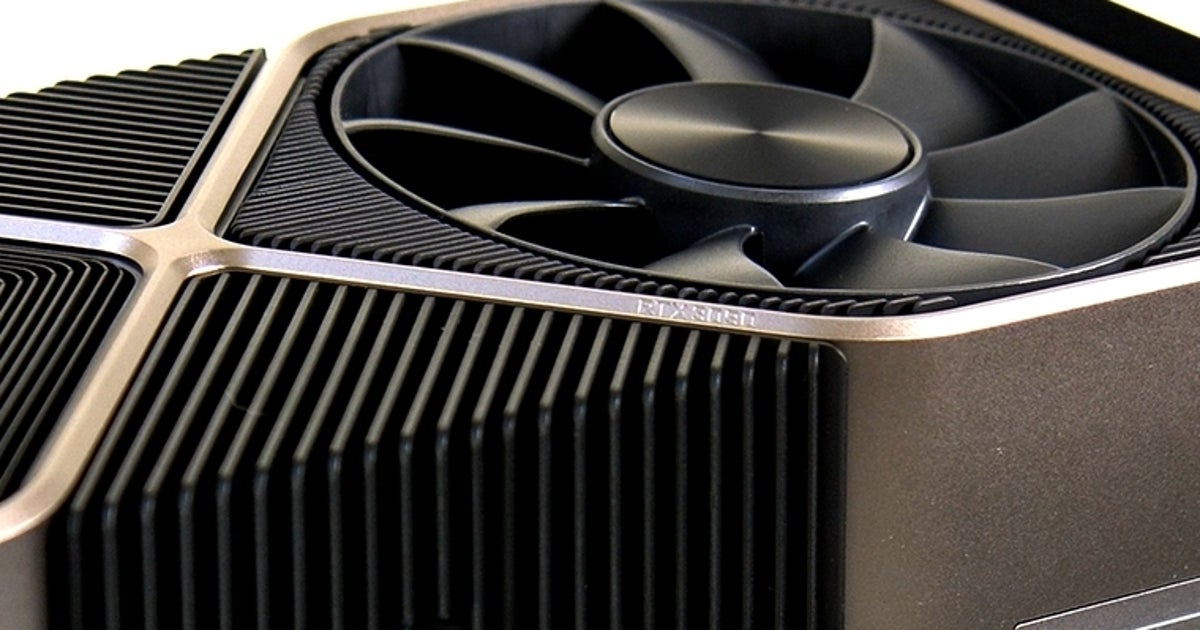

The NVIDIA RTX 3090 benchmarks are here and with them we find whether or not it's worth it for gamers

hardwarecanucks.com

Notice that in all of the games tested, apart from CS:GO, the 3090 at 4K produced the same results (within the usual margins of error and variability) when running with the i9-10900K at 5.3 GHz, as it did at stock speeds.

If using an AMD Ryzen 9 3950X is going to limit the RTX 3090's performance so much, then why did Steve's testing produce higher results than Hardware Canucks'?

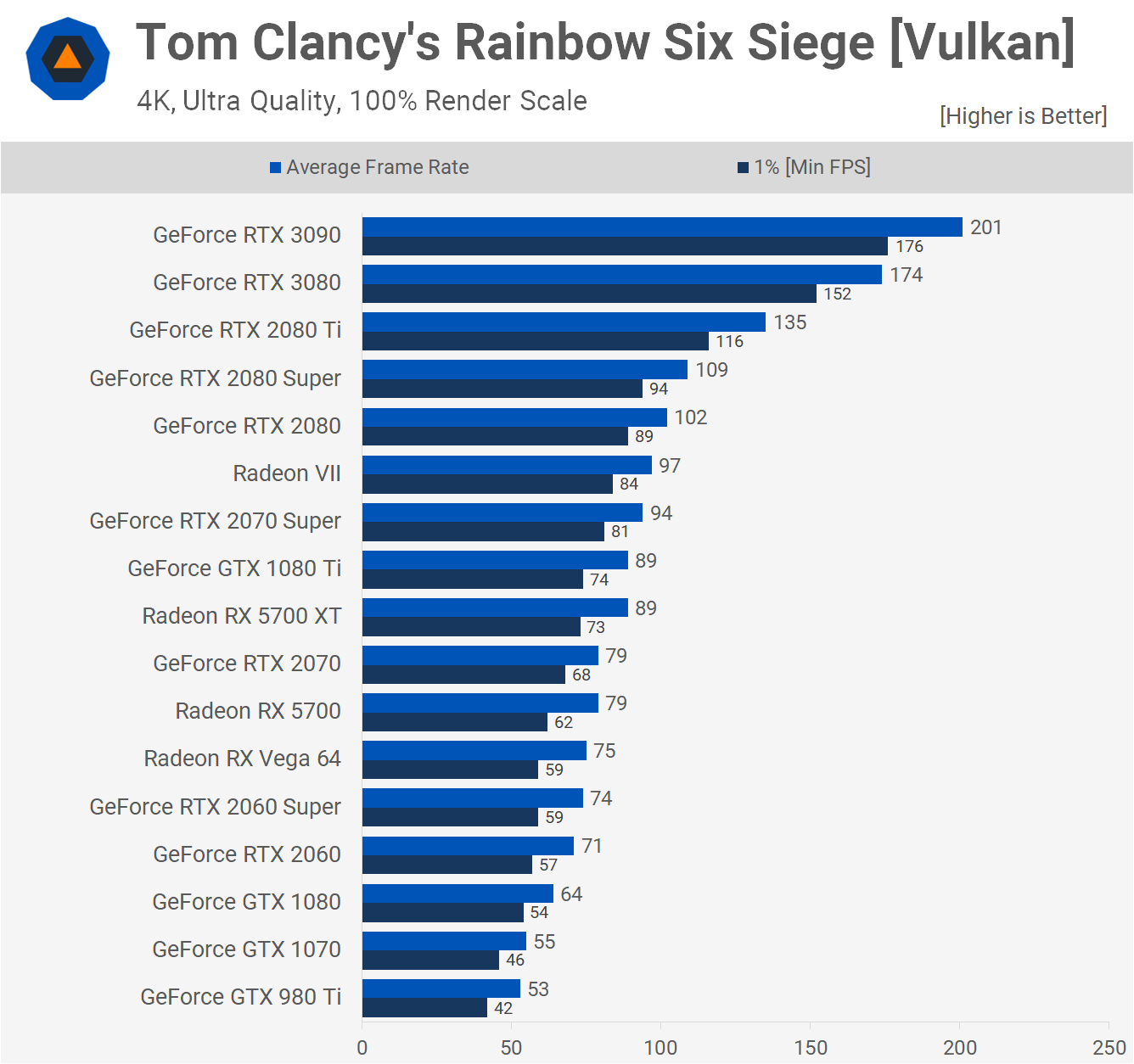

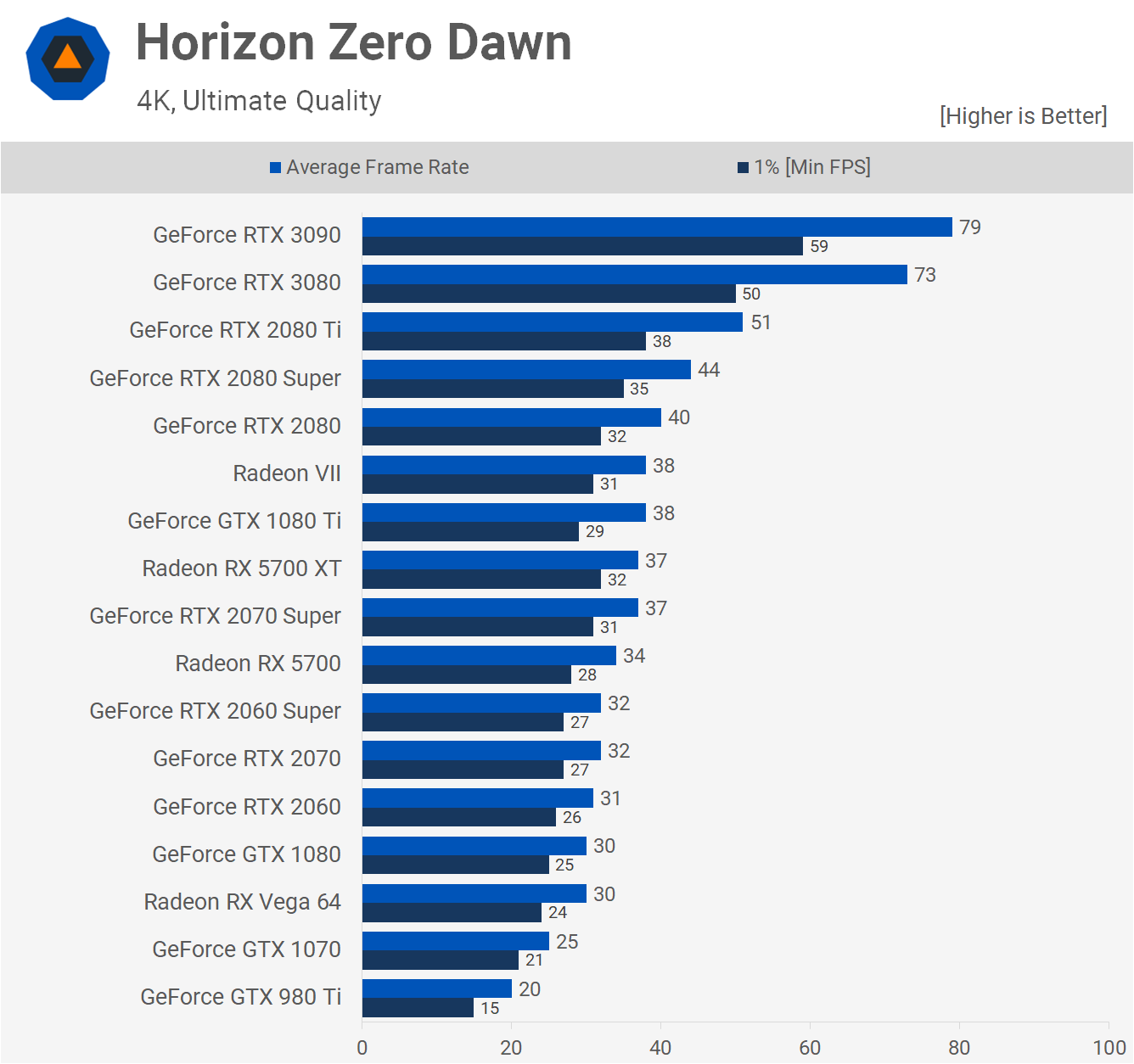

Or picking another test, why did they get very similar average results in Horizon Zero Dawn, but different 1% Low values?

The answer is simple. Every reviewer uses a unique test platform, configured differently from everyone else's - not just in hardware settings, but in how the game is used for testing (some take in-game results, like Steve does, others use a built-in benchmark if available).

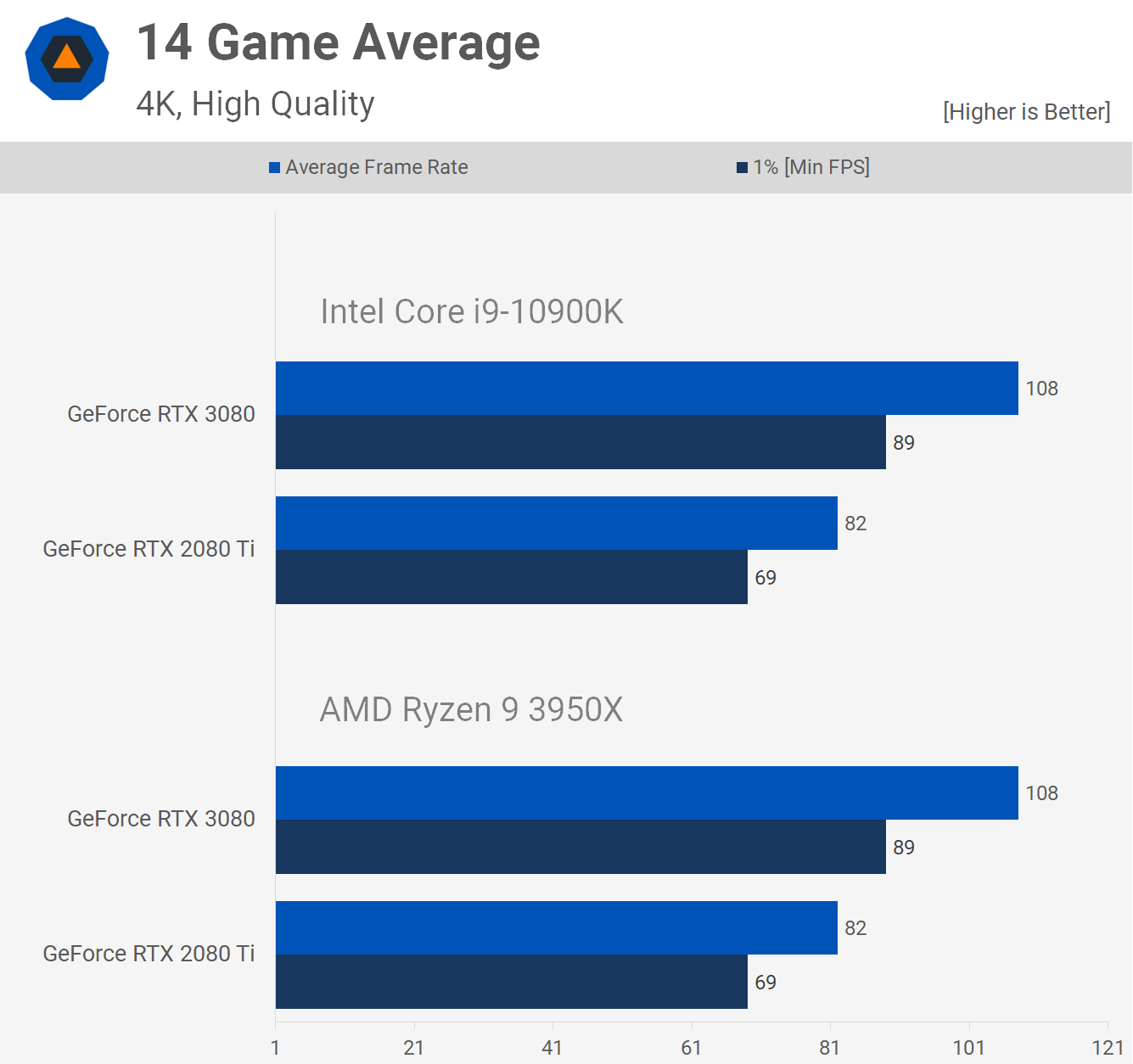

Unless one uses exactly the same workload on the graphics card, in exactly the same environmental conditions, then any comparison between test platforms is a vacuous exercise at best. But of course, that's exactly what Steve did do when he ran the 3080 in the 10900K and 3950X machines:

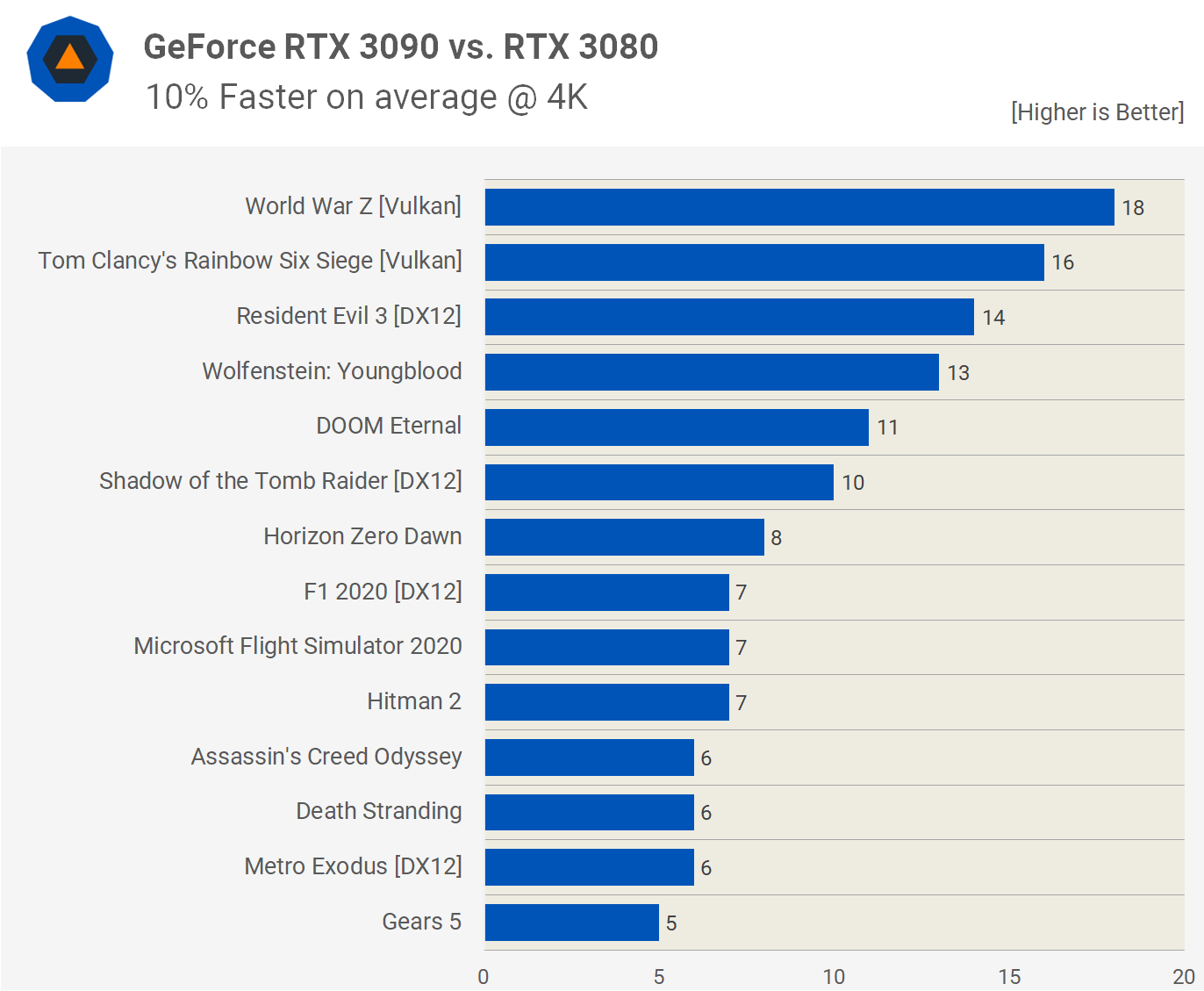

One might then ask why the 3090 isn't consistently faster than the 3080 in all games, at 4K, if it's not CPU limited:

In terms of theoretical maximum throughputs, the 3090 FE fares against the 3080 FE like this:

Pixel Rate = 189.8 vs 164.2 GPixel/s (15.6% higher)

Texture Rate = 556.0 vs 456.1 GTexel/s (21.9% higher)

FP32 rate = 35.58 vs 29.77 TFLOPS (19.5% higher)

Bandwidth = 936.2 vs 760.3 GB/s (23.1% higher)

So if the 3090 were completely GPU bound at 4K, in all games, then it should be at least 15% faster than a 3080. Well, we can see this in some of the tests in the above chart, but what about the rest? The answer to this is simple: there are indeed some games that will be CPU limited, even at 4K, with a top-end graphics card.
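For anyone who wants to check the arithmetic, here is a minimal Python sketch that recomputes the relative advantage from the four pairs of figures listed above. The names `SPECS` and `uplift_percent` are just illustrative, and the numbers are the theoretical peaks quoted in this post, not measured results.

```python
# Theoretical peak throughputs quoted in the post: (RTX 3090 FE, RTX 3080 FE)
SPECS = {
    "Pixel Rate (GPixel/s)": (189.8, 164.2),
    "Texture Rate (GTexel/s)": (556.0, 456.1),
    "FP32 rate (TFLOPS)": (35.58, 29.77),
    "Bandwidth (GB/s)": (936.2, 760.3),
}


def uplift_percent(new, old):
    """Relative advantage of `new` over `old`, expressed in percent."""
    return (new / old - 1.0) * 100.0


# Advantage of the 3090 over the 3080 for each metric
uplifts = {name: uplift_percent(a, b) for name, (a, b) in SPECS.items()}

# The smallest advantage is the floor one would expect in a fully GPU-bound test
floor_metric = min(uplifts, key=uplifts.get)

for name, pct in uplifts.items():
    print(f"{name}: {pct:.1f}% higher")
print(f"Smallest theoretical advantage: {floor_metric}")
```

The smallest of the four is the pixel rate, at a little over 15%, which is where the "at least 15%" floor comes from. A game showing a smaller gap than that at 4K is being held back by something other than the graphics card.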

But let's take one last test from Hardware Canucks:

The 13% CPU overclock is only producing a 1% increase in average performance, with the 3090. The card itself is only 9% faster than the 3080 in this test, and this is with what most people would consider to be the fastest 'gaming' CPU out there.

But this is all because of the game itself, and not the hardware. For example, Death Stranding produces a similar outcome, because the game scales more with threads than it does with core speed.

So all the noise about how an AMD Ryzen 9 3950X is somehow constraining the likes of a 3080 and 3090, and not showing them in their true light, is just that: noise. There's no quantifiable, repeatable evidence to show that this choice of CPU is deliberately constraining the results of these graphics cards at 4K, when examined in the same test conditions.

Besides, it's not like every review of those cards is done using a 10900K platform - a brief survey shows the following:

Tom's Hardware - 9900K

PC Gamer - 10700K

HotHardware - 10980XE

Guru3D - 9900K

TechPowerUp - 9900K

Eurogamer - 10900K

KitGuru - 10900K

ArsTechnica - 8700K

GamersNexus - 10700K

PCWorld - 8700K

PC Perspective - 3900X

That reviewers (ourselves included) are using a range of different platforms should be celebrated, not picked over. All data is useful, no matter whether or not one feels the information is relevant to oneself.