Let's just look at today's prices...

7900 XTX --> $900-950

4090 --> $1700-1900

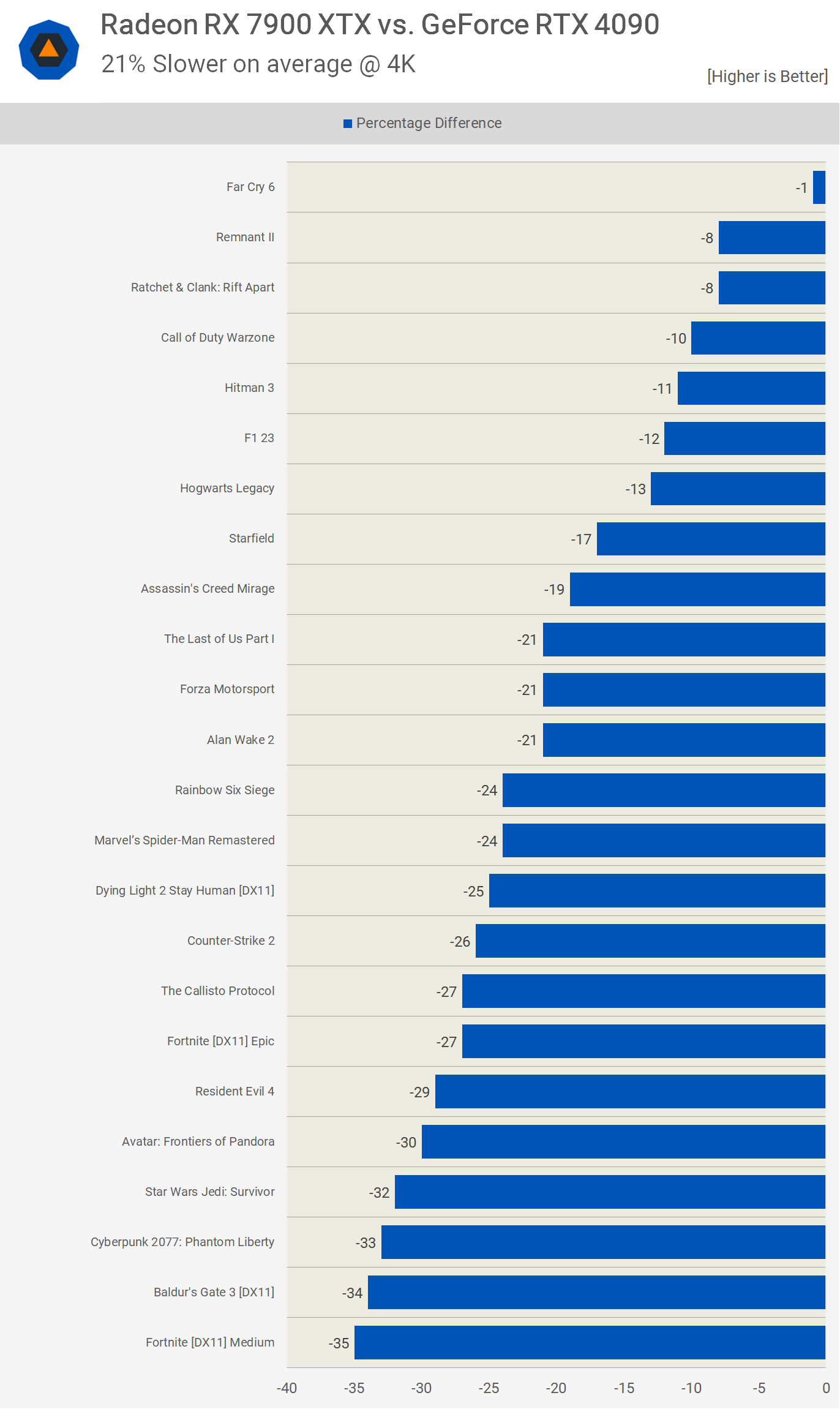

So, do you think that 20% more performance is worth almost a 100% price increase?
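To put that question in numbers, here is a quick Python sketch. It uses the midpoints of the price ranges above and assumes the 4090 is about 20% faster. The 1.2 performance factor is taken from the rough figure in this post, not from a specific benchmark, and the helper names are just for illustration.

```python
# Price ranges in USD quoted above, as (low, high)
prices = {
    "7900 XTX": (900, 950),
    "4090": (1700, 1900),
}

# Assumption: the 4090 is ~20% faster (relative performance 1.2 vs. 1.0)
relative_perf = {"7900 XTX": 1.0, "4090": 1.2}


def midpoint(price_range):
    """Middle of a (low, high) price range."""
    low, high = price_range
    return (low + high) / 2


def price_premium(base, other):
    """Percentage by which `other` costs more than `base`, using midpoint prices."""
    return (midpoint(prices[other]) / midpoint(prices[base]) - 1) * 100


def cost_per_perf(card):
    """Midpoint price divided by relative performance (USD per unit of performance)."""
    return midpoint(prices[card]) / relative_perf[card]


if __name__ == "__main__":
    print(f"Price premium: {price_premium('7900 XTX', '4090'):.1f}%")
    for card in prices:
        print(f"{card}: ${cost_per_perf(card):.0f} per unit of relative performance")
```

Run as-is, it reports a price premium of about 94.6% and roughly $925 vs. $1500 per unit of relative performance, so under these assumptions the 4090 costs around 62% more for each unit of performance it delivers.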

And that alone would be bad enough... however, there is more to consider:

12VHPWR power connectors melting

PCBs cracking

So if you ask me whether I can recommend buying a 4090... my answer is a resounding NO! As a PC enthusiast, I can't do that.
