Why it matters: When Nvidia unveiled its RTX 20-series line last year, real-time ray tracing was one of the main features it pushed. Now there’s some good news for owners of GTX cards: ray tracing support is coming to these older products, albeit in a basic form and with some caveats.

Update: The latest WHQL GeForce driver, version 425.31, enables DirectX Raytracing support for GeForce GTX 1660/Ti, GTX 1080/Ti, GTX 1070/Ti, and GTX 1060 6GB graphics cards, as well as Titan GPUs based on the previous-gen Pascal and Volta architectures.

Update #2: Yes, we will be benchmarking ray tracing on GTX cards soon.

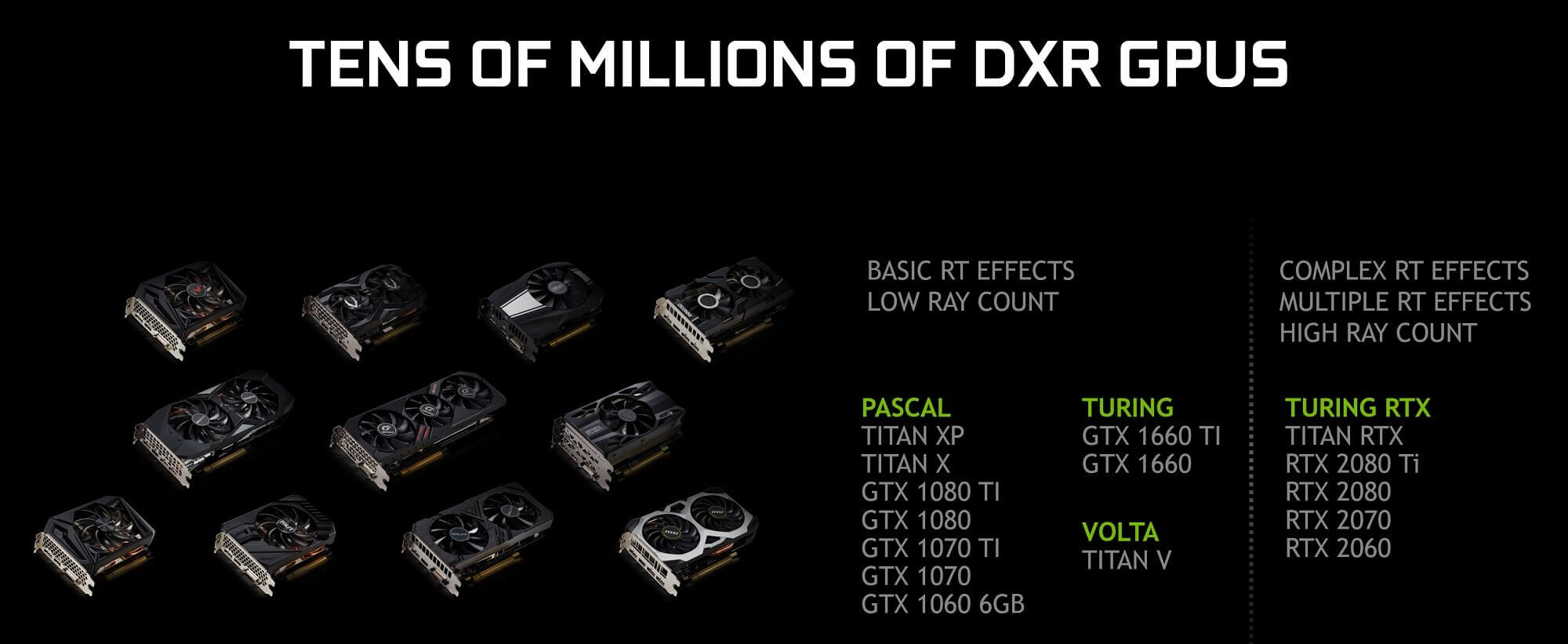

Nvidia used the Game Developers Conference to reveal that April’s GeForce driver update will add basic ray tracing support to cards from the 6GB GTX 1060 upward. This includes the recent GTX 1660 and 1660 Ti, as well as the Titan X, Titan Xp, and Titan V. Nvidia writes that existing ray-traced games will work on the GTX cards without needing game updates, because those titles are built on DirectX 12’s DirectX Raytracing API.

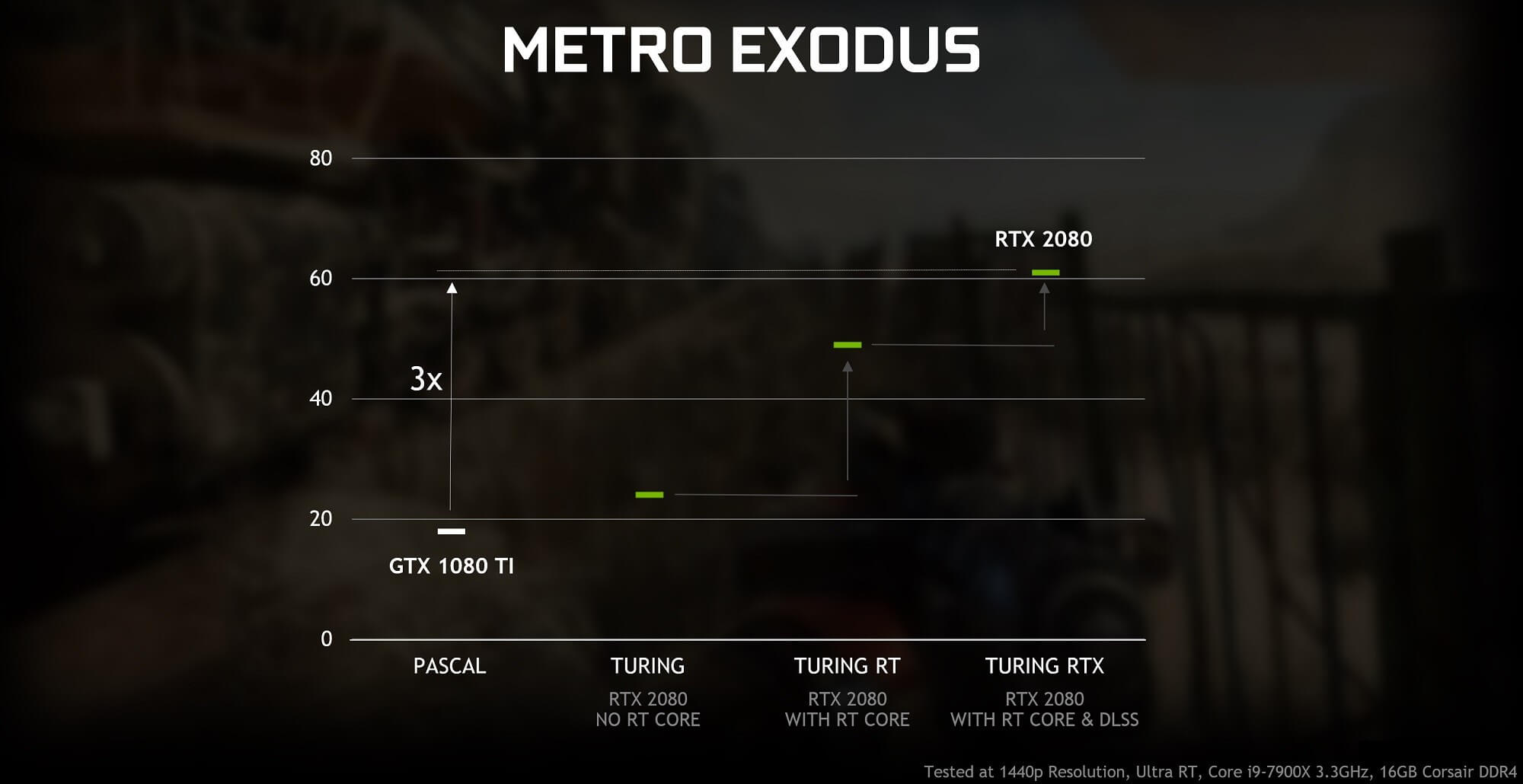

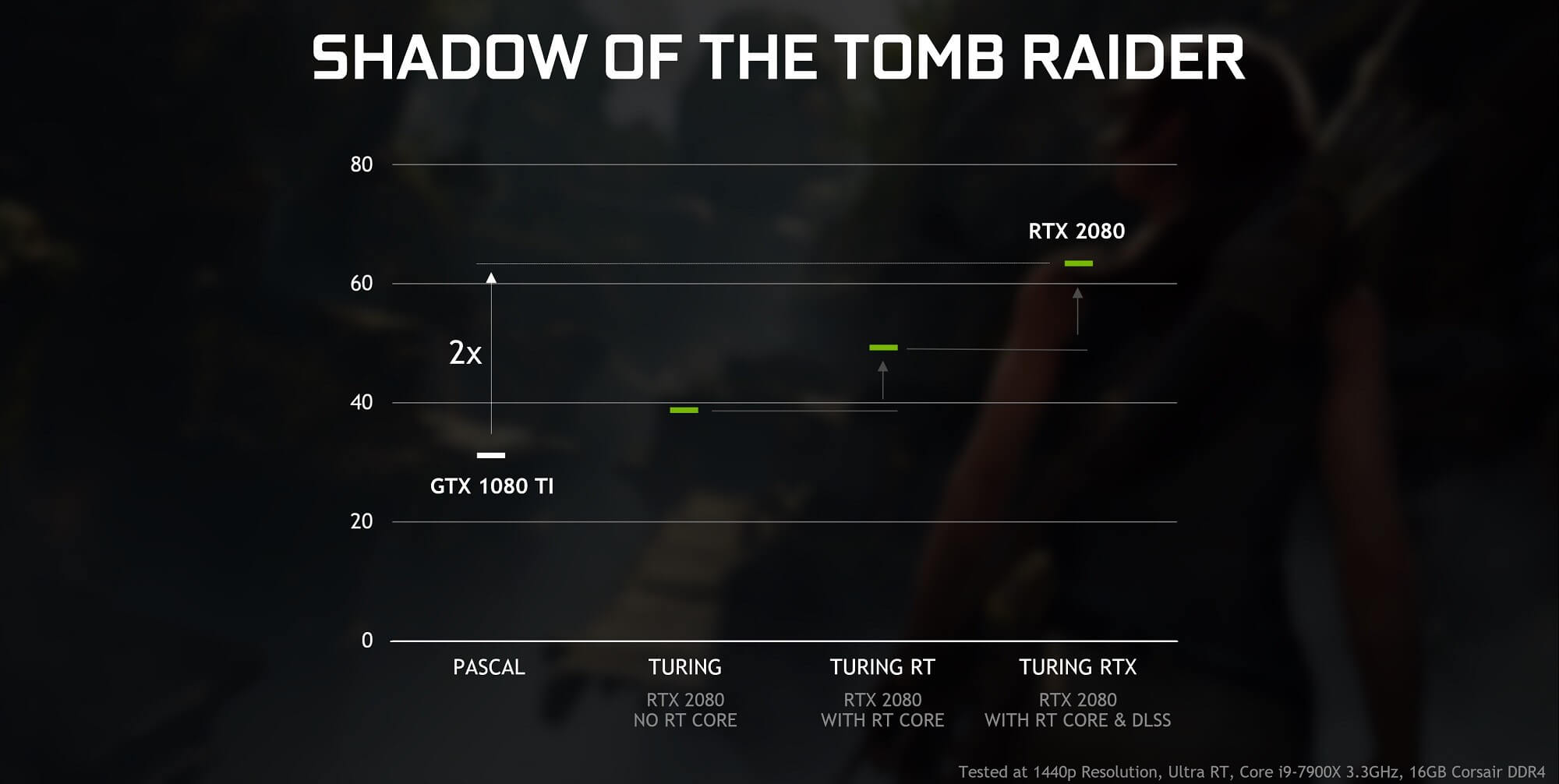

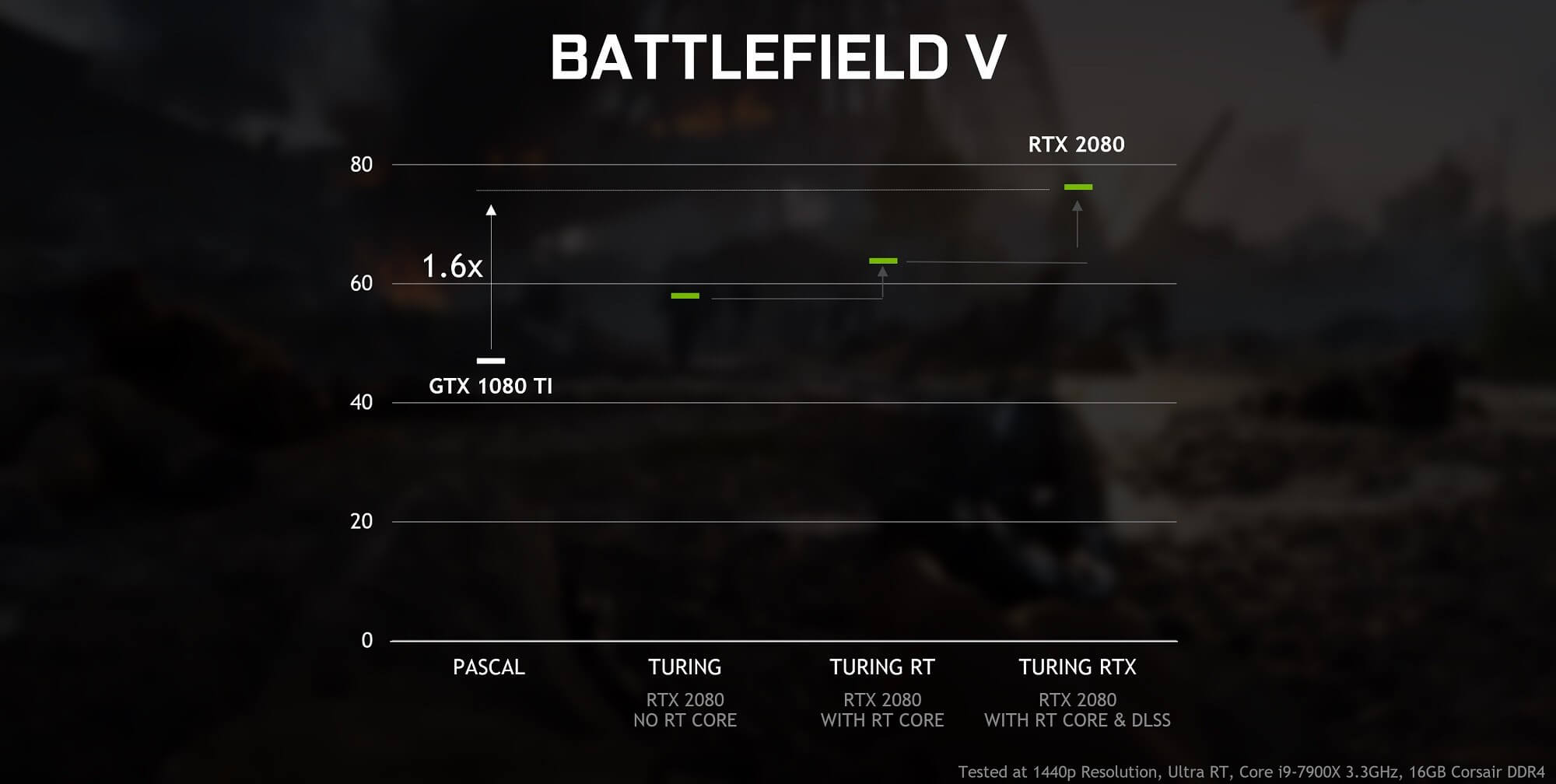

Despite the ray tracing effects on non-RTX cards being “basic” and casting far fewer rays, using the feature on these GPUs comes with a hefty performance hit, which varies depending on the game being played.

Using a Core i9-7900X and a GTX 1080 Ti at 1440p, Metro Exodus, which uses real-time ray-traced global illumination, can’t even reach 20 fps. Things improve slightly with Shadow of the Tomb Raider, which comes in at around 30 fps, and Battlefield V’s less demanding ray tracing effects mean 50-60 fps is possible.

Those who want the full-fat ray tracing experience and Nvidia’s Deep Learning Super Sampling (DLSS) will still need an RTX card and its dedicated RT and Tensor cores. But GTX owners willing to sacrifice performance might appreciate the chance to see how much ray tracing adds to a game.

We’ll just have to wait and see if this encourages more game developers to support ray tracing, which is no doubt what Nvidia is hoping for.

https://www.techspot.com/news/79256-nvidia-adding-ray-tracing-support-gtx-cards.html