Why it matters: As Moore's law marches on, Nvidia's HGX-2 brings us one step closer to exascale computing. Nvidia continues to be the king of deep learning, and this new platform will let scientists and developers tackle workloads that were impractical just a few months ago.

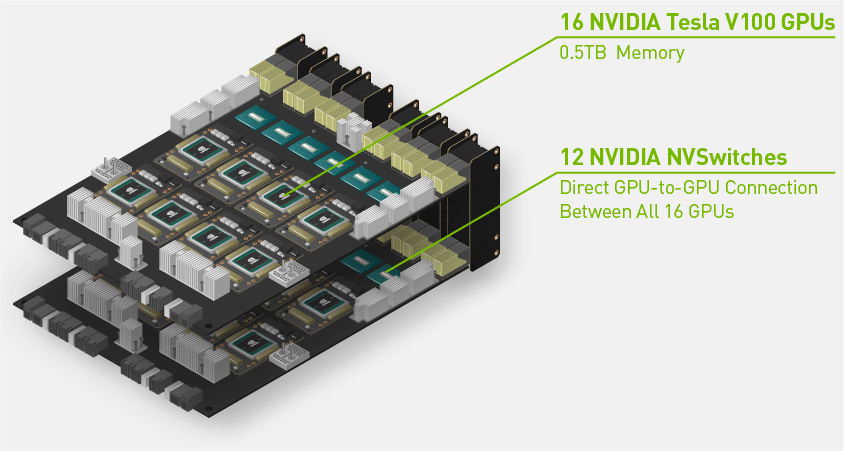

Nvidia has unveiled the HGX-2 Cloud-Server Platform, packed with 16 Tesla V100 GPUs and a whopping 0.5TB of GPU memory. Nvidia claims it is the world's most powerful multi-precision computing platform and that a single package can serve both high-performance computing (HPC) and artificial intelligence (AI) workloads.

In total, the HGX-2 can deliver up to 2 petaFLOPS of mixed-precision (Tensor Core) compute. It supports calculations "using FP64 and FP32 for scientific computing and simulations, while also enabling FP16 and Int8 for AI training and inference."
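Both headline figures follow from simple per-GPU multiplication. The sketch below is a back-of-the-envelope check that assumes the 32GB Tesla V100 with a peak of roughly 125 TFLOPS of Tensor Core throughput per GPU; those per-GPU numbers are assumptions based on the V100 spec, not figures taken from the HGX-2 announcement.

```python
# Back-of-the-envelope check of the HGX-2 headline figures.
# Per-GPU values below are ASSUMPTIONS (32GB Tesla V100 spec), not article data.

NUM_GPUS = 16                 # from the article
TENSOR_TFLOPS_PER_GPU = 125   # assumed peak mixed-precision Tensor Core TFLOPS per V100
HBM2_GB_PER_GPU = 32          # assumed HBM2 capacity per V100


def aggregate(num_gpus: int, per_gpu: float) -> float:
    """Scale a per-GPU figure linearly to the whole platform (theoretical peak)."""
    return num_gpus * per_gpu


peak_pflops = aggregate(NUM_GPUS, TENSOR_TFLOPS_PER_GPU) / 1000  # TFLOPS -> PFLOPS
total_memory_tb = aggregate(NUM_GPUS, HBM2_GB_PER_GPU) / 1024   # GB -> TB (binary)

print(f"Peak mixed-precision compute: {peak_pflops:.1f} PFLOPS")  # 2.0
print(f"Total HBM2 memory: {total_memory_tb:.1f} TB")             # 0.5
```

These are theoretical peaks: real workloads rarely keep every Tensor Core busy, so sustained throughput will be lower.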

To keep each of the V100 GPUs fed with data, Nvidia uses its NVSwitch interconnect fabric, which lets every GPU communicate directly with every other GPU at a total bandwidth of 2.4TB/s. It also gives each GPU access to the full 0.5TB of pooled HBM2 memory.

The HGX-2 itself is not a computer, but rather a platform that manufacturers can use to build their own machines. The first system built on this platform was Nvidia's own DGX-2, announced in March.

Based on preliminary performance numbers, the HGX-2 platform can achieve AI training speeds of 15,500 images per second. According to Nvidia, that lets a single HGX-2 system stand in for up to 300 CPU-only servers in AI training and up to 60 CPU-only servers in HPC. Nvidia also reports that hardware and software improvements to its platforms have boosted performance on deep learning workloads tenfold in the past six months.

Server makers Lenovo, QCT, Supermicro, and Wiwynn have all announced plans to bring HGX-2-based systems to market later this year. Since HPC and AI are very broad markets, Nvidia will also release other variants of the platform: the HGX-T for AI training, the HGX-I for inference, and the SCX for supercomputing. Each pairs a different combination of CPUs, GPUs, and memory to meet specific workload requirements.

https://www.techspot.com/news/74869-nvidia-new-hgx-2-platform-offers-2-petaflops.html