
Radeon RX 7900 XT vs. GeForce RTX 4070 Ti: 50 Game Benchmark

- Thread starter Steve


But...

AMD wins on price/performance and doesn't have to overmarket its business hardware to gamers... it doesn't matter how much marketing NVidia does, the 4070ti will never beat the 4080... but the XT can and does! Seems you are afraid to admit this... and are influenced by marketing.

Additionally, the 4090 isn't gaming architecture... it's just Pro hardware being sold as "gamer" so Jensen Huang doesn't have egg on his face... so he sells an ego-cake $4k dGPU for $2,100 without pro software, so NVidia isn't embarrassed by RDNA. In reality, the $849 XT comes within 25 frames of the $2k 4090 an embarrassing number of times, in reviews across 20+ review sites... and even beats NVidia's flagship in a few.

After seeing the XT punch above its weight over and over and over... gamers are like "what's a 4070ti...?"

Lastly, GAME DEVELOPERS don't care 1 bit about DLSS, or NVidia anything... the Industry Standard is RDNA (XSX/XSS/PlayStation 5/Steam Deck/etc.).

IMO, many are living in the past and cannot accept today's reality. I have a slew of EVGA GPUs lying around; even they knew when to move on.

The 7900XT does not outsell the 4080, or even come close. I have seen B2B numbers in Europe and Nvidia dominates easily. Look at the Steam HW Survey if in doubt.

9 out of 10 people who are not into tech/hardware and need a desktop GPU only look at Nvidia, because they have heard terrible things about AMD or had a bad experience earlier.

Games are not coded for RDNA at all. You are clueless. Standards are being used.

The 4090 is not a pro card; the Quadro line is. The 4090 is an enthusiast gaming card and it's selling like hotcakes, since it's the absolute best 4K+ card available, by a huge margin. It destroys the 7900XTX easily overall at 4K+.

Game devs care a lot about Nvidia's money, and Nvidia pays them to implement DLSS, RT and other stuff, because Nvidia can easily afford it and AMD can't.

EVGA were sleeping anyway. They have not released a good Nvidia GPU in years. They will probably go bankrupt in a few years, because Nvidia GPUs were like 80% of their income. They make garbage mostly. Without GPUs they will be gone or sold. Their PSUs are OEM-built and they make peanuts from them. The rest of their hardware is pretty meh. EVGA was a good brand 5-10 years ago; today it's mostly crap.

Hahah, good one. Both Hardware Unboxed and Gamers Nexus have several videos about the issue.

Too bad not a single hardware reviewer was able to replicate those black screen etc. issues.

You must be joking; Nvidia's UI is the KISS acronym defined vs AMD's operation MC Escher.

There is objectively nothing "concise" about the UI of Nvidia's applications. Absolutely nothing.

Someone like me can navigate it, but nobody, and I repeat, nobody who isn't tech-savvy can use it. It's a broken mess that urgently needs a modern rework.


You are talking to a programmer who works in an agency surrounded by designers, with a decade of experience making all kinds of websites and web applications. I've seen it all, from bad to good.

You must be joking; Nvidia's UI is KISS vs AMD's operation Picasso.

I don't get it. It's a known fact that Nvidia's software is an ancient mess in dire need of a complete rework. Why are you acting as if this isn't the case? If there is one thing people always complain about, it is the Nvidia Control Panel and GeForce Experience.

As a tech-savvy person I can navigate it, but someone who isn't is just lost. They made some UI updates to GeForce Experience in 2020, to the game list and the game recording feature, and that's about it.

At least it's not as bad as what Intel is doing with their Arc control panel UI.

Avro Arrow


I totally agree with you and I might not want it either, but M.2 slots aren't a big deal for gamers because NVMe load times aren't much better than SATA SSDs.

That is because the AMD board is at least somewhat useful and future-proof. If you look carefully, that low-end Intel trash has 0 (that means, zero) M.2 slots. And yes, that costs money.

Remember that big gaming storage test that Tim did a while back?

"What We Learned:

Why don't games benefit all that much from faster SSDs? Well, it seems clear that raw storage performance is not the main bottleneck for loading today's games. Pretty much all games released up to this point are designed to be run off hard drives, which are very slow; after all, the previous generation of consoles with the PS4 and Xbox One both used slow mechanical drives to store games.

Today's game engines simply aren't built to make full use of fast storage, and so far there's been little incentive to optimize for PCIe SSDs. Instead, the main limitation seems to be things like how quickly the CPU can decompress assets, and how quickly it can process a level before it's ready for action, rather than how fast it can read data off storage."
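The quoted conclusion, that CPU-side decompression and level processing rather than raw read speed set load times, can be illustrated with a toy model. This is a minimal sketch under stated assumptions: reading and decompression are assumed to overlap perfectly, and every number below is a hypothetical placeholder, not a result from Tim's test.

```python
def load_time_s(asset_gb, read_gbps, decompress_gbps, level_setup_s):
    """Toy load-time model (assumption): reading and CPU decompression are
    pipelined, so the slower of the two stages sets the pace; level setup
    then runs after all assets have arrived."""
    streaming_s = max(asset_gb / read_gbps, asset_gb / decompress_gbps)
    return streaming_s + level_setup_s


# Hypothetical, illustrative figures (not measurements):
ASSETS_GB = 4.0             # compressed asset data loaded for a level
CPU_DECOMPRESS_GBPS = 0.4   # CPU-side decompression throughput
LEVEL_SETUP_S = 5.0         # time spent building the level after data arrives

for name, read_gbps in [("SATA SSD", 0.55), ("PCIe NVMe SSD", 3.5)]:
    t = load_time_s(ASSETS_GB, read_gbps, CPU_DECOMPRESS_GBPS, LEVEL_SETUP_S)
    print(f"{name}: {t:.1f} s")
```

With these made-up figures both drives come out at 15.0 s, because decompression is the slowest stage; only when the hypothetical decompression throughput is raised does the NVMe drive's extra read speed start to show.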

I agree, but it's not about buying new DDR4, it's about being able to use the DDR4 that you already own. I mean, let's be honest here, what percentage of gamers looking to get this do you think are still using DDR3? I think that it's statistically zero.

Older DDR versions are never worth buying. DDR5 will quite soon make DDR4 obsolete, and DDR4 spare parts (motherboards etc.) will be hard to find. That has happened in every DDR era. While older DDR is "fine", it's just not worth buying, since it goes in the trash at the next upgrade or late fix.

Most people still don't see it that way. Intel has been the #1 name in x86 CPUs since the creation of the 8086 back in 1978. You don't really think that AMD managed to change 45 years of market perception in only 6 years, do you? There are probably just as many people who have never had an AMD CPU as there are who have never had a Radeon GPU. These aren't cheap devices and when people have to spend a lot of money, getting a familiar brand gives them peace of mind. Whether deserved or not, Intel hasn't relinquished that status. Also, don't forget how many losers are out there who think that LoserBenchmark is a reliable source of information...

At this moment AMD is the premium brand; Intel sells cheap trash since that's all they can do.

To you and me, sure, I agree. To most of the world, no, Intel is still the more recognised brand. AMD's greatest success has only happened over the past six years and by no means did it relegate Intel to almost nothing like AMD was during the FX years. Sure, AMD has been out-selling Intel but only in the last three years or so. Don't be fooled into thinking that AMD is even close to market parity with Intel yet because it isn't, not on the consumer side or the server side:

And premium parts always cost more than cheap ones. At least you get good value for money when buying AMD, and a premium brand just cannot easily accept trashy motherboards, because customers will think poor features are AMD's fault.

"In the fourth quarter of 2022, 62.8 percent of x86 computer processor or CPU tests recorded were from Intel processors, up from the lower percentage share seen in previous quarters of 2021, while 35.2 percent were AMD processors. When looking solely at laptop CPUs, Intel is the clear winner, accounting for 76.7 percent of laptop CPU test benchmark results in the fourth quarter of 2022."

That's going REALLY far back, like the early AGP era.

I remember those kinds of motherboards. There were some others that supported SDRAM (SDR) and DDR simultaneously.

Oh I agree, I think that AMD made the right move there. The problem was that Intel's reaction of lowering their motherboard prices was a very effective counter to that.

AMD said they are trying to offer a long-term platform with AM5. Pairing DDR4 memory with a long-term platform rarely makes sense.

Sure, but not so much that it rules out the majority of those sales having gone to the relatively few fanboys, very wealthy gamers and scalpers (scalpers are still a thing).

Also, with AMD it's easier for the customer, since AM5 indicates the board supports only DDR5 memory. With Intel it's a huge mess, as some boards support DDR4 and some DDR5. Intel did that only because they are now a low-end brand and cannot compete with AMD's premium features.

It's not only an assumption. You can check actual 7950X3D and 7900X3D sales data at Mindfactory. In a few hours, the 7950X3D sold (out) more units than any Intel CPU sold in 7 days (except the 13700K). So there was decent stock. Selling out that many at that price so quickly proves high demand.

Show me decent sales numbers after they've been on the market for a month and then I'll say "Yeah, sales have been good".

That's not true at all. TSMC could easily produce more than AMD could sell of any cache type. I don't know where you get that idea. There's nothing there that mentions a shortage; you are still only assuming.

Fact 1: V-Cache chips use a custom 7nm process, not the same one used on the 5800X3D. Source: https://www.techspot.com/news/97818-amd-explains-how-new-3d-v-cache-improves.html

That means AMD hasn't been able to produce V-Cache for Zen4 for as long as the 5800X3D has been on sale.

I'm sorry but that is not a fact as I see it. That is still you making assumptions.

Fact 2: The only major difference between any non-3D-cache Ryzen model and a 3D-cache model is the extra V-Cache chip. There is no shortage of Ryzen IO chips. There is no shortage of Zen4 chiplets. V-Cache chips? As usual, Epyc server chips (Genoa-X) will use V-Cache chips on every chiplet. That means a single Epyc CPU may consume 12 V-Cache chips. AMD of course gives higher priority to Epyc CPUs, and those are still unreleased.

Absolutely. They're a business and they have to focus more on the more profitable side of the business and that's commercial. I couldn't agree more.

That is both a blessing and a curse. Since server chips and desktop chips use mostly the same parts, both come from the same product lines, which makes them cheap and easy to produce. But it also means there won't be dedicated product lines for desktop chips.

That's not a fact either, because they always make the most parts in the low and mid-ranges, since the high-end parts don't sell nearly as well. This is not just true in the semiconductor business, this is true about everything.

Fact 3: A top-end desktop CPU has never before been in short supply unless there were some sort of production issues, because it makes zero sense to limit availability of the product with the best profit margin. It also makes little sense to delay releasing the top product with the best profit margin unless there are supply issues.

I'm starting to wonder if you understand what a fact is.

No, it doesn't add up, not even close. I still don't know how you managed to reach that conclusion. If there was a shortage, I can guarantee you that someone would've said something. Do you really think that you know more about what's going on than actual CPU journalists like Steve Burke, Steve Walton, Hilbert Hagedoorn, Paul Alcorn or W1zzard? All you did was read some short article that Steve Walton wrote, an article that did not mention any kind of shortage or even postulate that one existed, made one very presumptuous leap and decided to call it fact. That's not how facts work.

Adding those up, it's very clear that V-Cache chips are in short supply, even without AMD saying so directly (they won't, of course).

The article does talk about a special node but it doesn't say anything about a shortage, so you've still failed to offer evidence. If it's being made on a TSMC process dedicated to SRAM, that means there will be LOTS of it. Sure, AMD is using a lot of it (probably more than what is necessary) but there's nothing in that article that talks about limited supply, anywhere. In fact, if TSMC has a production line dedicated to it, it tells me the exact opposite. So, you're still making assumptions that I think are incorrect.

AMD uses 7nm not only because RAM doesn't really scale well beyond that but because it's far less expensive. This wouldn't be the case if there was an issue with scarcity because as we've been made painfully aware over the last couple of years, scarcity means that prices skyrocket. I really doubt that there could possibly be a scarcity issue at 7nm because, at the very least, both TSMC and Samsung are capable of producing it in large quantity. The 7nm process was originally produced seven years ago by TSMC which means that it is beyond mature at this point. Any major fab in the world could produce 7nm chips including TSMC, Samsung and GlobalFoundries as stated on its Wikipedia page:

7nm Process - Wikipedia

If there was a supply issue, all AMD would have to do is contact one of the other two to shore up supply and they would do it too because they're not going to leave profit on the table if they don't have to. As you pointed out yourself, a shortage could negatively affect EPYC (and/or Radeon Instinct if it's used there as well). This is something that AMD would never be able to accept if it didn't have to, and it doesn't have to.

At the end of the day, I'm still forced to believe that AMD's insistence on producing X3D versions of both R9 CPUs while refusing to produce an X3D version of their R5 CPU was greedy, stupid and ultimately anti-consumer. I don't say this just to rag on AMD because you know that I am far more fond of ragging on Intel and nVidia. I'm also not personally affected by what AMD does with Zen4 because I already have an R7-5800X3D and therefore have no interest in Zen4. What I see is AMD shooting themselves in the foot because AM5 isn't so good that it can't be passed up for LGA 1700.

Sure, LGA 1700 is a dead platform but you can buy an LGA 1700 motherboard that supports DDR4 for about HALF the price of the cheapest B650 board, the Gigabyte DS3H. That's EXTREMELY attractive because not only does it effectively nullify the longevity of the AM5 platform, the ability to continue using DDR4 makes it actually a better economical choice than AM5. A lot of shrewd gamers were waiting, hoping for a 6-core Zen4 X3D CPU (because why wouldn't they?) but took the Intel route immediately upon discovering that there would be no R5-7600X3D.

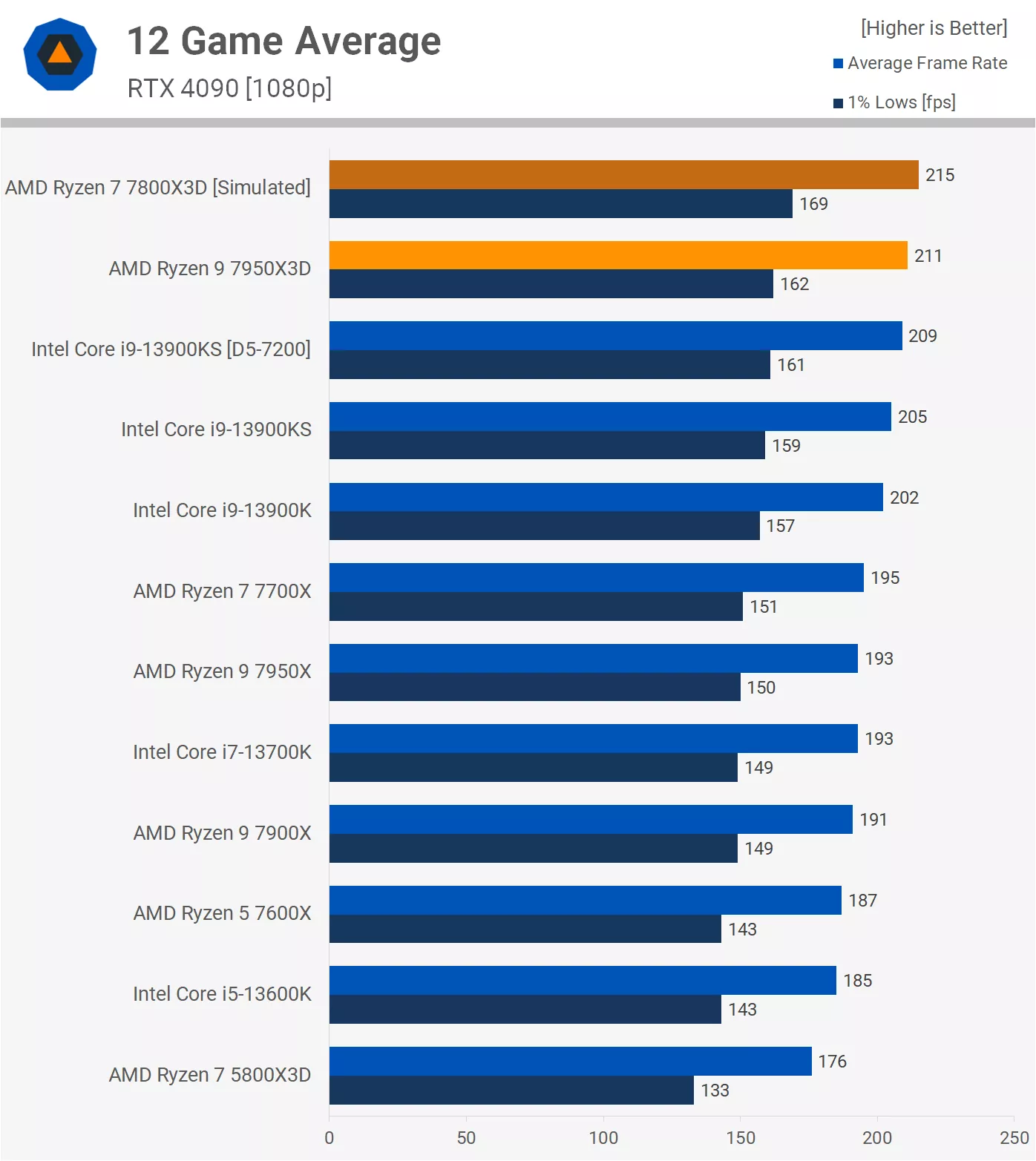

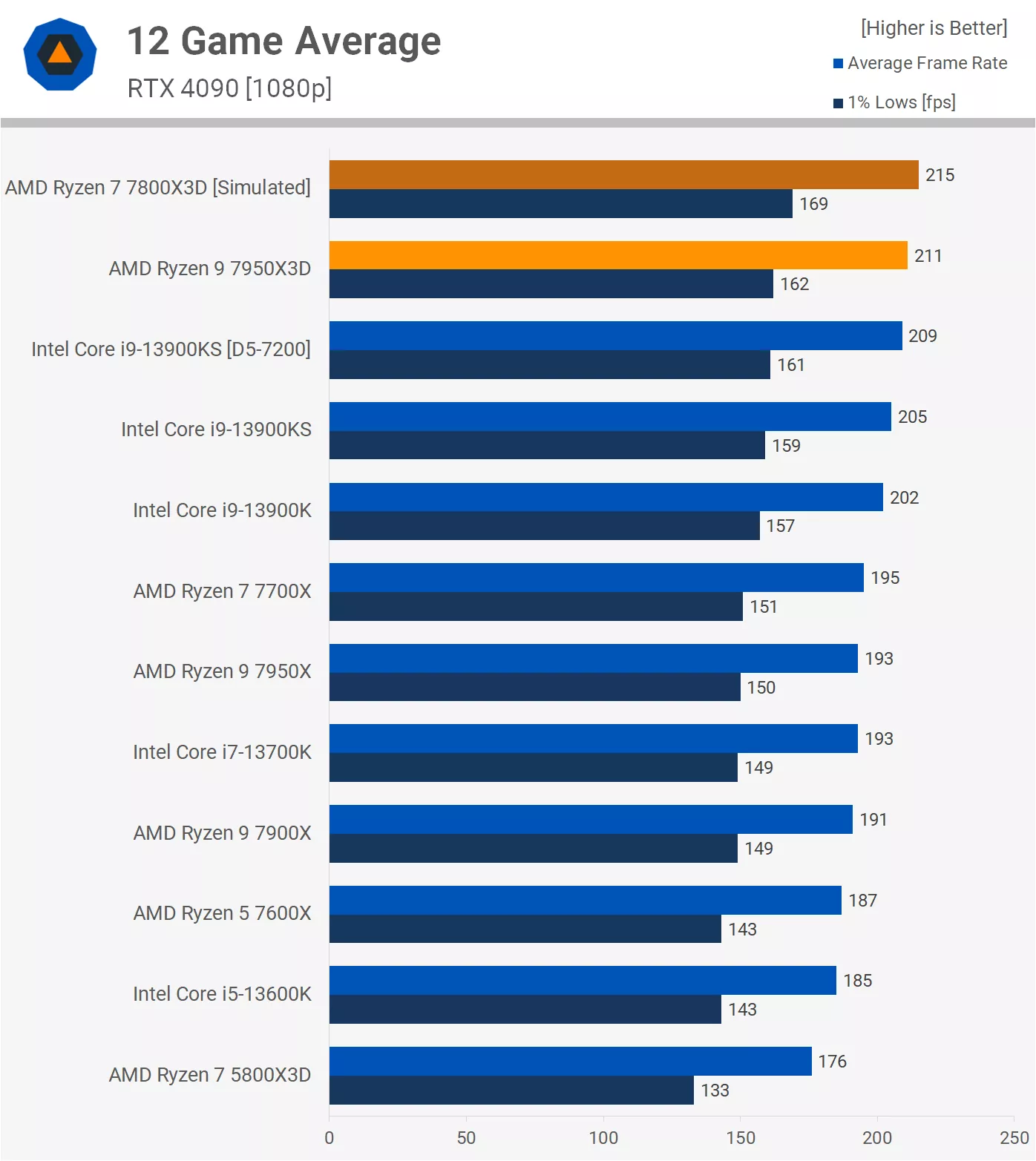

Why would they do that? Simply because they didn't think that paying $450 for an octocore CPU they didn't need on an expensive B650 motherboard and having to buy at least 16GB of DDR5 was worth it, and they were right. Just look at these two scenarios:

AM5:

CPU: R7-7800X3D - $450

Motherboard: Gigabyte B650M DS3H - $150

DDR5 16GB: Patriot Signature Line 16GB DDR5-4800 - $59

Total: $659

LGA 1700:

CPU: i5-13600 - $250

Motherboard: ASRock H610M-HDV - $80

DDR5 16GB kit: $0 (not required; the existing DDR4 is reused)

Total: $330

Estimated gaming performance difference:

215 ÷ 185 = 1.1622

With the vast majority of people not caring one way or the other as to which CPU maker they have in their rig, who is going to want to pay literally double for a gaming performance increase of less than 20%?
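The two builds above can be checked with a few lines of arithmetic. This sketch only re-uses the prices and the 215/185 performance estimate from the post; it assumes 215 is the AM5 build's score and 185 the LGA 1700 build's, and that the LGA 1700 buyer reuses a DDR4 kit they already own. The variable names and the "dollars per performance point" metric are illustrative additions, not something from the post.

```python
# Platform prices quoted in the post (USD). The LGA 1700 build assumes the
# buyer reuses a DDR4 kit they already own, so memory costs $0.
AM5_PARTS = {"R7-7800X3D": 450, "Gigabyte B650M DS3H": 150, "Patriot 16GB DDR5-4800": 59}
LGA1700_PARTS = {"i5-13600": 250, "ASRock H610M-HDV": 80, "DDR4 (reused)": 0}

# Relative gaming-performance estimate from the post
# (assumption: 215 is the AM5 build, 185 is the LGA 1700 build).
AM5_PERF = 215
LGA1700_PERF = 185


def platform_total(parts: dict[str, int]) -> int:
    """Sum the price of every part in a build."""
    return sum(parts.values())


def compare(perf_a, cost_a, perf_b, cost_b):
    """Return (performance ratio A/B, cost ratio A/B,
    dollars per performance point for A, dollars per performance point for B)."""
    return perf_a / perf_b, cost_a / cost_b, cost_a / perf_a, cost_b / perf_b


am5_cost = platform_total(AM5_PARTS)
intel_cost = platform_total(LGA1700_PARTS)
perf_ratio, cost_ratio, am5_dpp, intel_dpp = compare(AM5_PERF, am5_cost, LGA1700_PERF, intel_cost)

print(f"AM5 total: ${am5_cost}, LGA 1700 total: ${intel_cost}")
print(f"Performance ratio: {perf_ratio:.4f} (+{(perf_ratio - 1) * 100:.1f}%)")
print(f"Cost ratio: {cost_ratio:.2f}x")
print(f"$ per performance point: AM5 {am5_dpp:.2f}, LGA 1700 {intel_dpp:.2f}")
```

Running it gives $659 vs $330, a performance ratio of 1.1622 (about +16%) and a cost ratio just under 2x, i.e. roughly $3.07 vs $1.78 per performance point, which is the gap the post is pointing at.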

To be honest, not even the R5-7600X3D would be worth it (at the $350 price point that I envisioned) for gamers from a platform cost / performance standpoint but it has that hype and "cool factor" that often overrides the intelligence of gamers in the same way that nVidia's marketing does.

AMD is already not a great value for their new platform and not having an R5-7600X3D part at $350 only makes things worse.


Did you watch that video? Apparently not. He clearly said he was NOT having those problems. In other words, he was NOT able to replicate those black screen problems.

Hahah, good one. Both Hardware Unboxed and Gamers Nexus have several videos about the issue.

There you go:

The 7900XT does not outsell the 4080, or even come close. I have seen B2B numbers in Europe and Nvidia dominates easily. Look at the Steam HW Survey if in doubt.

Actual sales data prove that the 7900XT sells more than any Nvidia card, including the 4080. And again, that's Actual Sales Data that you can verify if you want. Next time, use facts and not some imaginary Steam Survey data that proves absolutely nothing.

Of course not, because the games suck, not because NVMe drives aren't faster. However, this will change in the future. How fast, I won't even predict, but it will eventually.

I totally agree with you and I might not want it either, but M.2 slots aren't a big deal for gamers because NVMe load times aren't much better than SATA SSDs.

Remember that big gaming storage test that Tim did a while back?

"What We Learned:

https://www.techspot.com/review/2116-storage-speed-game-loading/#What_We_Learned:~:text=meaningless for gaming.-,What We Learned,-Why don't games

Why don't games benefit all that much from faster SSDs? Well, it seems clear that raw storage performance is not the main bottleneck for loading today's games. Pretty much all games released up to this point are designed to be run off hard drives, which are very slow; after all, the previous generation of consoles with the PS4 and Xbox One both used slow mechanical drives to store games.


Today's game engines simply aren't built to make full use of fast storage, and so far there's been little incentive to optimize for PCIe SSDs. Instead, the main limitation seems to be things like how quickly the CPU can decompress assets, and how quickly it can process a level before it's ready for action, rather than how fast it can read data off storage."

Alternatively, why use old DDR4 when you can buy DDR5 that will still be good for the next upgrade? It's usually not advisable to buy a new motherboard for an old DDR version.

I agree, but it's not about buying new DDR4, it's about being able to use the DDR4 that you already own. I mean, let's be honest here, what percentage of gamers looking to get this do you think are still using DDR3? I think that it's statistically zero.

Many people don't see it that way, but looking at the retail market and retail sales, AMD is the premium brand and Intel is trying to hang on. In the OEM and server markets AMD still has a way to go, because buyers there are more stupid. However, looking just at products and forgetting brand, AMD leads in every category.

Most people still don't see it that way. Intel has been the #1 name in x86 CPUs since the creation of the 8086 back in 1978. You don't really think that AMD managed to change 45 years of market perception in only 6 years, do you? There are probably just as many people who have never had an AMD CPU as there are who have never had a Radeon GPU. These aren't cheap devices and when people have to spend a lot of money, getting a familiar brand gives them peace of mind. Whether deserved or not, Intel hasn't relinquished that status. Also, don't forget how many losers are out there who think that LoserBenchmark is a reliable source of information...

AMD outsells Intel in the retail market, and that's basically all that matters when it comes to premium products. The OEM market is full of bulk trash, nothing premium there. The server market, well, there are no premium products, just boring boxes. So yes, where a premium brand matters, AMD leads. Looking at the whole brand, that's a different story.

To you and me, sure, I agree. To most of the world, no, Intel is still the more recognised brand. AMD's greatest success has only happened over the past six years and by no means did it relegate Intel to almost nothing like AMD was during the FX years. Sure, AMD has been out-selling Intel but only in the last three years or so. Don't be fooled into thinking that AMD is even close to market parity with Intel yet because it isn't, not on the consumer side or the server side:

"In the fourth quarter of 2022, 62.8 percent of x86 computer processor or CPU tests recorded were from Intel processors, up from the lower percentage share seen in previous quarters of 2021, while 35.2 percent were AMD processors. When looking solely at laptop CPUs, Intel is the clear winner, accounting for 76.7 percent of laptop CPU test benchmark results in the fourth quarter of 2022."

Yes, even then there was innovation on that side. Nowadays, pretty much none.

That's going REALLY far back, like the early AGP era.

Intel doesn't decide motherboard prices. Also, AMD does not force manufacturers to use all the expensive features. This is basically what I'm talking about: AMD is a premium brand on motherboards, and makers don't want to make cheap trash like on the Intel side.

Oh I agree, I think that AMD made the right move there. The problem was that Intel's reaction of lowering their motherboard prices was a very effective counter to that.

Good sales for a product that just launched. Let's wait for the next batch and then we'll see better.

Sure, but not so much that it rules out the majority of those sales having gone to the relatively few fanboys, very wealthy gamers and scalpers (scalpers are still a thing).

Show me decent sales numbers after they've been on the market for a month and then I'll say "Yeah, sales have been good".

Probably not, since that SRAM-optimized 7nm node is fairly new. New nodes always have lower capacity than old ones, and there are other customers besides AMD. New node -> not so much capacity.

That's not true at all. TSMC could easily produce more than AMD could sell of any cache type. I don't know where you get that idea. There's nothing there that mentions a shortage; you are still only assuming.

If you look at almost any review, the majority of it is assumptions. That's because if a fact is only something the manufacturer clearly states, there are very few facts.

I'm sorry but that is not a fact as I see it. That is still you making assumptions.

Example: I claim that AMD designed Zen4 mainly for server use, not for desktops. Looking at the known facts, that is very obvious. But if a fact has to be something AMD clearly states (they naturally don't), then it's not a fact.

Most parts go to mid and low-end products because sales numbers are bigger. But there is a problem: with desktop Ryzen, the high end and the mid range use the same parts. It makes absolutely no sense to limit high-end availability when there is no difference between high-end and low-end parts. We saw that when quad-core Ryzens were in short supply. Why put the same parts in low-end Ryzens when they could go into high and mid-range Ryzens?

That's not a fact either, because they always make the most parts in the low and mid-ranges, since the high-end parts don't sell nearly as well. This is not just true in the semiconductor business, this is true about everything.

I'm starting to wonder if you understand what a fact is.

Your logic might apply if high-end and mid-range parts required different product lines, as they usually do with Intel. But that does not apply to AMD.

Perhaps none of them has a direct answer from AMD that there is a shortage? And why would AMD tell that to anyone? All of those points agree with me that there is a shortage of V-Cache chips, because it's the only logical explanation that actually makes sense. If it's logical and makes sense, it almost always is.

No, it doesn't add up, not even close. I still don't know how you managed to reach that conclusion. If there was a shortage, I can guarantee you that someone would've said something. Do you really think that you know more about what's going on than actual CPU journalists like Steve Burke, Steve Walton, Hilbert Hagedoorn, Paul Alcorn or W1zzard? All you did was read some short article that Steve Walton wrote, an article that did not mention any kind of shortage or even postulate that one existed, made one very presumptuous leap and decided to call it fact. That's not how facts work.

TSMC's 7nm process started mass production over 6 years ago. Because the process is 7nm, while TSMC is almost ready with 3nm, there will NOT be a lot of it. No, TSMC won't invest much in a 6+ year-old process. TSMC has excess 7nm capacity, and they decided to make a different process to get more use out of it.

The article does talk about a special node but it doesn't say anything about a shortage, so you've still failed to offer evidence. If it's being made on a TSMC process dedicated to SRAM, that means there will be LOTS of it. Sure, AMD is using a lot of it (probably more than what is necessary) but there's nothing in that article that talks about limited supply, anywhere. In fact, if TSMC has a production line dedicated to it, it tells me the exact opposite. So, you're still making assumptions that I think are incorrect.

Yeah, and again, that is a new version of 7nm based on the old 7nm node AMD is using. Again, TSMC won't put much effort into an old node.

AMD uses 7nm not only because RAM doesn't really scale well beyond that but because it's far less expensive. This wouldn't be the case if there was an issue with scarcity because as we've been made painfully aware over the last couple of years, scarcity means that prices skyrocket. I really doubt that there could possibly be a scarcity issue at 7nm because, at the very least, both TSMC and Samsung are capable of producing it in large quantity. The 7nm process was originally produced seven years ago by TSMC which means that it is beyond mature at this point. Any major fab in the world could produce 7nm chips including TSMC, Samsung and GlobalFoundries as stated on its Wikipedia page:

7nm Process - Wikipedia

GlobalFoundries abandoned 7nm in 2018; that's why AMD is only using TSMC.

You mean AMD would manufacture just the V-Cache chips at other foundries? It's very expensive to start manufacturing on a different process. Very expensive. Also, Samsung's "7nm" is pretty far from TSMC's 7nm, which makes it very hard or impossible to fit V-Cache chips in place. Also, AMD decided to stick with TSMC (and not use Samsung) after leaving GF 7nm, even though they knew there would be a shortage of capacity.

If there was a supply issue, all AMD would have to do is contact one of the other two to shore up supply and they would do it too because they're not going to leave profit on the table if they don't have to. As you pointed out yourself, a shortage could negatively affect EPYC (and/or Radeon Instinct if it's used there as well). This is something that AMD would never be able to accept if it didn't have to, and it doesn't have to.

Of course it's greedy. But that's exactly what I'm saying here. AMD wants to maximize profits, and because there is a shortage of V-Cache chips, those go to high-end products first. There is no R7 V-Cache version yet, so no wonder the R5 version is not out.

At the end of the day, I'm still forced to believe that AMD's insistence on producing X3D versions of both R9 CPUs while refusing to produce an X3D version of their R5 CPU was greedy, stupid and ultimately anti-consumer. I don't say this just to rag on AMD because you know that I am far more fond of ragging on Intel and nVidia. I'm also not personally affected by what AMD does with Zen4 because I already have an R7-5800X3D and therefore have no interest in Zen4. What I see is AMD shooting themselves in the foot because AM5 isn't so good that it can't be passed up for LGA 1700.

Since LGA 1700 is a dead-end platform, there's also no need for long-term BIOS support. Not to mention that the motherboard is trash when it comes to features. As usual, it makes sense for some people but not for all.

Sure, LGA 1700 is a dead platform but you can buy an LGA 1700 motherboard that supports DDR4 for about HALF the price of the cheapest B650 board, the Gigabyte DS3H. That's EXTREMELY attractive because not only does it effectively nullify the longevity of the AM5 platform, the ability to continue using DDR4 makes it actually a better economical choice than AM5. A lot of shrewd gamers were waiting, hoping for a 6-core Zen4 X3D CPU (because why wouldn't they?) but took the Intel route immediately upon discovering that there would be no R5-7600X3D.

The RTX 4090 costs more than the AMD and Intel builds combined.

Why would they do that? Simply because they didn't think that paying $450 for an octocore CPU they didn't need on an expensive B650 motherboard and having to buy at least 16GB of DDR5 was worth it, and they were right. Just look at these two scenarios:

AM5:

CPU: R7-7800X3D - $450

Motherboard: Gigabyte B650M DS3H - $150

DDR5 16GB: Patriot Signature Line 16GB DDR5-4800 - $59

Total: $659

LGA 1700:

CPU: i5-13600 - $250

Motherboard: ASRock H610M-HDV - $80

DDR5 16GB kit: $0 (Not Required)

Total: $330

Estimated gaming performance difference:

215 ÷ 185 = 1.1622

With the vast majority of people not caring one way or the other as to which CPU maker they have in their rig, who is going to want to pay literally double for a gaming performance increase of less than 20%?

And yes, AMD offers much better features and those cost money.

Like I said previously, AMD wants to build a premium brand and has already achieved that in the retail market. A premium brand cannot sell cheap trash. Those filthy peasants who want a cheap platform with poor features may choose Intel. Wealthy noblemen choose AMD. Basically, you cannot please everyone. Those who buy AMD get quality simply because there is no pure trash available.

To be honest, not even the R5-7600X3D would be worth it (at the $350 price point that I envisioned) for gamers from a platform cost / performance standpoint but it has that hype and "cool factor" that often overrides the intelligence of gamers in the same way that nVidia's marketing does.

AMD is already not a great value for their new platform and not having an R5-7600X3D part at $350 only makes things worse.

Avro Arrow


I find it funny how Steve writes "rubbish" but says "garbage". It strikes me as VERY Aussie to do that.

m3tavision


AMD purchased ATi... you seem mad about this.

Well, it does matter when most older games run with no issue on the Nvidia drivers but either have issues or straight up won't run with the AMD driver; that is a quantifiable demerit for AMD. Why have a driver that prevents playing games or cannot execute basic functions like forcing AF or disabling vsync? Also, stuttering is far more of a thing with the AMD driver in certain games than it is with Nvidia. If you can't tell the difference between the organization of the NCP, with everything on a single screen, vs the mess from team red... then I can't help you there.

At the end of the day, people obviously DO care about older games, or there wouldn't be things like GOG. Why would someone buy a Raspberry Pi when they can be 99% confident the older game will work on their PC with the Nvidia driver? But the issue is not just older games; frame times are a serious issue for AMD vs Nvidia, though again because of subpar effort on the red side's drivers, not due to the hardware's potential.

For the amount of money these companies charge, I expect a matching level of effort on the driver side, and here AMD has sadly been a disappointment.

And also... ArmA 3 doesn't run much better than Operation Flashpoint, and it definitely doesn't have as grand a campaign as Resistance.

You are, in fact, complaining about something NOBODY cares about... as almost everyone knows, if you are a legacy gamer, many old games need a workaround to function today. You really can't be serious, or believe your own redirect. And yes, why use a Pentium III for old games when you can have all of that in pocket size...?

And for performance, you are aware that AMD has had better frame times than nVidia since they dumped GCN, right...? So it does seem you are stuck in the past, when everything you said used to be true... but no longer. And if you use both types of GPUs, then you truly know this already.


m3tavision


7900XT does not outsell 4080 or even close. I have seen B2B numbers in Europe and Nvidia dominates easily. Look at Steam HW Survey if in doubt.

9 out of 10 that are not into tech/hardware, that needs a desktop GPU, only looks at Nvidia, because they have heard terrible things about AMD, or had a bad experience earlier.

Games are not coded for RDNA at all. You are clueless. Standards are being used.

The 4090 is not a pro card; the Quadro line is. The 4090 is an enthusiast gaming card, and it's selling like hotcakes, since it's the absolute best 4K+ card available by a huge margin. It destroys the 7900XTX easily overall at 4K+.

Game devs care a lot about Nvidia's money, and Nvidia pays them to implement DLSS, RT and other stuff, because Nvidia can easily afford it and AMD can't.

EVGA was sleeping anyway. They have not released a good Nvidia GPU in years. They will probably go bankrupt in a few years, because Nvidia GPUs were like 80% of their income. They make garbage mostly. Without GPUs they will be gone or sold. Their PSUs are OEM-made and they make peanuts from them. The rest of their hardware is pretty meh. EVGA was a good brand 5-10 years ago; today it's mostly crap.

Yes, we are 100% aware that lemmings choose nVidia (due to incessant marketing).

That doesn't mean they are better; it means they have better marketing that non-tech-savvy people fall for. Anyone IN THE KNOW... who reads reviews knows that the XT is superior to the laughable 4070ti. Even you know this and can't argue...

Safety in numbers only means you are too afraid to argue price/performance logic.

And yes, RDNA is the gaming standard.... It's what all AAA games are developed for. Don't be mad that RDNA is in everything, including phones soon.

EVGA is rumored to be making a high-end gaming box using AMD's APU. Since nobody needs CUDA anymore, why deal with nVidia and all the non-gamer marketing & proprietary hardware meant for content creation...? (GTX cards use FSR... & those are the cards that need upscaling.)

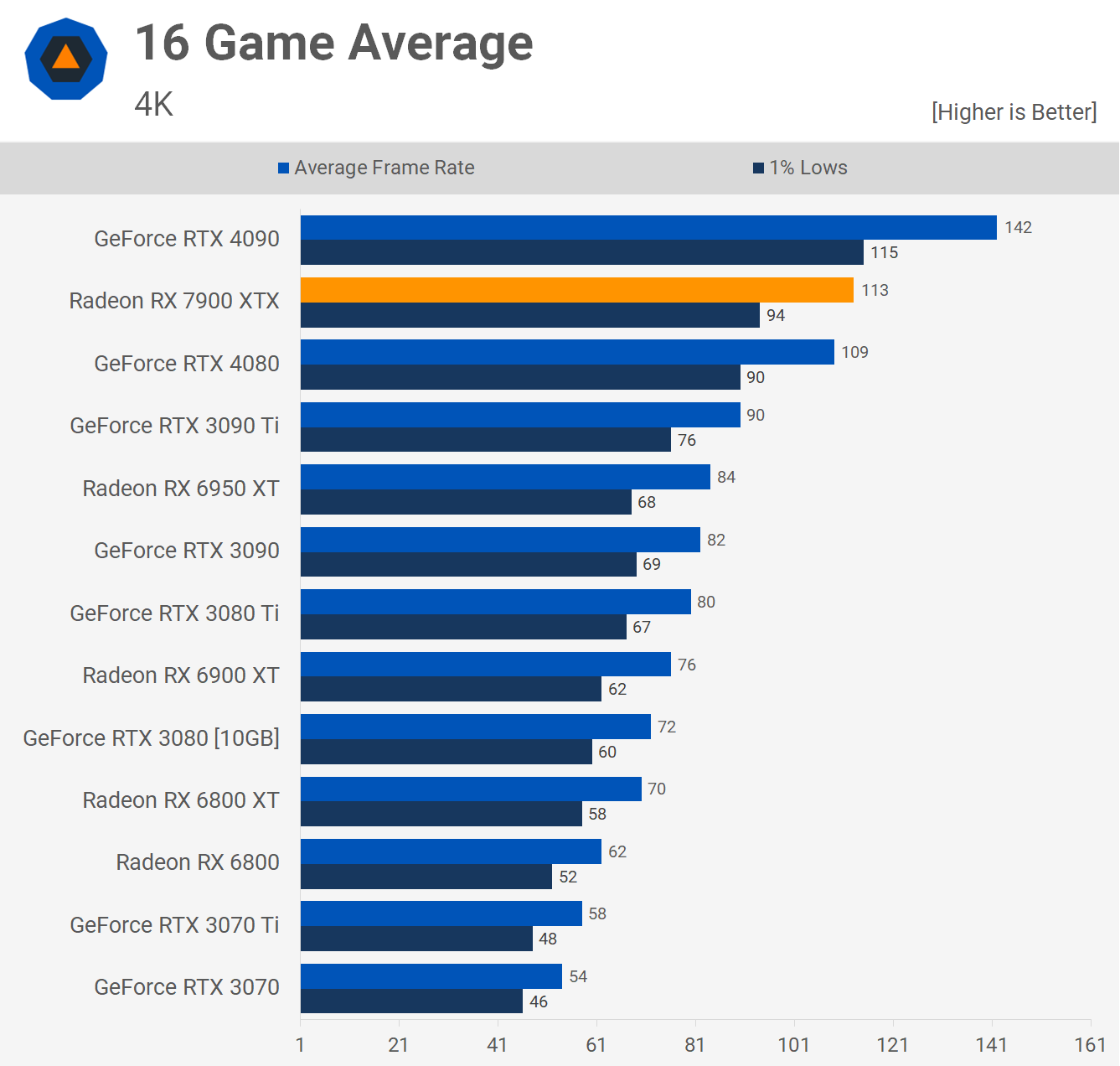

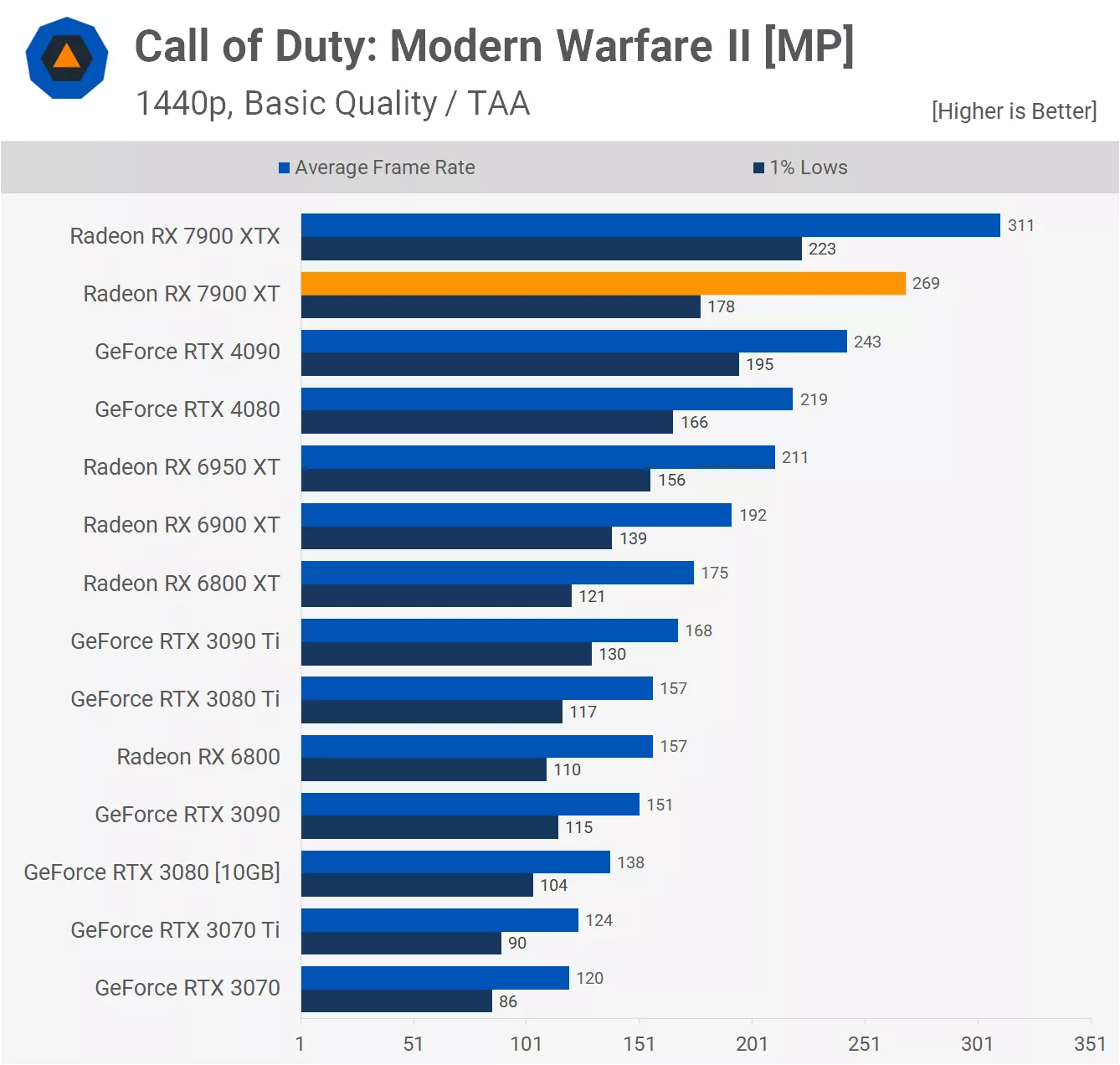

Gamers do not buy an $800+ GPU for fewer frames. The RTX 4090 ONLY beats AMD in slow frames (RT). But I have already established that, for $1,300 less, the Radeon XT actually beats the RTX 4090 in fast frames (raster) in a few games... and the XTX beats the 4090 in many games...

Remember:

RTX4090 = $2,100

XTX = $1,049

For competitive players: this game sold $1 billion in pre-sales. Nobody is buying a GPU for old games...

Cherry-picking sales numbers and benchmarks does not equal facts.

The 7900XTX is not even close to the 4090 overall. Its features are far fewer and worse. RT performance is at 3090 Ti level at best. Hence the price.

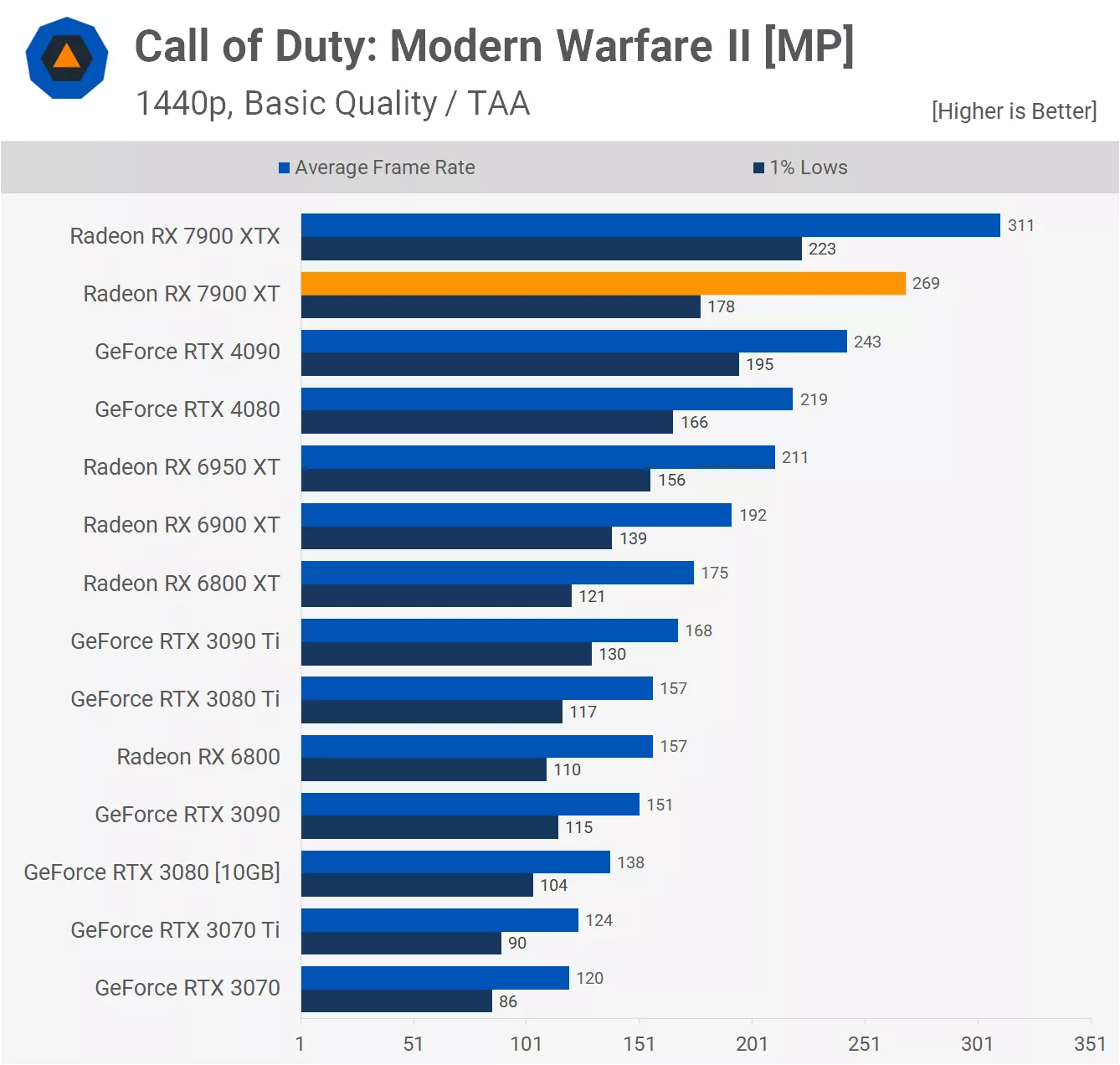

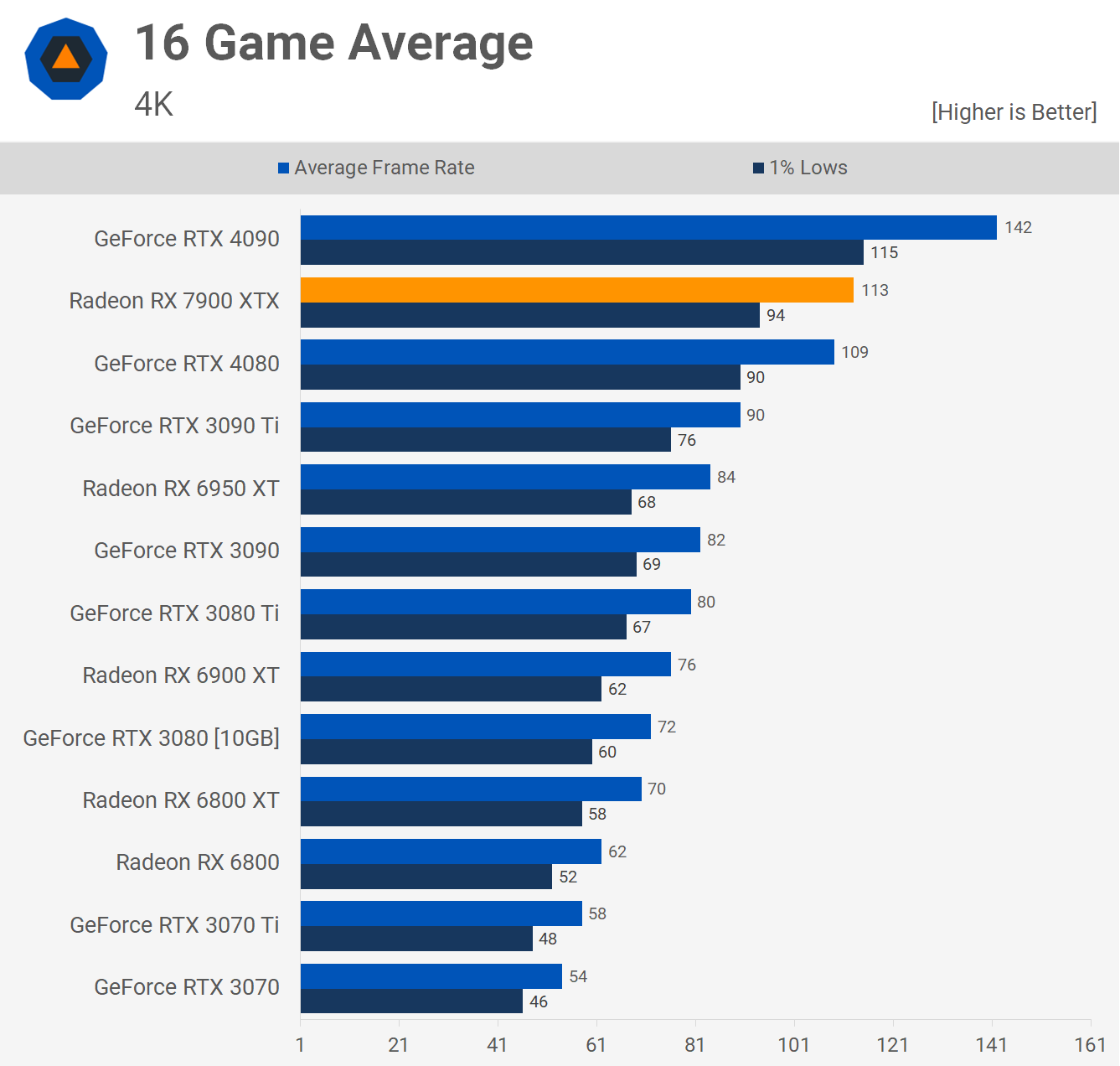

Let's look at overall performance instead. Also, let's look at 4K, which is probably what people who buy a $1,000+ GPU will use.


takaozo

Posts: 1,077 +1,641

Seeing the 2060 at 26% of a 4080/7900 on that chart, while it gets 70-80 fps in Hogwarts Legacy at 1440p, makes me wonder why we all need to upgrade (except 4K folks).

It looks to me like older cards are still holding strong, even with UE4. I will only look for an upgrade when UE5 becomes the norm and GTA VI comes along.

The 2060 is more like 50-55 fps at 1440p with medium settings in that game, and 240-360 Hz 1440p panels exist.

At ultra settings, the 2060 barely holds 30 fps at 1440p.

Also, RT can be enabled on top.

takaozo

Posts: 1,077 +1,641

I don't see much difference between medium, high and ultra settings, so medium it is.

I also disabled the general fog, yes, the one that's visible even indoors.

m3tavision

Posts: 1,733 +1,510


Those "cherry-picked" results illustrate one thing...

That the XTX does beat the over-inflated RTX4090 in several games... even the XT beats the 4090.

What is really odd is that the RTX4080 NEVER beats the RTX4090...

And again, any time ANYONE brings up RT, we can laugh in your face, because it's not remotely valid: nobody actually games using RT. They try it for a few hours, then go back to what they paid for... frames.

Overall performance...? <-- AMD's XTX wins. Unless you want to pay $1,300 more for 0~15% more frames in some games, you are stuck with a $1,200 RTX4080 that doesn't produce as many frames as the XTX...

Trying to compare a $2.3k card to a $1k card, when the $1k card beats the $2.3k card in several games, is laughable. NOBODY EXPECTS THE $1k card to EQUAL the $2.3k card... ever. But the XTX is so powerful that people like you are comparing it to the 2x more expensive 4090...


The 4080 beats the 7900XTX in some games too, so what is your point? Cherry-picking benchmarks is pointless; that is why you look at the average across many games instead. Logic 101.

Overall, a 4090 is 25% faster than a 7900XTX at 4K in pure raster; if you enable RT, it's more like 50-75% faster. That's what you pay for.
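To put "that's what you pay for" in numbers, here is a rough sketch of price per unit of 4K raster performance. It only combines figures already posted in this thread (RTX 4090 at $2,100, RX 7900 XTX at $1,049, and the 4090 taken as 25% faster in raster); the function and variable names are illustrative, and real street prices and per-game results will vary.

```python
# Rough price-per-performance sketch using figures quoted in this thread:
# RTX 4090 at $2,100, RX 7900 XTX at $1,049, and the 4090 taken as 25% faster
# in 4K raster. Relative performance is normalized so the XTX = 100.

def dollars_per_perf(price: float, relative_perf: float) -> float:
    """Return dollars paid per point of relative performance."""
    return price / relative_perf

cards = {
    "RX 7900 XTX": (1049.0, 100.0),
    "RTX 4090": (2100.0, 125.0),
}

for name, (price, perf) in cards.items():
    print(f"{name}: ${dollars_per_perf(price, perf):.2f} per performance point")

# Compare the price premium with the performance gain.
price_ratio = cards["RTX 4090"][0] / cards["RX 7900 XTX"][0]
perf_ratio = cards["RTX 4090"][1] / cards["RX 7900 XTX"][1]
print(f"Price premium: {price_ratio - 1:.0%}, performance gain: {perf_ratio - 1:.0%}")
```

On these inputs the 4090 costs roughly 100% more for about 25% more raster performance (about $16.80 vs $10.49 per performance point). RT results, which the post says widen the gap, are not modeled here.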

The 7900XTX and 4080 are pretty much even until you enable RT; then the 4080 is much faster. The 4080 also has far more and better features (NVENC, DLSS 2/3, ShadowPlay), plus better performance per watt, as the 4080 uses 50 watts less.

7900XTX should be 899 dollars tops.

7900XT should be 699 tops.

AMD is in no position to ask Nvidia prices, because they are not even close in terms of features and support. Lesser-known titles and early access games especially tend to run like pure garbage on AMD GPUs. AMD mostly optimizes for benchmarks (3DMark etc.) and popular games that get benchmarked a lot. Again, Logic 101, when you don't have the manpower to optimize for everything. Most game devs know that the majority will be using an Nvidia card; hell, most of them do exactly this. That's why all games always run well on Nvidia. AMD is hit or miss.

At this point, Nvidia could have killed AMD if they wanted. They wanted to push RT instead, though. If Nvidia had used 100% of the die for CUDA cores, AMD would have been done in the GPU market by now, or maybe they would only be able to compete in the low-end market with dirt-cheap GPUs.

AMD goes full raster perf and still loses.

AMD is always one step behind Nvidia, that is the problem. The solution is to be cheaper than Nvidia, but right now, they are not cheap enough.


60% more money isn't worth it over the XTX. But Nvidia will say it's only 33% over the 4080, so it ain't too bad. Lol, suckers are born every day. Raw fps is the #1 priority. Software gimmicks are pure marketing. Leather jacket man said it himself: it's a software company.

And again, both XT & XTX will last a long time.
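The "60% more money" and "33% over the 4080" percentages in the post above can be checked with a short sketch. It assumes US launch MSRPs (RTX 4090 $1,599, RX 7900 XTX $999, RTX 4080 $1,199), which the thread itself does not state; the dictionary and function names are illustrative.

```python
# Check the "60% more than the XTX" and "33% over the 4080" remarks.
# Assumption: US launch MSRPs, which are not given in the thread itself.
LAUNCH_MSRP = {"RTX 4090": 1599, "RX 7900 XTX": 999, "RTX 4080": 1199}

def premium(card: str, baseline: str) -> float:
    """Fractional price premium of `card` over `baseline` (0.5 means 50% more)."""
    return LAUNCH_MSRP[card] / LAUNCH_MSRP[baseline] - 1

print(f"4090 over XTX: {premium('RTX 4090', 'RX 7900 XTX'):.0%}")
print(f"4090 over 4080: {premium('RTX 4090', 'RTX 4080'):.0%}")
```

At those launch prices the two percentages in the post hold up (about 60% and 33%); at the street prices quoted elsewhere in the thread the gap is larger.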


People are easily influenced by fake YouTubers who test RT games and then say buy Ngreedia. I stay away from frauds like LTT and J2C. AMD offers price to performance. Bottom line.

Lol. Lemmings and parrots pay more for team green and get fewer frames, and it's worse if they pair it with a team blue CPU. Then they'll comment and ask how come they're not getting the frames so-and-so is getting.


People buy Nvidia for driver support/compatibility and features. Nvidia is obviously ahead in terms of features; if you think otherwise, you have no clue. AMD copies Nvidia's features all the time, and pretty much every time, the AMD feature is worse:

G-Sync > FreeSync

DLSS > FSR

ShadowPlay > ReLive

The 4080 easily beats the 7900XTX on perf/watt. The 7900XTX uses 50 watts more on average and performs similarly overall in raster; when you enable RT, the 4080 is much faster.

Also, the 4080 has DLSS, DLAA, DLDSR, NVENC, ShadowPlay and much better overall support in lesser-known titles and especially early access titles.

Nvidia cards also hold their value better than AMD's, because AMD always competes on price and lowers prices over time (just like Android phones vs iPhones).

I know a guy who bought a 6950XT at release for $1,200. He sold it for $400 recently, then went back to Nvidia with an RTX 4080. In comparison, I can easily sell my backup 3080 for $500 today.

Even AMD said 7900XTX can't compete with 4090...

AMD's OWN WORDS: "7900XTX is made to compete with 4080"

-> ON RASTER that is. When it comes to RT PERF, AMD is years behind.

I don't care much for RT, but some people love it. Who are you to dictate what others want? Nvidia sells well, AMD does not; that's reality for you.

7900XT already dropped ~150 dollars since launch.

The 4070 Ti went up slightly in price since launch because people gobbled them up. It sold like hotcakes, just like the 4090.

The 4090 is the only true 4K card right now. No other GPU is even close. TechPowerUp shows a 25% lead over the 7900XTX -OVERALL- at 4K. Same as 4080 -> 4090.

Just because you are too poor to buy Nvidia does not mean AMD is better. If AMD were actually better, they would dominate, but they don't. The end.

m3tavision

Posts: 1,733 +1,510


What support and features are you talking about...? Non-gaming ones..? Or gaming ones..?

See how easily I can unpack your argument.

Again... it seems influenced by marketing and not price/performance.

Huh???

If you already own a 30 series card, you can probably skip the 40 series entirely. If you don't, it's going to be dicier. These new GPUs cost too much; you might as well get the 4090 once you start spending these amounts. Otherwise, pray Intel Arc actually becomes viable and there is mid-range pressure again. I haven't seen mid-range value since the 16 series, which was a long time ago, and that really wasn't enough of an upgrade over the 10 series for 1060 owners, which is why that GPU stayed doggedly at the top of the charts until maybe very recently. I do not expect the 4060 to change that.

Neither of the reviewed GPUs looks to deliver standout 1440p performance at these prices. You'd want to at least hold a stable 120+ fps at max graphics settings in games that are already out, because within the next 2 years you'll have to drop settings and lose performance. It's way too much money not to be able to max out games when you buy them.

godrilla

Posts: 1,857 +1,287

Prices need to drop for both GPUs by a lot more.

There are signs that GPU prices are coming down. Going by Micro Center's current pricing, the PNY 4080 fell to $1,129.

Was $1,229.99, now $1,129.99 (save $100.00)

Get Redfall Bite Back Edition with select GeForce RTX 40 Series

https://www.microcenter.com/product...-triple-fan-16gb-gddr6x-pcie-40-graphics-card

The 6950XT fell to $599 with a CPU combo.

The ASRock 7900XTX fell to $979.

https://www.microcenter.com/product...d-triple-fan-24gb-gddr6-pcie-40-graphics-card

The current strategy is to launch super high and adjust prices according to demand. It was a matter of who blinked first. Hopefully this is a sign of more price cuts.


TechSpot is dedicated to computer enthusiasts and power users.
