"Sorry, but you misunderstood. What I'm saying is that the whole stack of GPUs needs to go down by one or two levels. Right now GPU prices are obscene. A 10-20% drop won't do jack compared to the 50-100% price increase that happened in the past few years."

There are signs that prices are coming down for GPUs. Quoting Micro Center's current pricing, the PNY 4080 fell to $1,129:

Was $1,229.99, now $1,129.99 (save $100.00)

Get Redfall Bite Back Edition with select GeForce RTX 40 Series

https://www.microcenter.com/product...-triple-fan-16gb-gddr6x-pcie-40-graphics-card

The 6950 XT fell to $599 with a CPU combo.

The ASRock 7900 XTX fell to $979.

https://www.microcenter.com/product...d-triple-fan-24gb-gddr6-pcie-40-graphics-card

The current strategy is to launch super high and adjust prices according to demand. It's a matter of who blinks first. Hopefully this is a sign of more price cuts to come.
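For scale, the one listing above with both a before and after price (the PNY 4080) works out to a cut of roughly 8.1%, below the 10-20% range the quoted reply dismisses. A minimal Python sketch of that arithmetic, using only the two Micro Center prices; the helper name `percent_drop` is hypothetical:

```python
def percent_drop(old_price: float, new_price: float) -> float:
    """Return the price reduction as a percentage of the old price."""
    return (old_price - new_price) / old_price * 100

# PNY RTX 4080 at Micro Center: $1,229.99 -> $1,129.99 (figures from the listing above)
drop = percent_drop(1229.99, 1129.99)
print(f"{drop:.1f}%")  # 8.1%
```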


Radeon RX 7900 XT vs. GeForce RTX 4070 Ti: 50 Game Benchmark

- Thread starter Steve


godrilla


I didn't misunderstand. I am just balancing my hopes and expectations with reality. Unfortunately, this is what happens in a duopoly, which Intel was supposed to fix in our favor. I agree prices should be better across the board. The only thing we can do is complain about it. I, on the other hand, along with others like Daniel Owen's YouTube channel, like to shed light on great deals for potential buyers. If potential buyers don't buy the 7900 XT at $799 and instead buy the 6950 XT at $569 (with the CPU combo and 5% discount), that puts pressure on AMD to lower prices on the 7900 XT. The same goes for the 4080: if potential buyers buy the $1,129 PNY version, it puts pressure on the other vendors to lower prices. Nvidia is the pinnacle when it comes to cronyism, and now, unfortunately, AMD is following their lead.
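The $569 figure is consistent with a 5% discount applied on top of the $599 combo price (that stacking is an assumption; the post doesn't spell out the order). A quick Python check, with a hypothetical `discounted` helper, that also shows the gap to the $799 7900 XT:

```python
def discounted(price: float, discount_pct: float) -> float:
    """Apply a percentage discount and round to cents."""
    return round(price * (1 - discount_pct / 100), 2)

# Assumption: the 5% discount stacks on the $599 CPU-combo price
rx_6950xt = discounted(599.00, 5)
rx_7900xt = 799.00
print(rx_6950xt)                          # 569.05
print(round(rx_7900xt - rx_6950xt, 2))    # 229.95
```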

"There is no point in arguing about this. Nobody makes a 1 or 2 generation upgrade unless they have money, in which case they don't care about the DDR4 thing."

I totally agree with you, and I might not want it either, but M.2 slots aren't a big deal for gamers because NVMe load times aren't much better than those of SATA SSDs.

Remember that big gaming storage test that Tim did a while back?

"What We Learned:

Why don't games benefit all that much from faster SSDs? Well, it seems clear that raw storage performance is not the main bottleneck for loading today's games. Pretty much all games released up to this point are designed to be run off hard drives, which are very slow; after all, the previous generation of consoles with the PS4 and Xbox One both used slow mechanical drives to store games.

Today's game engines simply aren't built to make full use of fast storage, and so far there's been little incentive to optimize for PCIe SSDs. Instead, the main limitation seems to be things like how quickly the CPU can decompress assets, and how quickly it can process a level before it's ready for action, rather than how fast it can read data off storage."

I agree, but it's not about buying new DDR4, it's about being able to use the DDR4 that you already own. I mean, let's be honest here, what percentage of gamers looking to get this do you think are still using DDR3? I think that it's statistically zero.

Most people still don't see it that way. Intel has been the #1 name in x86 CPUs since the creation of the 8086 back in 1978. You don't really think that AMD managed to change 45 years of market perception in only 6 years, do you? There are probably just as many people who have never had an AMD CPU as there are who have never had a Radeon GPU. These aren't cheap devices, and when people have to spend a lot of money, getting a familiar brand gives them peace of mind. Whether deserved or not, Intel hasn't relinquished that status. Also, don't forget how many losers are out there who think that LoserBenchmark is a reliable source of information...

To you and me, sure, I agree. To most of the world, no, Intel is still the more recognised brand. AMD's greatest success has only happened over the past six years, and by no means has it reduced Intel to almost nothing the way AMD was reduced during the FX years. Sure, AMD has been outselling Intel, but only in the last three years or so. Don't be fooled into thinking that AMD is even close to market parity with Intel yet, because it isn't, not on the consumer side or the server side:

"In the fourth quarter of 2022, 62.8 percent of x86 computer processor or CPU tests recorded were from Intel processors, up from the lower percentage share seen in previous quarters of 2021, while 35.2 percent were AMD processors. When looking solely at laptop CPUs, Intel is the clear winner, accounting for 76.7 percent of laptop CPU test benchmark results in the fourth quarter of 2022."

That's going REALLY far back, like early AGP-era.

Oh I agree, I think that AMD made the right move there. The problem was that Intel's reaction of lowering their motherboard prices was a very effective counter to that.

Sure, but not so much that it's impossible for the majority of those sales to have gone to the relatively few fanboys, very wealthy gamers and scalpers (scalpers are still a thing).

Show me decent sales numbers after they've been on the market for a month and then I'll say "Yeah, sales have been good".

That's not true at all. TSMC could easily produce more cache of any type than AMD could sell. I don't know where you get that idea. There's nothing there that mentions a shortage; you are still only assuming.

I'm sorry but that is not a fact as I see it. That is still you making assumptions.

Absolutely. They're a business and they have to focus more on the more profitable side of the business and that's commercial. I couldn't agree more.

That's not a fact either, because they always make the most parts in the low and mid-range, since the high-end parts don't sell nearly as well. This isn't just true of the semiconductor business; it's true of everything.

I'm starting to wonder if you understand what a fact is.

No, it doesn't add up, not even close. I still don't know how you managed to reach that conclusion. If there was a shortage, I can guarantee you that someone would've said something. Do you really think that you know more about what's going on than actual CPU journalists like Steve Burke, Steve Walton, Hilbert Hagedoorn, Paul Alcorn or W1zzard? All you did was read a short article that Steve Walton wrote, one that did not mention any kind of shortage or even postulate that one existed, make one very presumptuous leap and decide to call it fact. That's not how facts work.

The article does talk about a special node but it doesn't say anything about a shortage, so you've still failed to offer evidence. If it's being made on a TSMC process dedicated to SRAM, that means there will be LOTS of it. Sure, AMD is using a lot of it (probably more than what is necessary) but there's nothing in that article that talks about limited supply, anywhere. In fact, if TSMC has a production line dedicated to it, it tells me the exact opposite. So, you're still making assumptions that I think are incorrect.

AMD uses 7nm not only because SRAM doesn't really scale well beyond that but also because it's far less expensive. This wouldn't be the case if there were an issue with scarcity because, as we've been made painfully aware over the last couple of years, scarcity means that prices skyrocket. I really doubt that there could possibly be a scarcity issue at 7nm because, at the very least, both TSMC and Samsung are capable of producing it in large quantities. The 7nm process was originally produced seven years ago by TSMC, which means that it is beyond mature at this point. Any major fab in the world could produce 7nm chips, including TSMC, Samsung and GlobalFoundries, as stated on its Wikipedia page:

7nm Process - Wikipedia

If there was a supply issue, all AMD would have to do is contact one of the other two to shore up supply and they would do it too because they're not going to leave profit on the table if they don't have to. As you pointed out yourself, a shortage could negatively affect EPYC (and/or Radeon Instinct if it's used there as well). This is something that AMD would never be able to accept if it didn't have to, and it doesn't have to.

At the end of the day, I'm still forced to believe that AMD's insistence on producing X3D versions of both R9 CPUs while refusing to produce an X3D version of their R5 CPU was greedy, stupid and ultimately anti-consumer. I don't say this just to rag on AMD because you know that I am far more fond of ragging on Intel and nVidia. I'm also not personally affected by what AMD does with Zen4 because I already have an R7-5800X3D and therefore have no interest in Zen4. What I see is AMD shooting themselves in the foot because AM5 isn't so good that it can't be passed up for LGA 1700.

Sure, LGA 1700 is a dead platform, but you can buy an LGA 1700 motherboard that supports DDR4 for about HALF the price of the cheapest B650 board, the Gigabyte B650M DS3H. That's EXTREMELY attractive: not only does it effectively nullify the longevity advantage of the AM5 platform, but the ability to keep using DDR4 actually makes it the more economical choice. A lot of shrewd gamers were waiting, hoping for a 6-core Zen4 X3D CPU (because why wouldn't they?), but took the Intel route immediately upon discovering that there would be no R5-7600X3D.

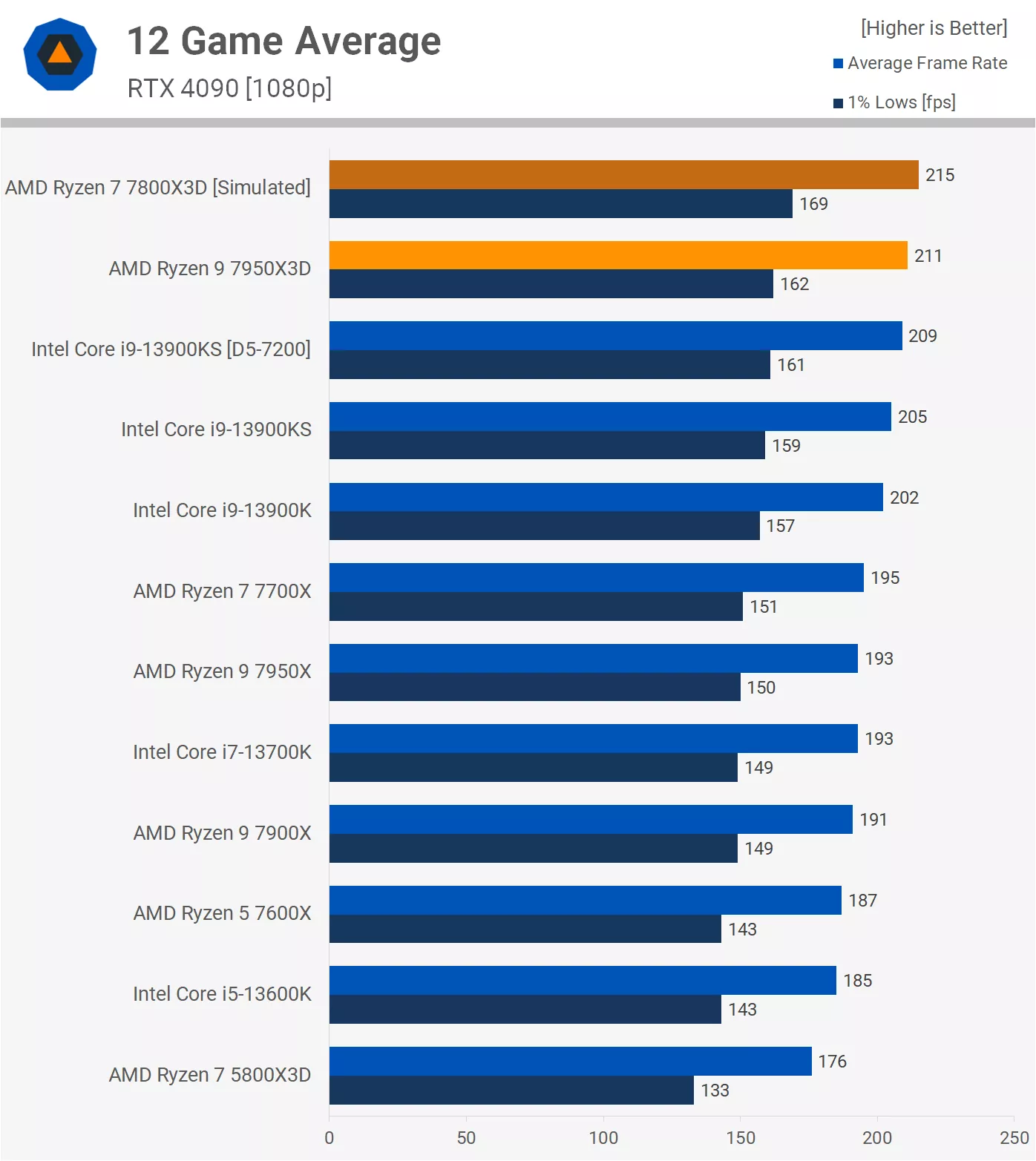

Why would they do that? Simply because they didn't think that paying $450 for an octocore CPU they didn't need, on an expensive B650 motherboard, plus having to buy at least 16GB of DDR5, was worth it, and they were right. Just look at these two scenarios:

AM5:

CPU: R7-7800X3D - $450

Motherboard: Gigabyte B650M DS3H - $150

DDR5 16GB: Patriot Signature Line 16GB DDR5-4800 - $59

Total: $659

LGA 1700:

CPU: i5-13600 - $250

Motherboard: ASRock H610M-HDV - $80

Memory: $0 (reuses existing DDR4; no DDR5 kit required)

Total: $330
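The two totals can be reproduced by summing the listed parts. A small Python sketch using only the prices quoted above; the memory line for LGA 1700 is $0 because the post assumes existing DDR4 is reused:

```python
# Part prices as listed in the two build scenarios above
am5 = {"R7-7800X3D": 450, "Gigabyte B650M DS3H": 150, "Patriot Signature DDR5-4800 16GB": 59}
lga1700 = {"i5-13600": 250, "ASRock H610M-HDV": 80, "Memory (reused DDR4)": 0}

am5_total = sum(am5.values())
lga_total = sum(lga1700.values())
print(am5_total, lga_total)  # 659 330
```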

Estimated gaming performance difference:

215 ÷ 185 = 1.1622

With the vast majority of people not caring one way or the other as to which CPU maker they have in their rig, who is going to want to pay literally double for a gaming performance increase of less than 20%?
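Putting the performance ratio next to the cost ratio makes the "double the price for under 20%" point concrete. A minimal Python sketch, assuming 215 and 185 are the chart's performance scores for the two builds (the post doesn't state the units) and $659 / $330 are the platform totals; the variable names are illustrative:

```python
am5_score, lga_score = 215, 185   # performance figures used in the post
am5_cost, lga_cost = 659, 330     # platform totals from the two builds

perf_gain = am5_score / lga_score   # ~1.162, i.e. about 16% faster
cost_ratio = am5_cost / lga_cost    # ~1.997, i.e. about double the price
# Performance per dollar of the LGA 1700 build relative to the AM5 build
value_ratio = (lga_score / lga_cost) / (am5_score / am5_cost)
print(f"{perf_gain:.3f} {cost_ratio:.3f} {value_ratio:.2f}")  # 1.162 1.997 1.72
```

On these numbers, the LGA 1700 build delivers roughly 1.7 times the performance per dollar.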

To be honest, not even the R5-7600X3D would be worth it (at the $350 price point that I envisioned) for gamers from a platform cost / performance standpoint but it has that hype and "cool factor" that often overrides the intelligence of gamers in the same way that nVidia's marketing does.

AMD is already not a great value for their new platform and not having an R5-7600X3D part at $350 only makes things worse.

These CPU upgrades are targeted at people who need an upgrade. That's the whole point of these benchmarks. DDR4 was great for 12th gen when DDR5 was expensive and the platform was just launching. Only OEMs will still make PCs with 13th gen and DDR4 now.

FYI, your own config with the 13600 is way down the performance chart. Ignore the image you linked with the 13600K. With a good high-end 3600MHz CL14 DDR4 kit, the i5-13600 will be at least 10% slower than the 13600K with DDR5 (if not 15%). And let's be honest, the majority will have 3200MHz CL16 in their PCs (it's the most common config by far), so you can cut a few more percent there.

Let's do some math, since you seem to like it:

185 - 185 * 10% = 166.5

215 ÷ 166.5 = 1.291

Around 29% is pretty big. Right? And I was being conservative.
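The same adjustment can be run for both the 10% and the "if not 15%" slowdown mentioned above. A short Python sketch; `adjusted_gap` is a hypothetical helper and 215 / 185 are the scores used in the thread:

```python
def adjusted_gap(am5_score: float, intel_score: float, slowdown_pct: float) -> float:
    """Ratio of the AM5 score to the Intel score after applying a percentage slowdown."""
    slowed = intel_score * (1 - slowdown_pct / 100)
    return am5_score / slowed

for pct in (10, 15):
    print(pct, round(adjusted_gap(215, 185, pct), 3))
# 10 1.291
# 15 1.367
```

At a 15% slowdown the gap grows to roughly 37%.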

TL;DR: you made a huge post that says nothing, and you were so smug about it too.

Great, extensive breakdown relative to my post. The 13500/13400 are DOA. Neither CPU makes much sense when each is just kind of middle-of-the-road. How wrong and misleading that guy is. His wall of text is all fluff. The 7950X3D is sold out and now scalped for $1,000+, and the 7900X3D is available but at a slight markup. So they're doing pretty well, with even better coming soon.

There is no point in arguing about this. Nobody makes a 1 or 2 generation upgrade unless they have money, in which case they don't care about the DDR4 thing.



TechSpot is dedicated to computer enthusiasts and power users.
