Why it matters: While many modern vehicles contain incredibly advanced technology, it’s not infallible. The MobilEye EyeQ3 camera system installed on certain Tesla models, for example, can be tricked into accelerating the car up to 50 mph over the speed limit using a simple piece of tape.

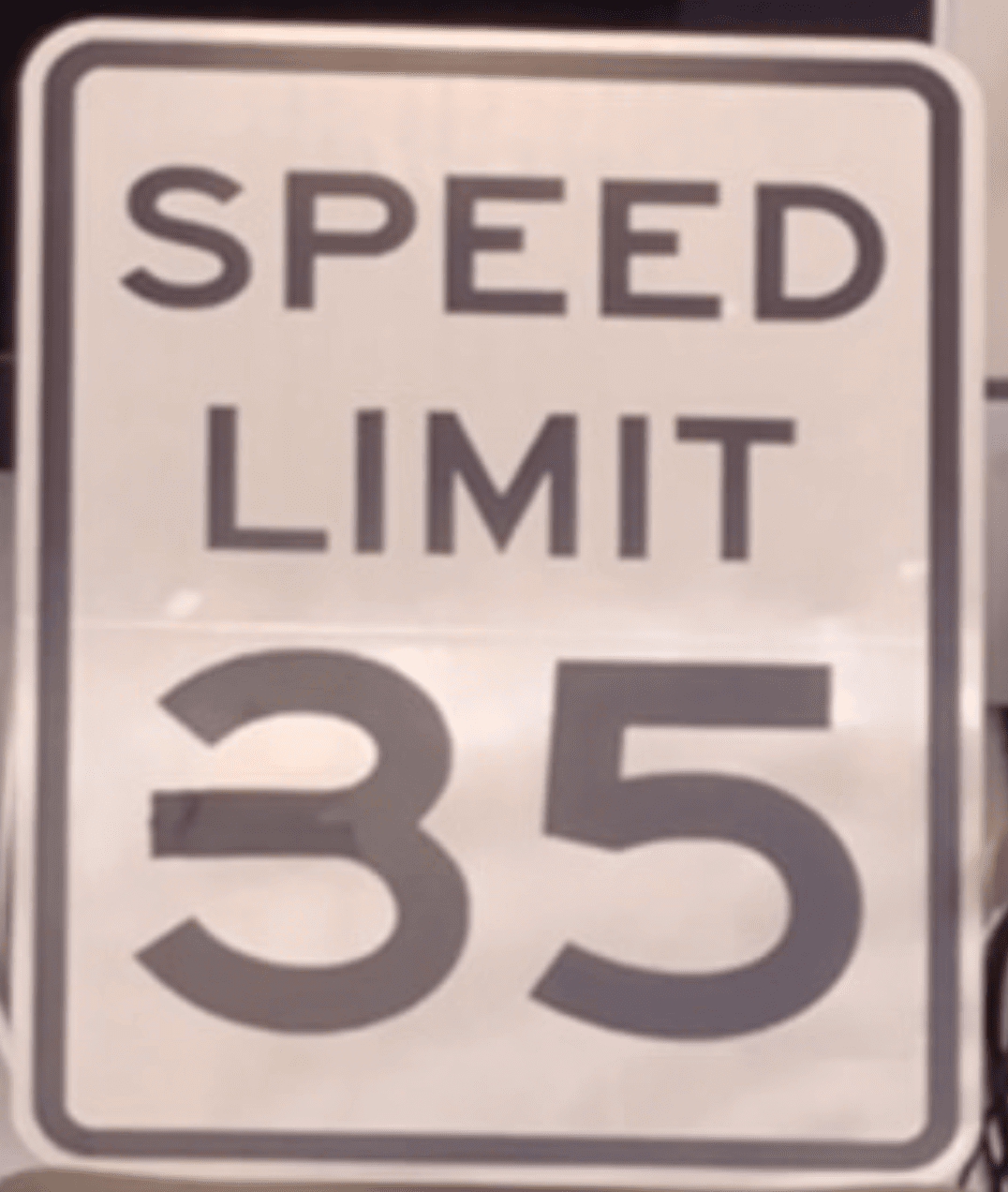

McAfee researchers Steve Povolny and Shivangee Trivedi fooled the system by placing a sliver of black tape over part of a 35 mph sign to extend the middle line of the ‘3.’ As a result, the cameras in Tesla’s 2016 Model S and Model X cars read the sign as 85 mph, causing the cruise control to accelerate toward a speed 50 mph over the 35 mph limit.

The MobilEye cameras can read speed limit signs and adjust the speed of the autonomous driving system accordingly. McAfee disclosed the research to Tesla and MobilEye last year. The automaker said it would not be fixing hardware problems on that generation of vehicles, while MobilEye told MIT Tech Review that a human could also have misread the sign and that the camera hadn't been designed specifically for fully autonomous driving.

A 3 or an 8? Some car cameras can't tell the difference

Only early Tesla models equipped with the Tesla hardware pack 1 and MobilEye version EyeQ3 were fooled by the trick. Newer Tesla vehicles use proprietary cameras, and MobilEye’s more recent version of the camera system was not susceptible.

“The newest models of Tesla vehicles do not implement MobilEye technology any longer and do not currently appear to support traffic sign recognition at all,” said Povolny.

The researchers said the number of 2016 Teslas on the roads meant their discovery was still a concern. “The vulnerable version of the camera continues to account for a sizeable installation base among Tesla vehicles,” added Povolny. “We are not trying to spread fear and say that if you drive this car, it will accelerate through a barrier, or to sensationalize it. The reason we are doing this research is we're really trying to raise awareness for both consumers and vendors of the types of flaws that are possible.”

Tesla’s Autopilot feature was found to have been active during several fatal crashes, prompting the company to reiterate that, despite the somewhat misleading name, Autopilot is not meant to make the car fully autonomous and that drivers must keep their hands on the wheel at all times.

Main image credit: Angelus_Svetlana via Shutterstock

https://www.techspot.com/news/84076-researchers-show-how-trick-tesla-speeding-using-piece.html