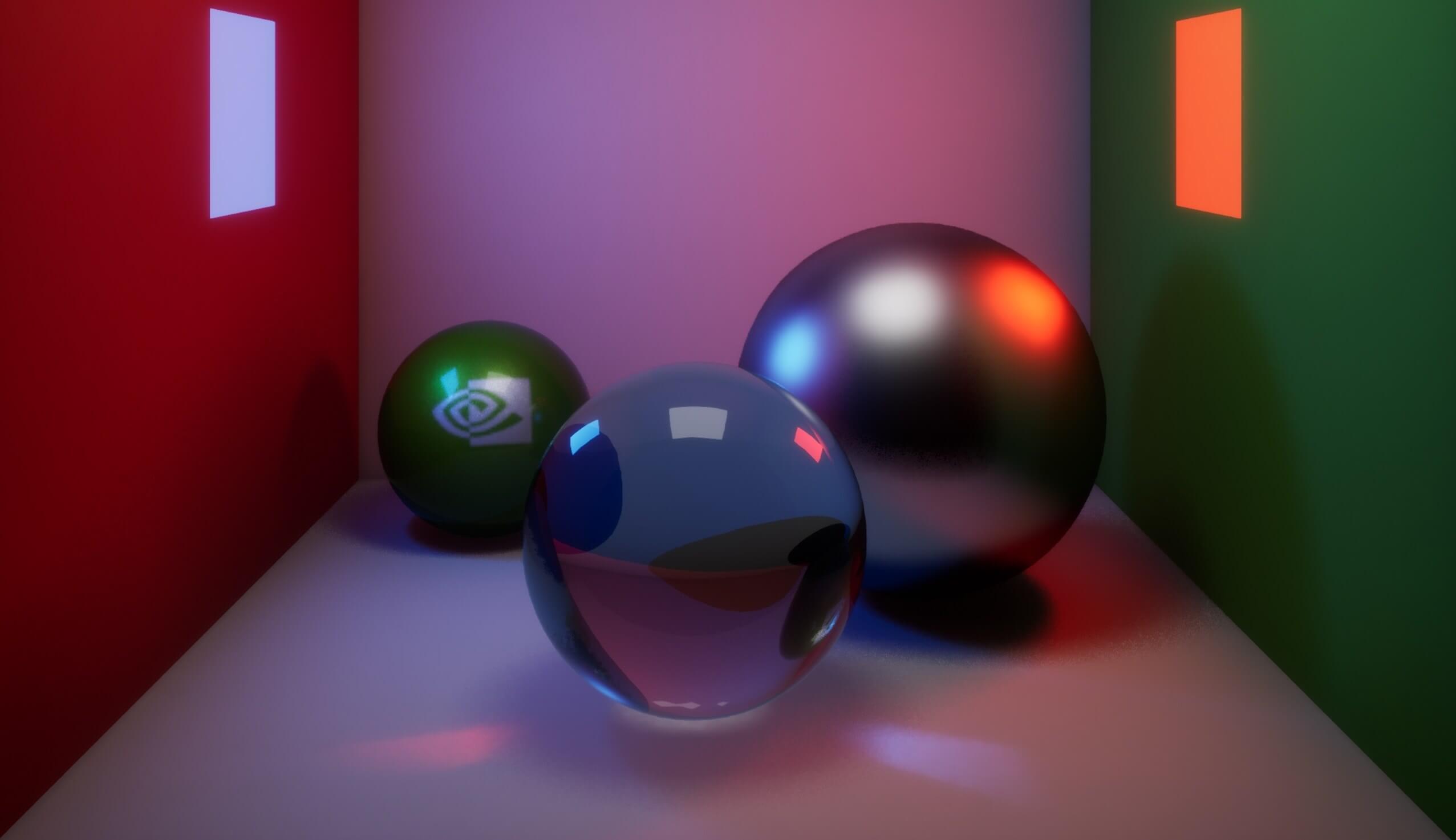

In brief: A graphics and game engine developer made a simple ray tracer work in Notepad on Windows by manipulating the app's memory space to change the displayed text and render a simple 3D scene at 30 frames per second.

Ray tracing is a hot topic in computer graphics right now, especially with all the hype around Nvidia's Ampere architecture, which debuted in a powerful enterprise GPU and should soon arrive in the consumer GeForce RTX 3000 series.

Everyone is waiting for the newest graphics hardware because ray tracing is so taxing that very few of us can experience it while keeping a smooth frame rate of 60fps or more. That's before even considering that not many games support it yet, even if you have the hardware to handle it.

However, there's one place where ray tracing works that might surprise some: the Notepad app on Windows. Kyle Halladay, a graphics and game engine programmer by trade and the author of a book on practical shader development, managed to get a rudimentary ray tracing demo running in Notepad at 30 frames per second.
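For readers curious what "ray tracing into text" means at its very simplest, here is a minimal Python sketch. It is not Halladay's code: it fires one ray per character cell at a single sphere, shades each hit with a basic Lambert term, and maps the brightness onto a ramp of ASCII characters. The scene, camera position, light direction, character ramp and the `render_sphere` helper are all illustrative assumptions.

```python
import math

# Characters ordered from darkest to brightest lit surface.
RAMP = ".,:;ox%#@"


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def hit_sphere(origin, direction, radius):
    """Return the nearest positive hit distance on a sphere at the origin, or None.

    Solves |origin + t*direction|^2 = radius^2 for t, assuming `direction` is unit length.
    """
    b = dot(origin, direction)
    c = dot(origin, origin) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None


def render_sphere(width=40, height=20, radius=1.0):
    """Ray-trace one sphere and return the image as a list of text rows."""
    cam = (0.0, 0.0, -3.0)                # camera on the -z axis, looking toward +z
    light = normalize((-1.0, 1.0, -1.0))  # direction toward the light: upper left, camera side
    aspect = (width / height) * 0.5       # text cells are roughly twice as tall as wide
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            # Map the cell center to screen space in [-1, 1], corrected for cell shape.
            x = (2 * (i + 0.5) / width - 1) * aspect
            y = 1 - 2 * (j + 0.5) / height
            d = normalize((x, y, 1.0))
            t = hit_sphere(cam, d, radius)
            if t is None:
                row.append(" ")  # ray missed the sphere: background
                continue
            p = tuple(o + t * dc for o, dc in zip(cam, d))
            n = normalize(p)  # surface normal of a sphere centered at the origin
            brightness = max(0.0, dot(n, light))  # Lambert diffuse term in [0, 1]
            row.append(RAMP[int(brightness * (len(RAMP) - 1))])
        rows.append("".join(row))
    return rows


if __name__ == "__main__":
    print("\n".join(render_sphere()))
```

Running the script prints a shaded ball in the middle of a 40-by-20 character grid. A Notepad renderer would, in principle, push rows like these into the editor's text each frame instead of printing them.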

Halladay's project is by no means meant to be a serious ray tracer. Instead, it is a great showcase of what can be achieved by combining small hacks; in this case, the scene is rendered through techniques like DLL injection and memory scanning. He also made a Snake game that can be played inside Notepad, which for some of you might bring back memories of text-mode Quake II and Doom.
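The memory-scanning idea can be illustrated in the same hedged spirit. The sketch below assumes Notepad keeps its text as UTF-16LE (the wide-character encoding Windows uses natively) and searches a plain byte buffer that stands in for process memory. A real tool would walk another process's address space with Win32 calls such as `VirtualQueryEx` and `ReadProcessMemory` and write back with `WriteProcessMemory`, or read memory directly from code injected into the process. The helpers `find_utf16` and `overwrite_utf16` are hypothetical, and this is not a reconstruction of Halladay's implementation.

```python
from typing import List


def find_utf16(buffer: bytes, text: str) -> List[int]:
    """Return every offset where `text`, encoded as UTF-16LE, appears in `buffer`."""
    needle = text.encode("utf-16-le")
    offsets = []
    pos = buffer.find(needle)
    while pos != -1:
        offsets.append(pos)
        pos = buffer.find(needle, pos + 1)
    return offsets


def overwrite_utf16(buffer: bytearray, offset: int, text: str) -> None:
    """Overwrite bytes at `offset` in place with `text` as UTF-16LE (no resizing)."""
    data = text.encode("utf-16-le")
    if offset + len(data) > len(buffer):
        raise ValueError("write would run past the end of the buffer")
    buffer[offset:offset + len(data)] = data


# Simulated memory region (an assumption): unrelated bytes, then text a user typed.
memory = bytearray(b"\x00" * 8 + "hello notepad".encode("utf-16-le") + b"\xff" * 4)
hits = find_utf16(bytes(memory), "notepad")
print(hits)  # [20]
overwrite_utf16(memory, hits[0], "@@@@@@@")
print(memory[8:34].decode("utf-16-le"))  # hello @@@@@@@
```

Searching for a string that was just typed into the editor is one plausible way to locate the text buffer; once its address is known, overwriting it every frame is what would turn a plain text box into a crude display.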

For those of you who feel inclined to dive into the nitty-gritty technical details of how he achieved this, Halladay has described them in his own write-up.

https://www.techspot.com/news/85313-someone-made-rudimentary-ray-tracing-demo-notepad-30fps.html