Editor's take: If you want to become a serious tech industry watcher or a hardcore tech enthusiast, then you need to start closely watching what's happening in the semiconductor industry. Not only are chips at the literal heart of all our tech devices, but they also power the software and experiences we've all become so reliant on. Most important of all, however, they are the leading-edge indicator of where important technology trends are headed, because chip designs, and the technologies that go into them, must be completed years ahead of products that use them and the software needed to leverage them.

With the above thought in mind, let me explain why a seemingly modest announcement about a new industry consortium and semiconductor industry standard, called Universal Chiplet Interconnect Express (or UCIe), is so incredibly important.

First, a bit more context. Over the last few years, there's been a great deal of debate and discussion about the ongoing viability of Moore's Law and the potential stalling of chip industry advancements. Remember that Intel co-founder Gordon Moore famously predicted more than 50 years ago that the number of transistors on a chip would double at a regular cadence, commonly cited as every 18-24 months, and his prognostication has proven to be remarkably prescient. In fact, many have argued that the incredible advances Silicon Valley and the tech industry at large have made over the last half century have essentially been a "fulfillment" of that law.

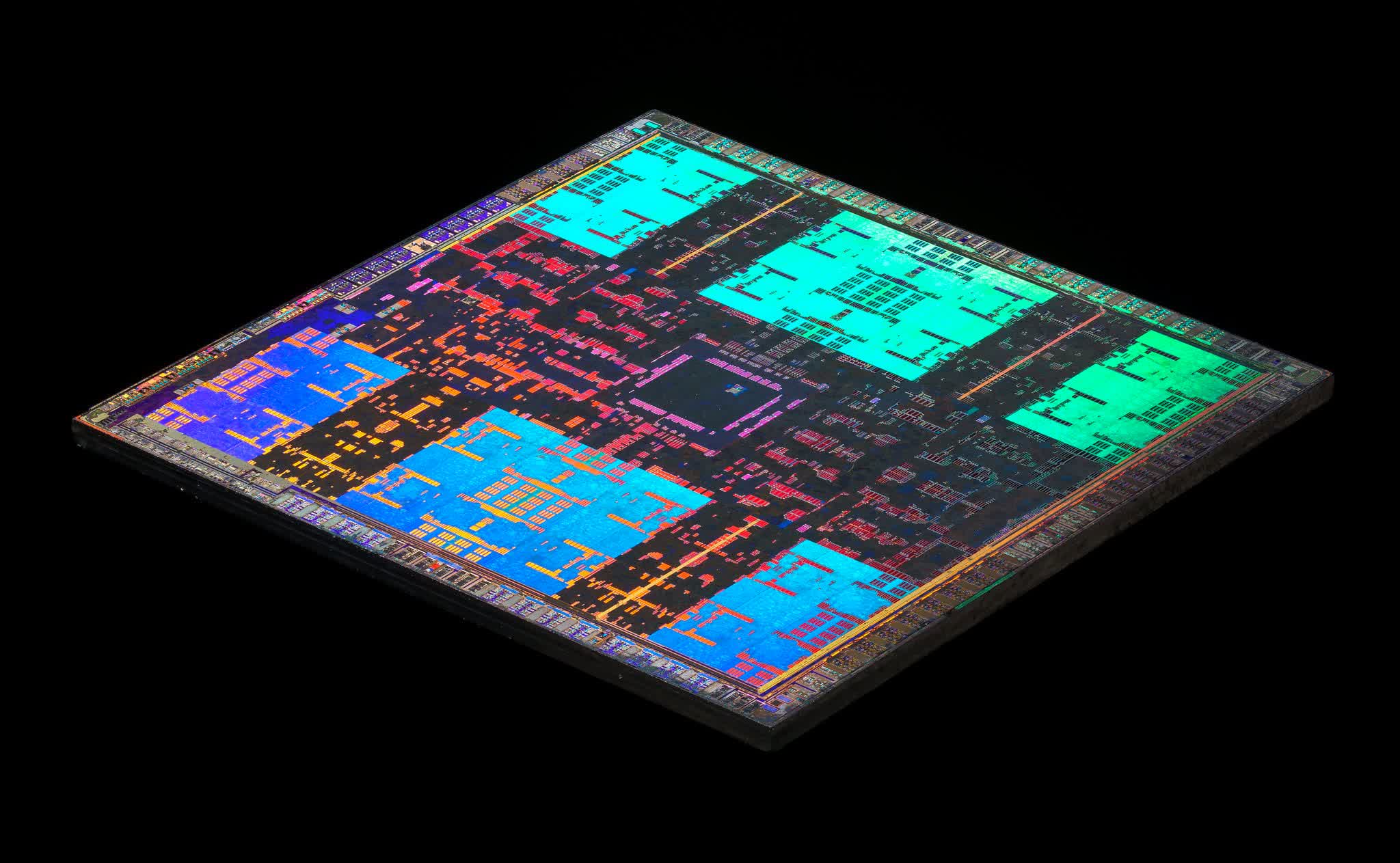

As the chipmaking process has advanced, however, the industry has started to face some potential physical limitations that seem very challenging to overcome. Individual transistors have become so small that they're approaching the size of individual atoms -- and you can't get any smaller than that. As a result, traditional efforts to improve performance by shrinking transistors and fitting more and more of them onto a single die are coming to an end. However, chip companies recognized these potential challenges years ago and started focusing on other ideas and chip design concepts to keep performance advancing at a Moore's Law-like rate.

Chief among these are ideas around breaking up large monolithic chips into smaller components, or chiplets, and combining these in clever ways. This has led to a number of important advancements in chip architectures, chip packaging, and the interconnections between a number of components.

Just over 10 years ago, for example, Arm introduced the idea of big.LITTLE, which consisted of multiple CPU cores of different sizes connected together to get high-quality performance but at significantly reduced power levels. Since then, we've seen virtually every chip company leverage the concept with Intel's new P and E cores in 12th-gen CPUs being the most recent example.

The rise of multi-part SoCs, where multiple different elements, such as CPUs, GPUs, ISPs (image signal processors), modems, etc., are all combined onto a single chip -- such as what Qualcomm does with its popular Snapdragon line -- is another development from the disaggregation of large, single-die chips. The connections between these chiplets have also seen important advances.

When AMD first introduced Ryzen CPUs back in 2017, for example, one of the unique characteristics of the design was the use of a high-speed Infinity Fabric to connect several equal-sized CPU cores together so that they could function more efficiently.

With a few exceptions, most of these packaging and interconnect capabilities were limited to a company's own products, meaning it could only mix and match various components of its own. The recognition that combining components from different vendors could be useful -- particularly in high-performance server applications -- led to the creation of the Compute Express Link (CXL) standard. CXL, which is just starting to be used in real-world products, is optimized to do things like interconnect specialized accelerators, such as AI processors, with CPUs and memory in a speedy, efficient manner.

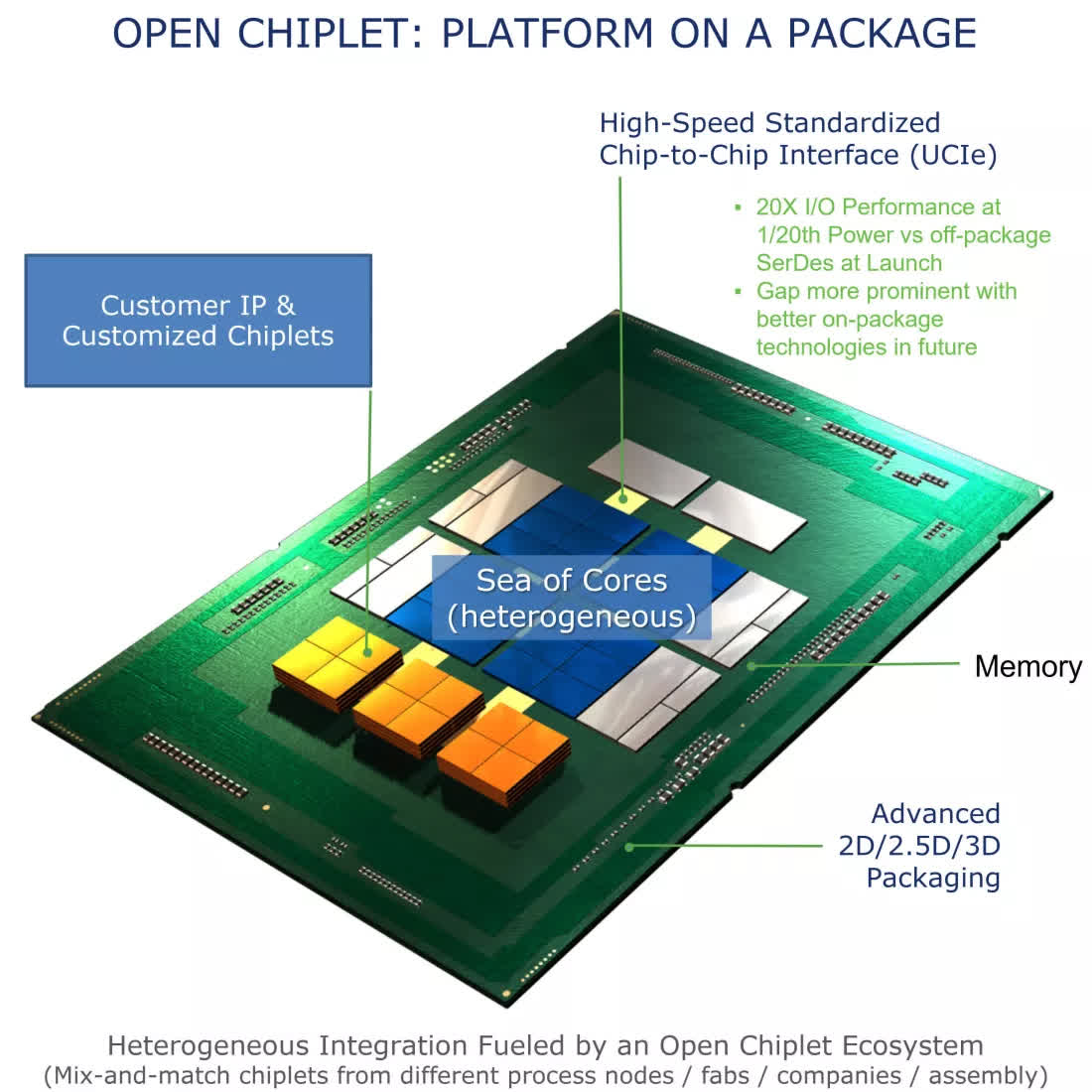

But as great as CXL may be, it didn't quite take things to the level of being able to mix and match different chiplets made by different companies using different types and sizes of manufacturing processes in a true Lego-like fashion. That's where the new UCIe standard comes in.

Started by a powerful consortium of Intel, AMD, Arm, Qualcomm, Samsung, Google, Meta, and Microsoft, as well as chip manufacturer TSMC and chip packaging and test provider ASE, UCIe builds on the CXL and PCIe 5.0 standards and defines the physical (interconnect) and logical (software) standards by which companies can start designing and building the chips of their dreams.

Want to mix an Intel CPU with an AMD GPU, a Qualcomm modem, a Google TPU AI accelerator, and a Microsoft Pluton security processor onto a single chip package, or system on package (SOP)? When UCIe-based products start to get commercialized in, say, the 2024-2025 timeframe, that's exactly what you should be able to do.

Not only is this technologically and conceptually cool, but it also opens a whole new range of opportunities for chip companies and device makers and creates many new types of options for the semiconductor industry as a whole. For example, this could enable the creation of smaller yet still financially viable semiconductor companies that focus only on very specialized chiplets or that concentrate only on putting together interesting combinations of existing parts made by others.

For device manufacturers, this theoretically allows them to build their own custom chip designs without the burden (and cost) of an entire semiconductor team. In other words, you could create an Apple-like level of chip specificity at what should be a significantly lower development cost.

From the manufacturing side, there are huge benefits as well. Though it's not well-known, not all chips can benefit from being built at cutting-edge process nodes, such as today's 4 nm and 3 nm. In fact, many chips, particularly those that process analog signals, are actually better off being built at larger process nodes.

Things like 5G modems, RF front ends, WiFi and Bluetooth radios, etc., perform significantly better when built at larger nodes, because they can avoid issues like signal leakage. As a result, companies like GlobalFoundries and others that don't have the smallest process nodes but do specialize in unique manufacturing, process, or packaging technologies should have an even brighter future in a chiplet-driven semiconductor world.

The ability to show value won't be limited to those who remain on the cutting edge of process technology -- though, to be sure, that will continue to be extremely valuable for the foreseeable future. Instead, chip design companies or foundries that can demonstrate the ability to offer unique capabilities at one of many different steps along the semiconductor industry supply chain should be able to build more viable businesses. Plus, the ability to mix and match across multiple companies could lead to a more competitive market and, hopefully, should be able to reduce the kind of supply chain disruptions we've seen over the last few years.

There's still a lot of work to be done to broaden support for UCIe even further and ensure that it works as well, and as seamlessly, as the concept first suggests. Thankfully, the initial set of companies launching the standard is impressive enough that it's bound to encourage both some obvious missing players (I'm looking at you, Apple and Nvidia) and a broad array of lesser-known companies to participate.

The possibilities for UCIe and, most importantly, its potential for disruption are enormous. Today's semiconductor industry has already morphed into an exciting and competitive new era, and because of the pandemic-driven chip shortages we've experienced in all aspects of society, awareness of the importance of semiconductors has never been higher. With the launch of UCIe, I believe there's the potential for the industry to reach an even higher level, and that, most certainly, will be interesting to watch.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.

https://www.techspot.com/news/93694-future-semiconductors-ucie.html