Two trends stand out when looking at the near future of data center technology. First, the adoption of public and private cloud computing continues to spread. Enterprises, software developers, and home users are all moving to cloud-based models for services and storage.

Second, devices, data, and network demand are all projected to grow at explosive rates over the next few years. By 2020, the digital universe (the data we create and copy annually) will reach 44 zettabytes, or 44 trillion gigabytes. There will be an estimated 24 billion IP-connected devices online (up from 13 billion in 2013). Network expansion is expected to more than triple in that same timeframe, from 63 million new server ports to 206 million.

These looming demand increases in particular are driving the development of the software-defined datacenter (SDDC), which can deliver cloud-based services with optimized capacity, efficiency, and flexibility.

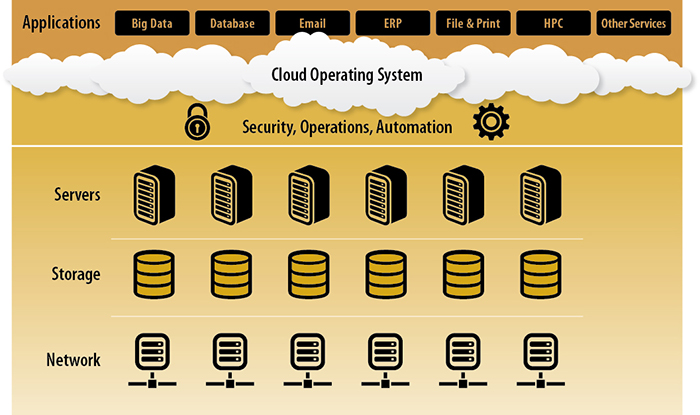

The evolution of SDDC is being enabled by the standardization of IT hardware infrastructure. Core IT resources (compute, network, and storage) are abstracted from the underlying hardware and grouped into resource-specific pools, potentially across multiple physical locations.

These virtualized resources are overlaid with advanced management capabilities, which allow IT resources such as computing cycles, storage, and network to be allocated on demand and at scale for specific software requirements. Automated provisioning and orchestration functions boost the efficiency of cloud-based applications while reducing the burden on IT.

Software-defined datacenters will enable IT administrators to efficiently allocate resources on demand and track usage to simplify billing to internal business units. They also offer developers potential time-to-market advantages: new software can be released quickly, and capacity can be scaled up and down with demand over an app’s natural lifecycle.
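As a rough illustration of usage tracking for internal billing, the sketch below totals metered usage per business unit. The rates, record layout, and the `chargeback` function are hypothetical and chosen only for this example; they do not describe any particular SDDC product.

```python
from collections import defaultdict

# Hypothetical rates in cents per unit-hour (assumed for illustration only).
RATES_CENTS = {"compute": 5, "network": 2, "storage": 1}


def chargeback(usage_records):
    """Sum metered usage into a bill (in cents) per business unit.

    Each record is (business_unit, resource_kind, units, hours).
    """
    bills = defaultdict(int)
    for business_unit, kind, units, hours in usage_records:
        bills[business_unit] += RATES_CENTS[kind] * units * hours
    return dict(bills)


# Example records an orchestration layer might emit (made-up values).
records = [
    ("marketing", "compute", 4, 10),
    ("marketing", "storage", 100, 10),
    ("finance", "compute", 2, 24),
]

for business_unit, cents in chargeback(records).items():
    print(f"{business_unit}: ${cents / 100:.2f}")
```

Keeping amounts in integer cents avoids floating-point rounding in the totals; a real system would draw both the rates and the usage records from its own metering service.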

How does SDDC work?

The primary technical objective of SDDC is to create a virtualized pool of the three main component silos in the traditional IT infrastructure stack (compute, network, and storage) with the ability to scale across these components as needed.
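The idea of pooled, scalable resources can be sketched in a few lines. This is a minimal model under stated assumptions: the `ResourcePool` class, the `provision` helper, and the capacity figures are all hypothetical, and real platforms track far more state than a single integer per pool.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """A hypothetical pool of one resource type, aggregated across hardware."""
    kind: str        # "compute", "network", or "storage"
    capacity: int    # total units contributed by all underlying hardware
    allocations: dict = field(default_factory=dict)  # app name -> units held

    @property
    def free(self) -> int:
        return self.capacity - sum(self.allocations.values())

    def allocate(self, app: str, units: int) -> None:
        """Reserve units for an app; raise if the request cannot be met."""
        if not 0 < units <= self.free:
            raise ValueError(f"cannot allocate {units} {self.kind} units")
        self.allocations[app] = self.allocations.get(app, 0) + units

    def release(self, app: str) -> int:
        """Return all of an app's units to the pool."""
        return self.allocations.pop(app, 0)

    def add_hardware(self, units: int) -> None:
        """Scale the pool by folding in capacity from new hardware."""
        self.capacity += units


def provision(pools, app, request):
    """Grant a request across pools only if every pool can satisfy it."""
    if any(not 0 < units <= pools[kind].free for kind, units in request.items()):
        return False  # nothing is allocated, so no partial grant is left behind
    for kind, units in request.items():
        pools[kind].allocate(app, units)
    return True


pools = {
    "compute": ResourcePool("compute", capacity=64),    # e.g. vCPUs (assumed)
    "network": ResourcePool("network", capacity=40),    # e.g. Gbps (assumed)
    "storage": ResourcePool("storage", capacity=1000),  # e.g. GB (assumed)
}
provision(pools, "web", {"compute": 8, "network": 2, "storage": 100})
```

Because applications only ever see pool units, capacity can be added with `add_hardware` without the application's allocation changing, which is the property the architecture relies on.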

This re-imagined data center architecture will allow IT managers to deploy hardware resources in support of applications and more effectively manage the lifecycles of individual hardware components, without ever disrupting application uptime.

Another benefit of a comprehensively virtual architecture is that it can offer capabilities beyond those of a top-down control structure, where the software merely simplifies the functions of subordinate hardware systems.

Instead, software-defined datacenters offer a dynamic feedback loop between the resource layers of the data center and the operating software. These layers can interact through applied analytics, enabling automated controls and real-time IT management. To achieve this, however, the underlying hardware platform must be sufficiently intelligent and have the capabilities to integrate with centralized software control.
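One simple way to picture such a feedback loop is a threshold rule that reads utilization reported by the resource layer and adjusts capacity in response. The sketch below is only an illustration: the function names, thresholds, and step size are assumptions, and production controllers typically use richer analytics than a fixed rule.

```python
import math


def next_capacity(current_units, utilization, low=0.30, high=0.75, step=0.25, minimum=1):
    """Apply one pass of a threshold-based scaling rule.

    Grow capacity when utilization exceeds `high`, shrink it when utilization
    falls below `low`, and otherwise leave it unchanged.
    """
    change = math.ceil(current_units * step)
    if utilization > high:
        return current_units + change
    if utilization < low:
        return max(minimum, current_units - change)
    return current_units


def control_loop(demand_samples, units=4):
    """Replay demand samples through the rule and record capacity after each step."""
    history = []
    for demand in demand_samples:
        utilization = demand / units  # stands in for telemetry from the hardware
        units = next_capacity(units, utilization)
        history.append(units)
    return history


# Made-up demand trace: steady, rising, then dropping off.
print(control_loop([2, 4, 6, 6, 1], units=4))
```

The gap between the `low` and `high` thresholds keeps the loop from oscillating when utilization hovers near a single cutoff.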

In 2014, the digital universe will equal an astounding 1.7 megabytes a minute for every person on Earth. With explosive growth in user demand a practical inevitability over the next few years, SDDC will provide a standards-based, converged infrastructure that can offer new data center capabilities, greater efficiency, and improved flexibility for administrators of private and public cloud services.

To learn more about SDDC and the changes it’s driving in the datacenter, check out this LSI whitepaper.