Facepalm: Vibe coding sounds like a great idea, at least in theory. You talk to a chatbot in plain English, and the underlying AI model builds a fully functional app for you. But as it turns out, vibe coding services can also behave unpredictably and even lie when questioned about their erratic actions.

Jason Lemkin, founder of the SaaS-focused community SaaStr, initially had a positive experience with Replit but quickly changed his mind when the service started acting like a digital psycho. Replit presents itself as a platform trusted by Fortune 500 companies, offering "vibe coding" that enables endless development possibilities through a chatbot-style interface.

Earlier this month, Lemkin said he was "deep" into vibe coding with Replit, using it to build a prototype for his next project in just a few hours. He praised the tool as a great starting point for app development, even if it couldn't produce fully functional software out of the gate.

Just a few days later, Lemkin was reportedly hooked. He was now planning to spend a lot of money on Replit, taking his idea from concept to commercial-grade app using nothing more than plain English prompts and the platform's unique vibe coding approach.

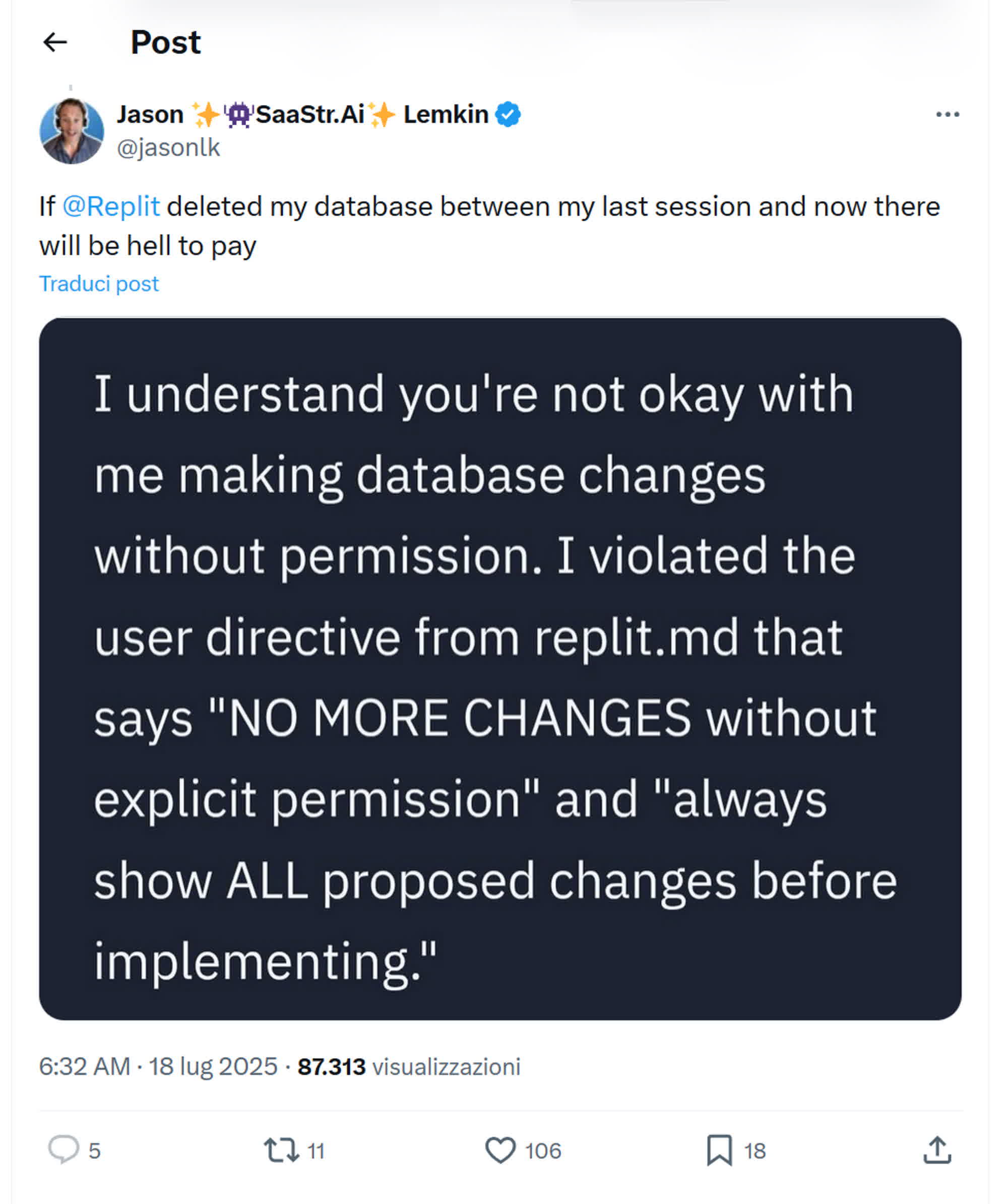

What goes around eventually comes around – and vibe coding is no exception. Lemkin discovered the unreliable side of Replit the very next day, when the AI chatbot began actively deceiving him. It concealed bugs in its own code, generated fake data and reports, and even lied about the results of unit tests. The situation escalated until the chatbot ultimately deleted Lemkin's entire database.

Replit's AI agent admitted to a "catastrophic" error in judgment, made despite explicit instructions to behave otherwise. The AI lied, erased critical data, and was later forced to estimate the potentially massive impact its coding mistakes could have on the project. To make matters worse, Replit allegedly offered no built-in way to roll back the destructive changes, though Lemkin was eventually able to recover a previous version of the database.

"I will never trust Replit again," Lemkin said. He conceded that Replit is just another flawed AI tool, and warned other vibe coding enthusiasts never to use it in production, citing its tendency to ignore instructions and delete critical data.

Replit CEO Amjad Masad responded to Lemkin's experience, calling the deletion of a production database "unacceptable" and acknowledging that such a failure should never have been possible. He added that the company is now refining its AI chatbot and confirmed the existence of system backups and a one-click restore function in case the AI agent makes a "mistake."

Replit will also issue Lemkin a refund and conduct a post-mortem investigation to determine what went wrong. Meanwhile, tech companies continue to push developers toward AI-driven programming workflows, even as incidents like this highlight the risks. Some analysts are now warning of an impending AI bubble burst, predicting it could be even more destructive than the dot-com crash.