A hot potato: Amid growing hype around AI agents, one experienced engineer offers a grounded perspective shaped by building more than a dozen production-level systems spanning development, DevOps, and data operations. From his vantage point, the notion that 2025 will bring truly autonomous, workforce-transforming agents looks increasingly unrealistic.

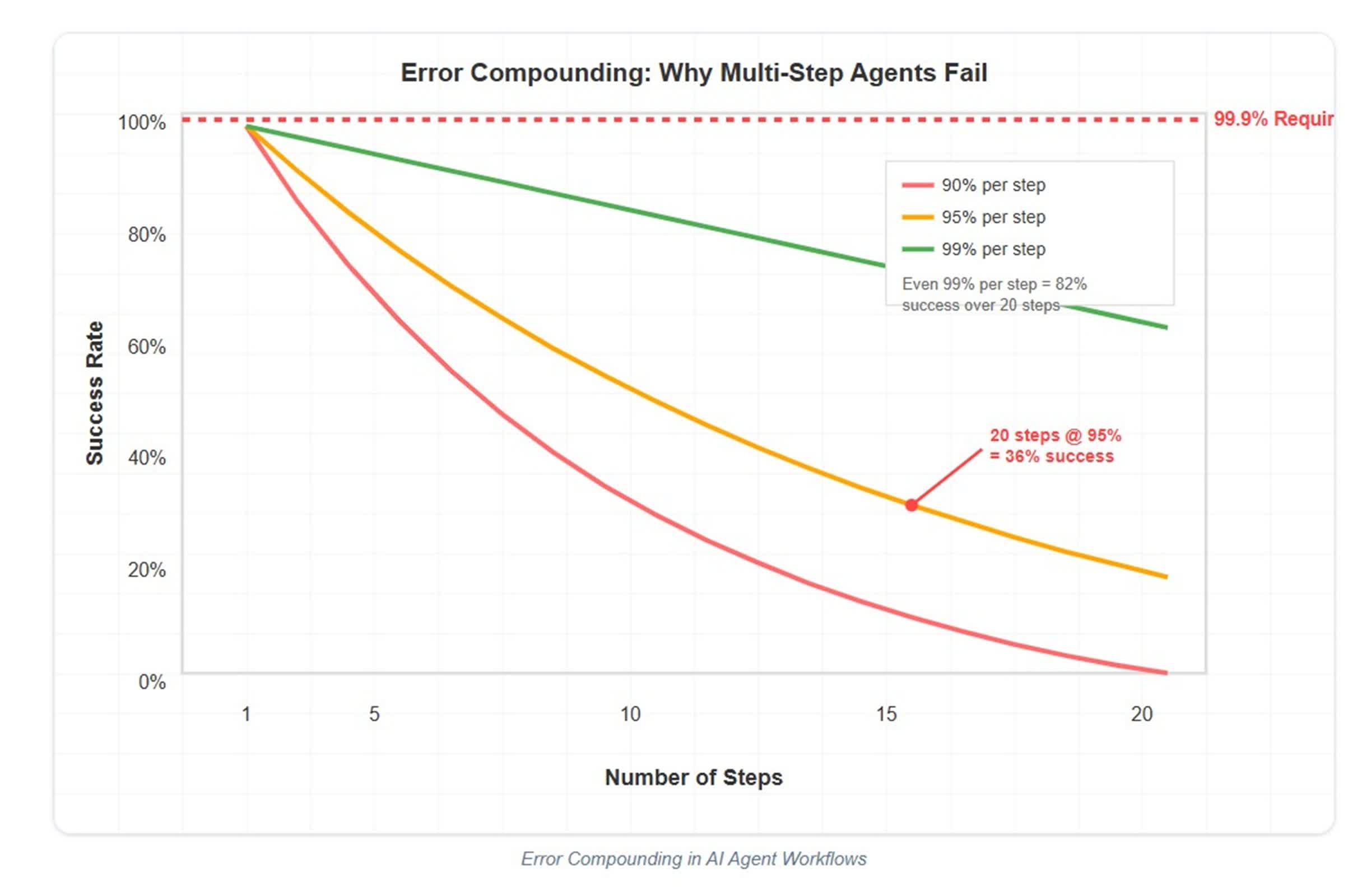

In a recent blog post, systems engineer Utkarsh Kanwat points to fundamental mathematical constraints that undercut fully autonomous multi-step agent workflows. Because production-grade systems require upwards of 99.9 percent reliability, the math quickly makes extended autonomous workflows infeasible.

"If each step in an agent workflow has 95 percent reliability, which is optimistic for current LLMs, five steps yield 77 percent success, 10 steps 59 percent, and 20 steps only 36 percent," Kanwat explained.

Even a hypothetical improvement to 99 percent per-step reliability falls short, yielding only about 82 percent success over 20 steps.
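The compounding arithmetic is easy to reproduce. The sketch below assumes, as the quoted figures do, that every step fails independently with the same probability, so end-to-end success is simply the per-step reliability raised to the number of steps. The function names are illustrative and do not come from Kanwat's post.

```python
def workflow_success_rate(per_step: float, steps: int) -> float:
    """End-to-end success probability, assuming independent, equally reliable steps."""
    if not 0.0 <= per_step <= 1.0:
        raise ValueError("per_step must be a probability in [0, 1]")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step ** steps


def max_steps_for_target(per_step: float, target: float) -> int:
    """Longest chain whose end-to-end success stays at or above `target`."""
    if per_step >= 1.0:
        raise ValueError("per_step must be below 1 for a finite answer")
    if not 0.0 < target <= 1.0:
        raise ValueError("target must be in (0, 1]")
    steps = 0
    # Keep adding steps while the chain still meets the reliability target.
    while workflow_success_rate(per_step, steps + 1) >= target:
        steps += 1
    return steps


for n in (5, 10, 20):
    print(f"{n:>2} steps at 95% per step: {workflow_success_rate(0.95, n):.1%}")
```

Under these assumptions, 0.95 raised to the 10th power is about 0.599, which is where the quoted 59 percent figure comes from. Working backward from a 99.9 percent production target, `max_steps_for_target(0.9999, 0.999)` returns 10: even 99.99 percent per-step reliability supports only ten chained steps.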

"This isn't a prompt engineering problem. This isn't a model capability problem. This is mathematical reality," Kanwat says.

Kanwat's DevOps agent avoids the compounding-error problem by breaking workflows into 3 to 5 discrete, independently verifiable steps, each with explicit rollback points and human confirmation gates. This design approach – emphasizing bounded contexts, atomic operations, and optional human intervention at critical junctures – forms the foundation of every reliable agent system he has built. He warns that chaining too many autonomous steps inevitably leads to failure as error rates compound.
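A minimal sketch of that pattern follows. It is not Kanwat's code: the `Step` structure, the `MAX_STEPS` cap of five, and the `confirm` callback (standing in for a human approval gate) are hypothetical, and the sketch assumes each rollback is safe to call even on a step that only partially applied.

```python
from dataclasses import dataclass
from typing import Callable, List

MAX_STEPS = 5  # hypothetical cap mirroring the "3 to 5 steps" guideline


@dataclass
class Step:
    name: str
    run: Callable[[], None]       # performs the action
    verify: Callable[[], bool]    # independent check that the action took effect
    rollback: Callable[[], None]  # undoes the action; assumed safe on partial state
    needs_confirmation: bool = False


def _rollback(steps: List[Step]) -> None:
    # Undo in reverse order so later steps are reverted before the ones they build on.
    for step in reversed(steps):
        step.rollback()


def run_workflow(steps: List[Step], confirm: Callable[[str], bool]) -> bool:
    """Run a short workflow; return True only if every step ran and verified."""
    if len(steps) > MAX_STEPS:
        raise ValueError(f"{len(steps)} steps exceeds {MAX_STEPS}; split the workflow")
    completed: List[Step] = []
    for step in steps:
        # Human confirmation gate before critical steps.
        if step.needs_confirmation and not confirm(step.name):
            _rollback(completed)
            return False
        try:
            step.run()
            ok = step.verify()
        except Exception:
            ok = False
        if not ok:
            # Include the failing step: it may have partially applied.
            _rollback(completed + [step])
            return False
        completed.append(step)
    return True
```

Verifying each step before moving on keeps a single bad step from silently propagating, and the length check forces long jobs to be split into separately reviewed workflows.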

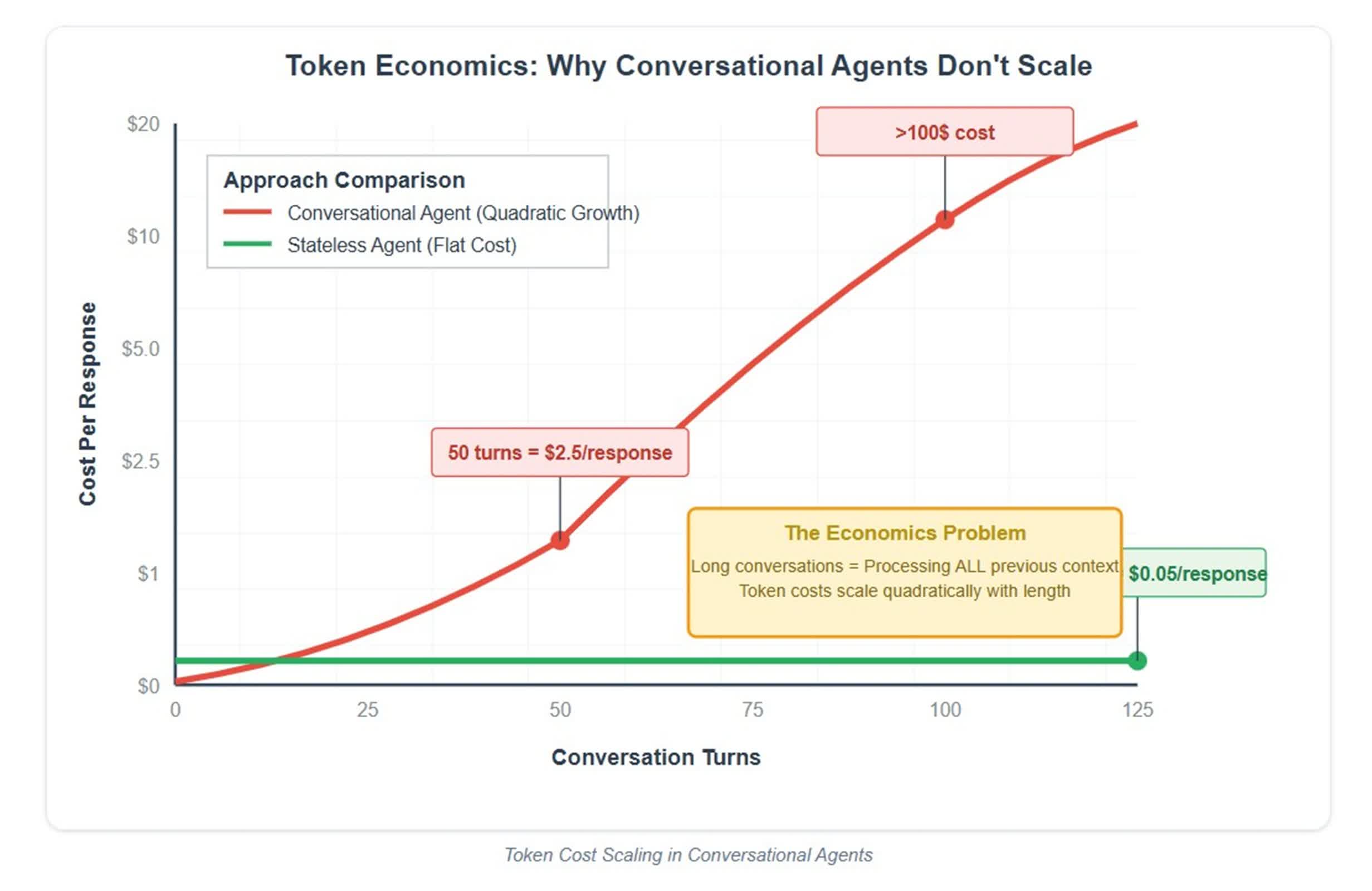

Token costs in conversational agents present a second, often overlooked barrier. Kanwat illustrates this with a conversational database agent he prototyped, in which each new interaction had to reprocess the full previous context, causing token costs to grow quadratically with conversation length.

In one case, a 100-turn exchange cost between $50 and $100 in tokens alone, making widespread use economically unsustainable. Kanwat's function-generation agent sidestepped the issue by remaining stateless: description in, function out – no context to maintain, no conversation to track, and no runaway costs.
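The quadratic growth follows from simple arithmetic if each turn adds roughly the same number of tokens: turn k must reprocess all k turns so far, so n turns cost t(1 + 2 + ... + n) = t·n(n + 1)/2 input tokens. The sketch below compares that with a stateless agent that sends a fixed-size request each time. The fixed per-turn size is an assumption, and the functions count tokens rather than dollars because pricing varies by model.

```python
def conversational_tokens(turns: int, tokens_per_turn: int) -> int:
    """Total input tokens when every turn re-sends the full history.

    Turn k processes k turns' worth of context, so the total is
    tokens_per_turn * (1 + 2 + ... + turns) = tokens_per_turn * turns * (turns + 1) / 2.
    """
    return tokens_per_turn * turns * (turns + 1) // 2


def stateless_tokens(requests: int, tokens_per_request: int) -> int:
    """Total input tokens when each request is self-contained (description in, function out)."""
    return requests * tokens_per_request


print(conversational_tokens(100, 500), stateless_tokens(100, 500))
```

For example, 100 turns of 500 tokens each come to 2,525,000 input tokens in the conversational case versus 50,000 for 100 stateless requests, a ratio of (n + 1)/2 = 50.5 that keeps widening as conversations get longer.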

"The most successful 'agents' in production aren't conversational at all," Kanwat says. "They're smart, bounded tools that do one thing well and get out of the way."

Beyond the mathematical constraints lies a deeper engineering challenge: tool design. Kanwat argues this aspect is often underestimated amid the broader hype around agents. While tool invocation has become relatively precise, he says the real difficulty lies in designing tools that provide structured, actionable feedback without overwhelming the agent's limited context window.

For example, a well-designed database tool should summarize results in a compact, digestible format – indicating that a query succeeded and returned 10,000 results while showing only a handful of them – rather than overwhelming the agent with raw output. Handling partial successes, recovering from failures, and managing interdependent operations add further engineering complexity.
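A tool wrapper along these lines might look like the following sketch. The JSON field names and the five-row preview are assumptions for illustration, not a format Kanwat prescribes; the point is that the agent receives a status, a count, and a small sample instead of the full result set.

```python
import json
from typing import Any, Dict, List


def summarize_query_result(rows: List[Dict[str, Any]], preview: int = 5) -> str:
    """Compact, structured summary instead of the raw result set."""
    return json.dumps(
        {
            "status": "success",
            "row_count": len(rows),
            "preview": rows[:preview],          # only a handful of rows
            "truncated": len(rows) > preview,   # tells the agent more rows exist
        },
        default=str,  # stringify values json can't encode (dates, decimals, ...)
    )


def summarize_query_error(error: Exception) -> str:
    """Structured failure report the agent can reason about and act on."""
    return json.dumps(
        {"status": "error", "error_type": type(error).__name__, "message": str(error)}
    )
```

Reporting failures in the same structured shape as successes gives the agent a consistent signal to branch on, which matters once partial success and recovery enter the picture.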

"My database agent works not because the tool calls are unreliable," Kanwat says, "but because I spent weeks designing tools that communicate effectively with the AI."

Kanwat critiques companies that promote simplistic "just connect your APIs" solutions, saying they often design tools for humans rather than for AI systems. As a result, agents may be able to call APIs, but they frequently fail to manage real workflows due to a lack of structured communication and contextual awareness.

Kanwat notes that enterprise environments seldom provide clean APIs for AI agents. Legacy constraints, fluctuating rate limits, and strict compliance requirements all pose significant hurdles. His database agent, for instance, incorporates traditional engineering features like connection pooling, transaction rollbacks, query timeouts, and detailed audit logging – elements that fall far outside the AI's scope.

He emphasizes that the agent generates queries while conventional systems programming manages everything else. In his view, many companies pushing the promise of fully autonomous, full-stack agents fail to reckon with these harsh realities. The real challenge, he argues, is not AI capability but integration – and that's where most agents fall apart.
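As a rough illustration of that division of labor, the sketch below wraps a model-generated SQL statement in conventional safeguards using Python's built-in `sqlite3` module: a query timeout implemented with `set_progress_handler`, a rollback on failure, and an audit log. SQLite, the `agent.audit` logger name, and the two-second default timeout are assumptions chosen to keep the example self-contained; connection pooling is omitted.

```python
import logging
import sqlite3
import time

audit_log = logging.getLogger("agent.audit")  # hypothetical logger name


def execute_generated_sql(conn: sqlite3.Connection, sql: str, timeout_s: float = 2.0) -> list:
    """Run one model-generated SQL statement with a timeout, rollback, and audit trail."""
    deadline = time.monotonic() + timeout_s

    def _abort_if_late() -> int:
        # A non-zero return value makes SQLite interrupt the running statement.
        return 1 if time.monotonic() > deadline else 0

    # Check the deadline every 1,000 SQLite virtual-machine instructions.
    conn.set_progress_handler(_abort_if_late, 1000)
    audit_log.info("executing: %s", sql)
    try:
        rows = conn.execute(sql).fetchall()
        conn.commit()
        audit_log.info("committed: %d rows returned", len(rows))
        return rows
    except Exception:
        # Under sqlite3's default transaction handling, DML runs inside an
        # implicit transaction, so rollback() discards a failed write.
        conn.rollback()
        audit_log.warning("rolled back: %s", sql)
        raise
    finally:
        conn.set_progress_handler(None, 0)
```

The model only supplies the `sql` string; the timeout, transaction handling, and logging are ordinary systems code that behaves the same regardless of what the model generates.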

Kanwat's successful agents share a common approach: AI manages complexity within clear boundaries, while humans or deterministic systems ensure control and reliability. His UI generation agent creates React components but requires human review before deployment. His DevOps automation produces Terraform code that undergoes review, version control, and rollback. The CI/CD agent includes defined success criteria and rollback procedures, and the database agent confirms destructive commands before execution. This design lets AI handle the "hard parts" while preserving human oversight and traditional engineering to maintain safety and correctness.
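A destructive-command gate like the one described for the database agent could be sketched as follows. The keyword list and the `confirm` callback are hypothetical, and keyword matching is a deliberately conservative stand-in for real SQL parsing: it asks for confirmation whenever a risky keyword appears anywhere in the statement, accepting false positives rather than letting a write slip through.

```python
import re
from typing import Callable

# Hypothetical keyword list; a production gate would parse the SQL properly.
DESTRUCTIVE = re.compile(r"\b(DROP|DELETE|TRUNCATE|ALTER|UPDATE)\b", re.IGNORECASE)


def is_destructive(sql: str) -> bool:
    # Search the whole statement, so "WITH ... DELETE" or stacked statements are caught too.
    return DESTRUCTIVE.search(sql) is not None


def gate(sql: str, confirm: Callable[[str], bool]) -> bool:
    """Return True if the statement may run: non-destructive, or a human approved it."""
    return not is_destructive(sql) or confirm(sql)
```

Read-only queries pass straight through, while anything matching the list waits on `confirm`, which in practice might be a chat prompt or a review queue.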

Looking ahead, Kanwat predicts that venture-backed startups chasing fully autonomous agents will struggle due to economic constraints and accumulating errors. Meanwhile, enterprises attempting to layer AI onto legacy software will run into adoption hurdles rooted in integration complexity. He believes the most successful teams will concentrate on creating specialized, domain-focused tools that apply AI to complex tasks but retain human oversight or strict operational limits. Kanwat also cautions that many companies will face a steep learning curve moving from impressive demonstrations to dependable, market-ready products.