Editor's take: Amazon has patched a serious flaw in its Alexa platform that could have let attackers access personal information, voice history, and other data tied to your Alexa device and Amazon account. It's a reminder that smart assistant devices can be as vulnerable as they are convenient, and that you, the user, should take steps to lock down how you use them.

Amazon has sold an estimated 200 million Alexa-powered devices over the last five years, most of them Echo smart speakers that can help with some aspects of your digital life. The company often sells them below cost, so that number is only likely to grow.

There's a lot to be said for the "convenience" these Alexa-powered speakers offer, depending on whom you ask, but it comes at a cost to privacy and security. For instance, Amazon pays human reviewers to listen to snippets of your voice recordings to improve the artificial intelligence behind Alexa, and even if you opt out, there's no guarantee that existing transcripts will be deleted.

Update: Amazon says it will delete transcripts from its records once you delete the corresponding interactions from Alexa (more on that below), a change that likely reflects tighter privacy policies and increased government scrutiny.

Robert Fredrick, a former AWS executive, said in an interview that he always turns off his Alexa-enabled speaker whenever he wants to keep his conversations private. Researchers have also demonstrated how easily motivated attackers can take over Alexa, Siri, and Google Home smart speakers using lasers, even from hundreds of feet away.

More recently, a separate group of researchers at Check Point identified a new exploit for Amazon's Alexa-powered speakers that could give attackers access to your personal information, voice history, and other data tied to your Amazon account.

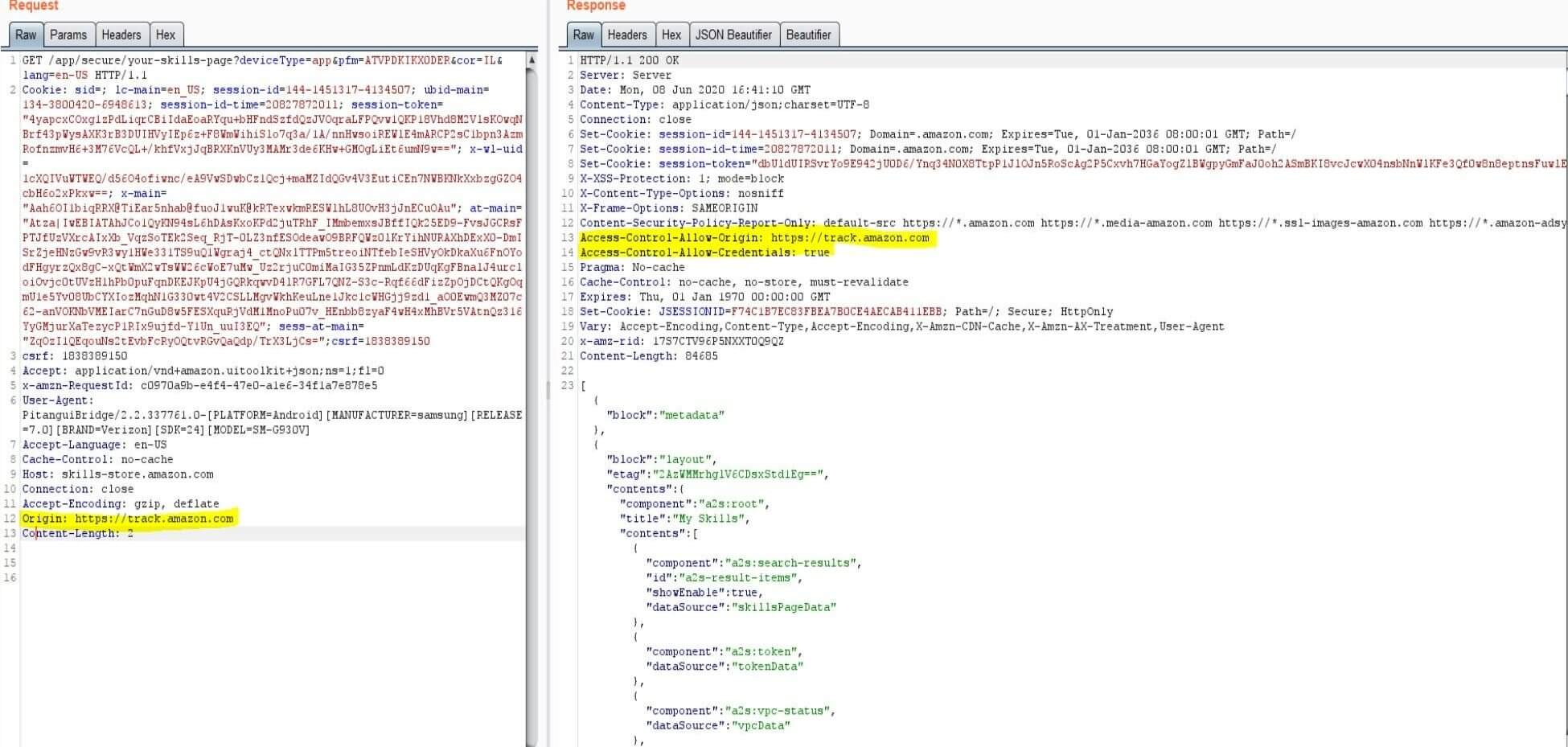

This time, the point of attack was the Alexa companion app. Using a well-known universal script to bypass a protection called SSL pinning, the researchers were able to inspect the app's network traffic. They found several bugs in Alexa's web services that could let someone gather your personal information and even install Alexa skills on your account on your behalf.
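For readers wondering what SSL pinning actually checks, here is a minimal Python sketch of the idea, not the Alexa app's implementation. After the normal TLS handshake succeeds, the client additionally compares the SHA-256 fingerprint of the server's certificate against a value baked into the app, so an interception proxy presenting its own certificate is rejected even if the device trusts that proxy's CA. The function names and the `expected_fp` parameter are hypothetical, and real apps often pin a public key rather than the whole certificate; bypass scripts work by hooking and disabling exactly this kind of comparison inside the app.

```python
import hashlib
import socket
import ssl


def cert_fingerprint(der_cert: bytes) -> str:
    """Return the SHA-256 hex digest of a DER-encoded certificate."""
    return hashlib.sha256(der_cert).hexdigest()


def connect_with_pin(host: str, expected_fp: str, port: int = 443,
                     timeout: float = 5.0) -> ssl.SSLSocket:
    """Open a TLS connection, then enforce a hypothetical certificate pin.

    Standard certificate-chain and hostname validation still run first;
    the pin check is an extra layer on top of them.
    """
    context = ssl.create_default_context()
    sock = socket.create_connection((host, port), timeout=timeout)
    tls = context.wrap_socket(sock, server_hostname=host)
    der = tls.getpeercert(binary_form=True)
    if der is None or cert_fingerprint(der) != expected_fp.lower():
        tls.close()
        # A proxy's certificate would produce a different fingerprint here.
        raise ssl.SSLError("server certificate does not match pinned fingerprint")
    return tls
```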

Alexa's web services used a misconfigured cross-origin resource sharing (CORS) policy that accepted requests from any Amazon subdomain. To exploit it, an attacker only needed to trick you into clicking a crafted link to track.amazon.com, where injected code could run. That code could then send requests to Alexa's services using your session cookies and act on your behalf, for example by installing or removing skills. From there, an attacker could get creative and reach much of the data tied to your account.
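To illustrate the class of bug described above, here is a minimal Python sketch of a server-side origin check. It is not Amazon's code: the allowlist entry, function names, and header-building logic are hypothetical. It contrasts a permissive rule that trusts any `amazon.com` subdomain with an exact-match allowlist. Under the permissive rule, a subdomain running injected script, such as the track.amazon.com example, is granted credentialed cross-origin access.

```python
from typing import Callable, Dict
from urllib.parse import urlsplit

# Hypothetical allowlist: the only origins that should be able to make
# credentialed cross-origin requests to the API.
TRUSTED_ORIGINS = {"https://alexa.amazon.com"}


def permissive_allows(origin: str) -> bool:
    """Overly broad check: trusts any HTTPS origin on any amazon.com subdomain."""
    parts = urlsplit(origin)
    host = parts.hostname or ""
    return parts.scheme == "https" and (host == "amazon.com" or host.endswith(".amazon.com"))


def strict_allows(origin: str) -> bool:
    """Exact-match check against an explicit allowlist of origins."""
    return origin in TRUSTED_ORIGINS


def cors_headers(origin: str, allows: Callable[[str], bool]) -> Dict[str, str]:
    """Build the CORS response headers for a credentialed request from `origin`."""
    if not allows(origin):
        return {}  # No CORS headers: the browser blocks the page from reading the response.
    return {
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Credentials": "true",
    }


if __name__ == "__main__":
    # A subdomain where an attacker has managed to run script.
    compromised = "https://track.amazon.com"
    print(cors_headers(compromised, permissive_allows))
    print(cors_headers(compromised, strict_allows))
```

Running it, the permissive check returns headers that let the compromised subdomain read responses sent with the victim's cookies, while the strict check returns an empty dictionary. The design point is that trusting a whole domain means trusting its weakest subdomain.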

The good news is that Amazon patched the vulnerability as soon as it was informed about it.

There's also something you can do to limit what a similar exploit could expose, such as your voice history. It's as simple as saying "Alexa, delete everything I said today." You can also do this from the Alexa companion app by going to Settings > Alexa Privacy > Review Voice History > Delete All Recordings for All History.

Keep in mind that this only applies to voice data collected by Amazon and doesn't affect any third-party skills you have installed. Until these smart devices become more secure, you'd be better off not installing any banking or payment-related skills.