What just happened? Samsung this week announced it has developed what it is calling the industry's first High Bandwidth Memory (HBM) with built-in artificial intelligence processing. The new processing-in-memory (PIM) architecture brings AI computing capabilities directly into the memory subsystem, accelerating processing in data centers, high-performance computers and AI-enabled mobile apps.

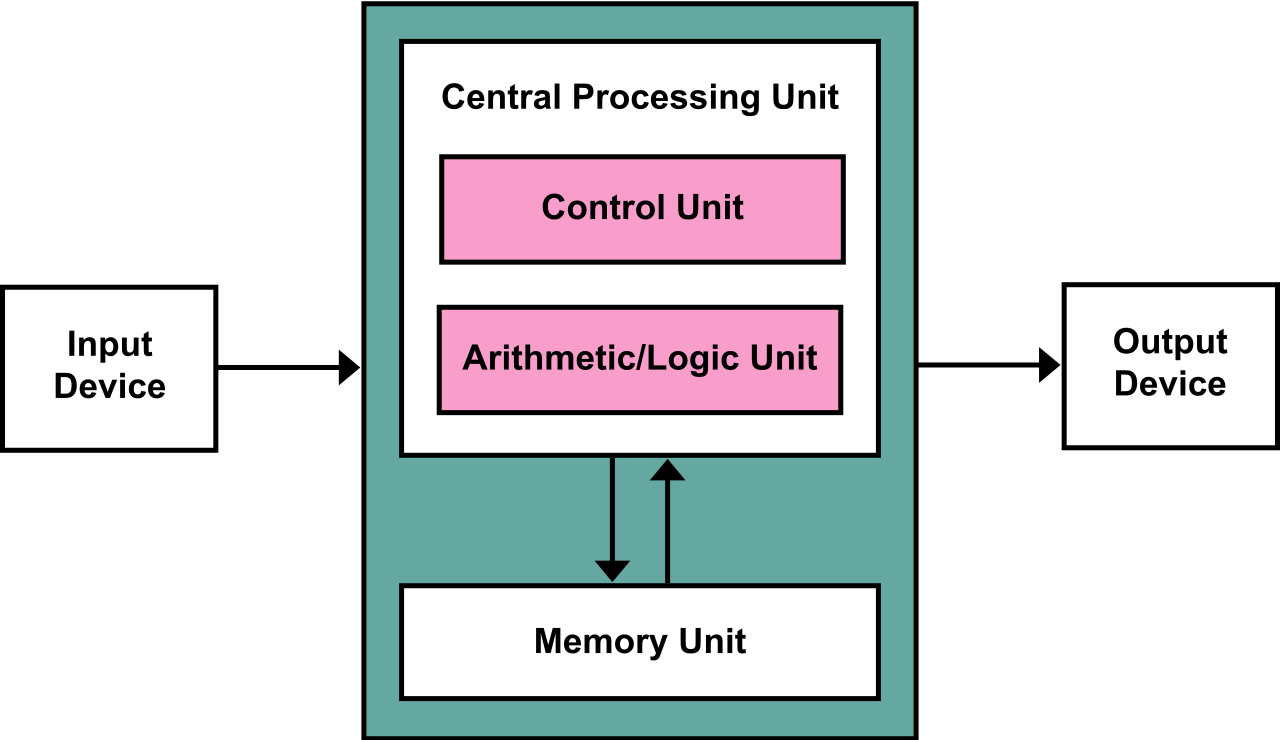

As Samsung explains, most computing systems today are based on the von Neumann architecture. This sequential processing approach involves separate CPU and memory units with data constantly being shuffled between them, creating a bottleneck.

Samsung's HBM-PIM puts a DRAM-optimized AI engine inside each memory bank. This enables parallel processing and minimizes data movement, improving performance and reducing power consumption. According to Samsung, when used in conjunction with Samsung's HBM2 Aquabolt, the architecture can deliver more than "twice the system performance while reducing energy consumption by more than 70 percent."
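To make the data-movement argument concrete, here is a minimal toy model in Python. It is not Samsung's design or programming interface: the bank count, value sizes and function names are all hypothetical, chosen only to show why computing inside each bank shrinks the traffic between memory and processor. It sums values spread across several banks, once by shipping every value to the CPU and once by letting each bank reduce its own data and send back a single partial result.

```python
# Toy illustration only: sizes and names are assumptions, not HBM-PIM specifics.

BYTES_PER_VALUE = 2    # assume 16-bit stored values
BYTES_PER_PARTIAL = 4  # assume each bank returns one 32-bit partial sum


def make_banks(num_banks, values_per_bank):
    """Build fake memory banks filled with small deterministic integers."""
    return [[(b + i) % 7 for i in range(values_per_bank)]
            for b in range(num_banks)]


def sum_on_cpu(banks):
    """Conventional path: every value crosses the bus before the CPU adds it."""
    total = 0
    bytes_moved = 0
    for bank in banks:
        for value in bank:
            bytes_moved += BYTES_PER_VALUE
            total += value
    return total, bytes_moved


def sum_in_memory(banks):
    """PIM-style path: each bank reduces locally (conceptually in parallel),
    so only one partial sum per bank crosses the bus."""
    partials = [sum(bank) for bank in banks]
    bytes_moved = len(partials) * BYTES_PER_PARTIAL
    return sum(partials), bytes_moved


banks = make_banks(num_banks=16, values_per_bank=1024)
cpu_total, cpu_bytes = sum_on_cpu(banks)
pim_total, pim_bytes = sum_in_memory(banks)

print(f"CPU path: total={cpu_total}, bytes moved={cpu_bytes}")
print(f"PIM path: total={pim_total}, bytes moved={pim_bytes}")
```

Both paths produce the same total, but in this sketch the conventional path moves 32,768 bytes across the bus while the in-memory path moves 64. Those figures come from the made-up sizes above and say nothing about real hardware; they only illustrate the kind of traffic reduction that bank-level processing is meant to achieve.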

Samsung further noted that HBM-PIM doesn't require any hardware or software changes, enabling faster integration into existing systems.

Samsung's new HBM-PIM is currently being tested by AI solution partners, with validations expected to be completed within the first half of 2021. Samsung is also presenting the new tech at the International Solid-State Circuits Virtual Conference (ISSCC) today in a paper titled "A 20nm 6GB function-in-memory DRAM based on HBM2 with a 1.2TFLOPS programmable computing unit using bank-level parallelism for machine learning applications."