In a nutshell: Stable Diffusion is a phenomenal example of how a picture can be worth more than a thousand words. In fact, by dropping the text prompt altogether, the image-generating AI could be used to produce highly compressed, high-quality image files.

Stable Diffusion is a machine learning model capable of generating weirdly complex and (somewhat) believable images from natural language descriptions alone. The text-to-image AI model is incredibly popular among users, even though online art communities have started to reject AI-based images.

Besides being a controversial example of machine-assisted visual expression, Stable Diffusion could have a future as a powerful image compression algorithm. Matthias Bühlmann, a self-described "software engineer, entrepreneur, inventor and philosopher" from Switzerland, recently explored using the machine learning model for a completely different kind of graphics data manipulation.

In its usual role, Stable Diffusion 1.4 can generate artwork thanks to its acquired ability to make relevant statistical associations between images and related words. The algorithm has been trained by feeding millions of Internet images to the "AI monster," and it needs a 4GB file of model weights to run. Rather than storing the training images themselves, the model works on compressed, smaller mathematical representations of images, which can be decoded back into pictures.

In Bühlmann's experiment, the text prompt was bypassed altogether to put Stable Diffusion's image encoder to work. The encoder takes small source images (512×512 pixels) and turns them into an even smaller (64×64) representation. The compressed representations are then decoded back to their original resolution, with pretty interesting results.
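To get a feel for the numbers, here is a back-of-the-envelope sketch in Python comparing the raw size of a 512×512 image with its 64×64 representation. It rests on assumptions that are not in the write-up above: a source image with three 8-bit color channels, a latent with four channels (as in Stable Diffusion 1.x's autoencoder), and two hypothetical storage precisions for the latent values. The helper `raw_size_bytes` is made up for illustration and is not part of Bühlmann's code.

```python
# Rough size comparison between a 512x512 image and its 64x64 latent.
# Assumptions (not from the article): 8-bit RGB source, 4 latent channels,
# and two hypothetical ways of storing each latent value.

def raw_size_bytes(width: int, height: int, channels: int, bytes_per_value: int) -> int:
    """Uncompressed size of a width x height grid with the given channel layout."""
    return width * height * channels * bytes_per_value

# Source image: 512x512 pixels, 3 channels, 1 byte per channel.
source_bytes = raw_size_bytes(512, 512, channels=3, bytes_per_value=1)

# Latent stored as 32-bit floats (4 bytes per value).
latent_float32_bytes = raw_size_bytes(64, 64, channels=4, bytes_per_value=4)

# Latent quantized to 8 bits per value (hypothetical storage choice).
latent_8bit_bytes = raw_size_bytes(64, 64, channels=4, bytes_per_value=1)

print(f"source RGB:     {source_bytes} bytes")
print(f"latent float32: {latent_float32_bytes} bytes "
      f"({source_bytes // latent_float32_bytes}x smaller)")
print(f"latent 8-bit:   {latent_8bit_bytes} bytes "
      f"({source_bytes // latent_8bit_bytes}x smaller)")
```

These are raw, uncompressed sizes, so they are not directly comparable to a JPG or WebP file. They only illustrate why the encoder's output is an attractive starting point for lossy compression.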

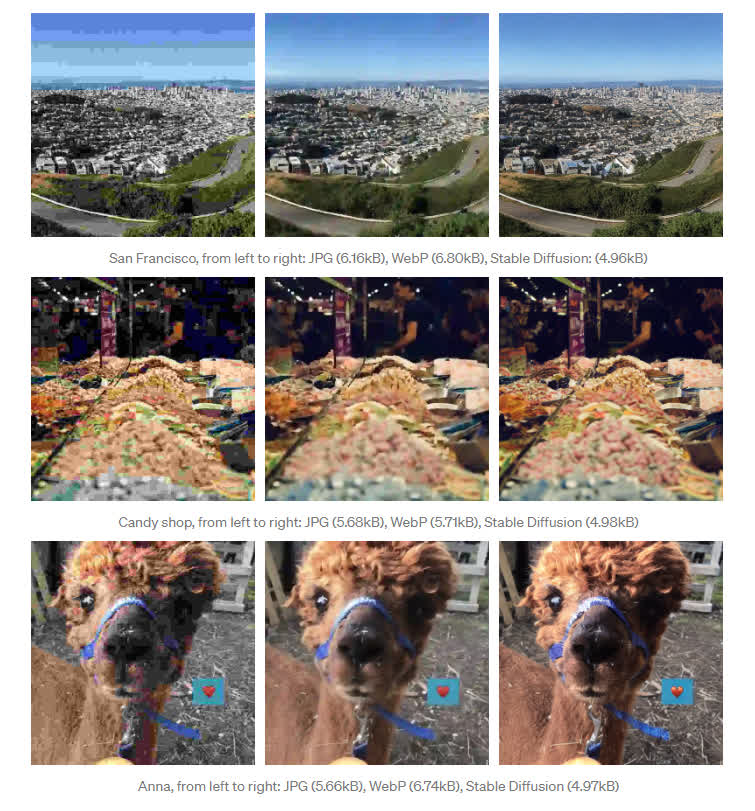

The developer highlighted how SD-compressed images had "vastly superior image quality" at a smaller file size when compared to JPG or WebP. The Stable Diffusion files were smaller and the images exhibited more defined details, showing fewer compression artifacts than the ones produced by standard compression algorithms.

Could Stable Diffusion have a future as a higher-quality algorithm for lossy compression of images on the Internet and elsewhere? The method used by Bühlmann (for which there's a code sample online) still has some limitations: it doesn't work so well with text or faces, and it can sometimes generate additional details that were not present in the source image. The need for a 4GB model file and the time-consuming decoding process are a pretty substantial burden as well.