Forward-looking: First they came for our art, then they came for our text and garbled essays. Now they are coming for music, with a "new" machine learning algorithm that's adapting image generation to create, interpolate and loop new music clips and genres.

Seth Forsgren and Hayk Martiros adapted the Stable Diffusion (SD) algorithm to music, creating a weird new kind of "music machine" in the process. Riffusion works on the same principle as SD, turning a text prompt into new, AI-generated content. The main difference is that the model has been specifically fine-tuned on images of sonograms, which depict music and audio in visual form.

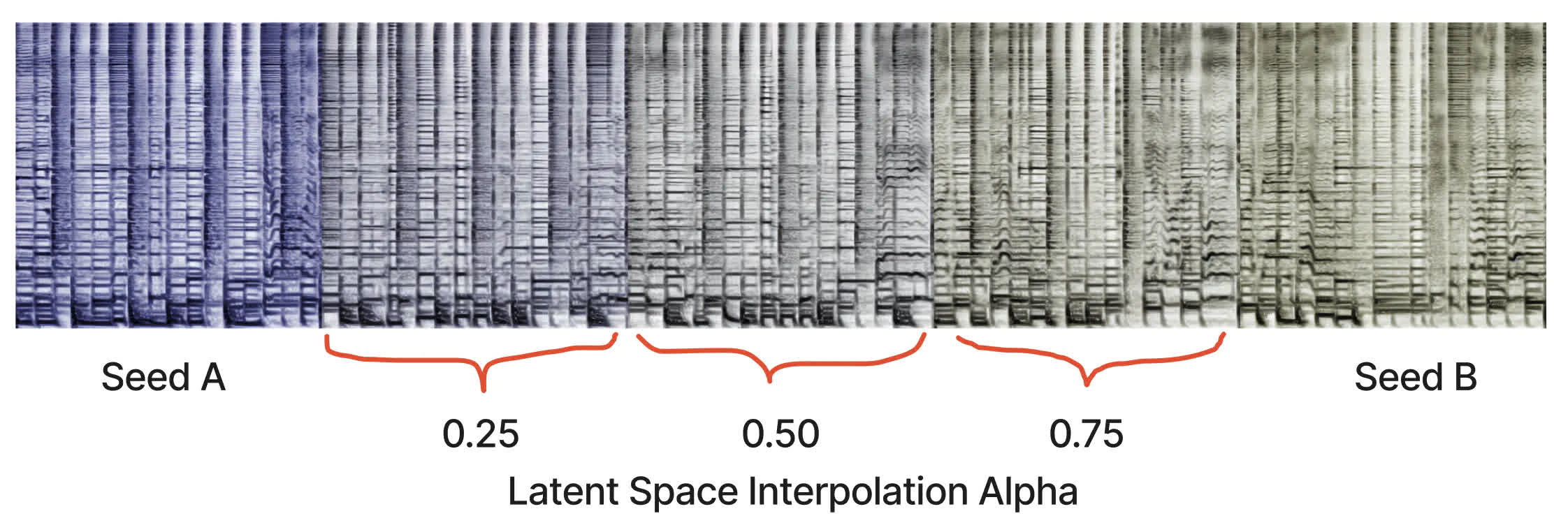

As explained on the Riffusion website, a sonogram (also called an audio spectrogram) is a visual representation of the frequency content of a sound clip. The X-axis represents time and the Y-axis represents frequency, while the color of each pixel gives the amplitude of the audio at the frequency and time defined by its row and column.
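To make the axes concrete, here is a minimal NumPy sketch that turns a waveform into a magnitude spectrogram, with rows as frequency bins (Y-axis) and columns as time frames (X-axis), and then maps the amplitudes to 8-bit pixel intensities on a decibel scale. The FFT size, hop length, Hann window and 80 dB range are illustrative assumptions, not Riffusion's actual settings, and Riffusion's own converter may use a different frequency scale than the linear one shown here.

```python
import numpy as np


def magnitude_spectrogram(signal: np.ndarray, n_fft: int = 512, hop: int = 128) -> np.ndarray:
    """Return |STFT| with rows = frequency bins (Y-axis), columns = time frames (X-axis)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    # Slice the signal into overlapping, windowed frames: shape (n_frames, n_fft).
    frames = np.stack([signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    # rfft per frame gives (n_frames, n_fft // 2 + 1); transpose so frequency is the row axis.
    return np.abs(np.fft.rfft(frames, axis=1)).T


def to_image(spec: np.ndarray, dynamic_range_db: float = 80.0) -> np.ndarray:
    """Map amplitudes to 8-bit pixel intensities on a log (dB) scale."""
    db = 20 * np.log10(spec + 1e-6)
    # Normalize so the loudest pixel is 0 dB, then clip to the chosen dynamic range.
    db = np.clip(db - db.max(), -dynamic_range_db, 0.0)
    img = ((db + dynamic_range_db) / dynamic_range_db * 255).astype(np.uint8)
    # Flip vertically so low frequencies sit at the bottom, as in a plotted spectrogram.
    return img[::-1]
```

For a 1 kHz test tone sampled at 8 kHz, `magnitude_spectrogram` returns a 257 × 59 array whose brightest row in every column is bin 64, since 1000 Hz × 512 / 8000 Hz = 64.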

Riffusion uses v1.5 of the Stable Diffusion image model "with no modifications," only fine-tuning it on images of sonograms/audio spectrograms paired with text. Audio processing happens downstream of the model, and the algorithm can generate infinite variations of a prompt by varying the seed.

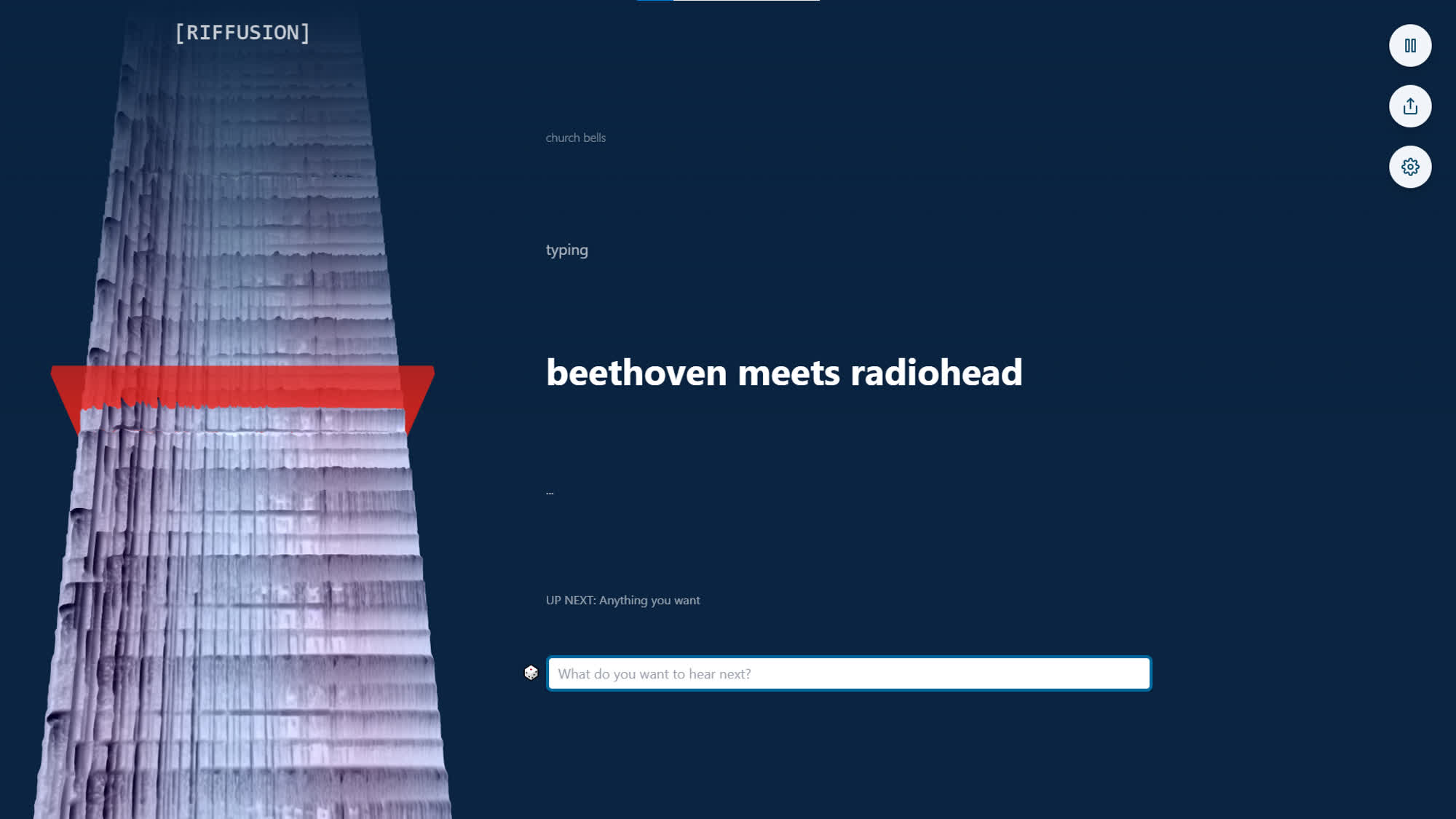

After generating a new sonogram, Riffusion converts the image into sound with Torchaudio. Because the model was trained on spectrograms of sounds, songs and genres, it can generate new clips from all kinds of text prompts. A prompt like "Beethoven meets Radiohead," for instance, is a nice example of how otherworldly or uncanny machine learning algorithms can behave.
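A spectrogram image stores only magnitudes, so turning it back into sound means estimating the missing phase. A standard technique for this is Griffin-Lim, which alternates between an inverse STFT and re-measuring the phase of the result; Torchaudio ships it as `torchaudio.transforms.GriffinLim`. The article only says Riffusion uses Torchaudio, so treating Griffin-Lim as its method is an assumption. The sketch below is a plain NumPy version so it runs without PyTorch, and it assumes the pixel values have already been mapped back to linear STFT magnitudes (undoing any dB scaling). The `stft`, `istft` and `griffin_lim` helpers and their parameters are illustrative, not Riffusion's code.

```python
import numpy as np


def stft(x: np.ndarray, n_fft: int, hop: int, window: np.ndarray) -> np.ndarray:
    """Complex STFT with rows = frequency bins, columns = time frames."""
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1).T


def istft(spec: np.ndarray, n_fft: int, hop: int, window: np.ndarray, length: int) -> np.ndarray:
    """Least-squares inverse STFT: windowed overlap-add divided by the summed squared window."""
    frames = np.fft.irfft(spec.T, n=n_fft, axis=1) * window
    out = np.zeros(length)
    norm = np.zeros(length)
    for i, frame in enumerate(frames):
        out[i * hop : i * hop + n_fft] += frame
        norm[i * hop : i * hop + n_fft] += window ** 2
    return out / np.maximum(norm, 1e-8)


def griffin_lim(magnitude: np.ndarray, n_fft: int = 512, hop: int = 128,
                n_iter: int = 32, seed: int = 0) -> np.ndarray:
    """Estimate a waveform whose STFT magnitude approximates `magnitude` (freq x time)."""
    window = np.hanning(n_fft)
    length = n_fft + hop * (magnitude.shape[1] - 1)
    # Start from random phase, then repeatedly keep the target magnitude and
    # replace the phase with the phase of the current reconstruction.
    rng = np.random.default_rng(seed)
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))
    for _ in range(n_iter):
        audio = istft(magnitude * phase, n_fft, hop, window, length)
        phase = np.exp(1j * np.angle(stft(audio, n_fft, hop, window)))
    return istft(magnitude * phase, n_fft, hop, window, length)
```

More iterations generally give a more consistent reconstruction at the cost of compute; Torchaudio's `GriffinLim` transform exposes a comparable `n_iter` parameter.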

With the model working, Forsgren and Martiros put it all together into an interactive web app where users can experiment with the AI. Riffusion takes text prompts and "infinitely generates interpolated content in real time, while visualizing the spectrogram timeline in 3D." The audio transitions smoothly from one clip to the next, and when there is no new prompt, the app interpolates between different seeds of the same prompt.
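One common way to blend two diffusion seeds is spherical linear interpolation (slerp) between the random noise tensors each seed produces. Independent Gaussian noise vectors in high dimensions have nearly the same length, and plain averaging shrinks that length by about 30% at the midpoint, which the model never saw in training; slerp keeps it roughly constant. Whether Riffusion uses exactly this scheme is an assumption here. The `(4, 64, 64)` shape is Stable Diffusion v1.5's latent size for a 512 × 512 image, and `seed_noise` is a hypothetical helper.

```python
import numpy as np


def slerp(t: float, v0: np.ndarray, v1: np.ndarray, dot_threshold: float = 0.9995) -> np.ndarray:
    """Spherical linear interpolation between two same-shaped noise tensors."""
    a, b = v0.ravel(), v1.ravel()
    # Cosine of the angle between the two tensors, treated as flat vectors.
    dot = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    if abs(dot) > dot_threshold:
        # Nearly parallel: slerp is numerically unstable, so fall back to linear interpolation.
        return (1 - t) * v0 + t * v1
    theta = np.arccos(dot)
    return (np.sin((1 - t) * theta) * v0 + np.sin(t * theta) * v1) / np.sin(theta)


def seed_noise(seed: int, shape: tuple = (4, 64, 64)) -> np.ndarray:
    """Hypothetical helper: deterministic Gaussian starting noise for a given seed."""
    return np.random.default_rng(seed).standard_normal(shape)
```

Stepping `t` from 0 to 1 in small increments yields a sequence of starting points between two seeds; denoising each one into a spectrogram would give clips that change gradually from one to the next.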

The Riffusion web app is built with Next.js, React, TypeScript, three.js, Tailwind and Vercel, and its code has its own GitHub repository as well.

Riffusion is far from the first audio-generating AI. It is yet another offspring of the ML renaissance that has already produced Dance Diffusion, OpenAI's Jukebox, Soundraw and others, and it won't be the last, either.