A hot potato: While this year's explosion in the use of AI, both generative and otherwise, has brought concerns over job losses and the death of human creativity, it has also exacerbated long-held fears regarding artificial intelligence's implementation in weapons. Israel has been utilizing autonomous guns and drones for years. Now, its air force has started using AI to select targets for air strikes and organize wartime logistics.

Bloomberg reports that Israeli military officials have confirmed the use of an AI recommendation system that analyzes swathes of data to determine which targets to select for air strikes. The raids can then be quickly organized using another AI model called Fire Factory, which uses data about targets to calculate munition loads, prioritize and assign thousands of targets to aircraft and drones, and propose an attack schedule.

Giving AI a large degree of control over military operations has generated plenty of controversy and debate. An Israel Defense Forces (IDF) official tried to alleviate fears by emphasizing that the systems are overseen by humans who vet and approve targets and plans, but the technology is not subject to state or international regulation.

Israel's AI systems have been used in battlefield situations before, including an 11-day conflict in the Gaza Strip in 2021 that the IDF called the first "AI war" due to its use of the tech to identify rocket launchpads and deploy drone swarms. Should tensions with Iran over its nuclear enrichment program continue to ramp up, Israel's AI tools could be deployed in a larger-scale war in the Middle East.

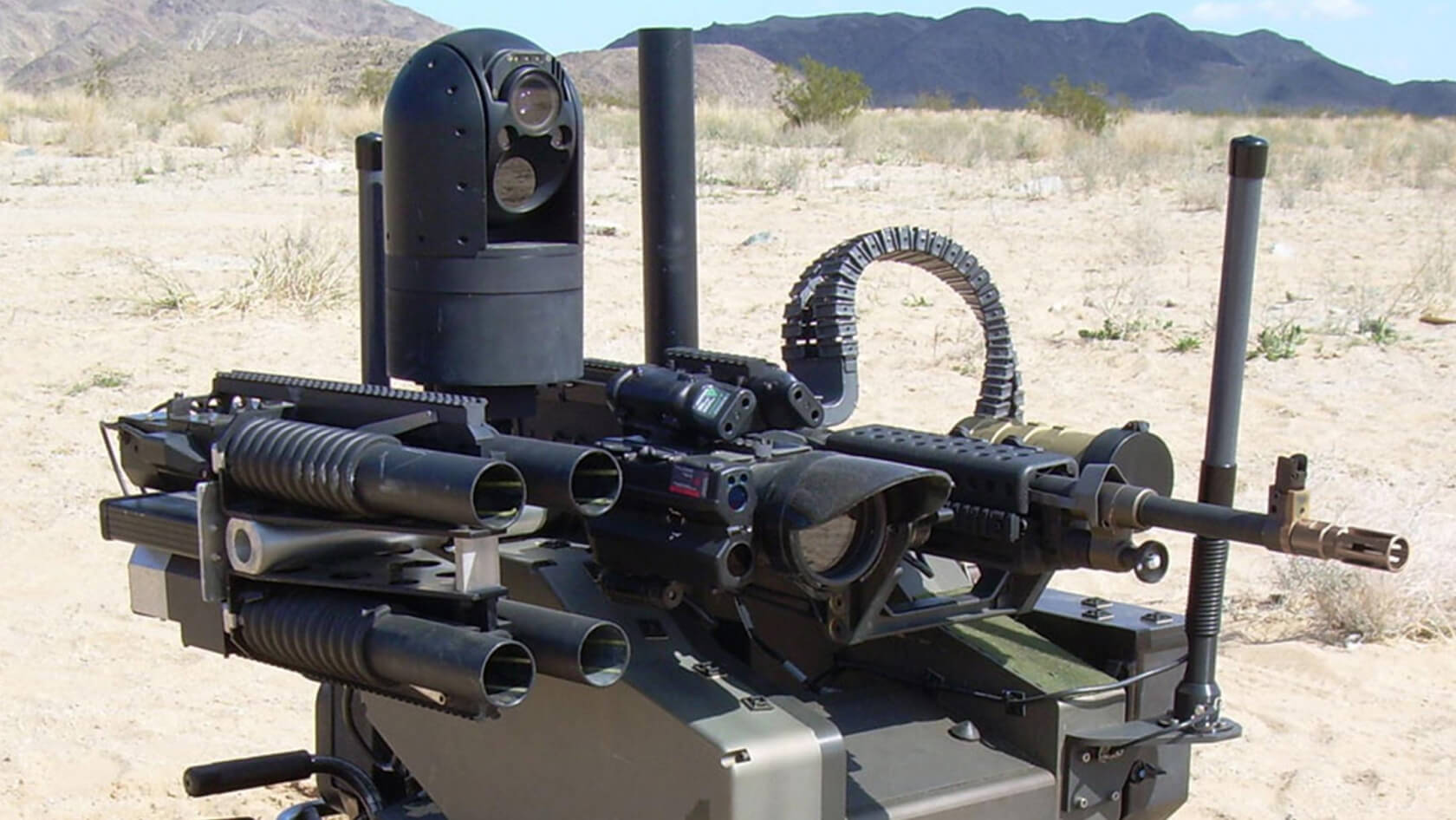

Israel is a global leader when it comes to autonomous weapons and systems. The AI-powered gun turrets on its borders can track targets with enhanced accuracy when firing the likes of tear gas and sponge-tipped bullets. The country has also used autonomous suicide drones, or loitering munitions, that "loiter" in the air before striking a target that meets previously identified criteria.

Proponents of AI being used in these systems claim that they could reduce civilian casualties. Those arguing against their use say a mistake by a machine could wipe out innocents, leaving nobody culpable.

This year has seen several advancements in autonomous weapons. In February, a new Lockheed Martin training jet was flown by an artificial intelligence for 17 hours, marking the first time that AI has been engaged in this way on a tactical aircraft. That same month saw the US Navy take delivery of a ship that can operate autonomously for up to 30 days.

These advancements, and a warning from former Google boss Eric Schmidt that AI could have a similar effect on wars as the introduction of nuclear weapons, led to more than 60 nations agreeing to address concerns over AI use in warfare at the first global Summit on Responsible Artificial Intelligence in the Military Domain (REAIM). The only attendee not to sign this call to action was Israel.