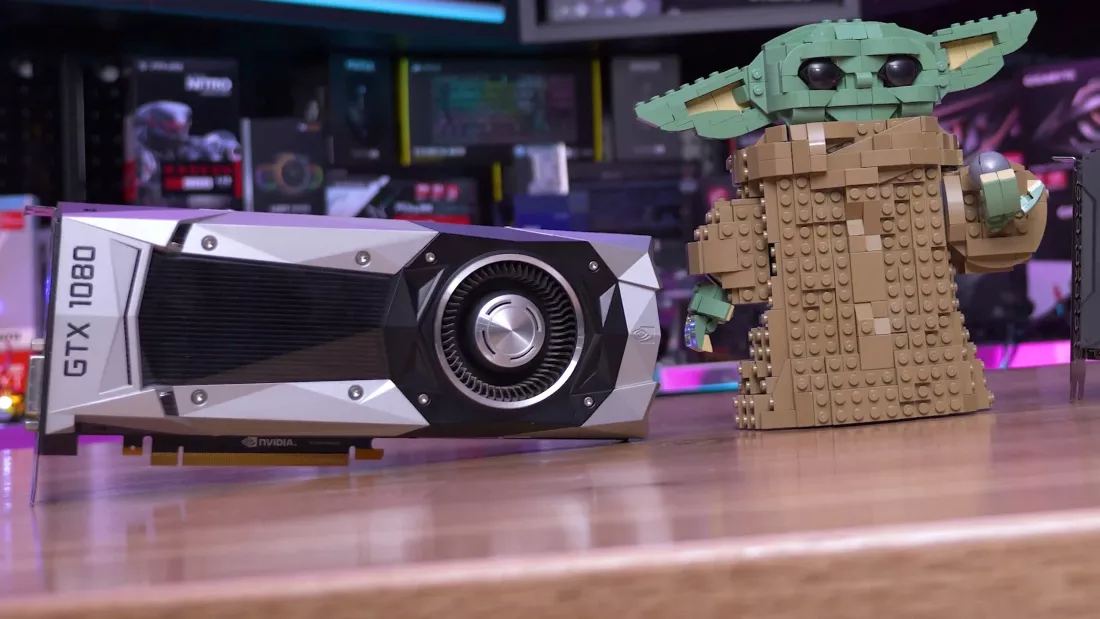

Can today's value king, the Radeon RX 6600, hold a candle to 2016's flagship GeForce GPU, the GTX 1080? Let's find out how these two GPUs match up by testing them in 51 games and get our answer.

2016 Flagship GPU vs 2022 Budget GPU: GeForce GTX 1080 vs. Radeon RX 6600

- Thread starter Steve

WhoCaresAnymore

Posts: 775 +1,224

To me it really goes to show that we don't need to "upgrade" so often. For me at least, a locked refresh rate of 60 Hz has always proven perfect. No tearing. So anything over 60 fps isn't a big deal to me.

For instance, my 2080 Super. There really shouldn't be a need to upgrade this until the RTX 5 series comes out and titles no longer utilize 1080p. My main monitor is an odd one because it's also for work/spreadsheets/word, etc. I'm not thrilled with it, but I wanted big text for my old *** eyes. It's a Sceptre 38 inch curved 2560x1440. Not thrilled with the clarity, but it's good enough. I gave my daughter my Dell 34" curved gaming monitor. Man that thing was sharp as a tack, but not tall enough. I don't like the long monitors with no height. Not very work friendly.

passwordistaco

Posts: 572 +1,240

THANK YOU! A couple of months ago I was making this decision, except it was 1080 vs 6600XT. The only comparison info was websites that just make a table comparing the chip/mem specs with some dubious benchmarks. They were neck & neck by that measure.

Looking at this article, it's obvious the 6600XT is the faster card by quite a margin.

letsgoiowa

Posts: 197 +353

I'd be really interested to see how Vega aged compared to the 1080 here. Remember people bought Vega because of the "fine wine" aspect where AMD cards typically kept receiving important driver performance upgrades over long periods of time?

To me it looks like the 1080 *should* be the faster card here; it's just artificially limited by Nvidia dropping support.

WhiteLeaff

Posts: 470 +854

I have a 6600XT and a 1080, and the 6600XT uses less power while putting out higher FPS, so it's notably better in that regard; the 6600 should be similar. And all current gen GPUs that use direct PSU power are overclocked way out of their efficiency zone anyway, so you can save more power with both of them. I regularly ran my 1080 at 135 W max while maintaining an "overclock" of 1911-1923 MHz (@0.9 V). I regularly run the 6600XT at 110 W max while underclocking it a bit to 2500 MHz (@~1.05 V IIRC).

GEE THANKS STEVE, just what I wanted to see today, another reminder that my GTX 1080 is getting loooong in the tooth ha ha ha.

In response to "What would be the difference in power consumption?": reported TDPs of the cards are about 170 W for the 1080 and about 140 W for the 6600. Granted, that doesn't translate directly into real-world power draw, but the fact that one card is designed for a far lower power envelope is a fairly decent indication that it is not only significantly faster but also uses less power.
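To put the power numbers quoted in the posts above side by side, here is a minimal Python sketch. It only uses the figures posters gave in this thread (135 W and 110 W user-set power caps, and the "about" 170 W and 140 W TDPs), which are forum-reported values rather than measurements. The function and variable names are made up for illustration, and `fps_per_watt` is included only as a helper you could feed with your own benchmark results.

```python
def power_saving_pct(old_watts: float, new_watts: float) -> float:
    """Return the percentage reduction in power going from old_watts to new_watts."""
    if old_watts <= 0:
        raise ValueError("old_watts must be positive")
    return (old_watts - new_watts) / old_watts * 100.0


def fps_per_watt(avg_fps: float, watts: float) -> float:
    """Return frames per second delivered per watt (higher is more efficient)."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return avg_fps / watts


# Figures as quoted by posters in this thread (assumptions, not measurements).
tuned_caps = {"GTX 1080": 135.0, "RX 6600 XT": 110.0}  # user-set power limits
quoted_tdps = {"GTX 1080": 170.0, "RX 6600": 140.0}    # "about" values from the post

tuned_saving = power_saving_pct(tuned_caps["GTX 1080"], tuned_caps["RX 6600 XT"])
tdp_saving = power_saving_pct(quoted_tdps["GTX 1080"], quoted_tdps["RX 6600"])

print(f"Tuned caps:  {tuned_saving:.1f}% less power")
print(f"Quoted TDPs: {tdp_saving:.1f}% less power")
```

By either pair of figures the newer card is budgeted a little under one fifth less power. Combining that with average frame rates from your own testing via `fps_per_watt` would give an actual efficiency comparison.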

AIC1Drew

Posts: 80 +66

"The RX 6600 is a strong 1080p and even decent 1440p card. If I was building a budget build I would probably go with the RX 6600 XT, but both are good choices. Unfortunately, Nvidia doesn't have a great budget option."

The RTX 2060 is currently on sale for $229 (single-fan model, but still).

brucek

Posts: 1,727 +2,694

I love the format of the two summary charts at the end, that's a lot of high-density information presented in an easy to process style. I'm looking forward to seeing those for next generation's head-to-head purchase decision.

Prrredictable

Posts: 97 +54

1080p will certainly be around in two more RTX card series. The most popular card on the market remains the 1060, which was released six years ago.

BadThad

Posts: 1,362 +1,637

Great article! It's always interesting to see these old vs new comparisons. One recommendation, cut back on the number of games tested. You could have gotten to the point with just a few games. As it stands, that's a HELLUV a lot of work!

terzaerian

Posts: 1,517 +2,260

My advice, scour eBay for old flat-screen medical monitors. The medical field was able to force manufacturers to keep cranking out 4:3 aspect ratio in color for a while longer than we saw them in the consoomer market, which gives you more of that vertical real estate. 2048 x 1536 is the highest resolution I've commonly seen at that ratio. IBM and a couple others did make higher resolutions but I've never seen them for sale anywhere. As an added bonus the color fidelity tends to also be top-tier. Granted, they won't work well for twitchy shooters and the like but I didn't play that junk to begin with. In terms of brands I can't say enough good things about Eizo - I bought a used Eizo, had some issues, and called their US tech support and they were happy to help me out. Even told me that the monitor in question was still under warranty and, despite being bought second-hand, could still be replaced until the warranty ran out. Thankfully that wasn't necessary but I was amazed at that.

Man am I glad I switched to AMD - functionality not locked out at the software level and the driver support goes for longer? Cracking!

Alfatawi Mendel

Posts: 299 +497

Interesting review, Steve. I'm not one to indulge in the GPU 2nd-hand market... But given the similar current price points of the two cards, I can see your point. Also, it would've been nice to include a short review of the FSR performance between the two cards. A lot of people don't know it can run on older hardware.

Wow; definitely not worth the upgrade then. Just imagine how a 1080Ti does.

The card benchmarked here is actually the RX 6600 (non-XT); it's the weakest of the video-encoder-enabled RDNA2 cards.

passwordistaco

Posts: 572 +1,240

I know. The cheesy "comparison" sites had the 1080 and 6600XT even. If the 6600 is faster than the 1080, then the 6600XT is also, and to a greater degree.

Colonel Blimp

Posts: 90 +77

Agreed. My Vega56 does everything I require of it at 1440p.

Just curious, why didn't you get a sharper 4K monitor at that size? You can use Windows DPI scaling to get bigger text and UI. Your eyes don't seem to be that bad if you can notice the difference.

What if the used 1080 and the RX6600 were the same price? What if a used RX6600 is cheaper than the 1080? Because I recently got a used RX6600 for $170. Like new, never mined. There is no way in heck in 2022 I would pay more for a 1080 than I just did for a much newer card. I used to own a GTX970, the cheapo option back when the 10-series of cards came out. It was a decent card. For six years ago.

My only issue at all with the RX6600 is the DirectX11 support. The game I play is DX11 and apparently Radeon cards are not very good with that. This is the first Radeon I have ever owned or even used, and I just didn't know that beforehand.

kiwigraeme

Posts: 1,999 +1,410

Both strategies are actually winners

I believe the 1080 set a new high price on release, yet those who bought one have had 6 years of gaming out of it, and still can game on it. They can offset the cost by selling it for $200 (let's ignore Bitcoin- and Covid-inflated prices).

Someone buying a 6600XT new gets a good card with lower risk when the warranty ends, and they can save even more money if they play older GOTY editions bought on sale: cheaper card, cheaper games (this may not apply to the latest AAA multiplayer game of the day with full servers).

Also, a 6600XT owner gets the benefit of a cheaper monitor, i.e. really good 1440p monitors are cheap.

The 6600XT may be our only choice with an after-school job, and maybe when we are 80 we will go back there as our gaming type changes.

A new driver update has increased DX11 performance for RDNA2, so it might be worth checking your drivers.

Vega 56 overtook the GTX 1070 after about 2 years of driver optimisations, so I'd expect the Vega 64 to be on par or better than the GTX 1080, which the 1660 Super is on par with.
