As we now know, nobody cared back then about power consumption, did they? And it seems that efficiency is important to you, so now, or in the future, RDNA should be right up your alley.

But Maxxi, you can't protect Nvidia anymore; Jensen can't control all the channels. The truth is out. Major discounts are incoming for RTX cards soon.

AMD has a class act going and it seems the entire gaming industry is on-board.

AMD GCN has been in consoles for years, yet NVIDIA IS FASTER. So quit your tales, no one is interested in them. All of the RX series, including VEGA, use the GCN architecture.

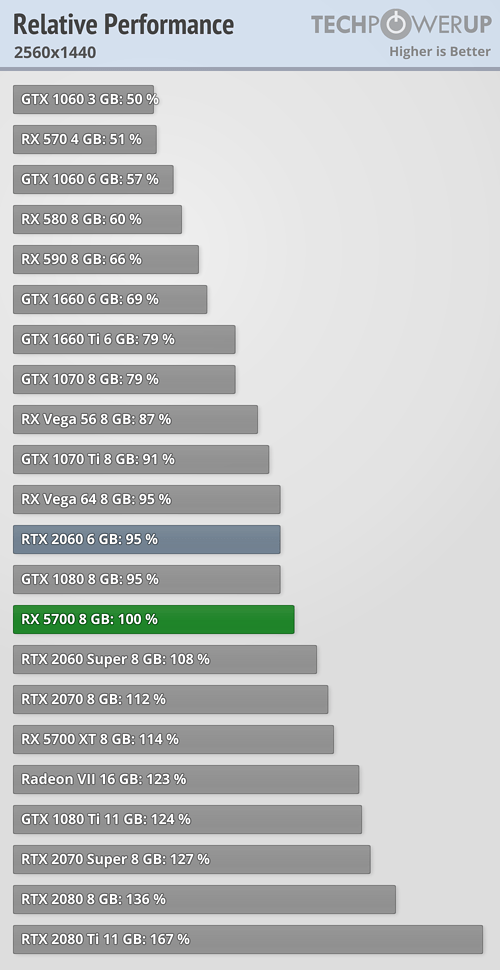

The Turing chip is on an older node, 2x larger, with half of the die dedicated to raytracing, yet it consumes as much power as the underperforming 7nm RDNA and offers more performance.

So yeah, when it comes to efficiency, NVIDIA is more efficient.

I am sorry that AMD got you again; I see their marketing worked. A few years ago, when the current consoles were announced with AMD CPUs and GCN GPUs, people were saying: "Intel is done, Nvidia is done. All the games are gonna be optimized for AMD and will perform better on it."

We know how it turned out... but but but but.

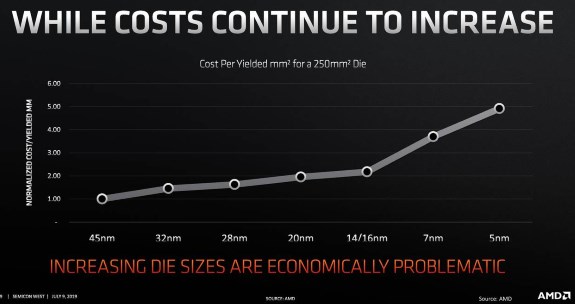

Now the circlejerk has started again. I am really surprised how often the same trick keeps working, again and again. Now go read the AMD tweets about how RDNA is awesome and how it is cheaper to manufacture, whilst the opposite is true, because 7nm is significantly more expensive, with lower yields than 12nm. Go celebrate with them that after a year they released something which can barely match mainstream Nvidia GPUs, whilst being on a better node and having no raytracing support. Oh god, RDNA is truly awesome and superior.

AMD marketing: So after a year we have finally released 2 GPUs on the 7nm node. Nvidia is using the 12nm node. Despite using the clearly superior node and not supporting raytracing, thus not wasting additional die space which is turned off most of the time, OUR CARDS CAN ONLY MATCH MAINSTREAM NVIDIA GPUS. *amd panics* What are we gonna do? What are we gonna do? You know what, we cut the prices and pretend it was intended, we hype our RDNA like never before, and we tweet about how we played Nvidia and how their chips are bigger and more expensive to make. Our fan base is dumb enough to believe anything we say, and anyone with decent knowledge will not buy our GPUs anyway, so no loss! #RDNA4LIFE #RDNA4EVER