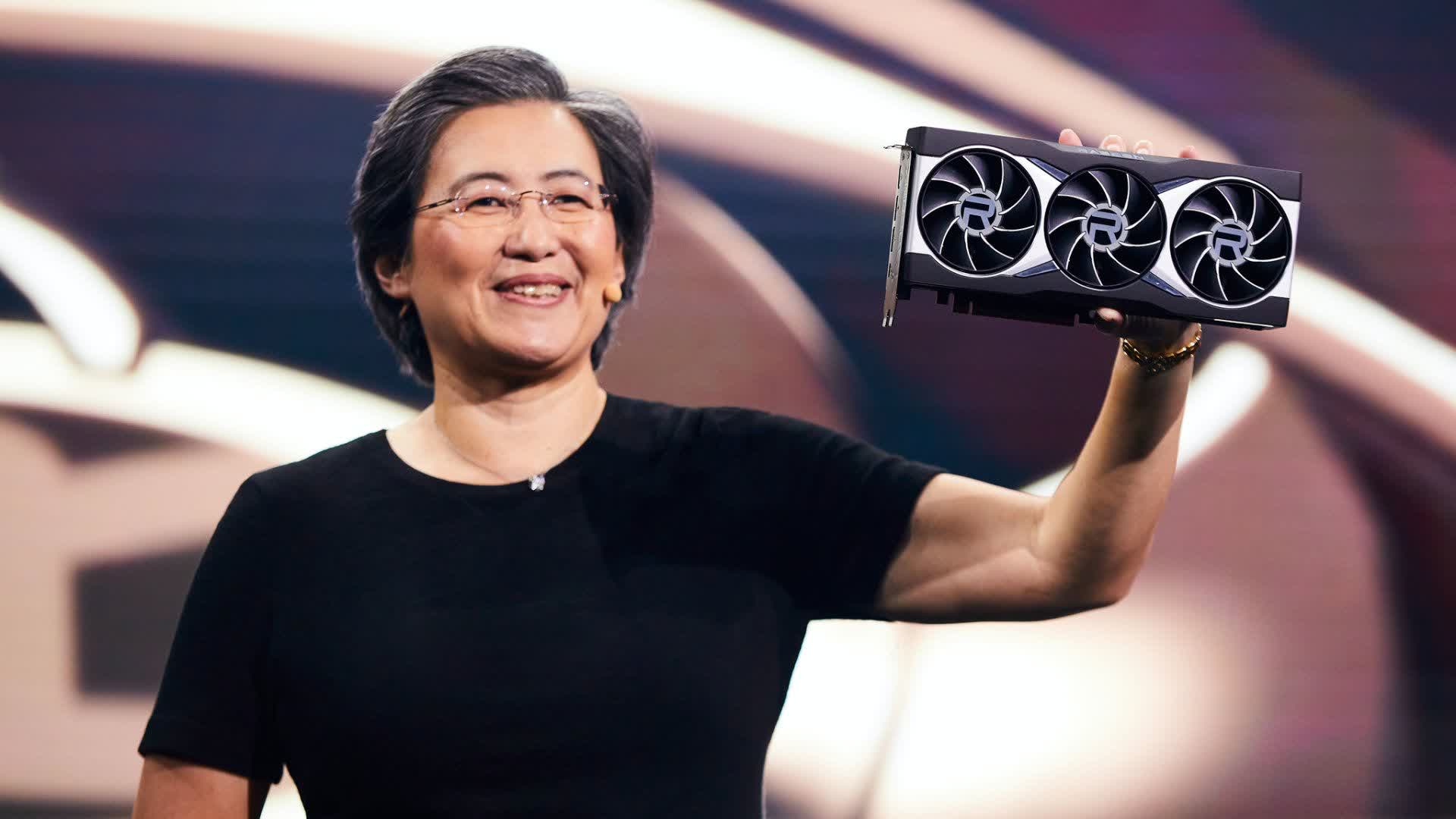

In brief: AMD's refreshed RX 6x50 XT cards won't blow your socks off, but they could turn out to be decent upgrades over what are now aging RDNA 2 designs. Early benchmarks suggest they'll hold their own against their Nvidia counterparts, but pricing is what will ultimately determine their success.

We're less than a week away from the expected release of AMD's refreshed RDNA 2 graphics cards. As we inch closer to the actual launch, more details are popping up online, particularly in terms of power consumption.

Several variants of the upcoming Radeon RX 6950 XT have been confirmed to have a total board power (TBP) of 335 watts, a 35-watt increase over the RX 6900 XT. Previous rumors pointed to a TBP of 350 watts, but AMD appears to have opted for a more modest bump in power budget than the 100-watt increase Nvidia gave the GeForce RTX 3090 Ti.

The RX 6950 XT's TBP is also within five watts of the RX 6900 XT Liquid Edition's power rating. The game clock has been raised to 2,100 MHz, and the GPU will boost up to 2,310 MHz when the power budget and thermals allow. As for memory, we already know the new card will ship with 18 Gbps modules, which translates into an effective memory bandwidth of 1,728 gigabytes per second.
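For context, the 1,728 GB/s number is presumably an "effective" figure that factors in the Infinity Cache; the raw GDDR6 bandwidth follows directly from the per-pin data rate and the memory bus width. The short Python sketch below works it out, assuming the refreshed card keeps the 256-bit memory bus of the existing Navi 21 parts. The function name is purely illustrative.

```python
def raw_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak DRAM bandwidth in GB/s: per-pin data rate times bus width, divided by 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# RX 6950 XT: 18 Gbps GDDR6, assuming the same 256-bit bus as the RX 6900 XT
print(raw_bandwidth_gb_s(18, 256))  # 576.0
# RX 6900 XT: 16 Gbps GDDR6 on a 256-bit bus
print(raw_bandwidth_gb_s(16, 256))  # 512.0
```

On those assumptions the raw figure rises from 512 GB/s to 576 GB/s, so most of the quoted 1,728 GB/s would come from the on-die cache rather than the GDDR6 itself.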

Interestingly, early benchmarks suggest AMD's updated RDNA 2 flagship will be faster not only than the RTX 3090, but also than the RTX 3090 Ti. The results look even more impressive when you consider that the RTX 3090 Ti sports a massive overclock and a much larger power budget, not to mention 21 Gbps memory and more CUDA cores than the RTX 3090.

The tests in question were performed using the 3DMark Time Spy benchmark on a system equipped with a Ryzen 7 5800X3D CPU and DDR4-3600 memory. Using pre-release drivers and the Time Spy Performance preset, the RX 6950 XT was 6.5 percent faster than the RTX 3090 Ti at 1440p, with a graphics score of 22,209 points.
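Only the RX 6950 XT's graphics score is given, but the reported lead can be turned back into an approximate RTX 3090 Ti score. A minimal Python sketch with hypothetical helper names; because the 6.5 percent figure is rounded, the back-calculated score is an estimate, not a published result.

```python
def percent_faster(score_a: float, score_b: float) -> float:
    """Percentage by which score_a exceeds score_b (higher is better, as in 3DMark)."""
    return (score_a / score_b - 1.0) * 100.0


def implied_baseline(score_a: float, percent: float) -> float:
    """Score of the slower card, given the faster card's score and its lead in percent."""
    return score_a / (1.0 + percent / 100.0)


rx_6950_xt = 22_209  # Time Spy graphics score reported for the RX 6950 XT
rtx_3090_ti_est = implied_baseline(rx_6950_xt, 6.5)  # estimate only: the 6.5% lead is rounded
print(round(rtx_3090_ti_est))  # 20854
print(round(percent_faster(rx_6950_xt, rtx_3090_ti_est), 1))  # 6.5
```

The same `percent_faster` helper applies to any pair of higher-is-better scores, such as the RX 6650 XT's reported Time Spy lead over the RX 6600 XT.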

As you'd expect, AMD's new flagship isn't able to challenge Nvidia's best Ampere card when it comes to ray tracing. The RX 6950 XT can get close to an RTX 3080 in the 3DMark Port Royal test, but the RTX 3090 Ti is around 50 percent faster.

The RX 6750 XT will come with a 250-watt TBP, matching the RX 6800, and leaked benchmarks suggest it will rival Nvidia's RTX 3070 in rasterization performance. Its ray tracing performance trails that of the RTX 3060 Ti, but that's not a huge surprise. Spec-wise, the new card will supposedly sport a game clock of 2,495 MHz and a boost clock of 2,600 MHz, along with 18 Gbps memory.

AMD has given the RX 6650 XT a TBP 20 watts higher than the RX 6600 XT's, which allowed it to raise the game and boost clocks to 2,410 MHz and 2,635 MHz, respectively. That makes it 11 percent faster in the 3DMark Time Spy benchmark, but it will be interesting to see how well that translates into actual gaming performance.

Pricing is still unknown, but we're hoping to see Team Red undercut similar offerings from Nvidia, since these are aging RDNA 2 designs that don't shine in the ray tracing department. Curiously, now that prices for the RX 6000 series are reasonably close to MSRP, these cards are starting to show up more frequently in the Steam hardware survey.

If anything, this suggests AMD could make some gains in the mainstream and low-end segments if it can put the budget brand hat back on. The refreshed RDNA 2 cards are expected to land on May 10, so it won't be long before independent reviews bring gaming performance and pricing into focus.

https://www.techspot.com/news/94460-amd-radeon-rx-6x50-xt-lineup-specifications-confirmed.html