> No it doesn't, it allows more realistic lighting/reflections; the only reason you'd turn it off is if you don't have an RTX card.

Or when the things it adds are too hard to notice. Reflections are basically the only thing that may be worth a bit of an fps drop in some games. I don't think shadows and global illumination are good enough to cut the fps that much.

AMD's upcoming RX 7900 XT rumored to have 24 GB of VRAM, 384-bit memory bus

- Thread starter Tudor Cibean

To me, RT is nice to have if your hardware can keep up. In most RT games, with RT enabled, you lose a big chunk of performance, which is quite silly. The whole reason why people buy a high-end GPU is that it allows them to play games at high FPS and image quality. I actually doubt the RT performance penalty will go away even with next-gen GPUs. They may spam more RT cores on it, but that just takes up die space.

> No it doesn't, it allows more realistic lighting/reflections; the only reason you'd turn it off is if you don't have an RTX card.

The other reason you turn it off is when it takes a heavy toll on performance. Do note that not all RTX cards can run RT at playable frame rates. The moment you get to a card like the RTX 3070, which is supposed to target 1440p gaming, enabling RT generally means you must enable DLSS or drop to 1080p. Enabling RT and DLSS also takes up some VRAM, and the card's 8GB is already starting to show its limits. When you go lower, to say an RTX 3060, I don't even think these are RT-worthy cards despite the marketing and the supposed hardware support. One is better off leaving RT disabled so that they can play games at higher frame rates.

Vanderlinde

Posts: 433 +273

Both camps are aiming for the highest FPS in 4K gaming. That's where this massive increase in memory, GPU computational power and such comes from.

Both camps have advantages and disadvantages. Nvidia goes for monolithic big chips. Lower overall latency, higher (heat) power consumption, higher performance, more difficult to cool (heat density).

AMD goes for multiple GPUs hardwired together as a whole, which increases production yield and lowers power consumption (you can cherry-pick the best cores with the best attributes), with IF (Infinity Fabric) to compensate for any latency losses due to its design. It's not like CrossFire, because CrossFire still goes over the PCI-E bus.

It will be very interesting times.

> So what's your point? That AMD is late? Sure, you won... the internet debate. Not.
>
> My point still stands: 1st gen vs 1st gen is the same, horrible RT perf for both.
>
> Don't you even dare claim you would have expected AMD to leapfrog Nvidia in one generation and maybe, what, beat them outright in raster and RT with RDNA2, when they jumped to 3090 performance from the 5700 XT? Really? Pffft.
>
> You should take those horse blinders off... or you might fall off your high horse too, like the other guy.

I expect AMD to be on par with Nvidia; they used to be.

> The other reason you turn it off is when it takes a heavy toll on performance. Do note that not all RTX cards can run RT at playable frame rates. The moment you get to a card like the RTX 3070, which is supposed to target 1440p gaming, enabling RT generally means you must enable DLSS or drop to 1080p. Enabling RT and DLSS also takes up some VRAM, and the card's 8GB is already starting to show its limits. When you go lower, to say an RTX 3060, I don't even think these are RT-worthy cards despite the marketing and the supposed hardware support. One is better off leaving RT disabled so that they can play games at higher frame rates.

3070 = 1080p

3080 = 1440p

3090 = 4K

> 3070 = 1080p
>
> 3080 = 1440p
>
> 3090 = 4K

You are basically lowballing the target resolution to substantiate your claims. Even on Nvidia's site, the graph they give shows 1440p.

https://www.nvidia.com/en-sg/geforce/graphics-cards/30-series/rtx-3070-3070ti/

The same graph for RTX 3080 shows 4K.

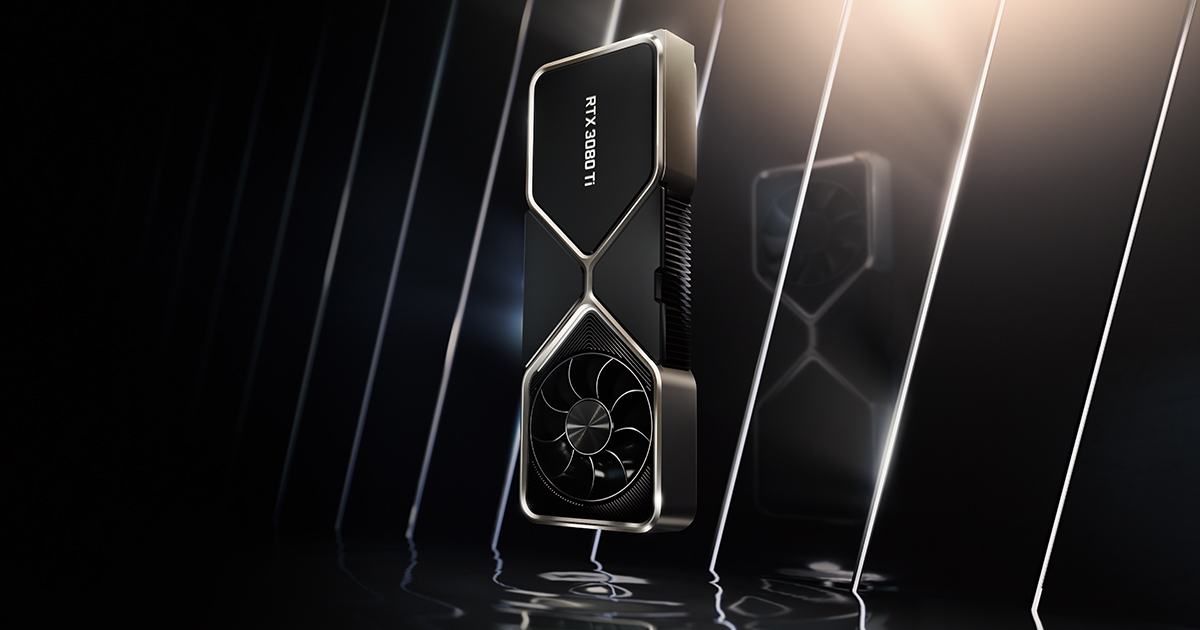

> 3070 = 1080p
>
> 3080 = 1440p
>
> 3090 = 4K

How can the 3070 target 1080p? It makes zero sense. It literally replaces last gen's 4K card, the 2080 Ti.

> How can the 3070 target 1080p? It makes zero sense. It literally replaces last gen's 4K card, the 2080 Ti.

Depends if you're factoring in RTX or not.

> Depends if you're factoring in RTX or not.

No, it doesn't depend. What kind of logic is that? The 3060 is for 360p by that logic.

> No, it doesn't depend. What kind of logic is that? The 3060 is for 360p by that logic.

360p if you want max settings

> I expect AMD to be on par with Nvidia; they used to be.

They will be with RDNA3, actually maybe even a little ahead this time around if some leaks are true.

Also AMD has a fraction of nvidia's budget. Do you think that matters, when you make your wishes of AMD = nvidia?

If the situation would be reversed, AMD ahead and nvidia behind with a fraction of AMD's budget, would you still ask the same from nvidia?

I'm trying to determine how big of an nvidia fanboy you are and if you have any sane principles of morality and fairness.

Alfatawi Mendel

Posts: 299 +497

I wonder if Leatherman will be promising 16k gaming with the new Nvidia cards, as he stood up on stage and promised 8k gaming with the RTX 3xxx series.

wiyosaya

Posts: 9,763 +9,646

> I expect AMD to be on par with Nvidia; they used to be.

IMO, unless there is some industrial espionage going on, no company is able to match its competitors all the time.

wiyosaya

Posts: 9,763 +9,646

I guess we will have to wait for benchmarks to see if there is any truth behind these AMD claims.

> IMO, unless there is some industrial espionage going on, no company is able to match its competitors all the time.

AMD has been consistently behind for over a decade.

Squid Surprise

Posts: 5,597 +5,227

> They will be with RDNA3, actually maybe even a little ahead this time around if some leaks are true.

Accusing someone of being an Nvidia fanboy after posting that kind of shows us what YOU are…

Any leaks about RDNA3 should be taken with a HUGE grain of salt. AMD has been behind Nvidia for about a decade - until they PROVE otherwise, saying anything else is simply being an AMD fanboy.

m3tavision

Posts: 1,438 +1,222

> Who is "we"? Are you talking about yourself in plural?
>
> You make too many assumptions, you "big person" that you think you are, about "little people" - I suppose that includes me, rofl.
>
> Yes, I actually play a lot of games (you want a list for 2021-2022? last 10 or 20 years maybe?) and I could not give a flying **** about $1000 GPUs or more, I'll never pay more than $600-700.
>
> That being said, my point still stands: 1st gen Nvidia RT, as in Turing, was and still is as abysmal in RT as 1st gen RT from AMD, as in RDNA2. Those are facts, not feelings.
>
> Secondly, vision is more important than sound, in every metric, in every tier and class and even social spectrum. Again, facts, not feelings. If you're a competitive player or just an audio snob, don't care which, you are still the minority with your wishful dreaming of RT sound. Again, facts, not feelings.
>
> Careful not to fall off your high horse, "big person" - you might wake up to reality. In the meantime go cry to Nvidia to make RT sound for you and your minority, see how that goes...

I am referring to the thousands of streamers and competitive players...

Nobody cares how many games you play... unless you are playing them competitively against others, where games are recorded and where environmental sound placement is critical.

You ever hear of Dr. Disrespect? He can have a stadium full of people following him every stream and is designing his own game where sound is important!

Casual players need not apply. They like shiny things and are afraid to compete.

Secondly, you entirely made my point about RT... because even you will not buy an expensive video card to get RT frames playable... You just admitted it.

Again, people buy expensive GPUs to compete, to have an advantage. NOBODY cares about 60fps ray tracing or 120fps ray tracing; they are buying $1k+ 144Hz monitors and $1k GPUs because they want moAr frames, not fewer.

And to your ultra casual point, show me one Streamer who uses Ray Tracing.... even the ones who got FREE rtx3090 don't use ray tracing.

Again, the people buying high-end cards are not doing it for the lighting. They turn all that garbage off to get the highest frame rates.

What is more important, perfect reflection or to be able to tell exactly where that bullet came from..?

You're a casual player and have no idea how many gaming clans there are or how much competitive play goes on daily... You should follow Twitch more... Then you won't seem so out of touch with reality...

My EVGA RTX 2080 will never run ray tracing at gaming speeds, so why would I buy a newer GPU at $1,200+ to get fewer frames than I already do, for a superficial gimmick?

Kinda weird that 15 years ago people would spend big money on a 3d sound card... Nobody is buying a reflections generator..
