The big picture: While everyone who closely tracks the tech industry understands that there’s an important link between the latest semiconductor chips and software application performance, a new, deeper level of connection between the two seems to be developing. From cloud-based chip emulation kits to AI framework enhancements to new tools for creating applications that function across network connections, we appear to be entering a new era of silicon-focused software optimizations created -- surprise, surprise -- by chip makers themselves.

The first Intel On event, a revival of the company’s former IDF (Intel Developer Forum), highlighted the importance of software optimization through a series of announcements. Collectively, these announcements -- including the launch of an updated Developer Zone website -- re-emphasized Intel’s desire to put developers of all types and skill levels at the forefront of its efforts.

Intel’s focus on its oneAPI platform highlights the increasingly essential role that software tools play in getting the most from today’s silicon advancements. First introduced last year, oneAPI is designed to dramatically simplify writing applications that can leverage x86 CPUs, GPUs, and other types of accelerators through an open, unified programming environment. The forthcoming 2022 version is expected next month with more than 900 new features, including a unified C++/SYCL/Fortran compiler and support for Data Parallel Python.

Given the increasing sophistication of CPUs and GPUs, it’s easy to see why new developer tools are needed, not only to more fully leverage these individual components but also to take advantage of the capabilities that combinations of these chips make possible. Intel has always had a robust set of in-house software developers and has built advanced tools, such as compilers, that play an important role in application and other software development. Historically, however, those tools have served a tiny, elite fraction of the total developer market. To make a bigger impact, the company needs software tools that a much broader segment of the developer population can use. As Intel itself put it, the company wants to “meet developers wherever they are” in terms of skill and experience.

As a result, Intel is expanding the number of oneAPI Centers of Excellence to provide more places where people can learn the skills they need to get the most out of oneAPI. In addition, the company announced that it is building new acceleration engines and new optimized versions of popular AI toolkits, all designed to take full advantage of its forthcoming datacenter-focused Intel Xeon Scalable processor.

The company also talked about cloud-based chip emulation environments that let a wider array of programmers build applications for various types of accelerators without needing physical access to them.

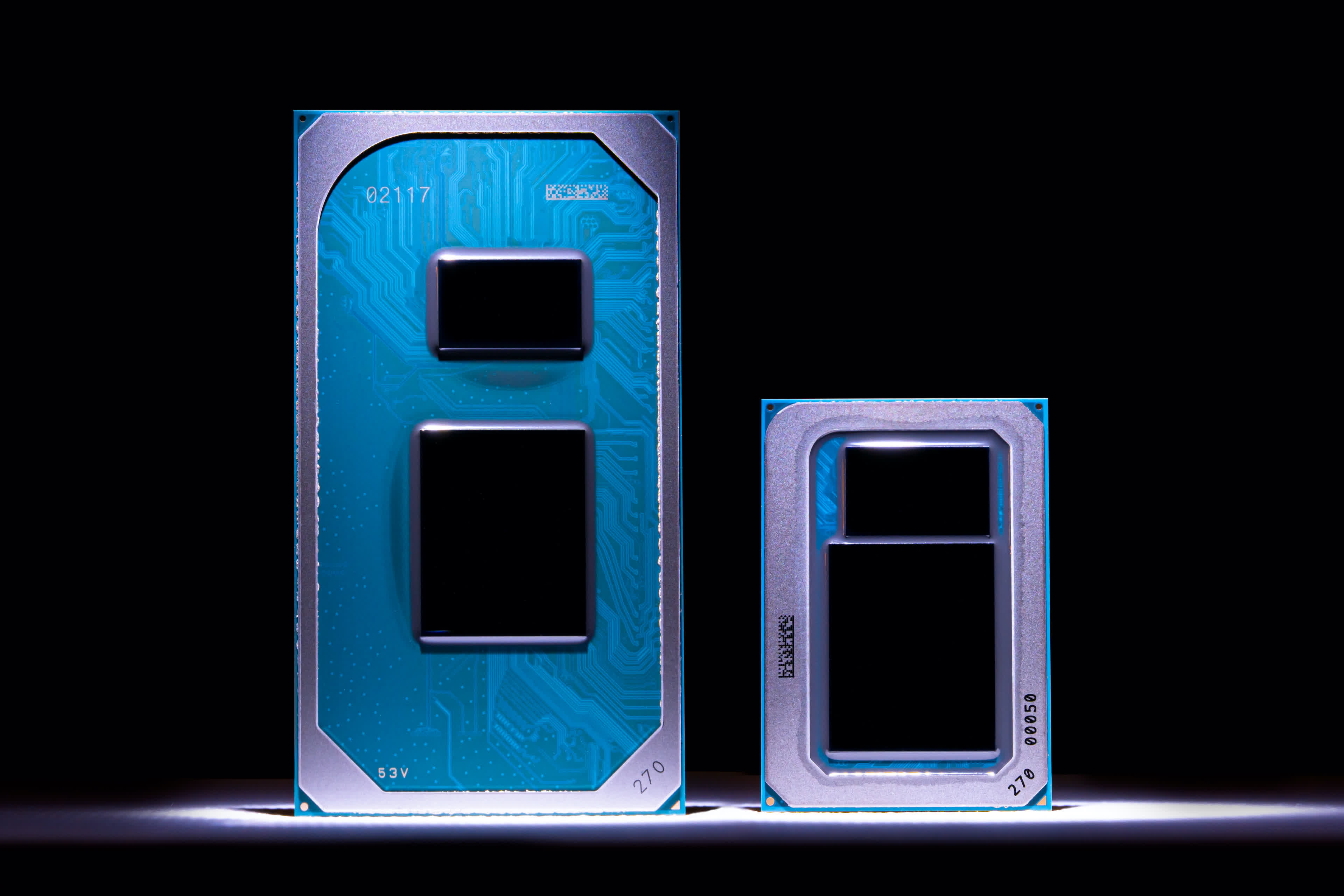

Many of these developments are clearly driven by the increasing complexity of individual chip architectures. While architectural enhancements have improved overall performance, even the most experienced programmers are unlikely to keep track of all the new functionality those enhancements enable.

Toss in the possibility of creating applications that leverage multiple chip types and, well, it’s easy to imagine how things could get overwhelming pretty quickly. That complexity also explains why few applications take full advantage of today’s latest CPUs, GPUs, and other chips, and why many applications (especially those that aren’t updated regularly) don’t perform as well as they could on some new chips.

Taking a step back, what’s interesting about these Intel efforts is that they parallel similar moves recently announced by Arm and Apple, two other silicon-focused companies (each in its own way). At Arm’s Developer Summit last week, the company described its own efforts to create cloud-based virtual versions of its chip hardware as part of its Total Solutions for IoT initiative and its new Project Centauri. As with Intel, Arm’s goal is to broaden the range of developers who can write applications for its architecture.

In terms of chip-focused software optimizations, early benchmark data suggests that Apple’s latest Arm-based M1 Pro and M1 Max chips deliver extremely impressive results with Apple’s own applications -- which were undoubtedly optimized for these new chips. On other, non-optimized applications and workloads, however, the results appear to be more moderate. This highlights how achieving the best possible performance on a given chip architecture increasingly requires software optimized specifically for it.

Now, some might argue that the hardware-agnostic (or at least hardware-ambivalent) nature of Intel’s oneAPI is at direct odds with the highly tuned, chip-specific software optimizations that some of Intel’s other new efforts incorporate. At a foundational level, however, both approaches reflect the extensive low-level software work that increasingly sophisticated chip architectures (such as Intel’s new 12th-gen Core CPUs) demand in order to deliver their best possible performance.

To put it simply, the pure, across-the-board performance improvements that we’ve enjoyed from hardware-based chip advances are getting harder to achieve. As a result, it’s going to take increasingly sophisticated and well-optimized software to keep computing performance advancing at the pace to which we’ve become accustomed. In that light, Intel’s latest efforts will likely draw more developer attention to its platforms in the short term, but their more meaningful impact will come over time.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and the professional financial community. You can follow him on Twitter @bobodtech.

https://www.techspot.com/news/91959-alder-lake-brings-biglittle-desktop-pcs-intel-highlights.html