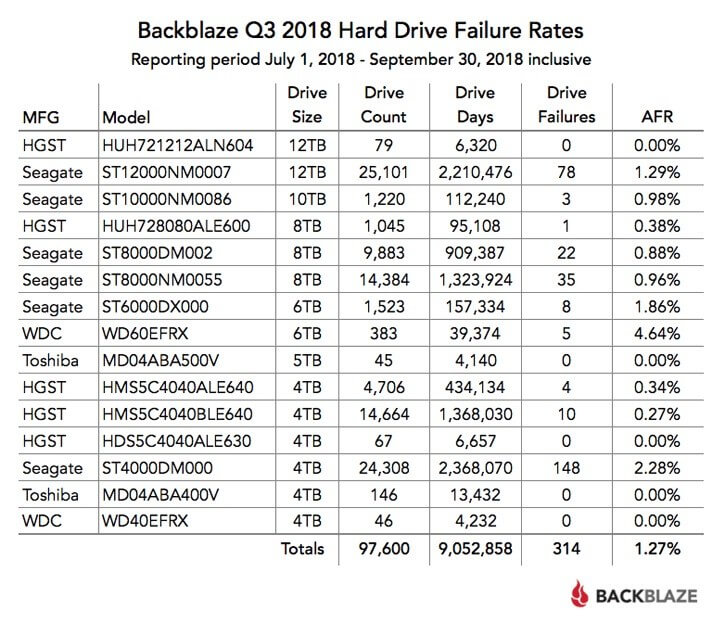

In brief: Cloud storage provider Backblaze has released its latest quarterly hard drive stats. For Q3 2018, Western Digital's 6TB WD60EFRX had the highest annualized failure rate (AFR) at 4.46 percent, though only five of Backblaze's 383 units of that model failed during the quarter. But the main takeaway is that the company's large-capacity drives are more reliable than their smaller cousins.
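Backblaze bases AFR on drive days (the total number of days all drives of a model were in service) rather than a raw drive count: AFR = failures / (drive days / 365) × 100. The minimal Python sketch below applies that formula. The function name and the drive-day figures are hypothetical, since the article does not report drive days; the point is that a handful of failures over a small pool can produce a higher AFR than many failures over a large one.

```python
def annualized_failure_rate(failures: int, drive_days: int) -> float:
    """Return AFR in percent: failures per drive-year of operation, times 100.

    Hypothetical helper; it only encodes AFR = failures / (drive_days / 365) * 100.
    """
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    # Multiply before dividing so integer inputs stay exact for as long as possible.
    return failures * 365 / drive_days * 100


# Hypothetical inputs, not figures from the report:
small_pool = annualized_failure_rate(5, 36_500)      # 5 failures over 100 drive-years
large_pool = annualized_failure_rate(50, 3_650_000)  # 50 failures over 10,000 drive-years
print(f"{small_pool:.2f}%")  # 5.00%
print(f"{large_pool:.2f}%")  # 0.50%
```

Ten times as many failures still yield a tenth of the AFR when the drives have logged a hundred times more service.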

Backblaze, which was the pick of storage providers in our 'Back to school' feature, had 99,636 spinning hard drives in service during Q3, of which 1,866 were boot drives and 97,770 were data drives. During the quarter, the company removed the last of its 3TB Western Digital drives, replacing them with 12TB drives.

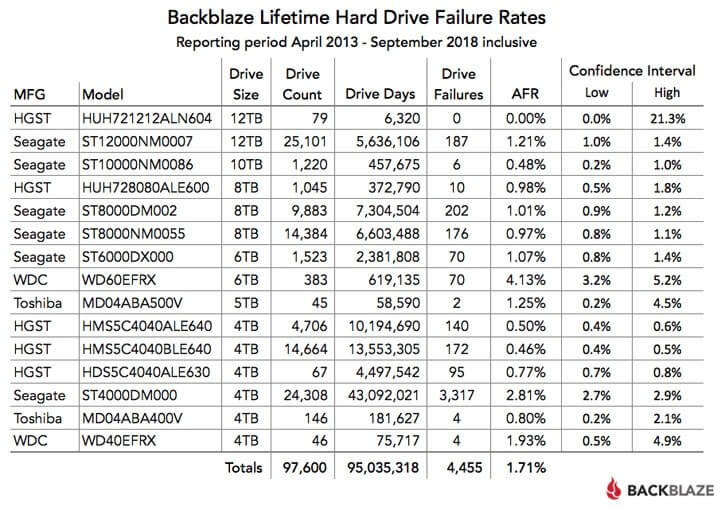

The 79 HGST 12TB drives it added in Q3 have a 0 percent failure rate, though that's not too surprising given how few of them there are and how few drive days they have logged. But 12TB HDDs are reliable in general: Backblaze runs 25,101 Seagate 12TB drives and has recorded only 187 failures among them, an AFR of 1.29 percent.
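Why a 0 percent rate from 79 new drives says little can be made concrete. One standard approach (our illustration, not a method Backblaze describes here) treats failures as a Poisson process: observing zero failures is still consistent, at 95 percent confidence, with up to about three expected failures (the "rule of three"). The sketch below turns that into an upper bound on AFR. The function name is hypothetical, and the 30-days-per-drive figure is an assumption, since the article does not say how long the HGST drives had been running.

```python
import math


def afr_upper_bound_zero_failures(drive_days: float, confidence: float = 0.95) -> float:
    """One-sided upper confidence bound (in percent) on AFR when zero failures were seen.

    Assumes a Poisson failure model: P(0 failures) = exp(-lam), so the largest
    expected failure count still consistent with observing none is -ln(1 - confidence).
    """
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    max_expected_failures = -math.log(1 - confidence)  # about 3.0 at 95% confidence
    drive_years = drive_days / 365
    return max_expected_failures / drive_years * 100


# Assumption: 79 drives that each ran roughly 30 days (not reported in the article).
bound = afr_upper_bound_zero_failures(79 * 30)
print(f"0 observed failures, yet AFR could plausibly be as high as {bound:.1f}%")
```

With so little service time the bound lands somewhere in the mid-40s percent, which is why a 0 percent figure from a small, new pool carries little weight; the bound shrinks as drive days accumulate.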

According to Backblaze, its large-capacity drives (8TB to 12TB) are more reliable than its smaller ones, with annualized failure rates of 1.21 percent or lower.

"The failure rates of all of the larger drives (8TB, 10TB, and 12TB) are very good: 1.21 percent AFR (Annualized Failure Rate) or less. In particular, the Seagate 10TB drives, which have been in operation for over 1 year now, are performing very nicely with a failure rate of 0.48 percent," writes the company.

Backblaze notes that its overall drive failure rate of 1.71 percent is now the lowest it has ever achieved, beating its previous low of 1.82 percent from Q2 2018.

https://www.techspot.com/news/77030-backblaze-q3-report-shows-large-capacity-drives-more.html