Take the computer know-how, the love of games, and the interest in components, and mix them all together. It's a perfect recipe for diving into benchmarking. In this article, we'll explain how you can use games to benchmark your PC and what you can do to analyze the results.

Benchmarking Your PC: A Guide to Best Practices

- Thread starter neeyik

- Start date

Cycloid Torus

Posts: 4,892 +1,711

QuantumPhysics

Posts: 6,306 +7,258

krizby

Posts: 429 +286

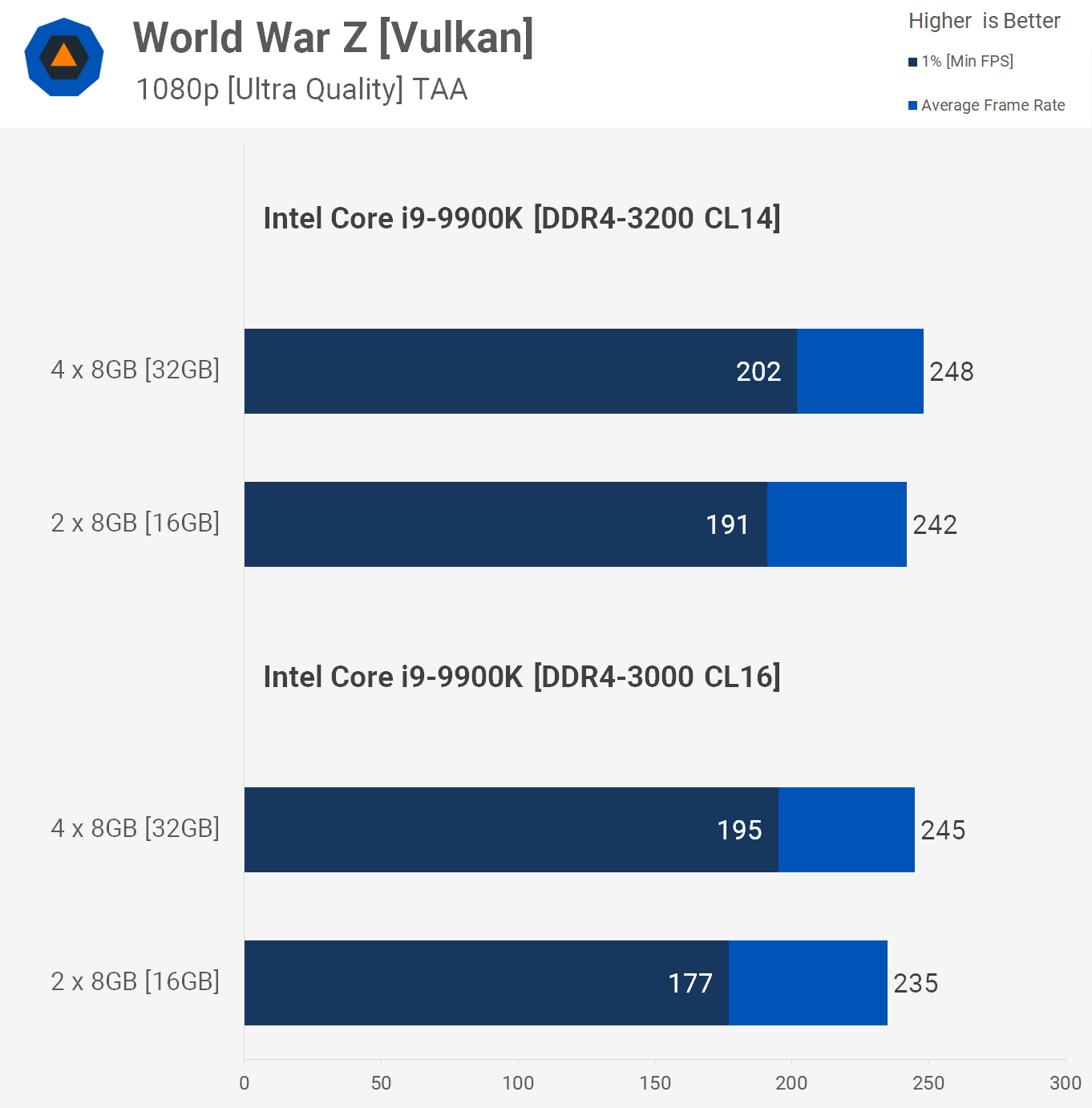

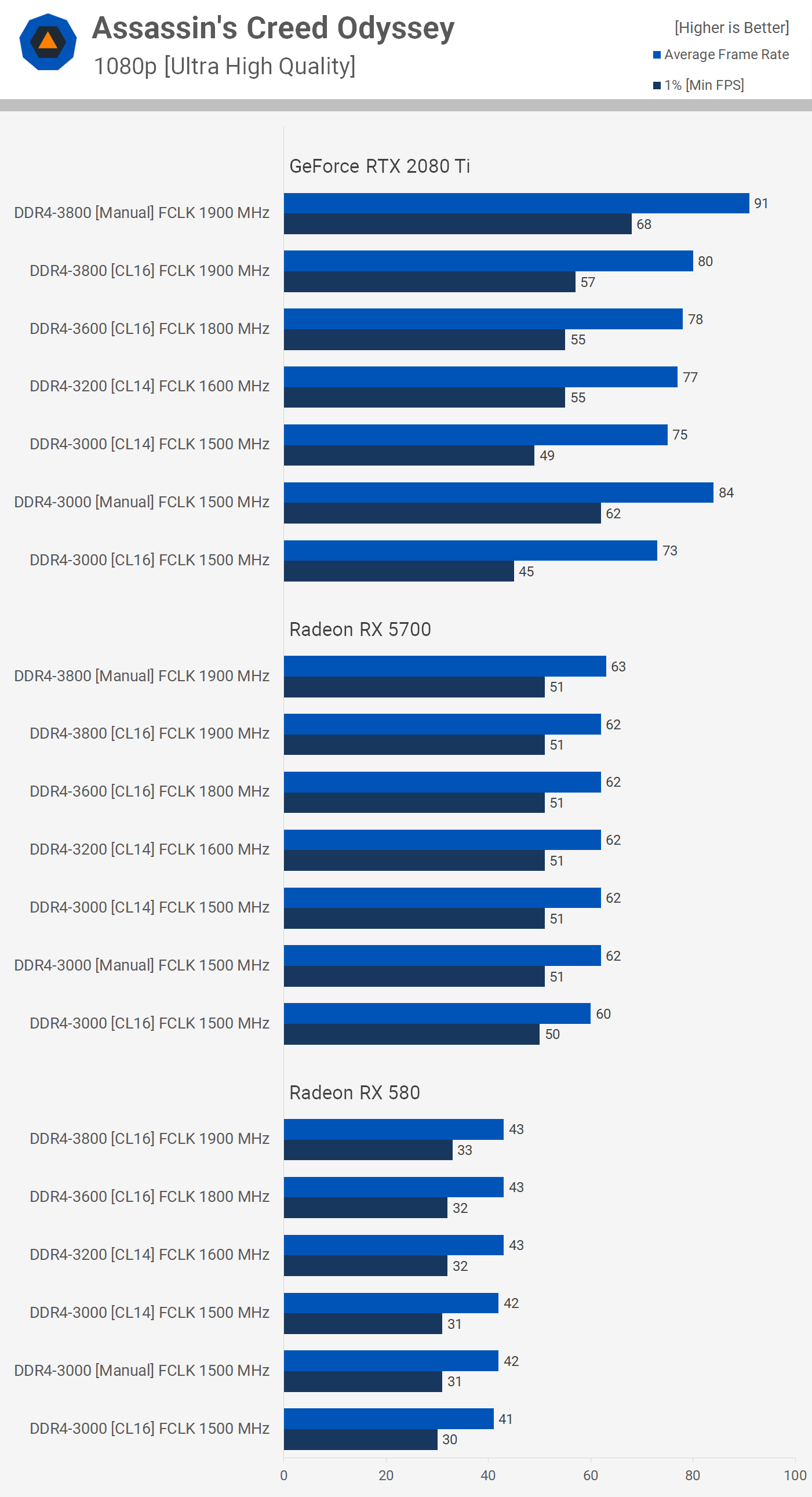

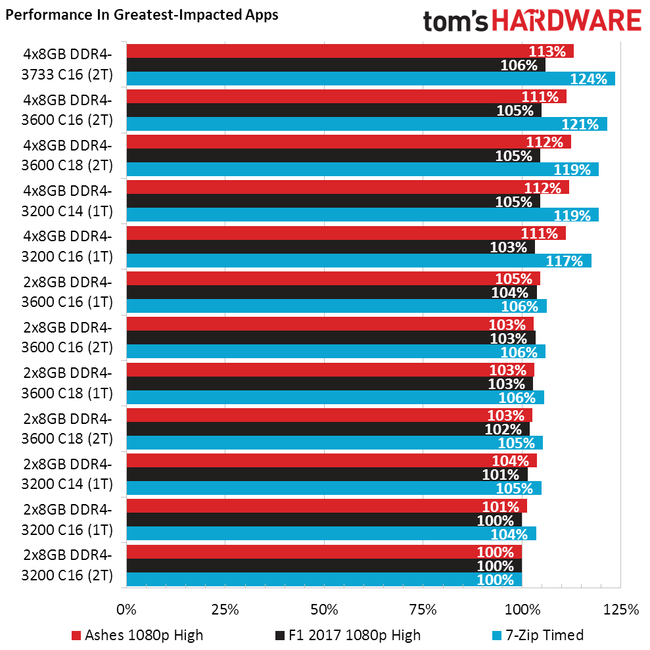

Now I only wish TechSpot used a higher-performing RAM config when benchmarking GPUs, something like a 4x8GB 3600MHz CAS 14 DDR4 kit. I know these kits cost around 400 USD, but it's one way to alleviate the CPU bottleneck that the highest-end GPUs run into at 1080p. The bog-standard 2x8GB 3200MHz kit that TechSpot is using doesn't really even represent the mainstream PC gamer nowadays.

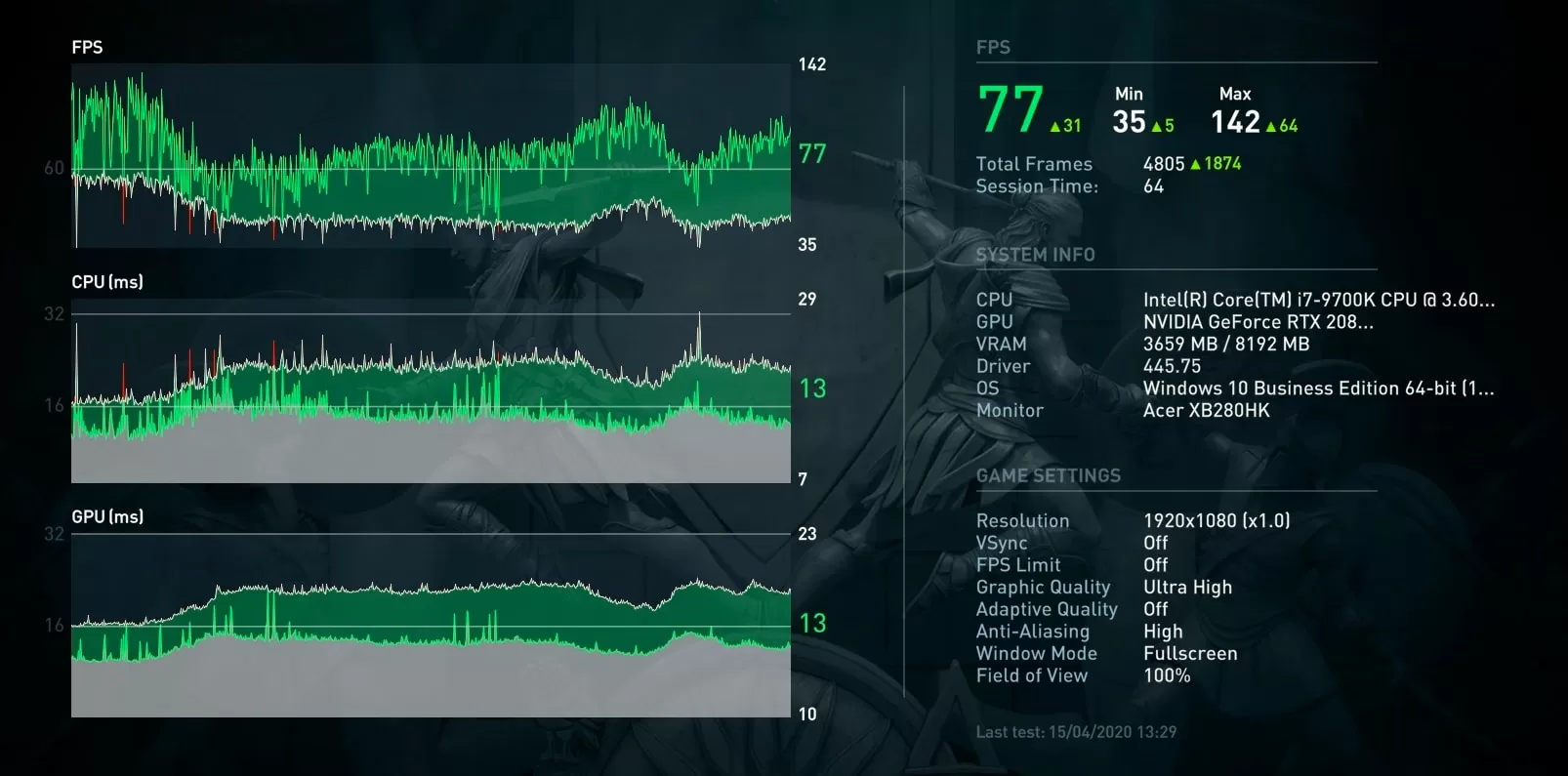

Test setup: Core i9 9900K + RTX 2080

This is with an RTX 2080, and the difference is already 10+ fps with the higher-performing kit. Imagine the 2080 Ti or a next-gen 3080 Ti.


Bullwinkle M

Posts: 911 +817

Exactly. I just use “Can U Run It” detection to see if I can run specific hardware.

Or I use real world “enjoyability” as my measure.

Will it boot to a fresh copy of Windows XP-SP2 in 3 seconds flat?

Check!

Can it edit uncompressed/lossless audio up to 352 kHz / 64-bit?

Check!

Can it avoid all the nasty Walled Garden/DRM garbage that all new x86 computers have?

Check!

Will it never again have a Blue Screen of Death?

Check!

Will it prevent ALL forms of malware (including ransomware) from wrecking the O.S.?

Check!

Can I connect a 65" 4K HDMI display to the DVI port?

Check!

Can I add an outboard DAC and an Optical Toslink port to the motherboard for much improved audio?

Check!

Can it run 4 or 5 programs simultaneously without crashing?

Check!

Can I run it from my bed with a $10 remote keyboard / gyro mouse / learning remote control like the Rii MX6?

Check!

Can I use the latest Bluetooth 5 Low Latency and HD codecs?

Check!

But that's just my old Nehalem

My Sandy Bridge computers are way better

Oh.....

and if I want an unstable mess that bluescreens, deletes my data, takes 8 times longer to boot up, adds nothing of value, but includes adware, spyware, extortionware, backdoors and DRM garbage I have no use for, then.... yeah, it also runs Windows 10


The best performing RAM in that graph is not 3600 CL14, it's Apacer NOX 3200MHz CL16, which has even worse timings than TechSpot's Ballistix 16GB 3200 MHz CL14, which is *not* the same memory as in that TechPowerUp graph, which is Ballistix 16GB 3200 MHz CL16.

krizby

Posts: 429 +286


You totally nailed that. The Apacer NOX is a 2x16GB 3200MHz kit made of dual-rank modules, and you get more performance out of a quad-rank configuration (2x16GB or 4x8GB) even at lower frequency and higher CAS latency.

TechSpot already has an article about this.

In this case, 4x8GB 3600MHz CAS 14 kits like the Trident Z are readily on sale everywhere and are pretty much the best RAM for Ryzen 3000 CPUs without going out of sync with the Infinity Fabric clock (few Zen 2 CPUs are capable of hitting a 3733MHz or 3800MHz IF clock). I have checked a lot of X570 board QVLs and these kits were verified to work.

So I will put this in performance order:

4x8GB 3600MHz CAS 14 > 2x16GB 3600MHz CAS 16 > Apacer NOX 2x16GB 3200MHz as shown above > the kit TechSpot uses.


grumblguts

Posts: 496 +418

Stock cas 14-14-14-31-82-1 (tCAS-tRC-tRP-tRAS-tCS-tCR)

3600 MHz from 3200 MHz with just a small bump in voltage is where I'm at.

https://valid.x86.fr/4s0gl1

8 pack all day long.


Yow, that stuff's expensive. 3200 CAS14 is probably a rare buy even among enthusiasts, especially since you need a 4x configuration and you won't see 4GB sticks with those timings. 32GB is the minimum entry point.

Edit: I meant to type 3600 CAS14. Time to step away from the KB.


krizby

Posts: 429 +286


Yeah, 2x8GB 3200MHz CAS 14 is like 120 USD right now, not really something you would put in a test rig that benchmarks GPUs costing 10x as much...

Even doubling the memory to 32GB with 4x8GB 3200MHz CAS 14 is a good option to reduce the CPU bottleneck when testing at 1080p. If TechSpot focuses on benchmarks at 1080p and 1440p, at least update the CPU + RAM combo so as not to hinder high-performance GPUs...

Now it's easy to see at which GPU performance level CPU bottlenecking appears at 1080p and even 1440p, using TPU's performance summary.

Latest TPU GPU review

The Vega 64 and any GPU below it don't get CPU bottlenecked, because their performance relative to the 5500 XT looks the same throughout 1080p, 1440p and 4K.

But any GPU faster than the Vega 64 extends its lead over the 5500 XT as the resolution increases:

| GPU | 1080p | 1440p | 4K |
| --- | --- | --- | --- |
| 5700 | 159% | 162% | 165% |
| 5700XT | 179% | 183% | 186% |
| Radeon VII | 180% | 191% | 202% |
| RTX 2070 | 171% | 175% | 180% |
| RTX 2080S | 210% | 224% | 235% |

TPU uses a test rig with a 9900K + 2x8GB 3867MHz CAS 18 DDR4.
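To put a number on the "lead grows with resolution" argument, here is a minimal Python sketch. The percentages are copied from the post above; the function names are made up for illustration. A larger growth is consistent with a card being held back at 1080p, but it does not prove a CPU or RAM limit on its own (memory bandwidth, for example, can also favor a card at 4K).

```python
# Relative performance (% of an RX 5500 XT) at 1080p, 1440p and 4K,
# copied from the figures quoted in the post above.
RELATIVE_PERF = {
    "5700": (159, 162, 165),
    "5700XT": (179, 183, 186),
    "Radeon VII": (180, 191, 202),
    "RTX 2070": (171, 175, 180),
    "RTX 2080S": (210, 224, 235),
}


def lead_growth(perf_1080p, perf_4k):
    """Percentage by which the lead over the baseline grows from 1080p to 4K."""
    return (perf_4k / perf_1080p - 1.0) * 100.0


def rank_by_lead_growth(table):
    """Sort GPUs from largest to smallest growth in relative lead."""
    growth = {gpu: lead_growth(p[0], p[2]) for gpu, p in table.items()}
    return sorted(growth.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for gpu, pct in rank_by_lead_growth(RELATIVE_PERF):
        print(f"{gpu:>10}: lead grows {pct:.1f}% from 1080p to 4K")
```

With these figures the Radeon VII and RTX 2080S gain roughly 12% between 1080p and 4K, while the 5700 and 5700XT gain under 4%.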

Now if any TechSpot editor is reading this, please update the test rig, as it is extremely unfair for high-performance GPUs to be bound to 1080p-1440p testing with a slow RAM config. At least use a 2x16GB 3600MHz CAS 16 kit for both the Intel and AMD test rigs. It's 2020 now, not 2017.....


JRMBelgium

Posts: 10 +10


Sorry, but I think you are living on another planet if you think 16GB DDR4 3200MHz doesn't represent the mainstream PC today. Even if you look at the top 10 best-selling memory kits on Amazon, only 2/10 kits are 3600MHz and only 3/10 kits are more than 16GB. So if current buyers don't even buy 32GB 3600MHz, what do you think all the buyers in past years did? For a mainstream gaming PC, 16GB 3200MHz is the sweet spot. And the majority will still have slower RAM than that.

And even if 3600MHz were the standard these days, the bottleneck in most games is very small. And of all the sites to compare with, you pick graphs from TPU, which doesn't even use 1% lows. TPU reviews aren't even usable for GPU/CPU comparisons because they only use average FPS... That might have been fine in 2010, but in 2020 we know that 1% lows give a FAR better view of the actual game experience you can expect, especially when you have hardware slower than the hardware used in the review.

A TechPowerUp reader will buy System A; a TechSpot reader will buy System B and will be much happier.
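For anyone unsure how average FPS and 1% lows differ, here is a rough Python sketch. It assumes one common definition of the 1% low (the average frame rate over the slowest 1% of frames); capture tools and review sites do not all use the same definition, and the frame times below are invented purely to show the effect.

```python
def average_fps(frame_times_ms):
    """Average FPS: frames rendered divided by total elapsed time."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def one_percent_low_fps(frame_times_ms):
    """Average FPS over the slowest 1% of frames (always at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)


# Hypothetical capture: 990 smooth frames at 10 ms plus 10 stutters at 40 ms.
frame_times = [10.0] * 990 + [40.0] * 10

if __name__ == "__main__":
    print(f"Average FPS: {average_fps(frame_times):.1f}")
    print(f"1% low FPS:  {one_percent_low_fps(frame_times):.1f}")
```

In this made-up capture the average stays near 97 fps while the 1% low drops to 25 fps, which is why an average-only chart can hide stutter.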

krizby

Posts: 429 +286


If you have ever been into overclocking RAM, you will know that higher frequency and lower latency translate to higher 1% low FPS. It is far more beneficial to 1% lows than to average FPS. Try it!

Sure, 3200MHz RAM represents the best price-to-performance ratio at the moment. However, for a test rig, you don't want a bottleneck anywhere other than the GPU you are testing. Right now it is unfair to high-performance GPUs when they are pitched against budget offerings, simply because the test system is choking the performance out of the high-end GPU.

This is the reason TechSpot chose a 9900K rather than a 3950X to benchmark GPUs. However, the memory subsystem is simply inadequate: you don't couple a 500 USD CPU with 120 USD RAM in any system, let alone a benchmarking rig.

I'm not even suggesting the craziest RAM config out there; a 250 USD 4x8GB 3600MHz CAS 16 kit will simply do the job. Pretty much any Zen 2 CPU can run the same RAM config, so there is parity between the Intel and AMD platforms.


The funny thing for me is the systems I game on have always been RAM speed limited in that I've never had a Mobo I could add higher speed RAM to.

Currently an Intel B360 so I'm stuck at 2666 but at least I can get 3200 CL16 and run it at 2666 CL13 and get the reduced latency benefits, which I do see. Someday I'll buy a real Mobo so I can use some real RAM.

Even when I gamed with a NUC, I looked around for 2133 CL13 instead of the crap CL15 sold everywhere. With an iGPU every bit of latency reduction helps. Looked for 2666 CL14 or 3200 CL16 I could run at 2133 CL12 or even 11 but it was just too expensive for a cheap NUC.

BTW, kids' Ryzens use 3200 CL16 but they're 1600AF and 2600 so again worth it to get there but not to push above that level of memory performance.
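The frequency/CL trade-offs mentioned above are easier to compare in nanoseconds. A minimal sketch, assuming the usual conversion for DDR memory (the memory clock is half the transfer rate, so one cycle lasts 2000 / MT/s nanoseconds). CAS latency is only one of several timings, so treat this as a rough guide rather than a performance prediction.

```python
def cas_latency_ns(transfer_rate_mts, cas_cycles):
    """CAS latency in nanoseconds for DDR memory.

    The memory clock runs at half the transfer rate, so one clock cycle
    lasts 2000 / transfer_rate_mts nanoseconds.
    """
    return cas_cycles * 2000.0 / transfer_rate_mts


# Speed/CL combinations mentioned in the post above.
configs = [(3200, 16), (2666, 13), (2133, 15), (2133, 13), (2133, 12)]

if __name__ == "__main__":
    for rate, cl in configs:
        print(f"DDR4-{rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns")
```

By this measure 3200 CL16 is 10 ns and 2666 CL13 is about 9.75 ns, while 2133 CL15 is about 14 ns, which is why hunting for lower CL at a fixed speed is worthwhile.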


krizby

Posts: 429 +286


As you can see in the TechSpot article, populating all 4 DIMM slots should give you higher performance than 2x8GB 3000MHz even when you are stuck at 2666MHz, not to mention you can tighten the timings at 2666MHz too.

This is something I can do. And have been putting off...

I have 4 slots but, ah, installed my tower cooler blocking one of the RAM slots. Total noob move, and I need to take it apart and rotate it 90 degrees to fix it. This should provide a push to do it, as I love testing things like this. I'll steal some RAM from one of the kids' machines.

krizby

Posts: 429 +286


The pathetic IPC/core clock increase that Intel introduces with each iteration of Skylake means that, right now, upgrading your memory subsystem brings more of an actual improvement than upgrading the CPU.

Want up to 10% more FPS? Just buy 2 more sticks of RAM. I highly doubt the 10900K at 5.3GHz can beat my 8700K at 5.1GHz by 5% in any current game.

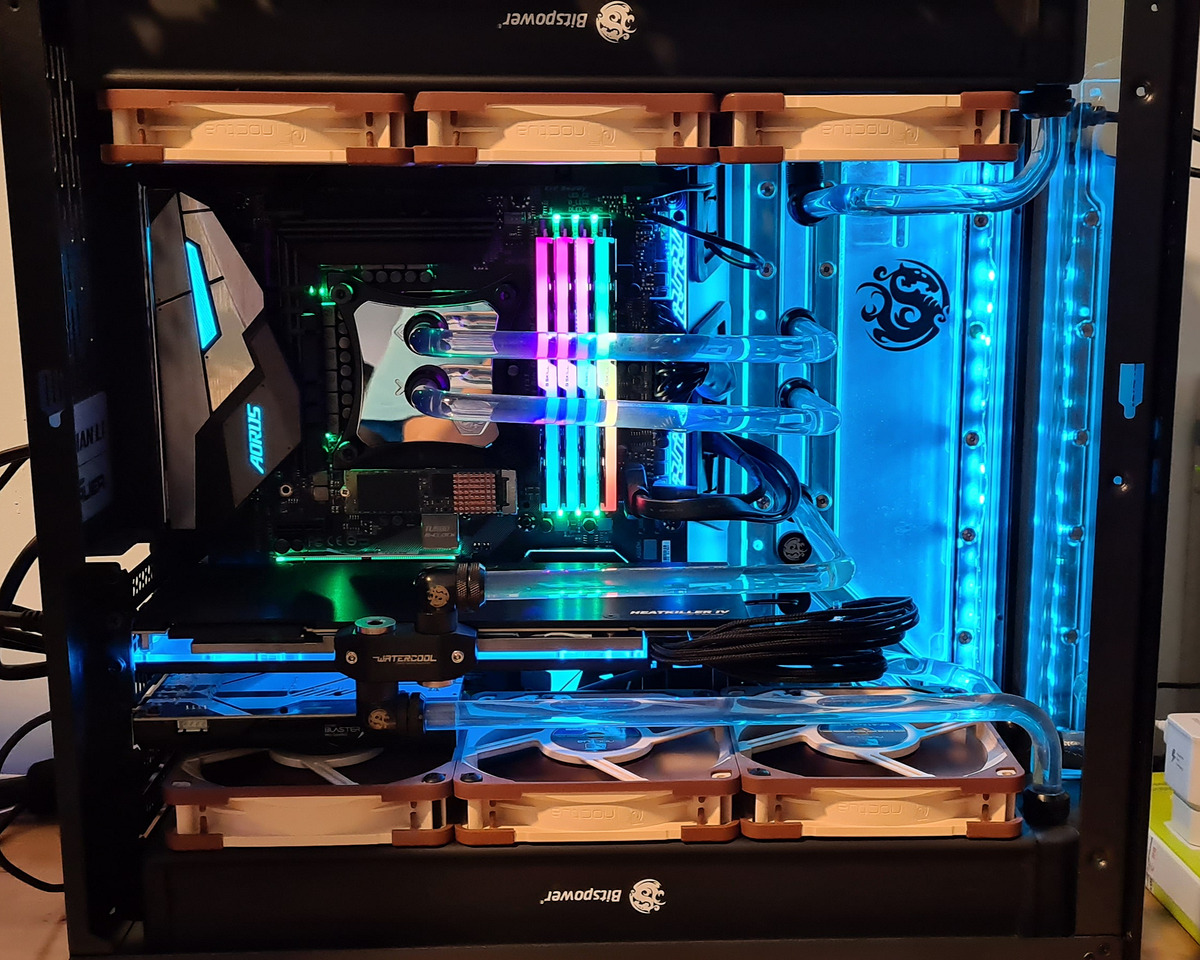

In fact I just bought the G.Skill Trident Neo 4x8GB 3600mhz cas 16-16-16-36 kit that brought my Timespy CPU score from 9132 to 9646 which is in the top 20th CPU score for 8700K.

https://www.3dmark.com/compare/spy/11989136/spy/11963073

First result is with 4x8GB 3600mhz 14-14-14-28

Second result is with 2x8GB 3466mhz 15-15-15-30

TechSpot has done many RAM deep dives in the past, so they know the importance of a fast RAM config. I don't know why they are still stuck with 3200MHz CAS 14 for the test rig.

Even in their own article they already show that by doubling their 3200MHz CAS 14 RAM to 4x8GB 3200MHz CAS 14, the 2080 Ti can gain 3% in average FPS and 5% in 1% low FPS.


In both scores, was the system set to use the XMP profile? If so, then the measurable difference between the use of 2 vs 4 DIMMs slots has been mixed in with the changes between the RAM speed and timings. If you can spare the time, try running the tests again, with the new memory kit, but between each run alter the number of DIMMs used - I.e. start with 1, then go to 2, etc.

Unless you only alter one variable in a test, you're never going to be able to tell for certain that the experienced change in performance is down to the aspect you're hoping to analyse.

Edit: It's worth being a little cautious when using 3DMark to compare changes that result in just a few % difference. Here are 3 runs I did this morning:

www.3dmark.com


There's a 6% difference between the lowest and highest CPU results, and yet the only thing between each run was rebooting the PC.
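One rough way to apply this caution is to compare the change you measured against the run-to-run spread of each configuration. The sketch below is a crude rule of thumb, not a proper statistical test, and the scores in it are hypothetical placeholders rather than the linked 3DMark results.

```python
from statistics import mean


def relative_spread(scores):
    """Gap between the best and worst run, as a percentage of the worst run."""
    return (max(scores) - min(scores)) / min(scores) * 100.0


def exceeds_noise(baseline_runs, changed_runs):
    """True only if the change in mean score is larger than the noisier spread."""
    base_mean = mean(baseline_runs)
    change = abs(mean(changed_runs) - base_mean) / base_mean * 100.0
    noise = max(relative_spread(baseline_runs), relative_spread(changed_runs))
    return change > noise


# Hypothetical CPU scores: three repeated runs per configuration.
baseline = [9000, 9300, 9540]  # 6% spread from reboots alone
changed = [9500, 9600, 9700]   # same system after changing one variable

if __name__ == "__main__":
    print(f"Baseline spread: {relative_spread(baseline):.1f}%")
    print(f"Change exceeds noise: {exceeds_noise(baseline, changed)}")
```

Here the mean improves by about 3.4%, but since the baseline alone varies by 6%, the function reports that the change cannot be separated from noise.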


krizby

Posts: 429 +286


Well, did you close all background programs and monitoring software before the benchmark? I closed everything but 3DMark, so my score is reproducible over multiple runs.

Here are the results with 2 sticks and 4 sticks:

https://www.3dmark.com/compare/spy/11994365/spy/11994072

1st score with 4x8GB 3600Mhz 16-16-16-32

2nd score with 2x8GB 3600Mhz 16-16-16-32

All timings are manually tuned, so there isn't any other variable besides the number of sticks.

Interestingly enough, due to the nature of the T-topology on almost all Intel motherboards, I was unable to use CAS 14 with 2 sticks (Windows was unable to load), but with 4 sticks at 3600MHz 14-14-14-28 everything is fine and dandy.

Therefore, on the Intel platform it's better to use 4 sticks: you get dual-channel, quad-rank memory plus higher frequency and lower timings.

Here is the result with 3600MHz 14-14-14-28, only a minor increase in CPU score:

https://www.3dmark.com/spy/11994454

If you check the 9700K Time Spy score over at Guru3D:

Their result is 400 points higher than your best score; the difference is that they used 4x4GB 3200MHz RAM.

So it would be awesome if TechSpot decided to test how much of an improvement going from 2x8GB 3200MHz CAS 14 to 4x8GB 3600MHz CAS 16 brings on multiple GPU configs.

Well, I would love to do some further investigation, but my PC was not built for benchmarking purposes :/


Yes, including blocking network traffic.

There is one other variable: amount of memory. But let's assume that there is no difference at all in the system parameters between the two tests (e.g. temperatures for all components are exactly the same, so there's no difference in clock speeds) and that the results are harmonic means of multiple runs (so the results represent the worst-case scenario of the central tendency of the frame rate distribution). Then the use of 4 DIMMs has increased the more graphically intense test of the two by 0.1%, so for GPU testing it's far too small to be significant.
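As a quick illustration of why a harmonic mean is the more conservative way to combine frame rates, here is a short Python sketch. It uses `statistics.harmonic_mean` from the standard library; the two frame rates are hypothetical, and the helper names are invented for this example. When every run renders the same number of frames, the harmonic mean equals total frames divided by total time.

```python
from statistics import harmonic_mean, mean


def summarize_runs(fps_per_run):
    """Return both the arithmetic and the harmonic mean of per-run frame rates."""
    return {
        "arithmetic": mean(fps_per_run),
        "harmonic": harmonic_mean(fps_per_run),
    }


def fps_from_total_time(fps_per_run, frames_per_run=1000):
    """Total frames divided by total time, assuming equal frames in every run."""
    total_time = sum(frames_per_run / fps for fps in fps_per_run)
    return frames_per_run * len(fps_per_run) / total_time


# Hypothetical per-run frame rates, chosen far apart to make the gap obvious.
runs_fps = [60.0, 120.0]

if __name__ == "__main__":
    summary = summarize_runs(runs_fps)
    print(f"Arithmetic mean: {summary['arithmetic']:.1f} fps")
    print(f"Harmonic mean:   {summary['harmonic']:.1f} fps")
    print(f"Frames / time:   {fps_from_total_time(runs_fps):.1f} fps")
```

The harmonic mean is never higher than the arithmetic mean, so slow runs pull the summary figure down more strongly.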


The 5.6% difference in the CPU test is within the same margin that my runs this morning showed. Of course, the test parameters are not quite the same, but if a simple reboot can produce a 6% variation in test results, it's hard to extract any significance from the 5.6% gained by altering the number of DIMMs, given that a reboot is required to alter the amount of memory used.

However, if we go ahead and attribute the difference to solely being caused by using 4 DIMMs over 2, then we must assume that in this particular CPU limited situation on a test system such as yours, then filling every memory slot will raise performance by 5~6%. Does this mean that every CPU and motherboard combination, in every CPU-limited situation, will improve by the same amount? We cannot tell and it would be too much of an extrapolation to assume it would.

Therefore the only way to tell would be to test multiple CPUs, from both vendors, across a range of motherboards, and a battery of CPU-limited tests - an enormous amount of work. And to what end? If it turns out that every configuration does improve by 5~6%, then all current reviews and product comparisons remain relevant. If it's the opposite case (i.e. only certain system setups and tests used produce the performance increase), then you'd avoid using them to ensure products can be reliably compared.

krizby

Posts: 429 +286

Techspot did test the difference between dual channels dual ranks vs dual channels quad ranks memory.


Are More RAM Modules Better for Gaming? 4 x 4GB vs. 2 x 8GB

Today we're taking a look at the performance impact having four DDR4 memory modules can have on performance in a dual-channel system, opposed to just two modules....

www.techspot.com


The difference is there. Some games might not benefit from a higher-performance RAM config, but up to 10% better average and 1% low FPS can be seen in some games. This test didn't include faster RAM, just doubling the ranks.

Here is the problem with your hypothesis: the performance uplift from faster RAM won't apply to all GPU configs, since lower-end GPUs will simply run out of grunt before they are bottlenecked by RAM. Therefore you run into a situation where lower-end GPUs look more favorable than higher-end GPUs on a cost-per-frame basis (or price to performance) at 1080p or even 1440p.

So my suggestion is to update the test rig to a 2020 standard before the next-gen GPUs arrive. It is only fair to do so, especially when you are dealing with possibly 2080 Ti + 50% performance. After all, TechSpot chose the best CPU to benchmark GPUs; the RAM, though, is probably quite far from the best...
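The cost-per-frame argument can be sketched in a few lines of Python. Every price and frame rate below is a hypothetical placeholder chosen only to show the mechanism (a budget card that gains nothing from faster RAM next to a high-end card that does); none of it comes from a review.

```python
def cost_per_frame(price_usd, avg_fps):
    """Price divided by average frame rate: lower means better value."""
    return price_usd / avg_fps


# Hypothetical cards: the budget GPU is GPU-bound, so faster RAM changes nothing;
# the high-end GPU is held back by the platform and gains with faster RAM.
budget_gpu = {"price": 300.0, "fps_slow_ram": 100.0, "fps_fast_ram": 100.0}
highend_gpu = {"price": 1200.0, "fps_slow_ram": 120.0, "fps_fast_ram": 150.0}

if __name__ == "__main__":
    for name, gpu in (("budget", budget_gpu), ("high-end", highend_gpu)):
        slow = cost_per_frame(gpu["price"], gpu["fps_slow_ram"])
        fast = cost_per_frame(gpu["price"], gpu["fps_fast_ram"])
        print(f"{name:>8}: ${slow:.2f}/frame slow RAM, ${fast:.2f}/frame fast RAM")
```

With these placeholder numbers the budget card stays at $3.00 per frame either way, while the high-end card moves from $10.00 to $8.00 per frame, so the test platform alone shifts the value comparison.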


Using a RAM configuration to raise performance just creates the same situation for the high end GPU - i.e. its cost-per-frame ratio at 1080p is artificially lower than it should be, because the test is CPU-limited (or more rather, RAM-limited). It's a bit of a no-win situation, though, as comparing a raft of GPUs at a given resolution will invariably result in the performance dependency being associated with the wrong component: at 1080p, a 2080 Ti won't look much better than, say, a 1660 Ti but at 4K, it will be vastly better.

While I disagree with your initial assertion that 4 DIMMs of 8 GB DDR4-3600 is the current standard (Amazon sales suggest 2 x 8GB 3000 or 3200), there always comes a time when a test system needs to be updated. Whether or not the team wishes to do so is down to them.

krizby

Posts: 429 +286


Well, let me rephrase it better: 2x8GB 3200MHz is not a standard RAM config for typical 9900K/3900X users. For reviewers it's an even worse choice, since you are potentially choking higher-end GPUs...

Yes, I know TechSpot is all about price-to-performance ratio, but just by spending another 100-200 USD on RAM an average user can extract more performance out of their system, more than by upgrading to the 10th gen Intel CrapPU.

Here I'm just gonna cherry-pick another chart from TechSpot's Ryzen 3rd gen memory testing.

The 2080 Ti goes from 77fps with 3200MHz CAS 14 to 91fps with 3800MHz tuned timings. There is potential to go even further here, as I have seen users on other forums posting 4x8GB 3800MHz CAS 14 configs on the Ryzen 3000 platform, gaining a boost from quad-rank memory. There is hardly any change in the RX 5700's fps because that GPU is not even remotely bottlenecked.

For Ryzen 3000 owners, there are even bigger gains to be had by doubling the memory ranks (2x8GB to 4x8GB config).

best memory for Ryzen 3000


Would users with high-end CPUs and GPUs be gaming at 1080p though? I appreciate the points you're raising, as they're valid for CPU testing/reviewing, but it is down to the review team as to what system configuration they want to use.

Also note that 3000 MHz, with manual timings and the FCLK at 1500 MHz, produced higher performance than 3800 MHz, CAS 16, FCLK 1900 MHz. If one just used those two pieces of data alone, it would seem that there's little need to go with faster RAM, as long as one can adjust the timings appropriately.

krizby

Posts: 429 +286


Well, I do have the monitor that HUB deemed the best super-fast IPS gaming monitor, the Asus TUF VG279QM, which is a 1080p, 280Hz screen. The CPU/RAM/GPU horsepower required to drive this monitor at 200+ fps for competitive gameplay is quite insane really.

The 3000MHz tuned memory result proved that there are bigger performance gains to be had from faster RAM than from higher Infinity Fabric clocks; going quad-rank with tuned timings is going to be even better, as shown in the Tom's Hardware article.

And well, I suggested going for both higher frequency and quad-rank memory, but I guess going only for quad ranks is the better price-to-performance upgrade.

That's a good point about low-resolution, super-high-refresh-rate gaming needing surprisingly powerful components. It does beg the question: should reviews containing performance benchmarks of components be separated or flagged up for specific users/scenarios? For example, the latest CPU review would initially be a general one, using the usual test platform, whereas a similar review could be targeted at e-sports professionals by only looking at those particular game benchmarks, but with additional test parameters (such as memory overclocking). The only problem there is that it's less of a CPU review, and more of a RAM/platform one.
