Bottom line: AIB partner ASRock has slashed pricing on some of its Intel Arc graphics cards, suddenly making them far more attractive to GPU shoppers in the market for a deal. Given the cuts and Intel's evolving drivers, it may be time to consider an Arc card.

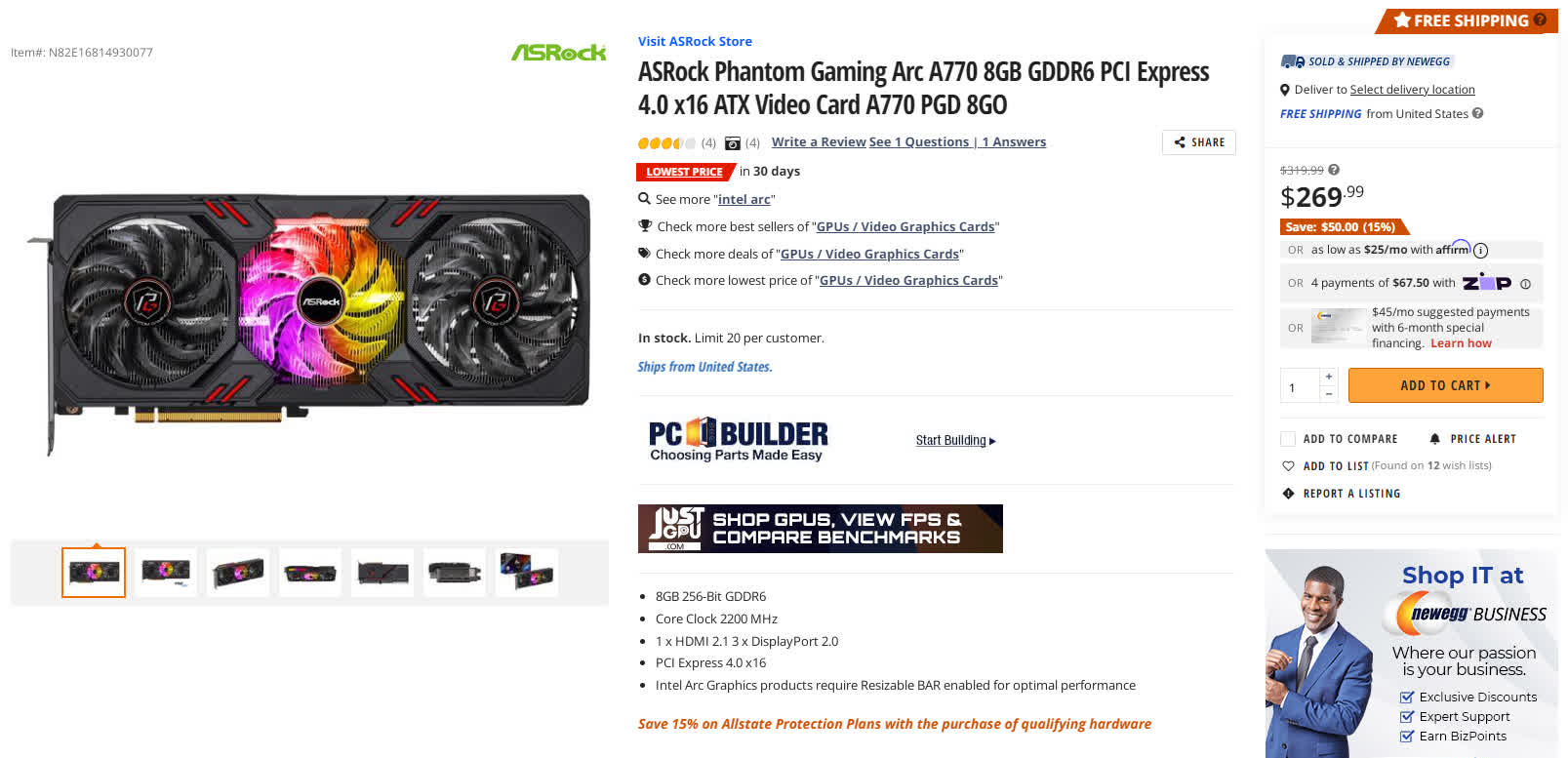

Over on Newegg, the ASRock Phantom Arc A770 8GB is down to $269.99 after a $50 instant price cut, and the ASRock Challenger Arc A380 6GB commands just $119.99 after instant savings of $20. That apparently makes the A380 the most affordable AV1-capable card on the market with 6GB of memory.

Intel recently lowered the price of its A750 to $250, but ASRock is taking it a step further. The ASRock Challenger Arc A750 8GB can be had for $239.99, undercutting Intel's price by $10.

The A770 at $270 is only $30 more expensive than the A750 at $240, and is also very attractive compared to Nvidia's far more expensive GeForce RTX 3060. Some believe additional price cuts could also be in the cards.

Our own Steve Walton recently revisited Arc GPU performance following Intel's release of new drivers that claim to deliver mega performance improvements. Across a dozen games, the Arc A770 with the newer drivers performed about nine percent faster than it did at launch when playing at 1080p and eight percent faster on average at 1440p.

Wondering what else you can currently pick up for around the same price? The ASRock Radeon RX 6600 8GB is priced at $224.99 over on Newegg while the ASRock Phantom Radeon RX 6600 XT 8GB can be taken home for $274.99. Sticking with ASRock, the Challenger Radeon RX 6650 XT 8GB is $289.99. On the more affordable end, a Radeon RX 6400 is going to set you back about $140 on average.

Are you considering any of ASRock's Intel Arc cards given their new lower price points, or do you share the opinion that additional price cuts are still to come in the not-too-distant future?

https://www.techspot.com/news/97918-intel-arc-graphics-cards-asrock-receive-attractive-price.html