What just happened? Multiple leaked benchmark scores of the Apple M2 Ultra's GPU seem to suggest that it could be slower than some standalone graphics cards, like the Nvidia RTX 4080. The benchmark results also suggest that the CPU component of the SoC could be slower than current-generation top desktop processors from Intel and AMD.

Starting off with the CPU scores, the M2 Ultra notched up 2,809 points in the Geekbench 6 single-core benchmark and 21,531 points in the multi-core test. Both figures trail the 3,083 and 21,665 points racked up by the Intel Core i9-13900KS. Against AMD's Ryzen 9 7950X, the picture is mixed: the M2 Ultra falls short of the Ryzen's 2,875-point single-core score but beats its 19,342-point multi-core result. Do note that these synthetic benchmarks do not necessarily give us the full picture, but they do indicate that the M2 Ultra may not be the speed demon that Apple would have us believe.

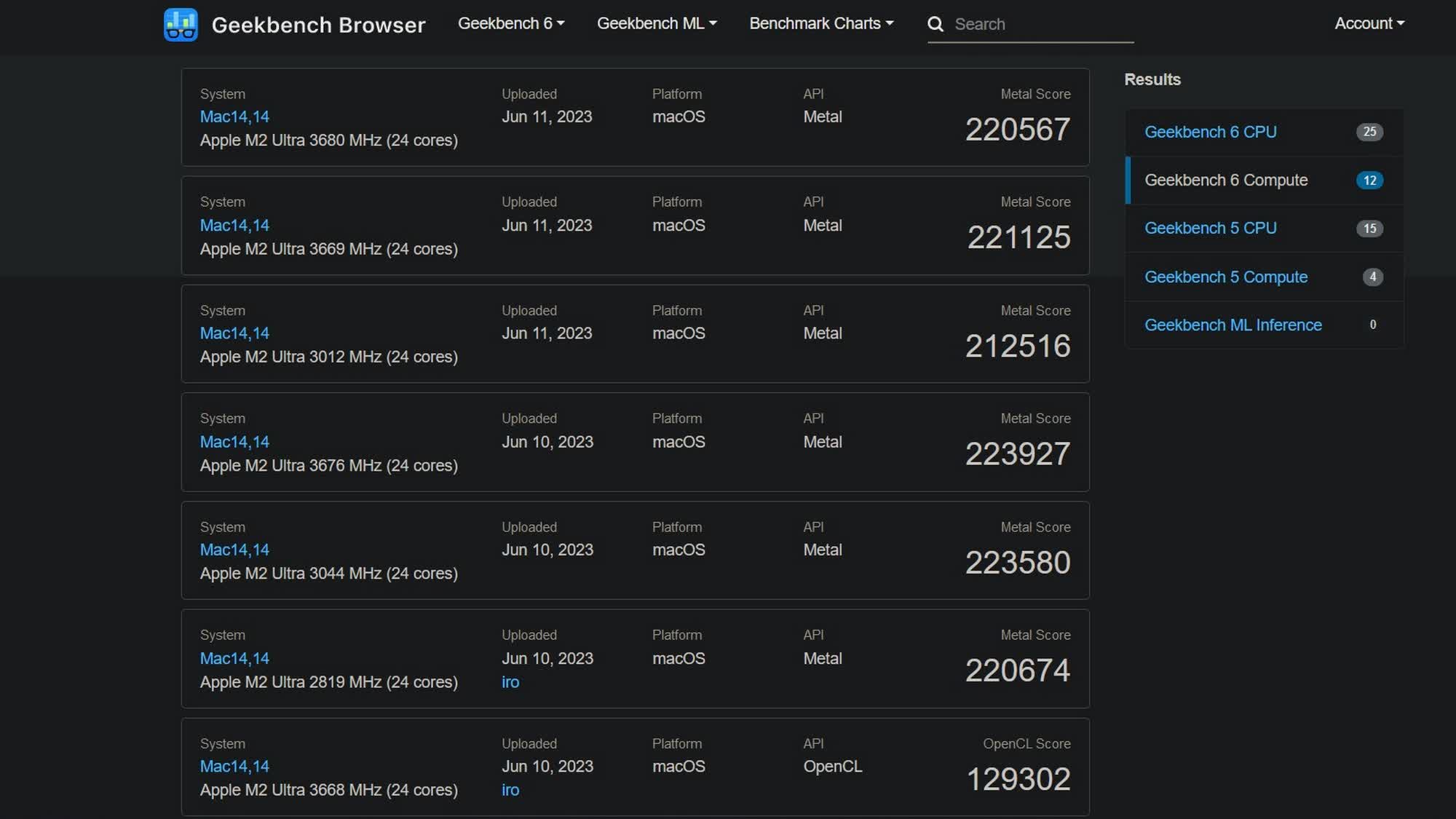

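On the GPU side, the M2 Ultra's 220,000+ score in the Geekbench 6 Compute benchmark (Metal API) is slightly higher than the 208,340 points scored by the RTX 4070 Ti (OpenCL), but it's still lower than the RTX 4080's score of 245,706. Keep in mind that these results come from different graphics APIs, so they are not a strict like-for-like comparison.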

By comparison, the GPU in the M1 Ultra only managed about 155,000 points, so the new chip's score represents an improvement of roughly 40 percent in graphics performance over its predecessor (h/t Tom's Hardware).

If you're looking for a direct Geekbench 6 OpenCL comparison, the M2 Ultra's OpenCL score of around 155,000 is significantly lower than those of the current-gen Nvidia cards and somewhat similar to that of the AMD Radeon RX 6800 XT. That said, it is once again worth mentioning that synthetic benchmarks do not always indicate real-world performance, so take them with a pinch of salt.

In GFXBench 5.0, the M2 Ultra managed 331.5 fps in the 4K Aztec Ruins high-tier offscreen test, which would suggest that its GPU is roughly 55 percent faster than that of its predecessor. However, GFXBench is hardly the best tool for benchmarking high-end desktop GPUs, so the results do not necessarily mean that the M2 Ultra will be that much faster than the M1 Ultra in games and other graphics applications.

The M2 Ultra is Apple's latest flagship desktop silicon, with 24 CPU cores (16 performance and 8 efficiency cores), up to 76 GPU cores, a new 32-core Neural Engine, and support for up to 192GB of unified memory with 800 GB/s of memory bandwidth. The chip will power Apple's new Mac Studio and Mac Pro desktop computers, which were announced at WWDC earlier this month.

https://www.techspot.com/news/99026-early-benchmarks-suggest-apple-m2-ultra-could-slower.html