Bottom line: Although most gamers play at 1440p or 1080p (and for good reasons), some like to go all out and spend whatever it takes to build the ultimate gaming PC, one that cuts through AAA titles like a hot knife through butter. However, it seems that even money can't solve the present limitations of 8K gaming. A Gears 5 benchmark demonstrated this by putting five of the most powerful GPUs with enough on-board VRAM through their paces to see whether the game is playable at 8K resolution.

Gears 5 was praised for its brilliant single-player campaign, even though it got off to a rocky start on PC if Steam reviews are anything to go by. Nonetheless, the PC release made it possible to run the game at 8K resolution, an experience that TweakTown recently showed to be barely playable even on the most powerful "consumer" graphics card currently available: the Nvidia Titan RTX.

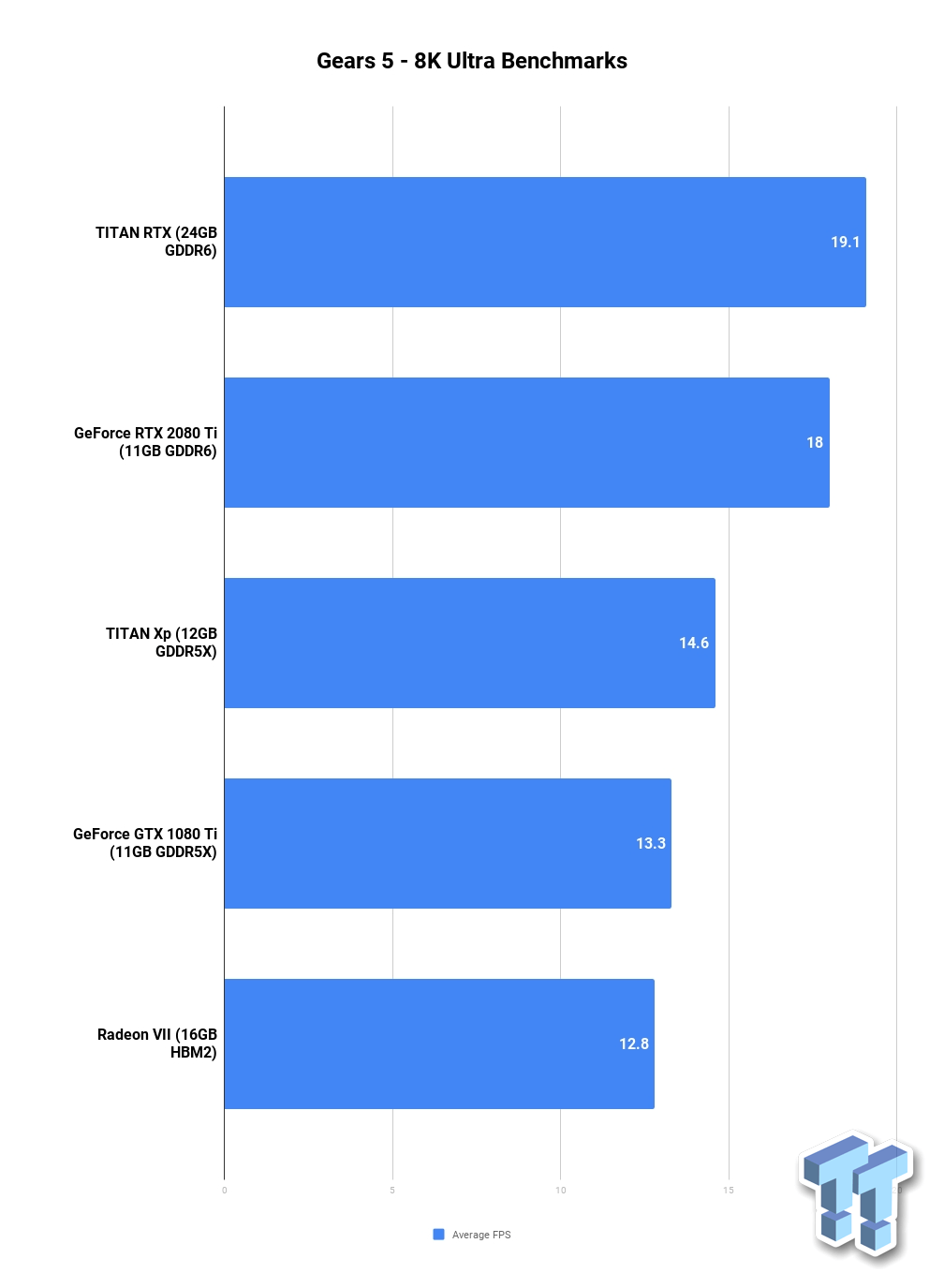

The benchmark didn't just include Nvidia's current flagship but four other GPUs as well, all with at least 11GB of VRAM, because the game refused to load at 8K resolution on cards with 8GB of VRAM. Thus the only cards to qualify besides the Titan RTX were Nvidia's GTX 1080 Ti, RTX 2080 Ti and Titan Xp, plus AMD's Radeon VII.

The cards were tested on a gaming rig with an Intel Core i7-8700K running at 5GHz (cooled by Corsair's H115i Pro), 16GB of DDR4-2933 HyperX Predator RAM and 512GB + 1TB OCZ NVMe M.2 SSDs on a Z370 AORUS Gaming 7 motherboard, powered by InWin's 1065W PSU.

With the high-resolution texture pack installed and motion blur and vertical sync disabled, the cards were first benchmarked at 8K on Medium settings.

While the Titan RTX made the game somewhat playable at nearly 30fps on Medium, its average dropped below 20fps (and even lower for the other cards) with the graphics set to Ultra (motion blur and vertical sync again disabled).

"Gears 5 at 7680 x 4320 with 4K high-res textures and Ultra settings brings every graphics card known to man to its knees. Nvidia's flagship $2499 monster Titan RTX graphics card can't even muster 20FPS, while the 16GB of HBM2 is spinning its wheels it seems with only 12.8FPS on the Radeon VII," noted the publication.

Although such benchmarks may simply be stress tests for these GPUs, they also hint at when we can expect 8K gaming to become mainstream. For 8K at 60fps, that currently looks to be at least two or three years away.

Speaking of which, it'll be interesting to see how upcoming consoles like the PS5/PS5 Pro and Xbox Project Scarlett manage to support 8K gaming at high frame rates, especially considering their (expected) lower price of admission compared to an 8K-capable gaming PC.

https://www.techspot.com/news/82054-gears-5-8k-resolution-brings-2500-nvidia-titan.html