You can throw barbs at my old girl all you want.

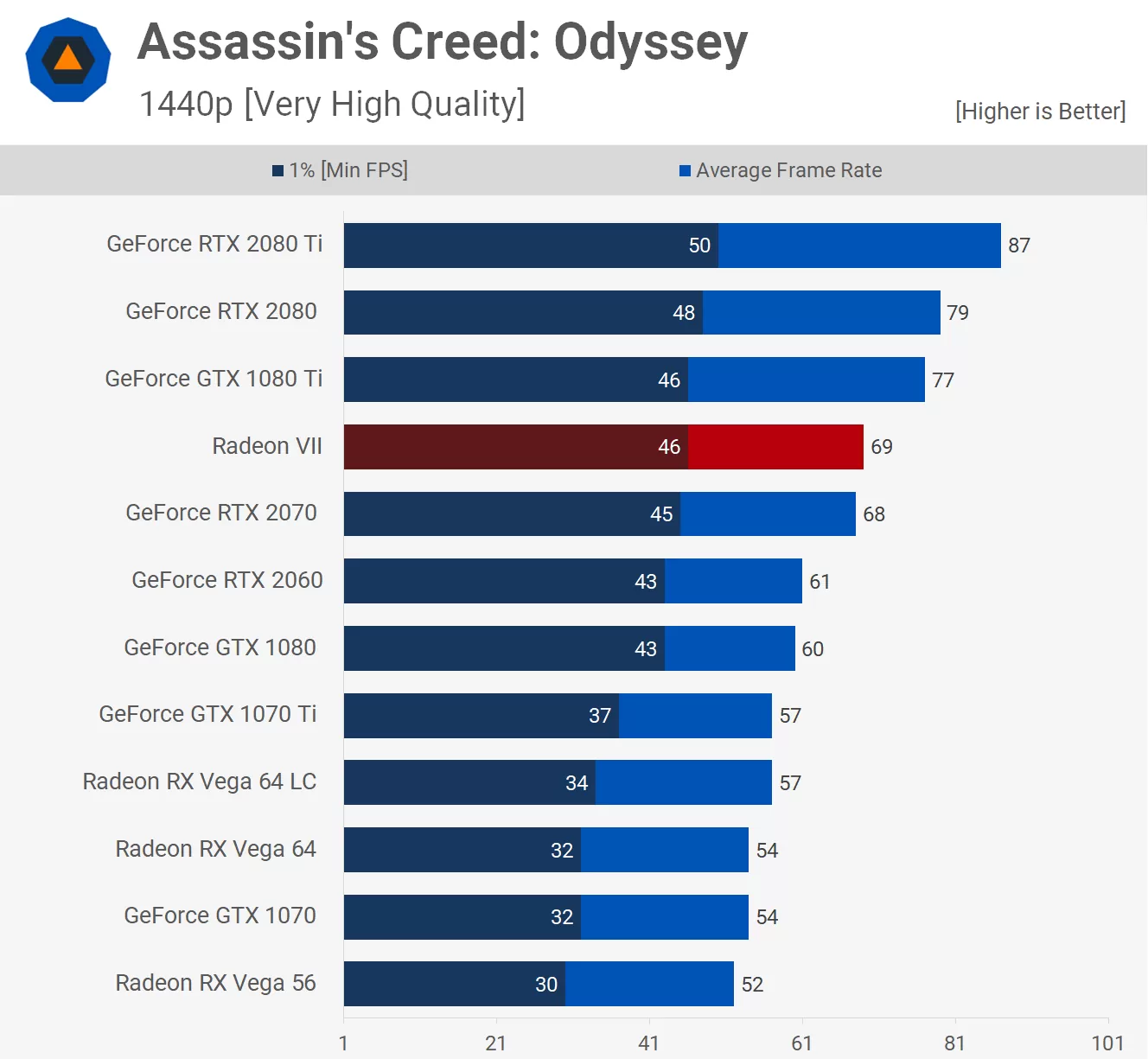

I've gotten 10 years and counting of top-tier gaming performance out of it, and it still rocks the latest games at High, Very High, or sometimes maxed settings @ 1440p/144Hz. I am sure there is a bottleneck here or there, but I don't feel the slightest hiccup or slowdown paired with my G-Sync 1ms HP Omen. It's silky smooth gameplay, and my Pascal is doing most of the work at 2560x1440 anyway. DX12 games will really tax the CPU though.

When I upgrade, it's going to be like time traveling.

This is a great revisit of it:

Newer chips are anywhere from a little to a lot faster, but I don't care; it still does what I need it to.

I bought my 930 around the time Sandy Bridge released... it's been THAT long.

Talk about bang for the buck.