Never before have I been so happy to have chosen the RX 6800 XT over the RTX 3080. When I saw its specs after Jensen had done his "taking it out of the oven" schtick, I remember laughing because it only had 10GB of VRAM, less than the 11GB on a GTX 1080 Ti. I was sure that the card was DOA, especially considering that the base RTX 2080 Ti also had 11GB and was available with 12GB.

My exact thoughts were:

"Who the hell would be insane enough to pay that much money for a card with only 10GB on it? That's going to make it very short-lived!"

- If only I had known...

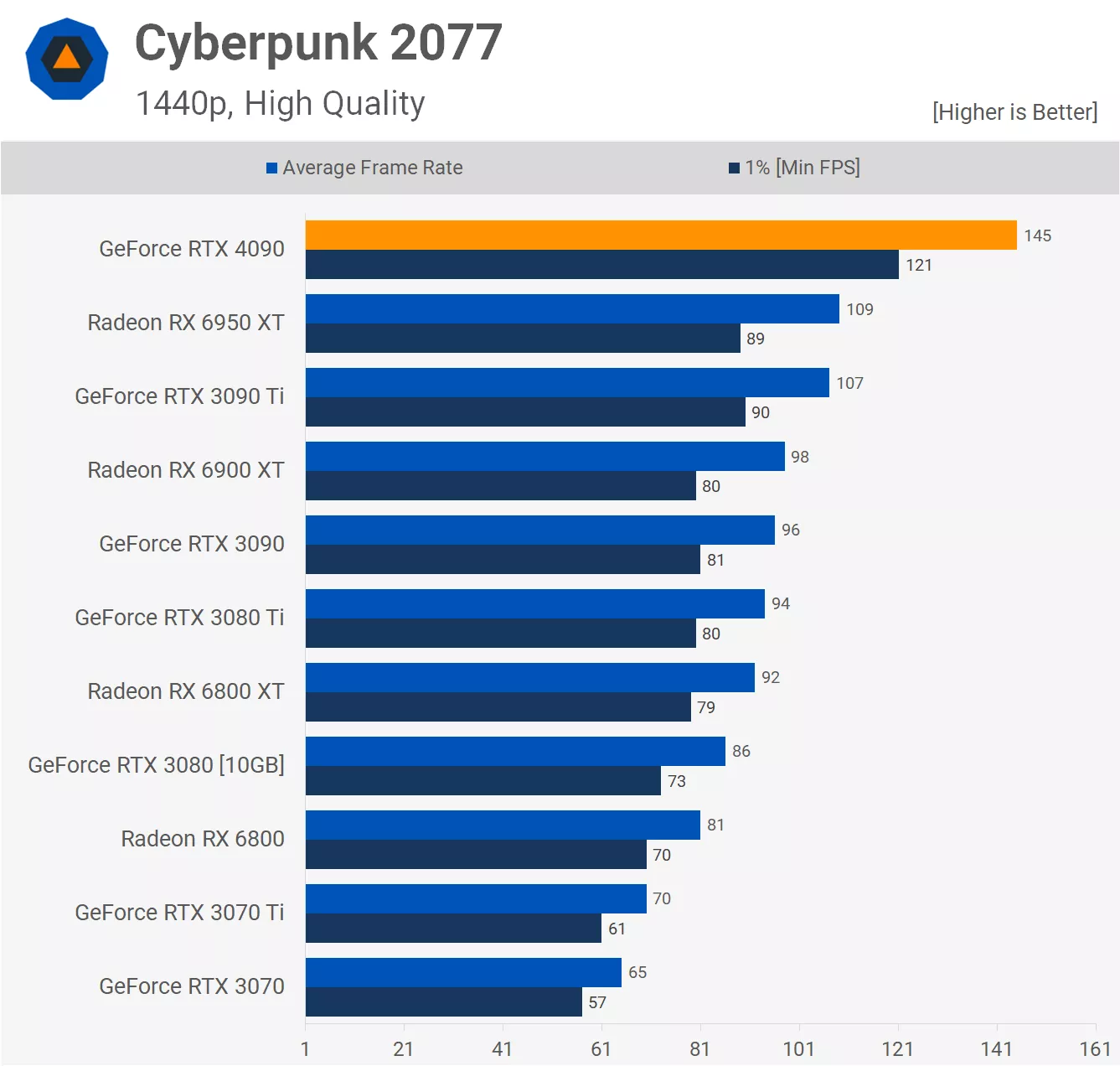

Sure enough, about a month after the RTX 30-series launched, Ubisoft released Far Cry 6, a game that required 11GB of VRAM to use the HD textures. So this card, which had JUST been released and cost $800USD, was ALREADY incapable of using the HD textures in Far Cry 6. At the time, I was annoyed by this because I had purchased my RX 5700 XT two months prior and it only had 8GB of VRAM, so I couldn't use the textures either. Of course, the difference there was that I paid the equivalent of ~$365USD for the RX 5700 XT, not $800! If I HAD paid $800 for a card and a game whose requirements it didn't meet had come out the same month, I'd have been FUMING!

Then the tech press started fawning over nVidia's stupid gimmicks like DLSS (something that no high-end card should need anyway) and ray-tracing (which paradoxically needs MORE VRAM to function), which pushed those who didn't know better (which is most people) towards the RTX 3080.

What the tech press said:

"The performance of the RTX 3080 is fantastic and is a much better value than the RTX 2080 Ti that people were spending well over a thousand dollars for a little over two months ago."

(NO MENTION MADE OF HOW ABSURD IT WAS TO ONLY PUT 10GB ON THIS CARD)

What the ignorant masses heard:

"It's ok to be lazy and just buy the same brand you had before because the RTX 3080 is a great value when compared to a card that was a horrible value."

What the tech press SHOULD have said:

"The RTX 3080's performance is fantastic, eclipsing the horribly-overpriced RTX 2080 Ti by an almost 40% performance margin. It's also a much better value than the RTX 2080 Ti, but that's not saying much because nVidia set the value bar pretty low with that card.

However, we can't recommend it because that 10GB VRAM buffer is FAR too small for a card with a GPU as potent as nVidia's GA102. This will undoubtedly cause the RTX 3080 to become obsolete LONG before it should, and we're already seeing this 10GB VRAM buffer become a limitation in Far Cry 6. No card that costs $800USD should be limited by its amount of VRAM in the same month that it's released. For most people, we would say to wait a few weeks to see what Big Navi brings to the table. If you have no problem spending $800USD on a card that might be unusable above 1080p in less than four years, only care about its performance TODAY, and can't wait a few weeks to see what AMD is releasing, then by all means buy it, but don't complain when that 10GB is no longer enough, because that day won't be long in coming."

I've been publicly saying this since the RTX 3080 came out, completely puzzled as to how the RTX 3080 (what Jensen called "The Flagship Card") could have a smaller VRAM buffer than the GTX 1080 Ti, the RTX 2080 Ti and ESPECIALLY the RTX 3060 (that one STILL makes me scratch my head). Of course, the RTX 3070 is even worse off (and probably sold more), but the ignorant masses went hog-wild for the RTX 3080, while the RTX 3070 was primarily bought by high-FPS 1080p gamers, who won't have problems. All I could do was shrug my shoulders, think to myself "This won't end well but hey, people are allowed to be stupid, so I'll just sit back and watch it play out as I know it will. Where's the popcorn?" and chuckle.

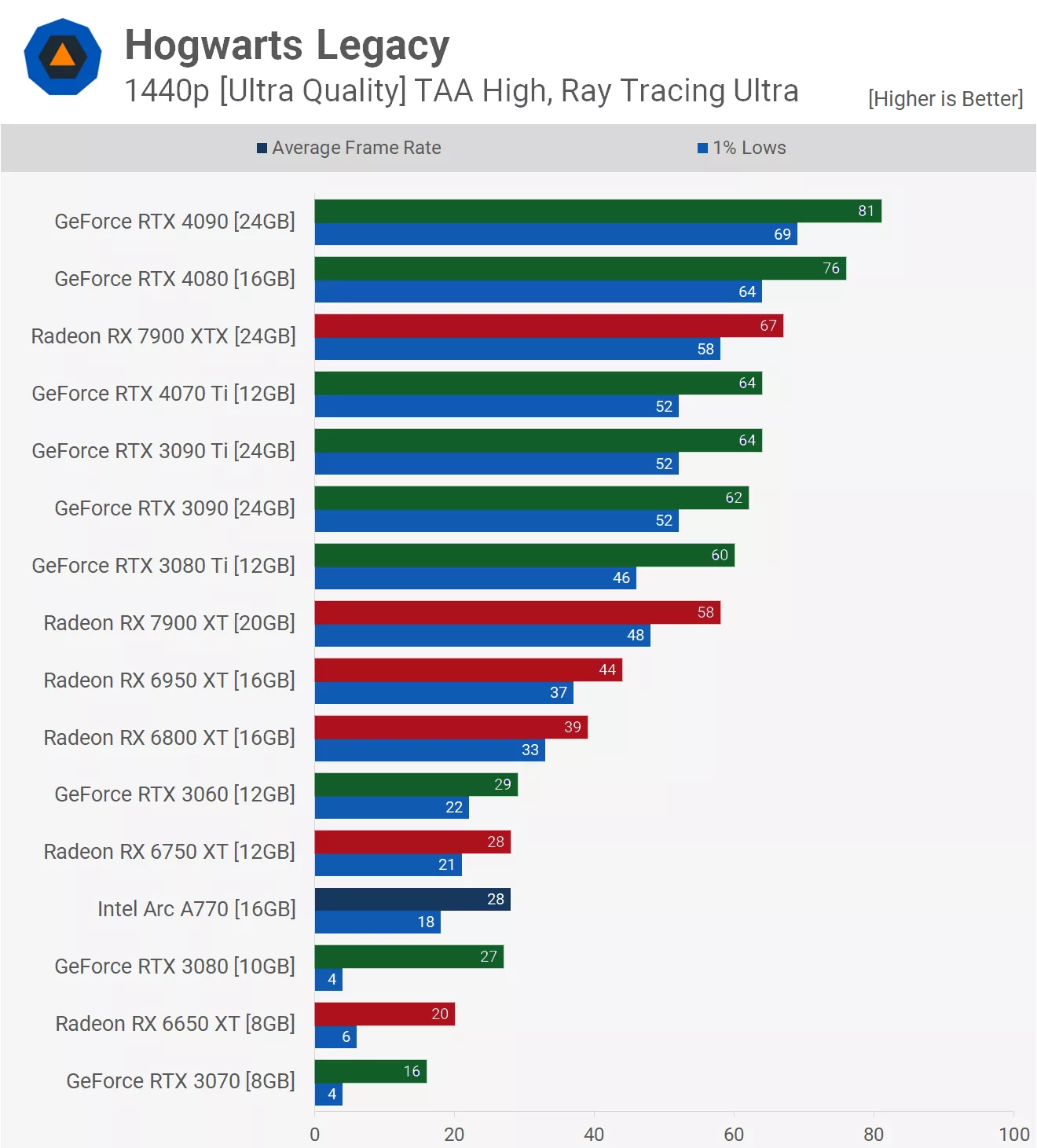

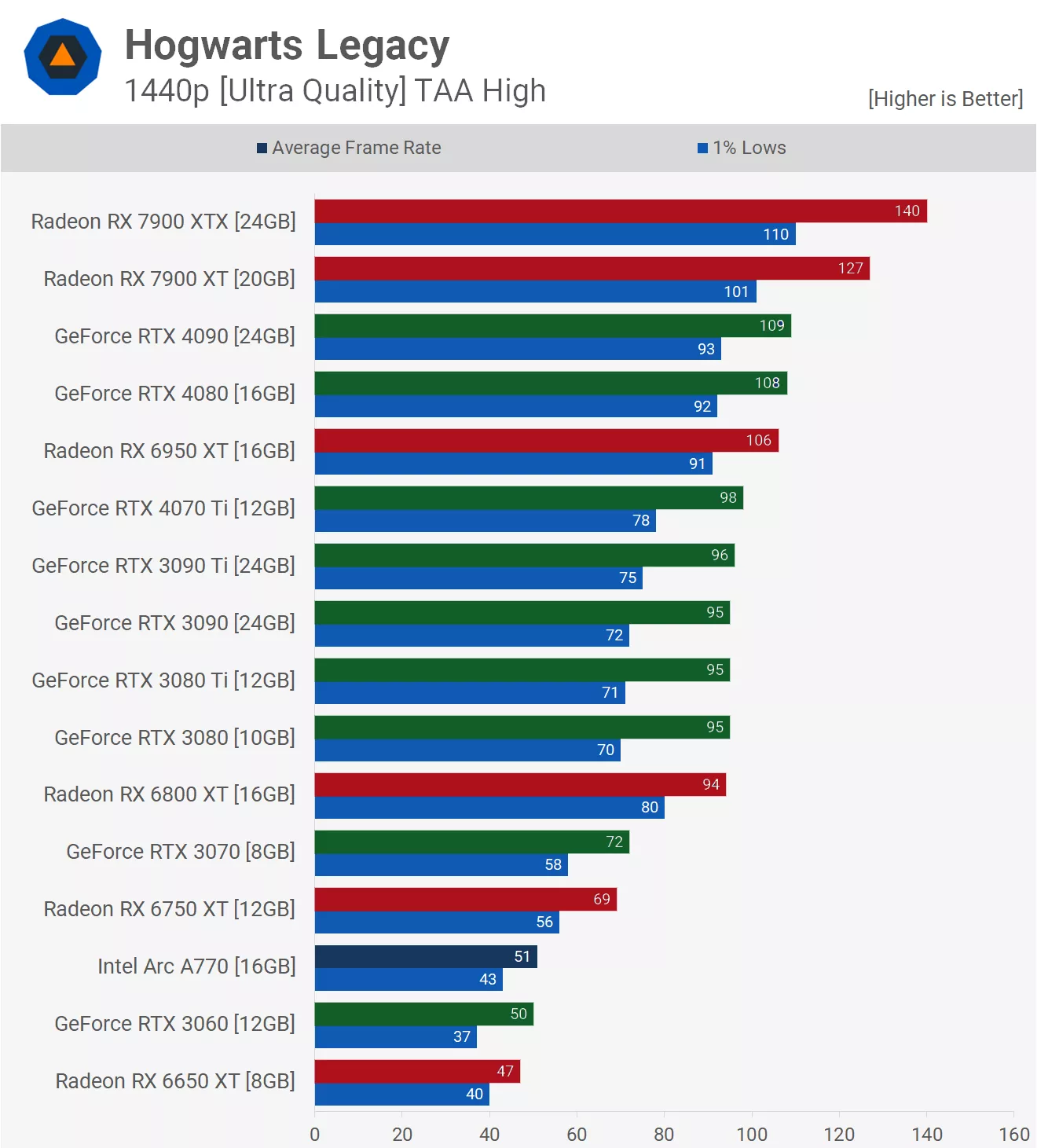

Then the RX 6800 XT came out with that beautiful (to me anyway) reference model. It was what the RTX 3080 should have been, with the same incredible performance from its GPU and a far more appropriately-sized 16GB VRAM buffer. Sure, the RT performance wasn't there, but let's be honest: the RT performance of the RTX 3080 wasn't very good either, and most people I know who own the RTX 3080 don't bother with RT, because when you compare low FPS with RT ON to high FPS with RT OFF, RT OFF wins every time. Sure, RT was USABLE on the RTX 3080, but it usually required either dropping the resolution to 1080p or using DLSS if you wanted a good gaming experience. In the end, to most people, it just wasn't worth it.

Now that 10GB isn't enough VRAM, especially for ray-tracing (something this card was paradoxically designed for), the comments I saw on the YouTube channel from (what I must assume are) RTX 3080 owners were both hilarious and pathetic at the same time. I saw one person calling the game "The worst-optimized game EVER!" while some others called the game "broken". This made me laugh because even an old card like the RX 580 can get 60fps at 1080p with a perfectly playable experience, which is not what happens with a game that really is badly optimized.

The best (read: worst) comment I read was from some buffoon complaining about how "AMD CPUs hinder the performance of GeForce cards.", which I know is a load of baloney because the most common combination of CPU and GPU in gamer rigs today is Ryzen and GeForce. So basically, this fool is trying to blame AMD (???) for the fact that a game requires more than 10GB of VRAM on its highest settings. If that statement had ANY truth to it, people wouldn't be using this hardware combination.

These comments are nothing more than lame attempts to deflect responsibility onto developers and AMD (???) and away from themselves, even though the reason they're in this situation is their own bad purchasing decisions. They chose to pay through the nose for a card that clearly had a pitifully small VRAM buffer, something that was blatantly obvious to anyone with more than five years of PC building/gaming experience.

Don't get me wrong, giving the RTX 3070 and RTX 3080 only 8GB and 10GB of VRAM respectively was a slimy move on nVidia's part considering what they were charging for them (slime is green after all... heheheh), but, unlike some of their other endeavours, they weren't the least bit secretive or dishonest about it. They never tried to obfuscate or mislead people when it came to how much VRAM these cards had. As a result, the onus is on consumers to find out whether 8/10GB is enough VRAM for their purposes in the mid- to long-term.

Of course, these people didn't end up doing that because they're too lazy, too stupid or both. It only takes an hour or so of reading to get at least somewhat informed, informed enough to see the pitfall in front of them. Nope, they decided that they didn't need to be the least bit careful when spending this kind of money, so they just went out and bought whatever they thought was fast and came in a green box.

It's not like nVidia put a gun to these people's heads. The ultimate decision (and therefore, the ultimate responsibility) in this case belongs 100% to the consumers. The relevant information was all there and easily accessible. Instead of behaving like children and trying to blame everyone else for their own screw-up, they should take it like grownups, eat their plate of crow and carry this lesson with them going forward. Otherwise, they'll just do it over and over and over again ad nauseam.

When I see behaviour like this, I thank my lucky stars that I'm not like that. Parents who spoil their kids aren't doing them any favours; they're just taking the easy way out, and this is the result.