At some point you may have heard someone say that for gaming you need X number of cores. Examples include "6 is more than enough cores" or "you need a minimum of 8 cores for gaming." Let's address that misconception.

How CPU Cores & Cache Impact Gaming Performance

- Thread starter Steve

Achaios

Posts: 478 +1,284

QUOTE

For example, games no longer run properly, or at all, on dual-core CPUs

UNQUOTE

This statement is completely false. My laptop has a 2C/4T Skylake CPU, and I can assure you that I game on it just fine when I am on the move.

I can also assure you, and provide examples, that games do run, and run properly, regardless.

Of course, my definition of "games" might be different from yours.

So how "fine" do these 6 games tested here run on your 2C/4T CPU?

Unless you mean Tetris, by your "definition of games"...

Theinsanegamer

Posts: 5,409 +10,147

I would LOVE to see proof of your Skylake dual-core laptop running the likes of CP2077, HZD, and RotTR, since you are so eager to provide proof...

While it may be true on the desktop that dual-core i3s with AVX are still technically capable of playing modern games at 60 FPS (I have a desktop at home that does this), especially unlocked ones like the i3-7350K that can be overclocked, they are really getting long in the tooth, and any major background task will derail game performance. Some games also just slog along with poor load times and frame times.

fadingfool

Posts: 330 +415

I'm assuming hyper-threading was still on during these tests? If so, does the cache size affect the performance gain with hyper-threading enabled versus disabled? In other words, how does cache size affect the performance of real cores vs. virtual cores? I would suspect that the larger the cache, the bigger the gain from hyper-threading, though I don't have the selection of hardware to test this.

From my own analysis, 6 threads are generally required in games these days to avoid a significant performance impact, though we are moving towards an 8-thread minimum (probably due to the console effect).

He probably means that many people (especially tech sites) over-focus on the same dozen AAA titles (Hitman, Tomb Raider, Far Cry, etc.) over and over in benchmarks, whilst the vast majority of the 30,000 games on Steam and 4,000 on GOG do indeed run just fine on quad-cores (and yes, most indies do run on dual-cores).

Perhaps it also needs pointing out that what gets hyped in hardware reviews and what most people actually play are not the same thing, and haven't been for several years now. We're only a few comments in and we're already seeing dumb snark along the lines of "Oh, yeah, show me Cyberpunk 2077 on a Celeron. It's that or Pong," which is literally not what the guy said at all. (Hint: do the 530,000 DOTA 2 gamers give a sh*t about what hardware the 7,300 (and falling) Cyberbug 2077 players think they 'must' own to be a "real" PC gamer?)

Achaios

Posts: 478 +1,284

"Unless you play Cyberfail 2077, u r not a real gamer and ur opinion doesn't count, pog"

Yeah, no. I don't think that gaming means what you think it means.

brucek

Posts: 1,716 +2,686

Interesting article!

Is it true that additional cache requires less wafer space than additional cores? If so it appears Intel is missing an opportunity to provide gamers with a higher performance, lower cost gaming CPU that is optimized around what drives gaming performance.

Toju Mikie

Posts: 279 +265

Also, be careful about sites like userbenchmark.com when looking at a new CPU to purchase. It makes quad-core CPUs like the i3-10320 look really good for gaming when they really aren't.

Agreed, games cover a huge area, not just triple-A titles.

If you can run even half the games featured in this content 'properly' on a dual-core, I'd like to buy this 20 GHz CPU you've stumbled upon.

Yeah, I mean if he took it to mean that I meant all games ever made, Commander Keen included, well... I should have been clearer.

Interesting piece!

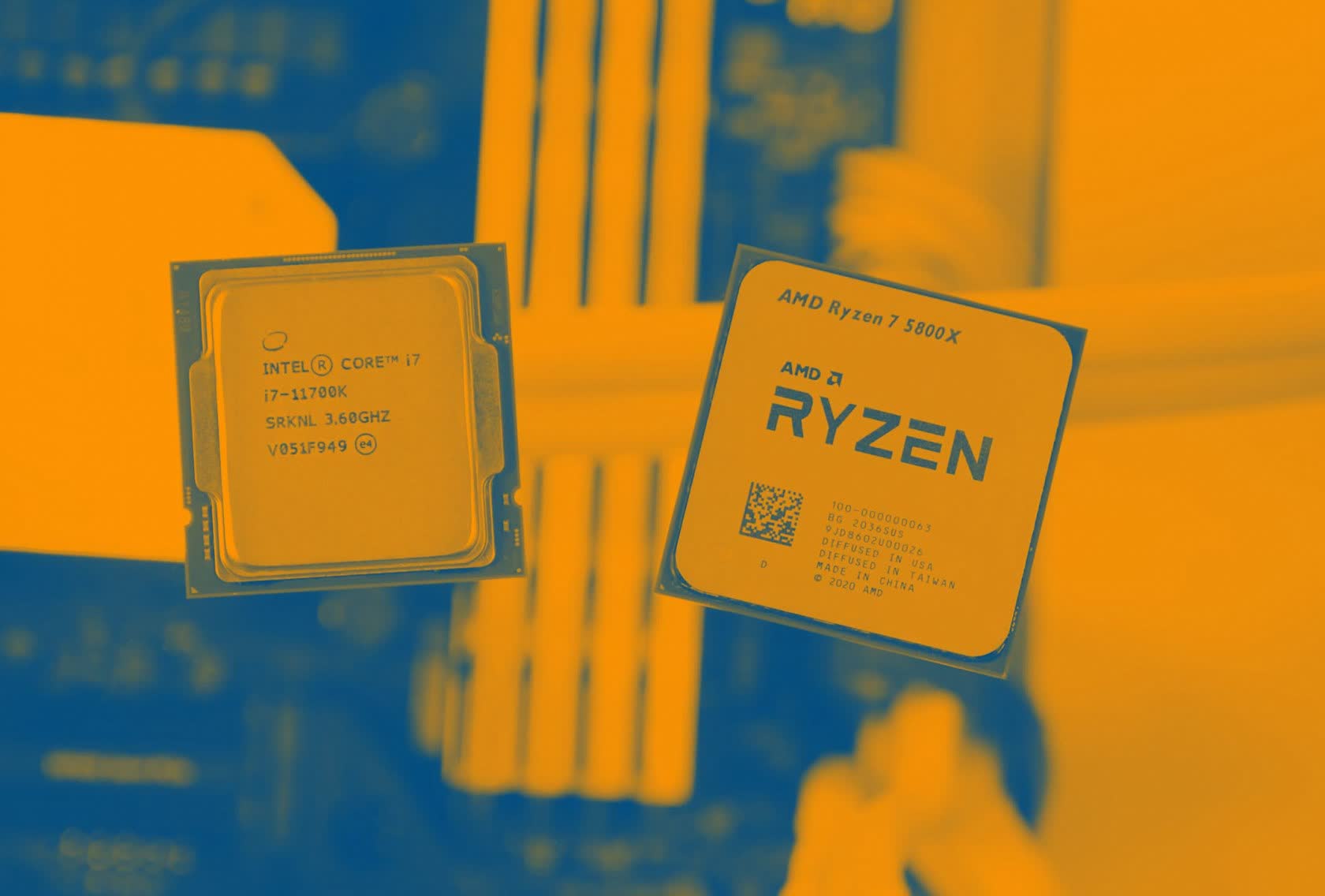

I went ahead and splurged on a 5800X when they came out last year, because the last time I built a PC everyone kept telling me I didn't need more than four cores. That was back when people were still running games on overclocked i5-2500Ks, which were still holding on after a few years. But maybe four or five years later I ran into games that maxed out my old quad-core, and I kept using it for another few years.

Partially I got the 5800X in anticipation of what I might be trying to run four years from now (and I've started using Handbrake again), and I think that's what scares some people: they upgrade CPUs much less frequently than GPUs, often keeping one CPU in a system for its entire lifetime, partly because newer ones may not work with their old motherboards.

elementalSG

Posts: 270 +481

Great article! I had been looking for someone to write an article about my question of whether I need 8 cores to run today's games or not, so I'm glad TechSpot finally answered it affirmatively today!

Shadowboxer

Posts: 2,074 +1,655

I've always found Cinebench single-core performance to be a good indicator of where a processor sits for gaming. There are other factors, though; some games seem to need a minimum core count. If we look back at those overclockable dual-core Pentiums, they ran very fast in some games and hopelessly slow in others.

Fastturtle

Posts: 145 +79

Funny thing is, I built my R5 1600 system four years ago (2017) and upgraded to a 3600 just before the supply chain blew up. Then, a few months ago, I got an opportunity to buy the 3600XT I'd originally wanted at below MSRP (great sale price). I couldn't be happier with it and the GP104 GTX 1060 (2018 version) I ended up with after the last one died, and yes, I run at 1440p now with Win10.

Ludak021

Posts: 775 +590

It's worse than stronger CPUs but stronger than weaker ones. It's stronger than the Ryzen 2200G/2400G, which many recommended for gaming...

hahahanoobs

Posts: 5,216 +3,072

You don't simply add more cache to a CPU. You design for it, in advance.

AMD is about to do it, but that's on an AMD design, and we haven't seen the final results yet.

hahahanoobs

Posts: 5,216 +3,072

I'll repeat what I wrote under the HUB video.

"You need 8 cores for gaming as much as you need a $1000 dGPU."

The video was great confirmation for those who were confused and for those, like myself, who just wanted to see the data, as the majority of us had already come to that conclusion just from reading all the reviews we've seen on the internet over time.

Lulz, that was you? I saw the comment but didn't note the name.

What I wonder about is where in HZD Steve tests, as I've tried the game on a number of minimally specced systems (R3 1200 4C/4T @ 3.7GHz, Core i7-4790 non-K 4C/8T @ 3.8GHz), and with a lot of machine AI on screen, the R3 dips into the mid-20s fps and the 4790 into the mid-30s fps, both at 100% CPU usage.

In other words, this game can be CPU-intensive enough that maintaining 60fps when you really need it may require a decent 6-core, though I'd love to know if an overclocked 3300X or an i3-10320 might be good enough. For science, of course, as I do have better PCs to play the game on.

Avro Arrow

Posts: 3,720 +4,821

No, it's not completely false.

Which is exactly why the statement wasn't completely false. Usually, when talking about games, we're referring to AAA-level titles like Far Cry, Assassin's Creed, Deus Ex, Hitman, F1, Dark Souls, etc., because it is generally accepted that older games like GTA5, Skyrim, etc. will play easily on any modern PC hardware. Discussing them would be more or less pointless at this time.

Naw, they're just making you buy more cores if you want more cache. Intel with their artificial market segmentation strikes again!

I don't think that anyone here is noobish enough to take loserbenchmark seriously.

Sure, but it's the AAA titles that require the most potent hardware. Other games can run on more or less anything that's even remotely modern.

MasterMace

Posts: 311 +271

You're gonna have a tough time convincing me that the game you are testing at 1080p isn't GPU-bottlenecked when the frame rates are the same across multiple CPUs on the same graphics card, and they steadily increase as you upgrade the GPU while staying flat across CPUs.

hahahanoobs

Posts: 5,216 +3,072

The 3300X at stock did well against 6- and 8-core parts. You couldn't really buy it, though, because it was almost never in stock. If you can get one, it might be worth it.

I ended up jumping and got a 2600, as the 3300X was just never available and the 5600X is really for high-end GPUs. Of course, afterwards I did see the 3300X available last week for about $140, which is a good price for it, but I don't need another CPU. I'll squeeze out what performance I can with the 2600 and 5600 XT and wait for the GPU market to even out in 2027 or whenever.

So... rediscovering what we already knew courtesy of Intel's 5th-gen i7-5775C Broadwell CPU? I've been enjoying my 4-core chips with 6MB of L3 and 128MB of eDRAM cache for years. When you disable the onboard Iris Pro 6200 GPU, that eDRAM becomes available in full to the CPU.

With the CPU overclocked to just 4GHz and the eDRAM to 2GHz, it frames up quite beastly using just a low-profile air cooler. I pair them with 2-slot cards, either a 2080 Ti or a 3080. Another positive is native compatibility with older Windows operating systems, and it can even run OS X on the right motherboard. Good streamers as well.

The caveat? You won't be winning in many multicore benchmarks but then again I only use these chips for SFF gaming rigs.
