Something to look forward to: Intel made a number of intriguing disclosures and bold proclamations during last week’s Intel Analyst Day, highlighting how it sees its future evolving. What made the information even more intriguing—and frankly, more compelling—is that they did so while also offering a refreshing level of honesty and transparency about challenges they’ve faced.

The manufacturing delays at both their 10nm and 7nm process nodes aren’t news, and the competitive challenges they’re facing from AMD, Arm architectures, and others are well known, but their responses felt new.

From CEO Bob Swan on down, the manner in which Intel executives talked about these issues made it clear that they’ve not only accepted them but also developed strategies to help overcome them.

On the manufacturing front, despite calls from some in the industry for Intel to get out of chip fabrication, or at least concerns about the reliability and stability of that part of the business, company executives were extremely clear: they have absolutely no intention of moving away from being an IDM (Integrated Device Manufacturer) that both designs and builds its own chips. And yes, they know they have to regain some of the trust they lost after slipping from their long-held position as the manufacturing process leader.

However, recent announcements on fundamental transistor improvements and innovative chip packaging technologies, coupled with the company’s long history of effort and innovation in these areas, give executives the confidence that they can compete and even win (see “Intel Chip Advancements Show They’re Up for a Competitive Challenge”).

The basic chip hardware strategy focuses on two key elements. The first is what Intel calls disaggregation: breaking up large monolithic chip designs into smaller chiplets that are connected via high-speed links and packaged together using a variety of different technologies.

The second is referred to generically as XPUs, but it essentially means a diversification of core chip architectures, with much greater support for more specialized “accelerator” silicon designs. Intel made it clear that CPUs will continue to be their primary focus, but they are greatly stepping up their efforts on GPUs with their Xe line, on various types of AI accelerators (particularly those that came with its acquisition of Habana Labs), and on FPGAs such as its Stratix line.

While each of these is interesting and important on its own, the real opportunity lies in their ability to work together. In a presentation from SVP and Chief Architect Raja Koduri, the company showed how the demands of diverse types of data analysis are far outpacing the performance trajectory of existing CPU designs. That’s why different chip architectures, with specialized capabilities better suited to certain aspects of these computing tasks, are so critical, and why more variety is needed.

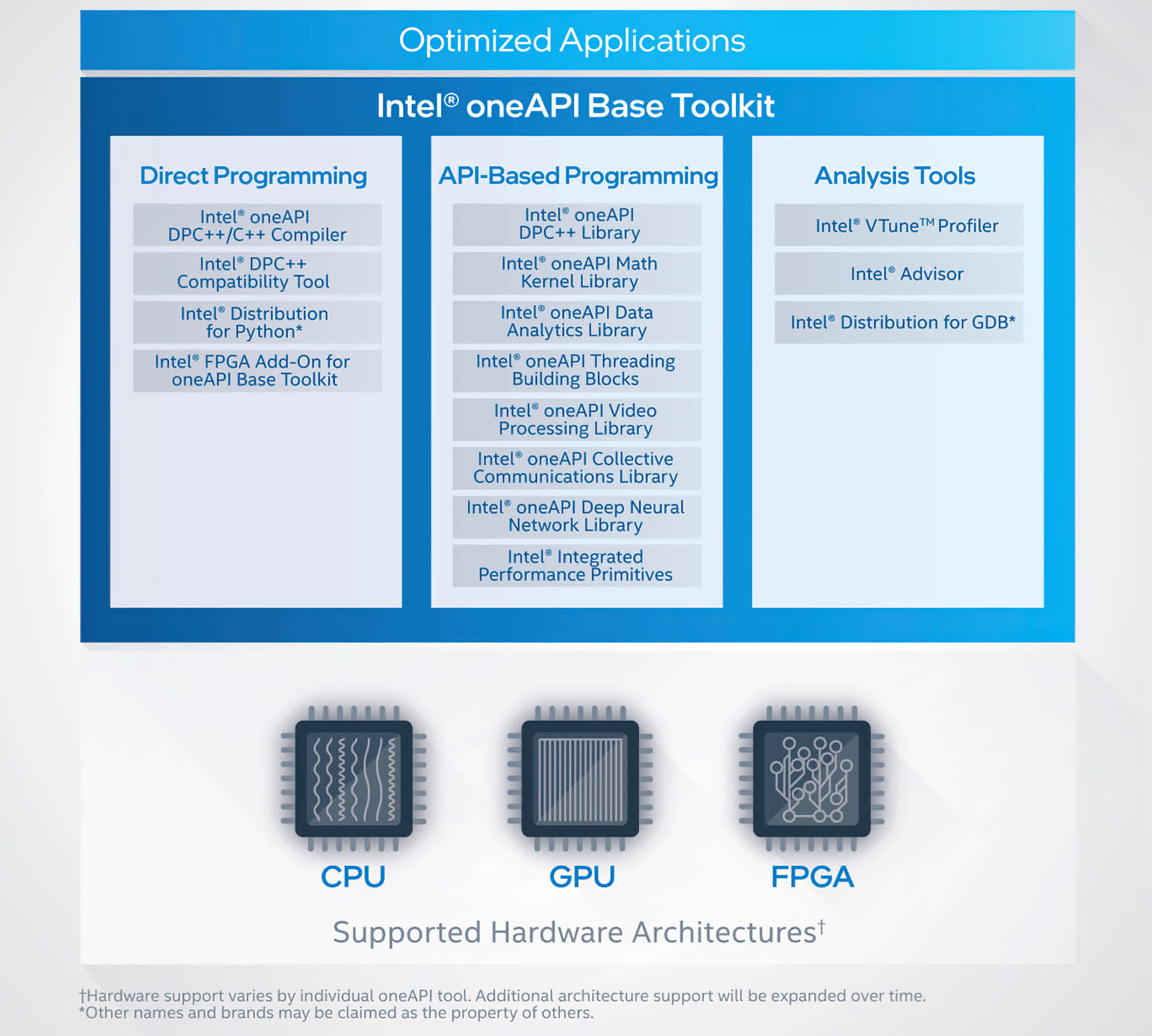

The real magic, however, can only happen with software that unites them all, and that’s where Intel’s oneAPI fits in. Originally announced two years ago, oneAPI is an open, standards-based, unified programming model designed to make it easier for developers to write software that takes advantage of the unique capabilities of these different chip architectures. Critically, it does so without requiring developers to know how to write code customized for each one. This is essential because only a limited number of developers can write software for any one of these accelerators, let alone for all of these different chip types.

The company achieves this by providing a hardware abstraction layer and a set of software development tools that do the incredibly hard work of figuring out which bits of code can run most effectively on each of the different chips (or, at least, on each of the components available in a given system). It’s a challenging goal, so it isn’t surprising that it has taken a while to come to fruition, but at last week’s Analyst Day, Intel announced that it had started shipping the oneAPI Base Toolkit, along with several other toolkits specialized for applications such as HPC (high-performance computing), AI, IoT, and rendering. It’s one step in a software strategy that will take a while to fully unfold, but it shows that Intel is bringing this audacious vision to life.
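To make the idea of a layer that works out “which bits of code can run most effectively on each of the different chips” a little more concrete, here is a toy model in Python. It is not how oneAPI works internally: oneAPI’s actual programming model is based on SYCL (Intel’s DPC++), where work is submitted to queues bound to specific devices. The `Task` class, the task names, and the millisecond cost figures below are all hypothetical. The sketch simply sends each task to the cheapest device that is present in the system and has an implementation for it, so a machine with no accelerators still runs everything on the CPU.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A unit of work plus hypothetical per-device cost estimates."""
    name: str
    # Estimated runtime in milliseconds on each device type. A device that is
    # missing from this dict has no implementation for the task.
    cost_ms: dict = field(default_factory=dict)


def assign(tasks, available_devices):
    """Map each task to the cheapest device that is both present and able to run it."""
    plan = {}
    for task in tasks:
        # Keep only devices that exist in this system and have an implementation.
        candidates = {dev: cost for dev, cost in task.cost_ms.items()
                      if dev in available_devices}
        if not candidates:
            raise ValueError(f"no available device can run {task.name!r}")
        plan[task.name] = min(candidates, key=candidates.get)
    return plan


# Hypothetical workload: names and numbers are illustrative only.
tasks = [
    Task("parse_records", {"cpu": 12.0}),
    Task("matrix_multiply", {"cpu": 40.0, "gpu": 5.0}),
    Task("inference", {"cpu": 30.0, "gpu": 8.0, "ai_accel": 3.0}),
]

print(assign(tasks, {"cpu", "gpu"}))
# prints {'parse_records': 'cpu', 'matrix_multiply': 'gpu', 'inference': 'gpu'}
```

Adding `"ai_accel"` to the set of available devices would move only the `inference` task, which is the kind of per-system decision the abstraction layer is meant to take off the developer’s hands.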

Equally interesting was an explanation of how Intel is making this seemingly magical cross-architecture technology work. Essentially, the company is applying the same principles and lessons it learned from adding instruction extensions to its CPUs (such as AVX and AVX-512) to additional chip architectures.
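One common piece of the tooling support for extensions like AVX and AVX-512 is runtime dispatch: a library ships several versions of a hot routine and picks the most capable one the CPU actually supports. The Python sketch below illustrates only that selection logic. It assumes Linux-style flag names from `/proc/cpuinfo` (`avx2`, `avx512f`) and returns an empty flag set on other systems. The variant functions are hypothetical placeholders that all run the same scalar code so the example works anywhere; real libraries compile each variant for its instruction set, typically in C or C++ (for instance, checking support with GCC’s `__builtin_cpu_supports`).

```python
def dot_scalar(a, b):
    """Portable baseline: plain element-wise multiply and sum."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical placeholders: in a real library these would be separately
# compiled AVX2 / AVX-512 variants. They reuse the scalar code here.
def dot_avx2(a, b):
    return dot_scalar(a, b)


def dot_avx512(a, b):
    return dot_scalar(a, b)


# Ordered from most to least capable. Each entry lists the CPU flags it needs;
# the scalar fallback needs none, so selection always succeeds.
VARIANTS = [
    ({"avx512f"}, "avx512", dot_avx512),
    ({"avx2"}, "avx2", dot_avx2),
    (set(), "scalar", dot_scalar),
]


def cpu_flags():
    """Read CPU feature flags from /proc/cpuinfo (x86 Linux); empty set elsewhere."""
    try:
        with open("/proc/cpuinfo") as f:
            for line in f:
                if line.startswith("flags"):
                    return set(line.split(":", 1)[1].split())
    except OSError:
        pass
    return set()


def select_dot(flags):
    """Return (name, function) for the first variant whose required flags are present."""
    for required, name, fn in VARIANTS:
        if required <= flags:
            return name, fn
    raise RuntimeError("unreachable: the scalar fallback has no requirements")


name, dot = select_dot(cpu_flags())
# The variant name depends on the machine; the result is 32 on every path.
print(name, dot([1, 2, 3], [4, 5, 6]))
```

Because every variant computes the same result, callers never need to know which one was chosen, which is the property that lets new hardware capabilities be adopted without rewriting application code.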

As Intel learned from those efforts, adoption of new capabilities can be slow unless there is full support across a range of development tools, such as compilers and performance libraries. That’s what Intel is delivering with its oneAPI toolkits in an effort to jumpstart adoption of the technology. In addition, the company has created a compatibility tool for porting code written in Nvidia’s popular CUDA language for GPUs, which gives a big head start to developers who already have CUDA experience or existing CUDA code.

Given that it was just released, the final performance and real-world effectiveness of oneAPI remain to be seen. However, it’s clear that Intel is moving forward with its strategy of unifying its increasingly diverse set of chip architectures through a single software platform. Conceptually, it’s been a very appealing idea since the company first unveiled it two years ago. Here’s hoping the reality proves to be equally appealing.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.

https://www.techspot.com/news/87980-intel-bets-future-software-manufacturing.html