Why it matters: Recent developments in hardware production have intensified the conversation regarding how much time Moore's Law has left. Nvidia has repeatedly declared its death, but AMD and Intel believe it has only slowed down and that numerous innovations can help new products maintain the performance improvements clients expect.

MIT recently posted a video of a talk from earlier this year where Intel CEO Pat Gelsinger commented on recent assertions that Moore's Law could end soon. Gelsinger believes the rule guiding chip production for almost six decades still holds but admits that it hasn't maintained its usual pace lately.

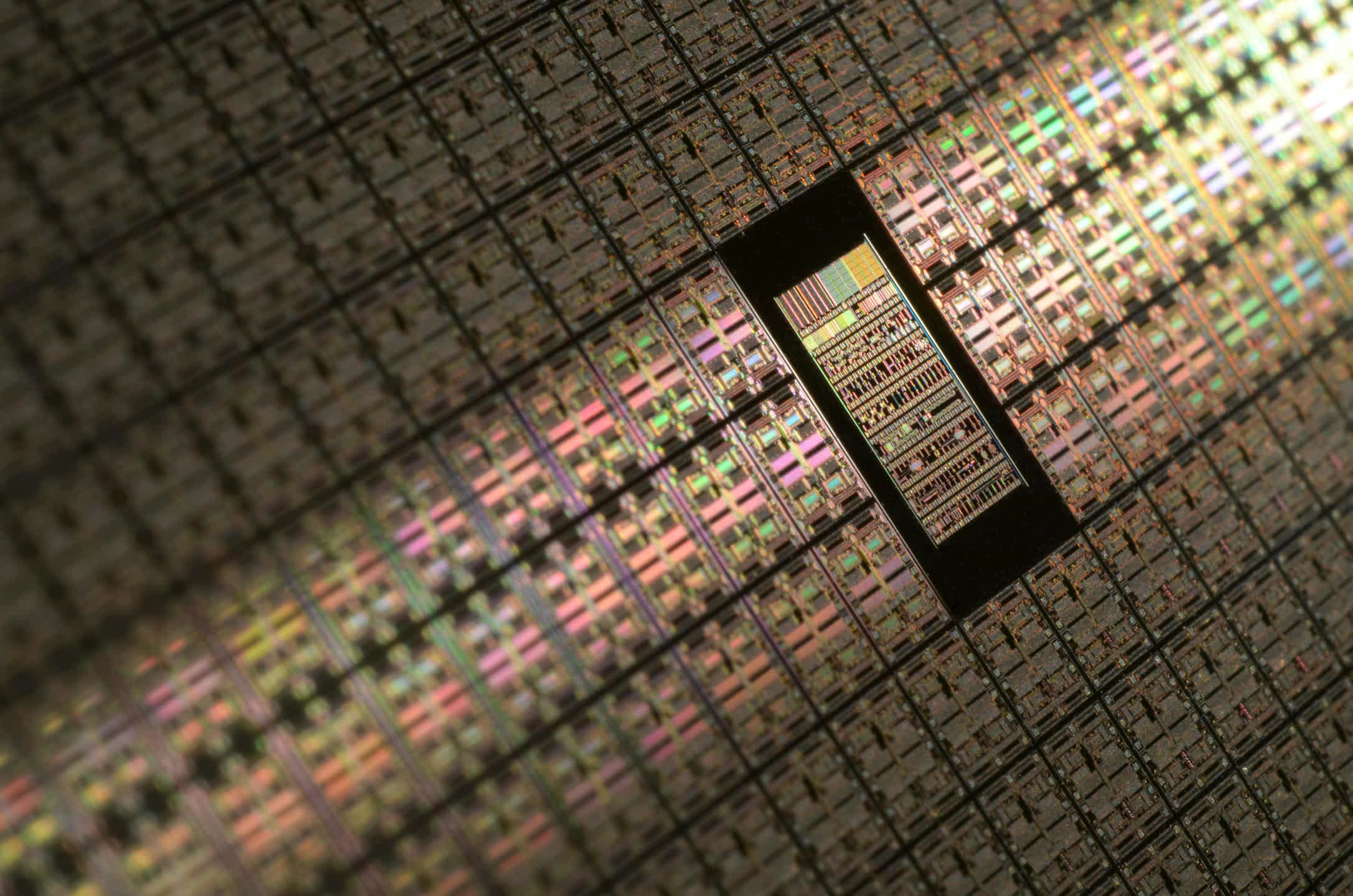

Moore's Law, first described by the late Intel co-founder Gordon Moore in 1965 and revised by him in 1975, postulates that the number of transistors on an integrated circuit will roughly double every two years. That rule has mostly held firm ever since, enabling reliable performance gains.

Were Moore's Law to end, as Nvidia CEO Jensen Huang has claimed numerous times since 2017, then building faster devices would theoretically require pumping more electricity into more transistors, significantly raising costs and energy consumption. Huang most recently declared Moore's Law dead in 2022, in response to criticism of the price increases between the company's RTX 3000 and RTX 4000 graphics cards.

Speaking at MIT, Gelsinger said that transistor counts have recently been doubling closer to every three years than every two, admitting that the "Golden Age" of Moore's Law is over. The rising costs of semiconductor fabs have been a central factor.

Gelsinger noted that the cost of a modern fab has grown from around $10 billion to $20 billion in the last seven or eight years. Consulting firm IBS recently predicted that a 2nm fab could cost about $28 billion – 50 percent more than a 3nm facility. The rising need for EUV lithography tools is a primary cause behind the growing expenses.
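To put those fab figures in perspective, the short Python sketch below works out what they imply. It assumes smooth compound growth between the $10 billion and $20 billion figures, which is a simplification and not something Gelsinger or IBS stated, and the function name `implied_annual_growth` is purely illustrative.

```python
def implied_annual_growth(start_cost, end_cost, years):
    """Compound annual growth rate that takes start_cost to end_cost in `years`."""
    return (end_cost / start_cost) ** (1 / years) - 1


# Gelsinger's figures: roughly $10 billion to $20 billion over seven or eight years.
# Assumption: costs grew at a steady compound rate over that span.
rate_7yr = implied_annual_growth(10e9, 20e9, 7)
rate_8yr = implied_annual_growth(10e9, 20e9, 8)

# IBS's figure: a $28 billion 2nm fab is 50 percent more than a 3nm fab,
# so the implied 3nm cost is 28 / 1.5.
implied_3nm_cost = 28e9 / 1.5

print(f"Implied annual growth over 7 years: {rate_7yr:.1%}")
print(f"Implied annual growth over 8 years: {rate_8yr:.1%}")
print(f"Implied 3nm fab cost: ${implied_3nm_cost / 1e9:.1f} billion")
```

Under that assumption, fab costs have risen by roughly 9 to 10 percent a year, and the IBS estimate implies a 3nm facility costs a little under $19 billion.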

Because performance uplifts are becoming harder to achieve, companies like Intel, AMD, TSMC, Samsung, and even Nvidia are devising tricks to increase efficiency. Gelsinger noted innovations like 3D packaging, gate-all-around transistors, backside power delivery, and lithography advances, echoing comments from AMD.

Hardware manufacturers have also begun adopting chiplet-based designs, which increase flexibility by allowing multiple semiconductor process nodes on a given product. Nvidia has become a primary proponent of AI and machine learning, introducing upscaling techniques to dramatically improve gaming performance.

Gelsinger declared that Intel hopes to progress from 100 billion transistors per package to 1 trillion before the end of this decade.
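A rough calculation shows how sensitive that target is to the doubling cadence. The sketch below assumes transistor counts per package grow exponentially at a fixed doubling period, which is an idealization; the helper name `years_to_reach` is hypothetical and not drawn from Intel's roadmap.

```python
import math


def years_to_reach(start, target, doubling_period_years):
    """Years needed to grow from start to target at a fixed doubling period."""
    doublings = math.log2(target / start)
    return doublings * doubling_period_years


start = 100e9   # 100 billion transistors per package
target = 1e12   # 1 trillion transistors per package

# Compare the classic two-year cadence with the three-year pace Gelsinger described.
for period in (2.0, 3.0):
    years = years_to_reach(start, target, period)
    print(f"Doubling every {period:g} years: about {years:.1f} years for a 10x increase")
```

A tenfold increase takes a little over three doublings, so a two-year cadence would get there in under seven years, while a three-year cadence would need about ten. Because the goal is stated per package and not per die, packaging techniques such as chiplets and 3D stacking could also contribute to closing that gap.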

https://www.techspot.com/news/101330-intel-ceo-pat-gelsinger-moore-law-isnt-dead.html