In context: Intel first announced the Sapphire Rapids line of Xeon processors in late 2019 and has released information about them at a trickle ever since. With up to 56 cores and in-package memory, they'll be Intel's largest and most powerful processors to date.

Intel has been steadily updating the relevant enterprise software in preparation for Sapphire Rapids for over a year. Some of that software is open source, which has made it a steady source of small leaks. Last week, a trawler found a reference to an unknown processor, the Intel W9-3495, in the boot log of a Linux kernel.

First, the name: Intel uses "W" for its workstation CPUs, for example, the W-3375. It has never put a digit directly after the "W" before, so that could mean a few things, but it looks a lot like the nomenclature used by the Core series: i5, i7, and i9. It might imply the existence of similar W7 or W5 CPUs with lower core counts.

Second, the specs. The boot log says that the W9-3495 has 56 cores / 112 threads and a base clock of 1.8 GHz (which likely isn't final). It also lists AMX and AVX-512 among the CPU's supported instruction sets.

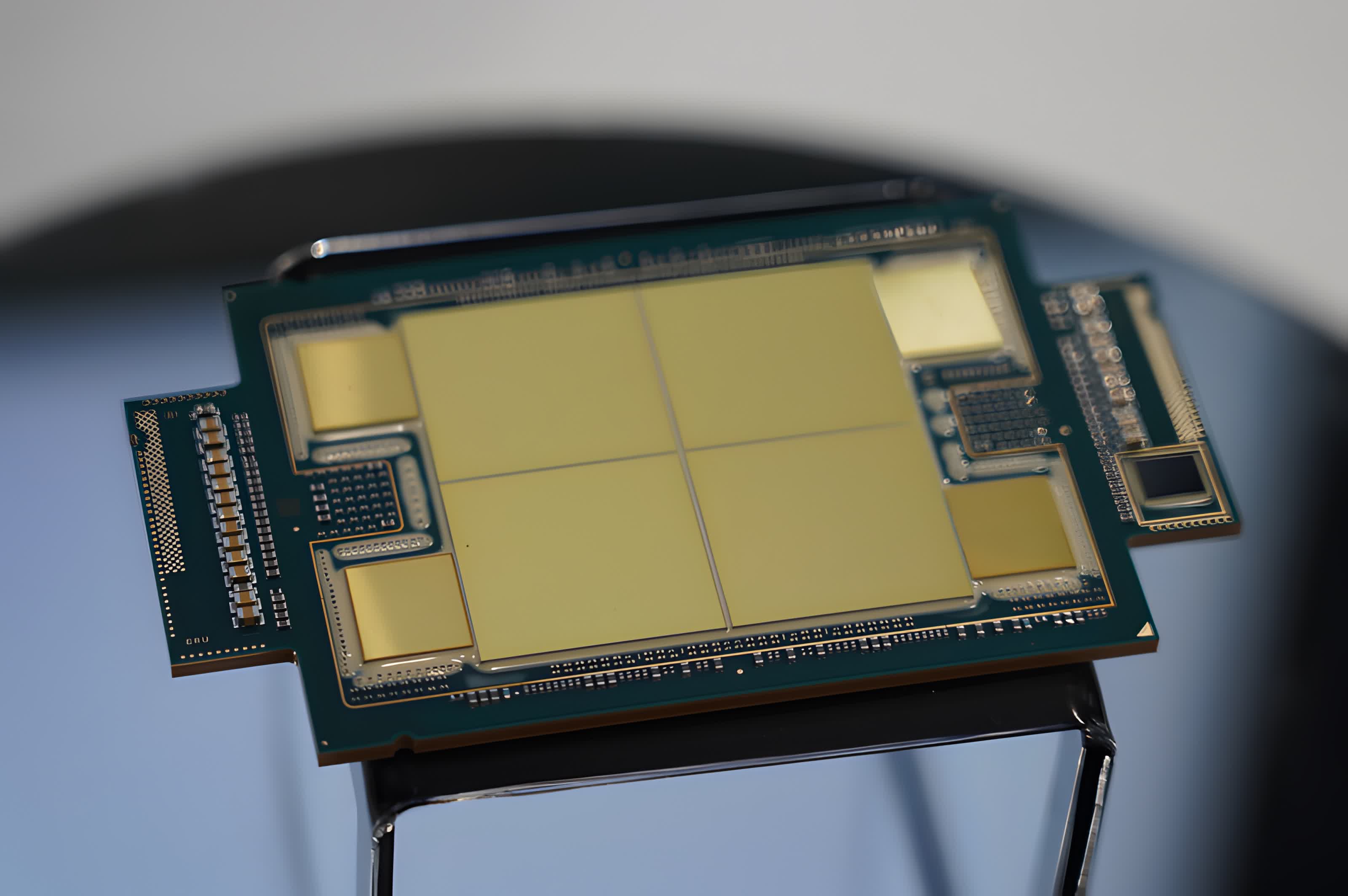

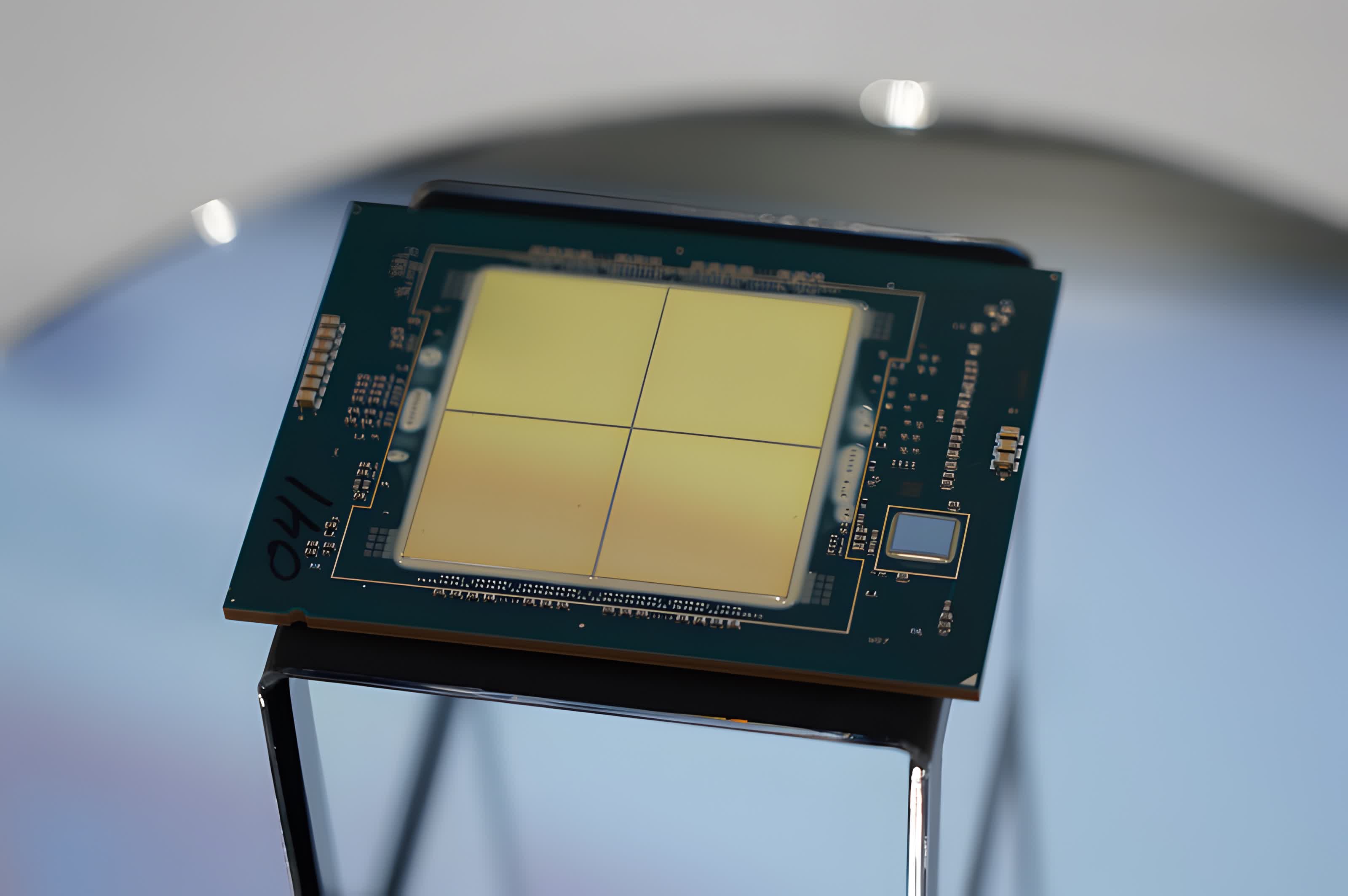

A Sapphire Rapids CPU without in-package memory.

However, the log doesn't say whether the W9-3495 has the 64 GB of in-package HBM2e memory that Sapphire Rapids has become known for, and I'd wager that it won't. HBM2e is prohibitively expensive, and it's also a selling point that Intel would likely reserve for its flagship data center processors.

Intel's workstation CPUs do usually have most of the features of their server-side counterparts, though. If the W9-3495 is no exception, then it will have eight channels of DDR5 and a mix of PCIe 5.0 and PCIe 4.0 lanes totaling 80. It will definitely use the Golden Cove architecture, the Intel 7 node (a rebrand of the mouthful that was the 10nm Enhanced SuperFin node), and the new LGA4677-X socket.

It will also be a mile above Intel's current offerings. Today's workstation flagship is the W-3375, which has only 38 cores / 76 threads and uses the Ice Lake architecture from late 2019 and the first-gen 10nm node. It's also stuck with DDR4 and PCIe 4.0. It costs a whopping $4,499 (ouch) and is only really available through OEMs, a fate the W9-3495 can hopefully avoid.

https://www.techspot.com/news/95402-intel-could-bringing-56-core-cpus-workstations.html