Forward-looking: In an era where it's clear that PCs still matter, Intel is making a statement about the future of the PC category with the much-anticipated launch of the Core Ultra chip (codenamed "Meteor Lake"). Not surprisingly, the direction is straight towards AI.

During Intel's "AI Everywhere" event in New York City this week, the company unveiled new Core Ultra CPUs for laptops and the 5th-gen Xeon CPU for servers and data centers. Both offer enhanced AI capabilities, along with significant improvements in power efficiency and performance.

Core Ultra combines several new technologies into a single SoC. In fact, Intel claims it's the company's biggest architectural leap in PC processors in about 40 years. The change likely to get the most attention is Intel's first integration of an NPU, or Neural Processing Unit, into an SoC design.

The NPU is specifically tasked with accelerating certain types of AI workloads, freeing the CPU and GPU (also built into the chip) to do other tasks. In the process, it enables new types of applications to run on PCs and improves the laptop's battery life and overall efficiency.

That's a pretty heady combination, and Intel is using it to herald the dawn of the "AI PC" era – perfectly timed to tap into the huge focus on generative AI that's swept over the tech industry and much of the world this past year.

Chief rival AMD actually shipped the first PC chip with a built-in NPU earlier this year, the Ryzen 7040, and just last week unveiled the updated Ryzen 8040 with a second-generation NPU.

However, given Intel's massive position in PCs (as well as the limited software support for AMD's initial NPU), there's little doubt that the first Core Ultra-based PCs – which are available as of today – will be seen by many as the first in this burgeoning new category.

From a practical perspective, most people will appreciate the more traditional enhancements Intel has made to the chip, even if they aren't as sexy as the NPU. Core Ultra combines up to six performance-focused P-cores and eight efficiency-focused E-cores, and it also adds two new ultra-low-power cores, grouped into what Intel calls a "low-power island," that can take over when system demands are light and extend battery life further.

The new GPU in Core Ultra is based on the Arc architecture, offers up to 8 Xe graphics cores and, according to Intel, delivers up to twice the performance of its previous integrated graphics solutions.

Core Ultra is interesting at a manufacturing level as well. Its CPU tile is the first chip Intel is building on its Intel 4 process technology (the second of the five process nodes the company has pledged to deliver in four years), and the overall package uses an advanced version of Intel's Foveros 3D chip-stacking technology.

Foveros allows Intel to combine multiple chiplets, each built on a different process node, into a single, more sophisticated SoC design.

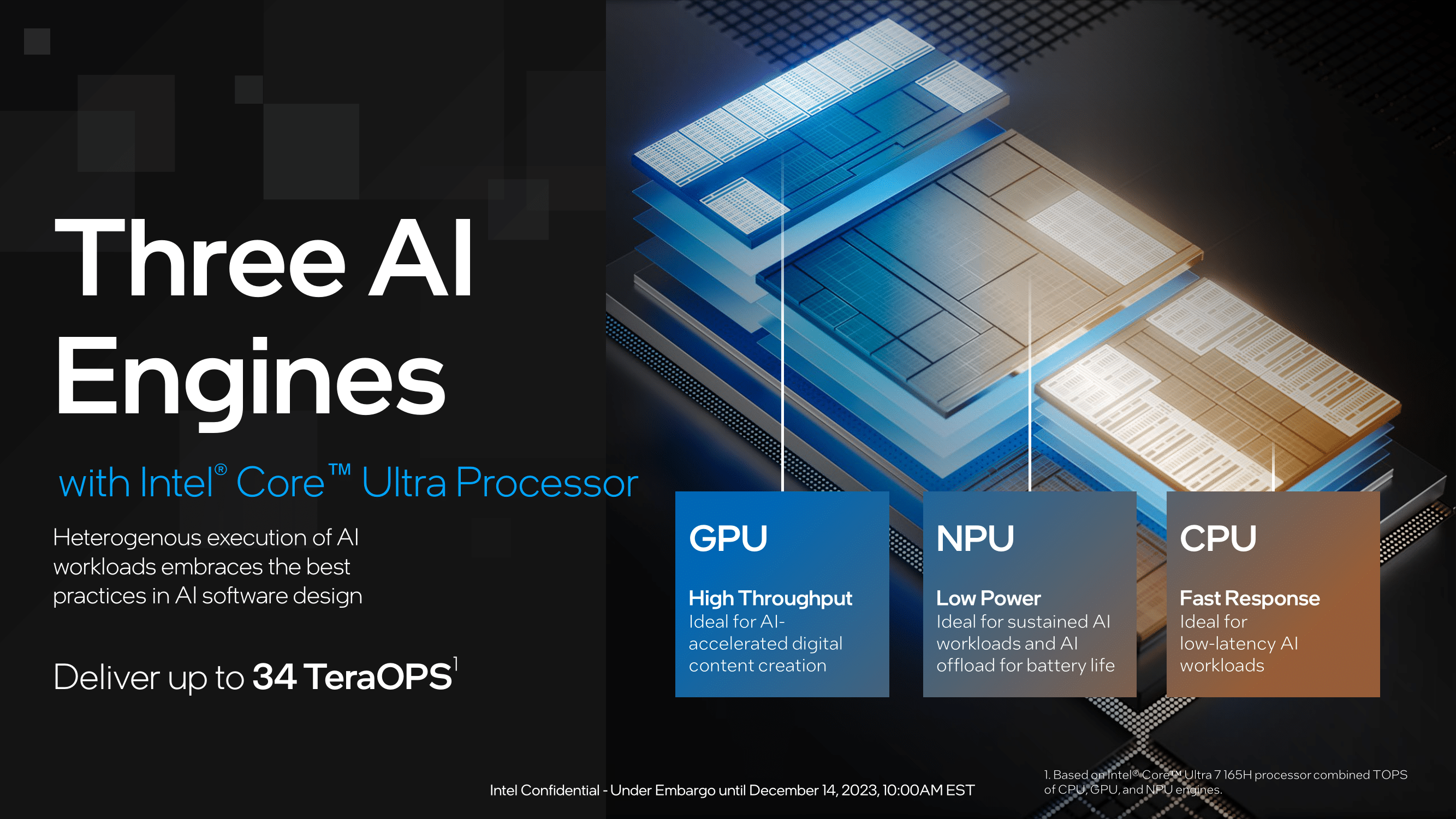

One of the key strategies Intel is using with Core Ultra, as part of its broader AI PC initiative, is to emphasize the collaborative functioning of the NPU, CPU, and GPU in handling AI-related workloads.

To date, the industry has predominantly focused on the TOPS (trillions of operations per second) rating of the NPU, with Qualcomm, for instance, showcasing its upcoming Snapdragon X Elite, which boasts an NPU rated at 45 TOPS. Intel's NPU in Meteor Lake is rated at 10 TOPS, while AMD's recently upgraded NPU in the Ryzen 8040 claims to reach 16 TOPS.

Notably, both Intel and AMD are shifting their focus to the total system TOPS, acknowledging that the CPU and GPU significantly contribute to these figures. Intel claims that Core Ultra can achieve up to 34 system TOPS, and AMD suggests up to 39 system TOPS with the Ryzen 8040.
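To make the system-TOPS arithmetic concrete, here is a minimal Python sketch that backs out how much of each claimed total would come from the CPU and GPU. It assumes the vendors' "system TOPS" figures are a simple sum of NPU, CPU and GPU peak numbers, which is an assumption for illustration only; neither company's exact methodology is described here. The function name `split_system_tops` is hypothetical.

```python
# Assumption (not from Intel or AMD): "system TOPS" is treated as the simple
# sum of NPU + CPU + GPU peak figures. Real vendor methodology may differ.
VENDOR_CLAIMS = {
    # name: (npu_tops, system_tops), figures as quoted in the article
    "Intel Core Ultra": (10, 34),
    "AMD Ryzen 8040": (16, 39),
}


def split_system_tops(npu_tops: float, system_tops: float) -> tuple[float, float]:
    """Hypothetical helper: return (TOPS attributed to CPU+GPU,
    NPU's fraction of the system total) under the simple-sum assumption."""
    cpu_gpu_tops = system_tops - npu_tops
    npu_fraction = npu_tops / system_tops
    return cpu_gpu_tops, npu_fraction


if __name__ == "__main__":
    for name, (npu, total) in VENDOR_CLAIMS.items():
        cpu_gpu, npu_frac = split_system_tops(npu, total)
        print(f"{name}: CPU+GPU ~{cpu_gpu} TOPS, NPU {npu_frac:.0%} of system total")
```

Under that assumption, roughly 24 of Intel's 34 system TOPS (the NPU accounting for about 29%) and 23 of AMD's 39 (the NPU about 41%) would come from the CPU and GPU, which is why both companies are keen to talk about the whole chip rather than the NPU alone.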

There's an enormous debate about the real value of TOPS numbers of any kind, but particularly for the NPU. To Intel's and AMD's credit, in practical use, AI-focused applications are already using the CPU and the GPU and will add support for NPUs over time. In other words, very few applications leverage only the NPU, so the current real-world value of NPU TOPS benchmarks is open to question.

This is particularly true because so much of the AI-related work being done on PCs right now is done via the cloud and doesn't do any local processing (think ChatGPT, Microsoft 365, etc.). Eventually, of course, the hope is that more of this work will start to be done locally, but as with most software-related efforts, it will take time.

In the meantime, there are a few examples of applications that can start to leverage the hardware available on Core Ultra. The most notable was Superpower, which can record everything you do and see on your PC, let you easily search through it, and generate new content (such as emails and documents) based on the documents and data stored on your own PC.

At present, the company is using a 7-billion-parameter version of Meta's Llama 2 foundation model, but discussions with the company's founders suggest other models could be used in the future. From an operational perspective, what's interesting about Superpower is that it uses both the onboard NPU and the CPU on Core Ultra, so it represents a good real-world example of how AI-focused apps are likely to evolve.

Unlike the Mac version of the app, which requires an internet connection to function, Superpower on Core Ultra-equipped PCs can run offline by leveraging the local LLM and the NPU. Another demo Intel created in conjunction with HP involved a gaming app that used the NPU to handle live streaming, freeing up the entire GPU for the game.

While these are interesting, the obvious question many people will still have is: what does this mean for the average business PC user or consumer?

Intel's EVP and GM of its client computing group, Michelle Johnston Holthaus, provided a refreshingly honest answer during a Q&A at the event: it's still early days, and they don't know for sure. There's little doubt that AI-enabled PCs will usher in an important new era for these devices. It's clear, however, that people are still figuring out exactly what that era will look like, and it's good to see Intel at least acknowledging that.

As the long-time industry leader in PC architectures, Intel's efforts to drive forward the idea of an AI-capable PC are definitely important and newsworthy. Between the company's enormous marketing efforts, its work with over 100 software developers to bring more AI-accelerated applications to market, and the huge infrastructure and ecosystem it has built over the past few decades, Intel can have an outsized influence on how AI-powered PCs come to market. Given what a critical transition this will likely prove to be, it's great to see the company taking these important first steps.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on X @bobodtech

https://www.techspot.com/news/101224-intel-refining-computing-vision-where-sees-pc-going.html