Why it matters: Just sneaking in before the end of Q1 2022, Intel are finally detailing their first Arc discrete GPUs, as promised. We've got all the details, including specs on the GPU dies themselves, the various product configurations that Intel are announcing first, and some of the exclusive features Intel will be bringing to their graphics family.

As expected, the first Intel Arc GPUs are destined for laptops, a departure from AMD and Nvidia, who usually launch desktop products first. However, Arc desktop cards are still coming. Intel is saying Q2 2022 for these products, and some of what we're looking at today applies to those cards, such as the GPU die details. Intel is also showing a small teaser for desktop add-in cards today, which we'll be covering separately.

Intel is preparing three tiers of Arc GPUs, with a naming scheme similar to what we see in its CPUs. Arc 3 sits at the low end as basic discrete graphics with somewhat higher performance than integrated; Arc 5 is the mainstream option, occupying an RTX 3060 type of position in the market; and Arc 7 covers Intel's highest performing parts.

We're not expecting Intel's flagship GPU to necessarily compete with the fastest GPUs from AMD or Nvidia today (RX 6800 and RTX 3080 tiers and above), so Arc 7 is probably going to top out around a mid- to high-end GPU in the market right now.

The first GPUs to hit the market will be Arc 3, coming in April, with Arc 5 and Arc 7 coming in the summer of 2022. It's looking like an "end of Q2" timeframe, when we'll likely also see desktop products.

Let's dive straight into the product specifications, and I'll start with the GPU dies. The two chips here are not the GPU SKUs we'll see in laptops, rather the actual die specifications that Intel is making in the Alchemist series.

The larger die is called ACM-G10, which is roughly the equivalent of Nvidia's GA104 or AMD's Navi 22. The smaller die is ACM-G11, which is closer in size to GA107 and Navi 24. Both are fabricated on TSMC's N6 node, as has been previously announced.

ACM-G10 features 32 Xe Cores and 32 ray tracing cores. If you're more familiar with Intel's older "execution unit" measurement for their GPUs, 32 Xe cores is the equivalent of 512 execution units with each Xe core containing 16 vector engines for standard shader work and 16 matrix engines primarily for machine learning work. Intel combines 4 Xe Cores into a render slice, so the top ACM-G10 configuration has 8 render slices.

We're also seeing 16 MB of L2 cache in the spec sheet, and a 256-bit GDDR6 memory subsystem. We're getting PCIe 4.0 x16 with this die, 2 media engines and a 4-pipe display engine so essentially supporting 4 outputs.

As for die size specifications, Intel told us this larger variant is 406 sq.mm and 21.7 billion transistors. Size-wise, this is larger than AMD's Navi 22, which is 335 sq.mm and 17.2 billion transistors. It ends up more around Nvidia's GA104, which is 393 sq.mm but less dense on Samsung's 8nm at 17.4 billion transistors.
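To put the density comparison in numbers, here is a quick back-of-the-envelope sketch that uses only the die sizes and transistor counts quoted above. The helper name `density_mtr_per_mm2` is ours, and the results are rough averages across each die rather than anything a vendor has published.

```python
# Die area (sq.mm) and transistor count (billions), as quoted in this article.
DIES = {
    "ACM-G10": (406, 21.7),
    "Navi 22": (335, 17.2),
    "GA104": (393, 17.4),
}


def density_mtr_per_mm2(area_mm2, transistors_billion):
    """Average density in millions of transistors per square millimetre."""
    return transistors_billion * 1000 / area_mm2


densities = {
    name: density_mtr_per_mm2(area, transistors)
    for name, (area, transistors) in DIES.items()
}

for name, value in densities.items():
    print(f"{name}: {value:.1f} MTr/sq.mm")
```

On these figures ACM-G10 comes out a little denser than Navi 22, and both are noticeably denser than GA104, which lines up with the point about Samsung's 8nm node.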

That should give you rough expectations here: the size and class of Intel's biggest GPU die is similar to the upper mid-tier dies from their competitors, which go into products like the RTX 3070 Ti and RX 6700 XT. Intel do not have a 500 sq.mm plus die this generation to compete with the biggest GPUs from AMD and Nvidia.

The smaller die is ACM-G11, which has 8 Xe cores and 8 ray tracing units, so this is 128 execution units or just 2 render slices. The L2 cache is cut down to 4 MB as a result, and the memory subsystem ends up as 96-bit GDDR6. However, while this GPU has just a quarter of the Xe cores of the larger die, we'll still be getting 2 media engines and 4 display pipelines, which will be very useful for content creators. It also features a PCIe 4.0 x8 interface; Intel did not make the mistake of dropping this to x4 like AMD did with their entry-level GPUs.

As for die size Intel is quoting 157 sq.mm and 7.2 billion transistors. This sits pretty much between AMD's Navi 24 and Nvidia's GA107. Navi 24 is tiny at just 107 sq.mm and 5.4 billion transistors. Nvidia hasn't spoken officially about GA107 but we've measured it to be roughly 200 sq.mm in laptop form factors.

With only 8 Xe cores, the ACM-G11 design isn't that much larger than the integrated GPU Intel includes with 12th-gen Alder Lake CPUs. Those top out at 96 execution units, making ACM-G11 just 33% larger, so this is firmly an entry-level GPU. However, ACM-G11 does benefit from features like GDDR6 memory and ray tracing cores, so there are more avenues for performance uplifts over integrated graphics than just having more cores.
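For anyone who wants to check the Xe core arithmetic for both dies, here is a small sketch. It assumes nothing beyond the ratios Intel quoted (16 vector engines per Xe core, each counted as one legacy execution unit, and 4 Xe cores per render slice); the function and variable names are ours.

```python
VECTOR_ENGINES_PER_XE_CORE = 16  # each one counts as a legacy "execution unit"
XE_CORES_PER_RENDER_SLICE = 4


def describe_die(xe_cores):
    """Convert an Xe core count into execution units and render slices."""
    return {
        "execution_units": xe_cores * VECTOR_ENGINES_PER_XE_CORE,
        "render_slices": xe_cores // XE_CORES_PER_RENDER_SLICE,
    }


acm_g10 = describe_die(32)
acm_g11 = describe_die(8)

# Alder Lake's largest integrated GPU has 96 execution units.
IGPU_EXECUTION_UNITS = 96
uplift_over_igpu = acm_g11["execution_units"] / IGPU_EXECUTION_UNITS - 1

print("ACM-G10:", acm_g10)
print("ACM-G11:", acm_g11)
print(f"ACM-G11 vs 96 EU iGPU: {uplift_over_igpu:.0%} more execution units")
```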

As for end products that consumers will be buying, there are five SKUs in total that use these two dies: the two Arc 3 products use ACM-G11, while the three GPUs split across Arc 5 and 7 will use ACM-G10. So when Intel says Arc 3 is launching now, this is clearly a launch for the ACM-G11 die while the larger ACM-G10 has to wait.

In the Arc 3 series, we have an 8 core option (the A370M) and a 6 core option (the A350M), both with 4GB of GDDR6. Interestingly, despite Intel just quoting a 96-bit memory bus for ACM-G11, that's being cut down for these products to just 64-bit to better align with 4GB of capacity. Were Intel to stick to 96-bit, these GPUs would have had to choose between 6 GB or 3 GB of memory in a standard configuration.
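The link between bus width and capacity is easy to sketch. This assumes the common GDDR6 arrangement of one memory chip per 32 bits of bus, with chips available in 1 GB and 2 GB densities. Those two figures are our assumptions about standard configurations rather than anything Intel stated, and the function name is hypothetical.

```python
CHIP_BUS_WIDTH_BITS = 32     # assumption: one GDDR6 chip per 32 bits of bus
CHIP_CAPACITIES_GB = (1, 2)  # assumption: 8 Gb and 16 Gb chip densities


def capacity_options_gb(bus_width_bits):
    """List the VRAM capacities reachable with one chip per 32-bit channel."""
    chips = bus_width_bits // CHIP_BUS_WIDTH_BITS
    return [chips * capacity for capacity in CHIP_CAPACITIES_GB]


for bus_width in (64, 96):
    print(f"{bus_width}-bit bus: {capacity_options_gb(bus_width)} GB")
```

Under those assumptions a 64-bit bus gives 2 GB or 4 GB, while a 96-bit bus gives 3 GB or 6 GB, which is the trade-off described above.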

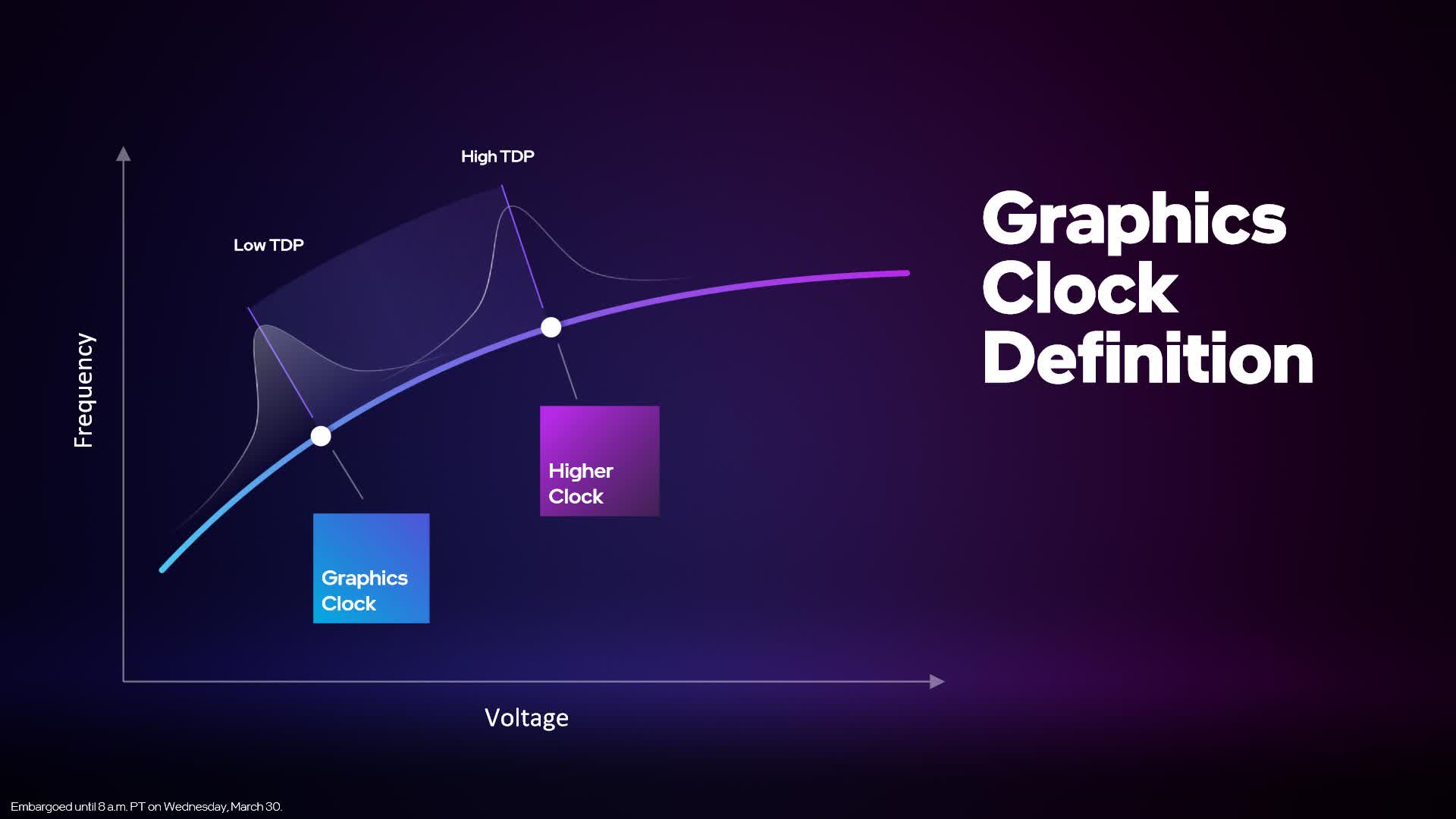

When it comes to clock speeds, the A370M is listed at 1550 MHz for its "graphics clock" while the A350M is at 1150 MHz. What is a graphics clock? Well this is very similar to AMD's game clock definition. Intel is saying the graphics clock isn't the maximum frequency the GPU can run at, rather the typical average frequency you'll see across a wide range of workloads. Specifically for these mobile products, the graphics clock listed on the spec sheet is in relation to the lowest TDP configuration Intel offers. So for the A370M with a 35-50W power range, the 1550 MHz clock is what you'll typically see at 35W, with 50W giving users a higher frequency. Intel is specifically being conservative here to avoid misleading customers: the clock speed is kind of a minimum specification.

Arc 5 with the A550M uses half of an ACM-G10 die, cut down to 16 Xe cores and a 128-bit GDDR6 memory bus supporting 8GB of VRAM. With a 60W TDP it'll run at a 900 MHz graphics clock, which is quite low, but there will also be up to 80W configurations.

Then for Arc 7, we get the full-spec configurations for top-tier gaming laptops. The A770M includes the entire ACM-G10 die with 32 Xe cores, 16GB of GDDR6 on a 256-bit bus, and a graphics clock of 1650 MHz at 120W. Intel says that some configurations of Arc can run at the 2 GHz mark or higher, so this is likely what we'll see for the 150W upper-tier variants. It's also good to see Intel being generous with the memory; 16GB really is what we should be getting for these higher-tier GPUs and that's what Intel is providing – expect similar for desktop cards.

The A730M is a cut down ACM-G10 with 24 Xe cores and a 192-bit memory bus supporting 12GB of GDDR6. Its graphics clock is 1100 MHz with an 80-120W power range. So between all of these products, Intel is covering all the usual laptop power options from 25W right through to 150W.

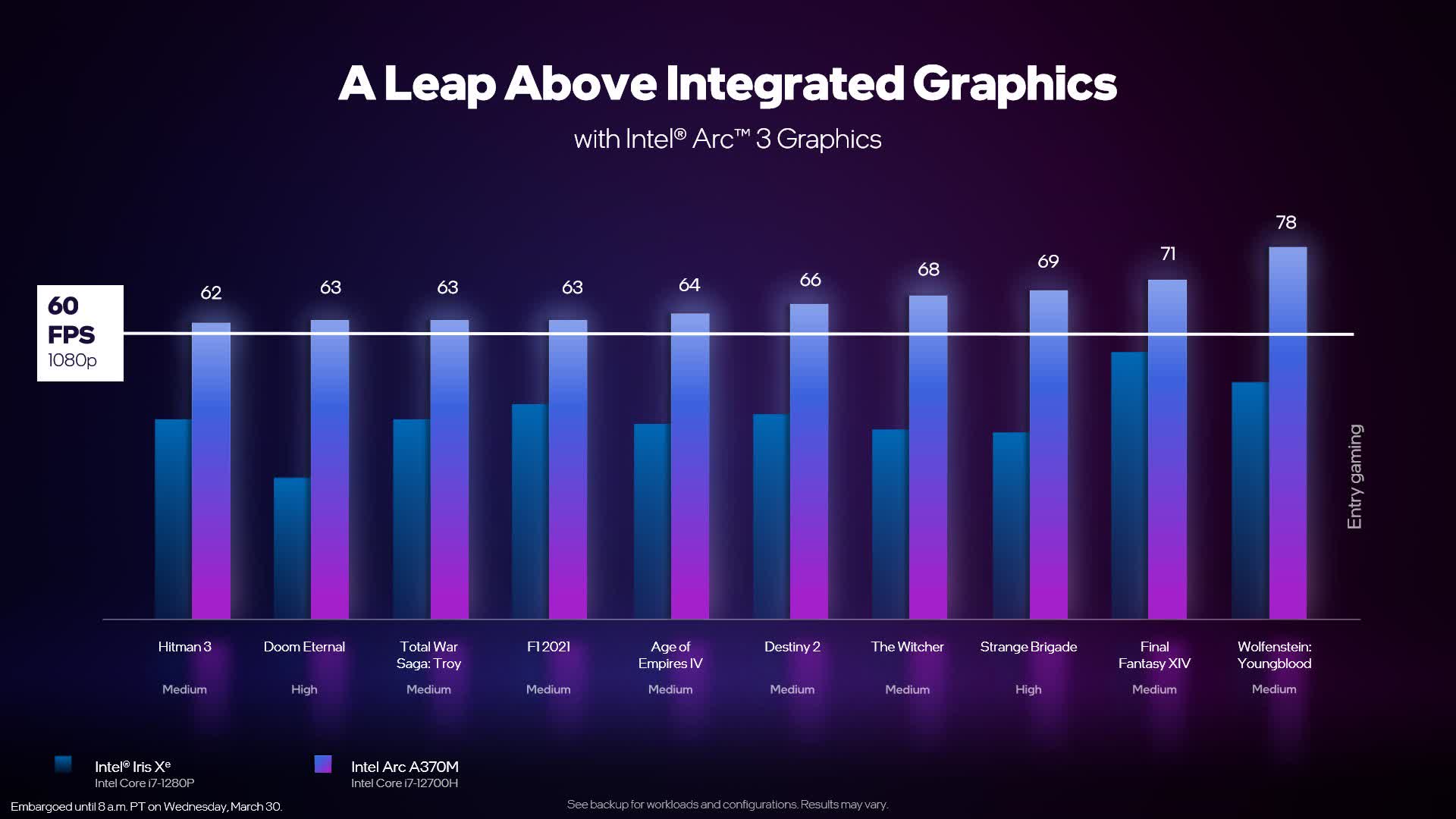

As Intel is mostly focusing on the Arc 3 launch for now, we did get a few performance benchmarks comparing the A370M in a system with Intel's Core i7-12700H to the integrated 96 execution unit Xe GPU in the Core i7-1280P. The Arc A370M is not exactly setting a high performance standard, with Intel targeting just 1080p 60FPS using medium- to high-quality settings. However, this is a very basic low-power discrete GPU option for mainstream thin and light laptops. Depending on the game we appear to be getting between 25 and 50 percent more performance than Intel's best iGPU option, although Intel didn't specify what power configuration we are looking at for the A370M.

However, I do have question marks over whether the A370M will be faster than the new Radeon 680M integrated GPU AMD offers in their Ryzen 6000 APUs. We know this iGPU as configured in the Ryzen 9 6900HS is approximately 35% faster than the 96 execution unit iGPU in the Core i9-12900HK, so it's quite possible that the A370M and Radeon 680M will trade blows. This wouldn't be a particularly amazing outcome for Intel's smaller Arc Alchemist die, so we'll have to hope that it does outperform the current best iGPU option when we get around to benchmarking it. Intel didn't offer any performance estimates for how their products compare to either AMD or Nvidia options, and usually Intel doesn't mind comparing their stuff to their competitors, so this is a bit of a red flag.

Alright, let's now breeze through some of the features Arc GPUs will be offering. The first is that the Xe cores themselves are able to run floating point, integer and XMX instructions concurrently. The vector engines themselves have separate FP and INT units, so with modern GPUs you'd expect concurrent usage and that's possible here in keeping with other architectures.

The media engine appears to be extremely powerful. These Arc GPUs are the first to offer AV1 hardware encoding acceleration, so this isn't just decoding like AMD and Nvidia's latest GPUs offer, this is full encoding support as well. We also get the usual support for H.264 and HEVC with up to 8K 12-bit decodes and 8K 10-bit encodes.

AV1 encoding support is huge for moving forward the AV1 ecosystem, especially for content creators that might want to leverage the higher coding efficiency AV1 offers over older codecs. However, Intel's demo here was a bit bizarre, showing AV1 for game streaming purposes. It's all well and good that Arc GPUs can stream Elden Ring in an AV1 codec, but this isn't actually useful in practice right now, as major streaming services like Twitch and YouTube don't support AV1 ingest. In fact, Twitch right now doesn't even support HEVC, so I wouldn't hold your breath for AV1 support any time soon. Arc GPUs supporting this will be much more useful for creator productivity workloads for now.

While the media engine is looking great, the display engine… not so much. Intel is supporting DisplayPort 1.4a and HDMI 2.0b here, and claiming it's DisplayPort 2.0 10G ready. However, there is no support for HDMI 2.1, which is a pretty ridiculous omission given the current state of GPUs. Not only has the HDMI 2.1 specification been available since late 2017, it's been integrated into both AMD and Nvidia GPUs since 2020, with a wide variety of displays now including HDMI 2.1. Using last-generation HDMI is a terrible misstep and hurts compatibility with TVs in particular that usually don't offer DisplayPort.

Intel's solution for OEMs wanting to integrate HDMI 2.1 is building in a DisplayPort to HDMI 2.1 converter using an external chip, but this is hardly an ideal solution, especially for laptops that are constrained on size and power. Intel didn't want to elaborate too much on the HDMI 2.1 issue, so I'm still not sure whether this only applies to certain products. I'd be pretty disappointed if their desktop Arc products don't support HDMI 2.1 natively, and it's not looking good in that area.

Another disappointing revelation from Intel's media conference was in relation to XeSS. Intel is showing support for 14 XeSS titles when the technology launches with Arc 5 and 7 GPUs this summer. However, there's a catch: the first implementation of XeSS will only support Intel's XMX instructions and therefore be exclusive to Intel GPUs. As a refresher, XMX are Intel's Xe Matrix Extensions, basically an equivalent to Nvidia's Tensor operations, which are vendor exclusive and vendor optimized. As the XMX path is designed to run on the matrix engines in Arc's Xe cores, it only runs on Arc GPUs.

XeSS will eventually support other GPUs through a separate pipeline, the DP4a pipeline which will work on GPUs supporting Shader Model 6.4 and above, so that's Nvidia Pascal and newer, plus AMD RDNA and newer. However, Intel mentioned in a tidbit that the DP4a version will not be available at the same time as the XMX version, with the initial focus going towards XeSS via XMX on Intel GPUs. This is despite Intel previously saying XeSS uses a single API with one library that then has two paths inside for each version depending on the hardware. It seems that while this may be the goal eventually, possibly a second iteration of XeSS or an update down the line, the initial XeSS implementation is XMX only.
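For context on what DP4a actually is: it's a GPU instruction that takes two 32-bit words, treats each as four packed 8-bit integers, multiplies them pairwise and adds the sum to a 32-bit accumulator. The sketch below emulates the signed variant in plain Python purely to show the arithmetic. The helper names are ours, it ignores 32-bit overflow, and it says nothing about how XeSS itself uses the instruction.

```python
def to_int8(byte):
    """Interpret an unsigned byte (0..255) as a signed 8-bit integer."""
    return byte - 256 if byte >= 128 else byte


def pack_int8x4(lanes):
    """Pack four signed 8-bit values into one 32-bit word, lowest lane first."""
    word = 0
    for index, value in enumerate(lanes):
        word |= (value & 0xFF) << (8 * index)
    return word


def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit lanes."""
    return [to_int8((word >> (8 * index)) & 0xFF) for index in range(4)]


def dp4a(a_word, b_word, accumulator):
    """Four-way int8 dot product added to an accumulator (overflow ignored)."""
    a_lanes = unpack_int8x4(a_word)
    b_lanes = unpack_int8x4(b_word)
    return accumulator + sum(a * b for a, b in zip(a_lanes, b_lanes))


a = pack_int8x4([1, -2, 3, 4])
b = pack_int8x4([5, 6, -7, 8])
result = dp4a(a, b, 10)  # 1*5 + (-2)*6 + 3*(-7) + 4*8 + 10
print(result)
```

Because this is just packed integer math, it can run on ordinary shader hardware, which is why the DP4a path is the one that can work on competitor GPUs.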

This isn't good news for XeSS and could make the technology dead on arrival. Around the time XeSS is supposed to launch, AMD will be releasing FidelityFX Super Resolution 2.0, which is a temporal upscaling solution that will work on all GPUs at launch. I don't see the incentive for developers to integrate XeSS into their games if it only works on Arc GPUs, which will only be a minuscule fraction of the total GPU market, especially if they could use FSR 2.0 instead. Intel can't afford to go down the DLSS path with exclusivity. That works for Nvidia as they have a dominant market share and developers integrating DLSS know they can at least target a large percentage of customers. Intel doesn't have that in the GPU market yet and won't any time soon, so releasing the version of XeSS that works on competitor GPUs is crucial for adoption of that technology.

One technology Intel talked about did catch my eye though, and that's smooth sync. This is a technology built into the display engine that can blur the boundary between two frames when you're playing in a Vsync-off configuration. This is mostly meant for basic fixed refresh rate displays where you still want to game at a high frame rate above the monitor's refresh rate for latency benefits. Intel says this only adds 0.5ms of latency for a 1080p frame. Unfortunately, the demo image here is simulated, but I'll be keen to check out how effective this is in practice.

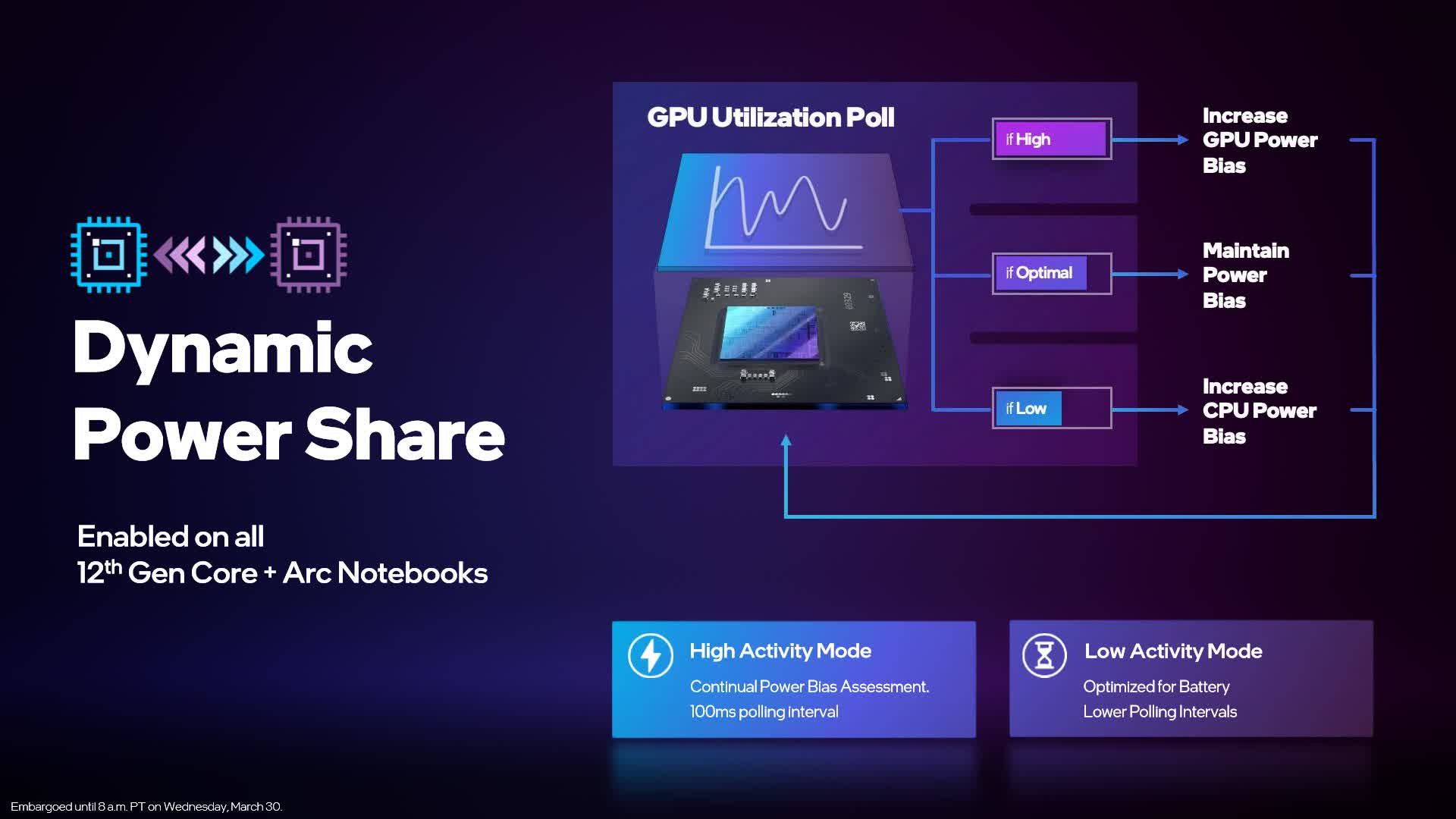

Intel also announced a technology called Dynamic Power Share, which is essentially a copy of AMD's SmartShift and Nvidia's Dynamic Boost designed to work with laptops that have Intel CPUs and Intel GPUs. As we've seen from SmartShift and Dynamic Boost, these technologies balance the total power budget of a laptop between the CPU and GPU depending on the demands of the workload, and that's exactly what Dynamic Power Share brings.

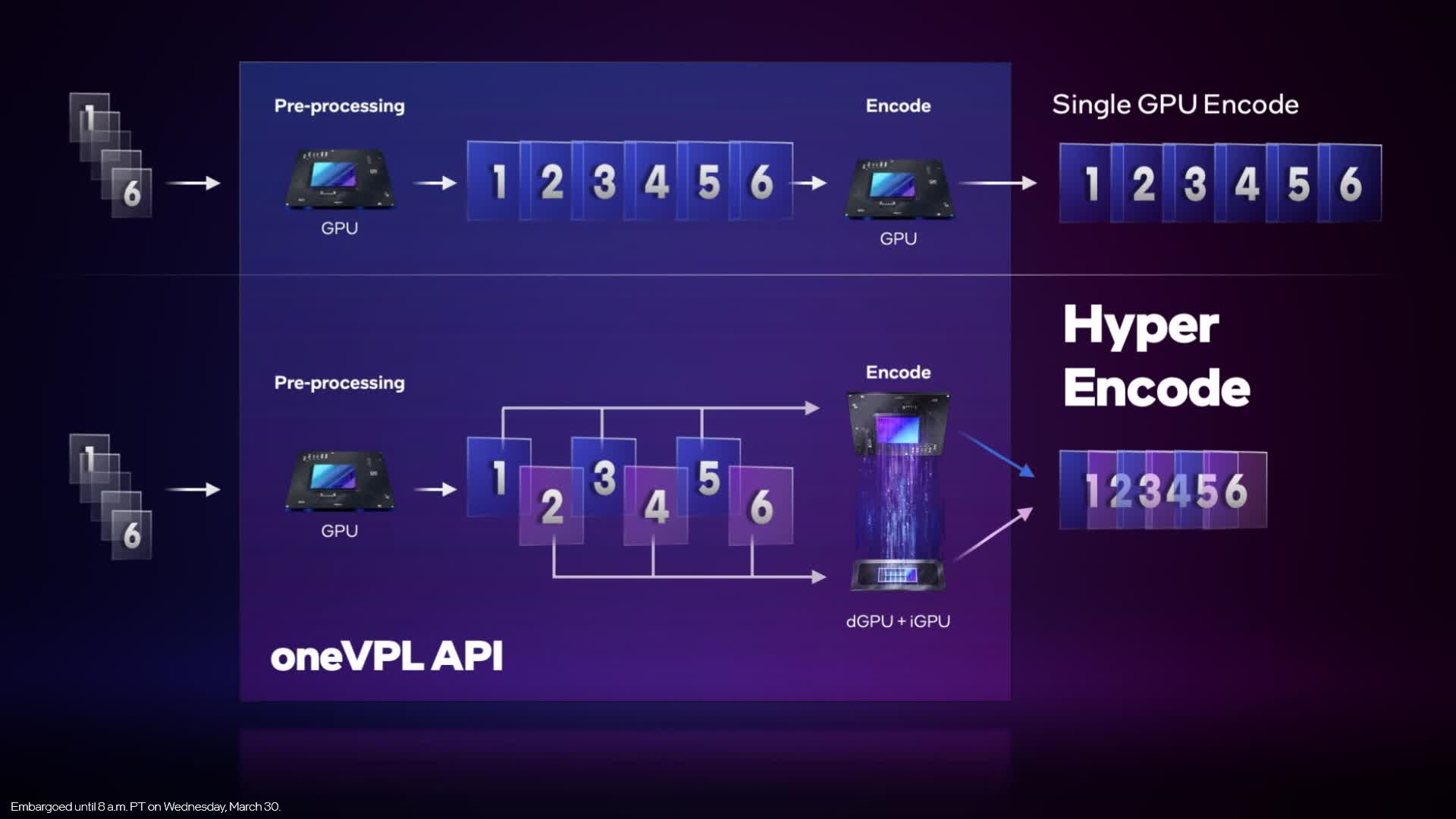

Then we have two other technologies, one called Hyper Encode which is able to combine the media encoding engine on an Intel CPU with the encoding engine on the GPU for increased performance. In supported applications using the oneVPL API, this essentially splits up the encoding process between the two engines before stitching them back together. Intel says this can provide up to a 60% performance uplift over using one encoding engine. And there's a similar tech for compute called Hyper Compute which offers up to 24% more performance.
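To picture the split-and-stitch idea behind Hyper Encode, here is a toy sketch. It is not the oneVPL API and not Intel's actual scheduling: the chunk size, the round-robin assignment and the `fake_encode` placeholder are all our own assumptions, and the chunks are processed one after another here, whereas real hardware would encode them in parallel.

```python
def split_into_chunks(frames, chunk_size):
    """Cut the frame sequence into consecutive chunks."""
    return [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]


def fake_encode(engine_name, chunk):
    """Placeholder for a hardware encoder: tag each frame with its engine."""
    return [f"{engine_name}:{frame}" for frame in chunk]


def hyper_encode_sketch(frames, chunk_size=4, engines=("igpu", "dgpu")):
    """Alternate chunks between engines, then stitch them back in order."""
    chunks = split_into_chunks(frames, chunk_size)
    encoded_chunks = []
    for index, chunk in enumerate(chunks):
        engine = engines[index % len(engines)]  # hypothetical round-robin
        encoded_chunks.append(fake_encode(engine, chunk))
    # Stitching: concatenate the encoded chunks in their original order.
    return [frame for chunk in encoded_chunks for frame in chunk]


stream = hyper_encode_sketch(list(range(10)))
print(stream)
```

The point of the sketch is simply that the output stays in the original frame order even though two different engines handled alternating pieces of it.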

Lastly, Intel showed off their new control center as part of their driver suite called Arc Control, which will be available for all of their GPU products. Intel's driver suite did need a bit of an overhaul and is a key point of contention for buyers looking at an Arc GPU vs. Nvidia or AMD. Arc Control is set to at least improve their interface in offering features like performance metrics and tuning, creator features like background removal for webcams, built in driver update support and of course all the usual settings. And unlike GeForce Experience, it won't require a user account or login.

The bigger concern for Intel is more driver optimization in the games themselves and there's nothing really that Intel can say here to satisfy buyers until reviewers can see how these products perform across a wide range of games. Intel say they will be providing day-1 driver updates in line with what Nvidia and AMD have been doing and have been putting a lot of work into developer relations, but this is a mammoth task for a new GPU vendor and something both AMD and Nvidia have had to build up over time, so it will definitely be interesting to see where it all lands when Arc GPUs are available to test.

And that's pretty much it for Intel's Arc GPU announcement. Bit of a mixed bag if I'm honest here: there are definitely some positive points and things to look forward to, but also some disappointments around the technologies and features. With Intel only ready to launch Arc 3 series GPUs at this point rather than the more powerful Arc 5 and 7 series, which appear at least 3 months away, in some respects it feels like this launch was mostly about Intel fulfilling their promise of a Q1 2022 launch for Arc – I'm sure ideally Intel wouldn't be launching low-end products first. We still have yet another wait to see what the big GPUs have in store for us.

With that said it was good to see some actual specifications for Arc GPU dies and SKUs at this point, including memory configurations, which have lined up relatively well with a lot of what the rumors were saying. I'm also glad to see Intel pushing ahead with AV1 hardware encoding acceleration, the first of any vendor to offer that feature.

However, I was pretty unimpressed to see the lack of HDMI 2.1 support, and the news that XeSS will be launching only with Intel-exclusive XMX support to begin with. That combined with delays for high-end SKUs that push closer to the launch of next-gen Nvidia and AMD GPUs means there are certainly a lot of hurdles for Intel to overcome with this launch. But either way we'll hopefully be able to benchmark Arc discrete graphics soon.

https://www.techspot.com/news/93976-intel-lifts-lid-arc-laptop-gpus.html