I can easily imagine a $499 price for the 4060. Ngreedia will strike again, no doubt.

It's not going to be $500, it's going to be $600 and be on par with the 3050, not the 3060.

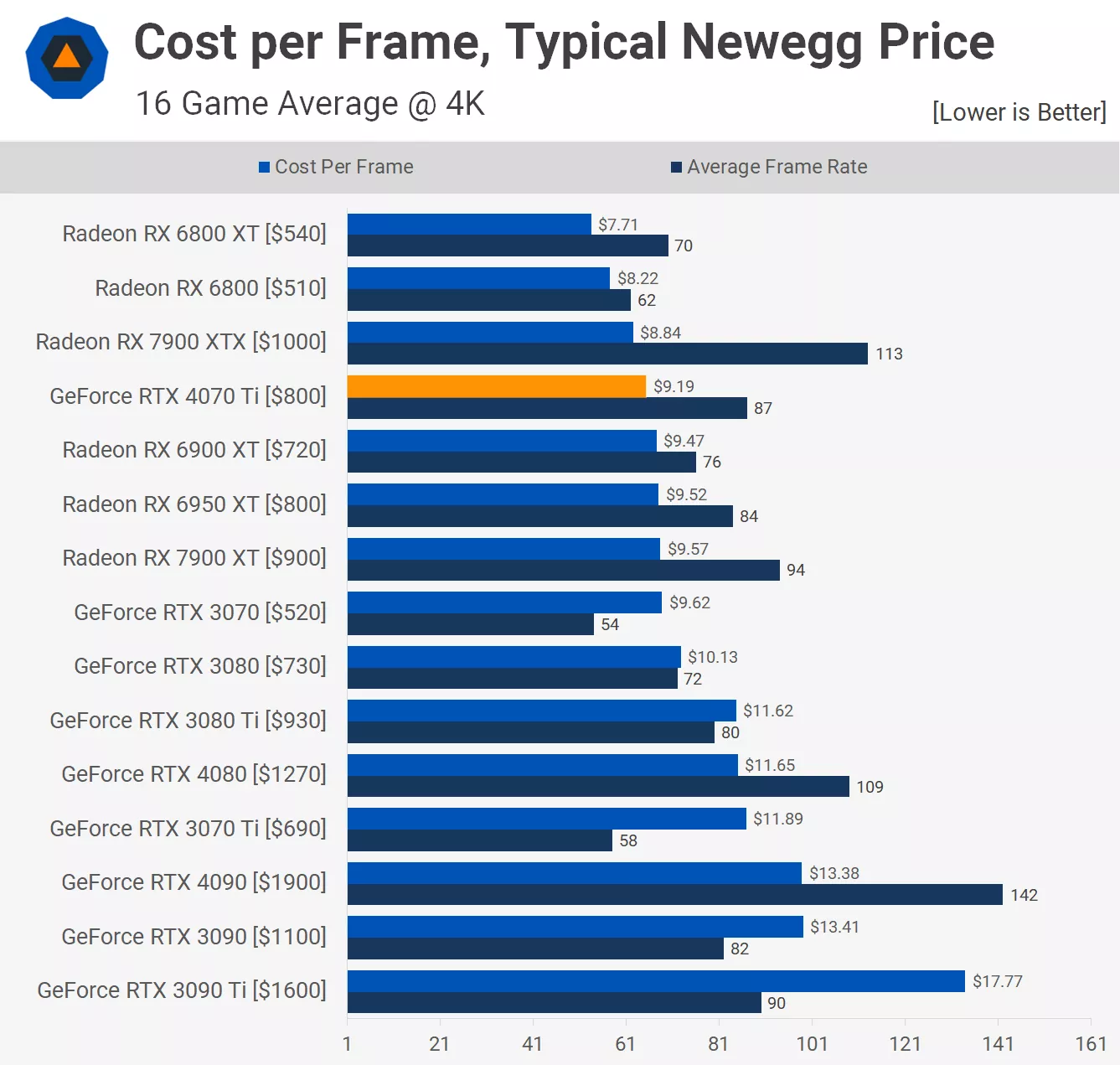

If all these tech websites were not getting money from Nvidia, they would tell the public to skip the 4000-series cards and buy 3000-series cards.