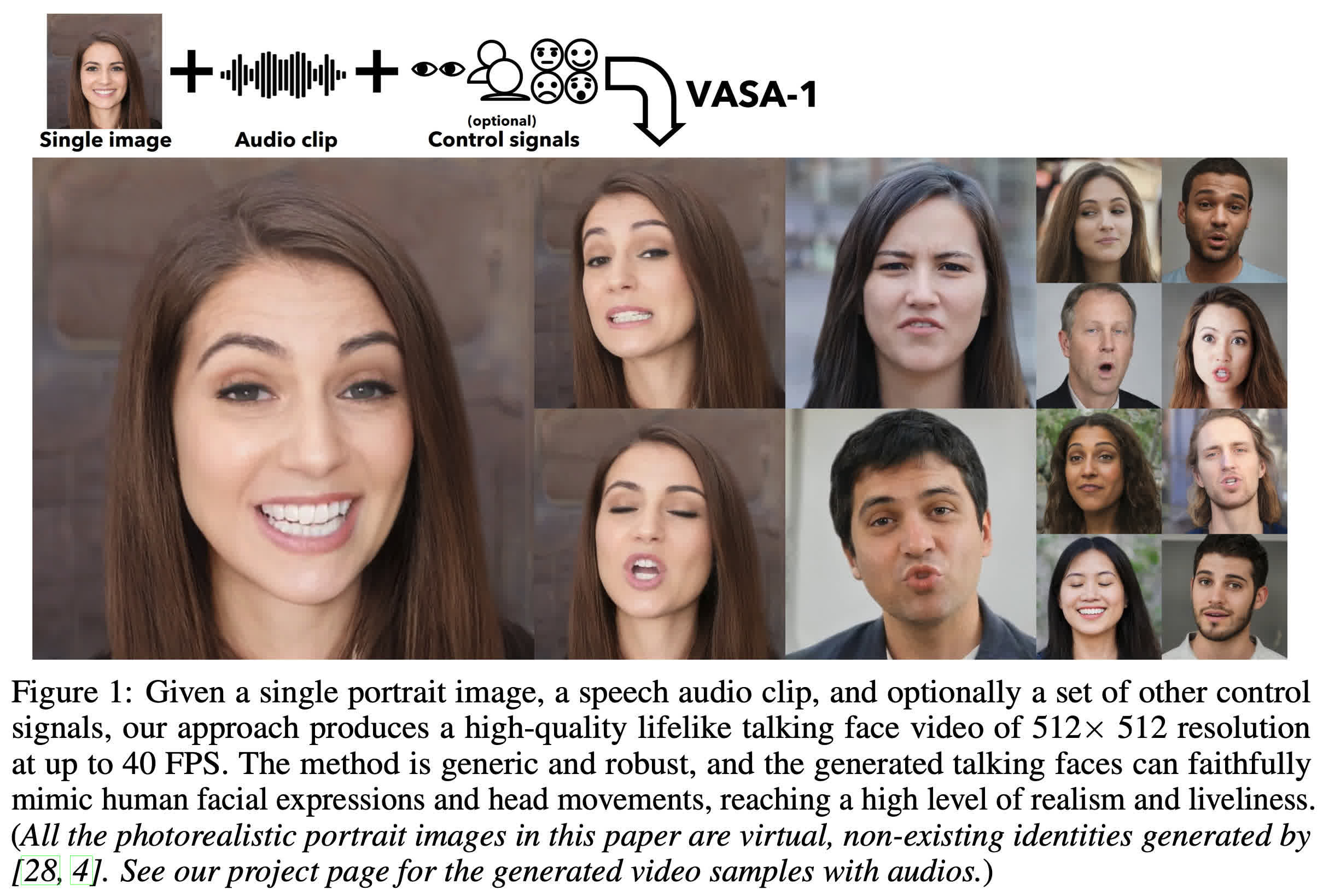

Through the looking glass: Microsoft Research Asia has released a white paper on a generative AI application it is developing. The program is called VASA-1, and it can create very realistic videos from just a single image of a face and a vocal soundtrack. Even more impressive is that the software can generate the video and swap faces in real time.

The Visual Affective Skills Animator, or VASA, is a machine-learning framework that analyzes a facial photo and then animates it to a voice, syncing the lips and mouth movements to the audio. It also simulates facial expressions, head movements, and even unseen body movements.

Like all generative AI, it isn't perfect. Machines still have trouble with fine details like fingers or, in VASA's case, teeth. Paying close attention to the avatar's teeth, one can see that they change sizes and shape, giving them an accordion-like quality. It is relatively subtle and seems to fluctuate depending on the amount of movement going on in the animation.

There are also a few mannerisms that don't look quite right. It's hard to put them into words. It's more like your brain registers something slightly off with the speaker. However, it is only noticeable under close examination. To casual observers, the faces can pass as recorded humans speaking.

The faces used in the researchers' demos are also AI-generated using StyleGAN2 or DALL-E-3. However, the system will work with any image – real or generated. It can even animate painted or drawn faces. The Mona Lisa face singing Anne Hathaway's performance of the "Paparazzi" song on Conan O'Brien is hilarious.

Joking aside, there are legitimate concerns that bad actors could use the tech to spread propaganda or attempt to scam people by impersonating their family members. Considering that many social media users post pictures of family members on their accounts, it would be simple for someone to scrape an image and mimic that family member. They could even combine it with voice cloning tech to make it more convincing.

Microsoft's research team acknowledges the potential for abuse but does not provide an adequate answer for combating it other than careful video analysis. It points to the previously mentioned artifacts while ignoring its ongoing research and continued system improvement. The team's only tangible effort to prevent abuse is not releasing it publicly.

"We have no plans to release an online demo, API, product, additional implementation details, or any related offerings until we are certain that the technology will be used responsibly and in accordance with proper regulations," the researchers said.

The technology does have some intriguing and legitimate practical applications, though. One would be to use VASA to create realistic video avatars that render locally in real-time, eliminating the need for a bandwidth-consuming video feed. Apple is already doing something similar to this with its Spatial Personas available on the Vision Pro.

Check out the technical details in the white paper publish on the arXiv repository. There are also more demos on Microsoft's website.

Microsoft VASA tech can create realistic deepfakes using a single photo and one audio track