Impressive: One of the quickest and easiest ways to boost your computer’s overall performance is to upgrade the memory subsystem. In many instances, it can take fewer than five minutes to remove your old memory and swap in a new kit that is faster and/or offers more capacity. It’s no wonder, then, that this is often among the first upgrades to consider when sprucing up an older system. What Russian modder VIK-on has accomplished in his latest video series, however, is many levels beyond a basic memory upgrade.

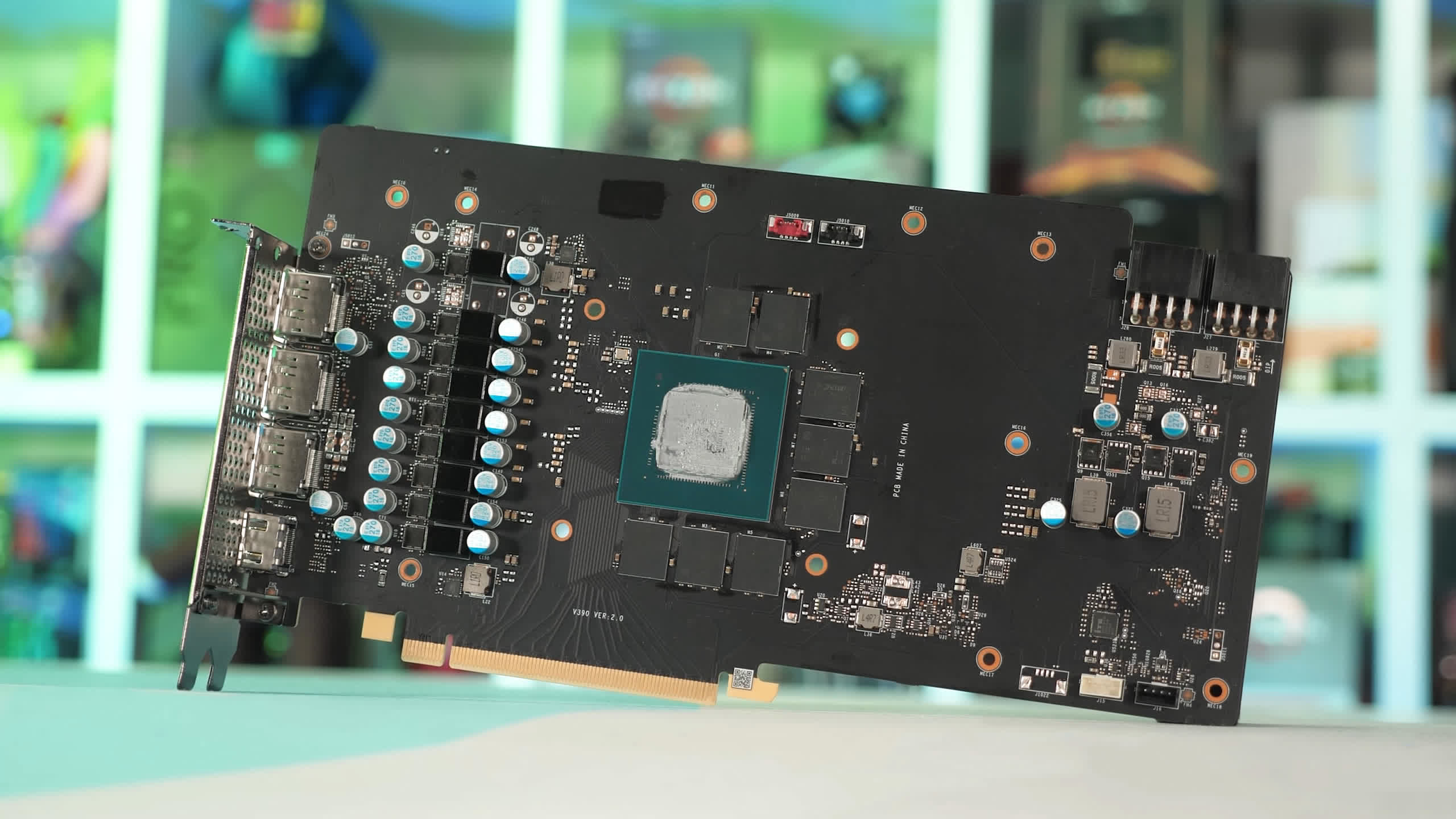

The hardware tweaker took a Palit-branded GeForce RTX 3070, removed the 1GB GDDR6 memory modules (K4Z803256C-HC14) and replaced them with 2GB GDDR6 modules (K4ZAF3258M-HC14), effectively doubling the card's memory from 8GB to 16GB.
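The capacity math behind the swap is simple enough to sketch. The snippet below is only an illustration: the count of eight memory packages is an assumption based on the stock card's 8GB total divided across 1GB modules, and `total_vram_gb` is a hypothetical helper, not something taken from the modder's videos.

```python
def total_vram_gb(chip_count: int, chip_density_gbit: int) -> float:
    """Total capacity in GB for a set of identical memory chips (8 Gbit = 1 GB)."""
    return chip_count * chip_density_gbit / 8


# Assumption: eight GDDR6 packages on the board (8GB stock / 1GB per module).
CHIP_COUNT = 8

stock = total_vram_gb(CHIP_COUNT, 8)    # original 1GB (8 Gbit) modules
modded = total_vram_gb(CHIP_COUNT, 16)  # replacement 2GB (16 Gbit) modules

print(f"stock: {stock:.0f}GB, modded: {modded:.0f}GB")
```

Because the number of chips stays the same and only the per-chip density doubles, the total capacity doubles with it.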

If that weren’t challenging enough, VIK-on also had to change some resistor settings in order to trick the card into recognizing that it now has twice as much memory on tap.

Unfortunately, issues persisted. Similar to what happened when the mod was performed on a GeForce RTX 2070, the card would crash after running an intense workload. This is where the YouTuber’s subscribers chimed in with a potential solution.

As it turns out, using the EVGA Precision X1 tuning software to lock the card’s frequency rectified the issue, allowing the modder to complete benchmarking runs in 3DMark Time Spy and Unigine Superposition – 8K Optimized. The results from those tests are in line with what you’d expect, proving that the upgrade was indeed a success.

https://www.techspot.com/news/88855-modder-doubles-memory-geforce-rtx-3070-16gb.html