The big picture: Starting tomorrow, Nvidia is hosting its GTC developer conference. Once a sideshow for semis, the event has become the center of attention for much of the industry. With Nvidia's rise, many have been asking to what extent Nvidia's software provides a durable competitive moat for its hardware. Since we have been getting a lot of questions on that topic, we want to lay out our thoughts here.

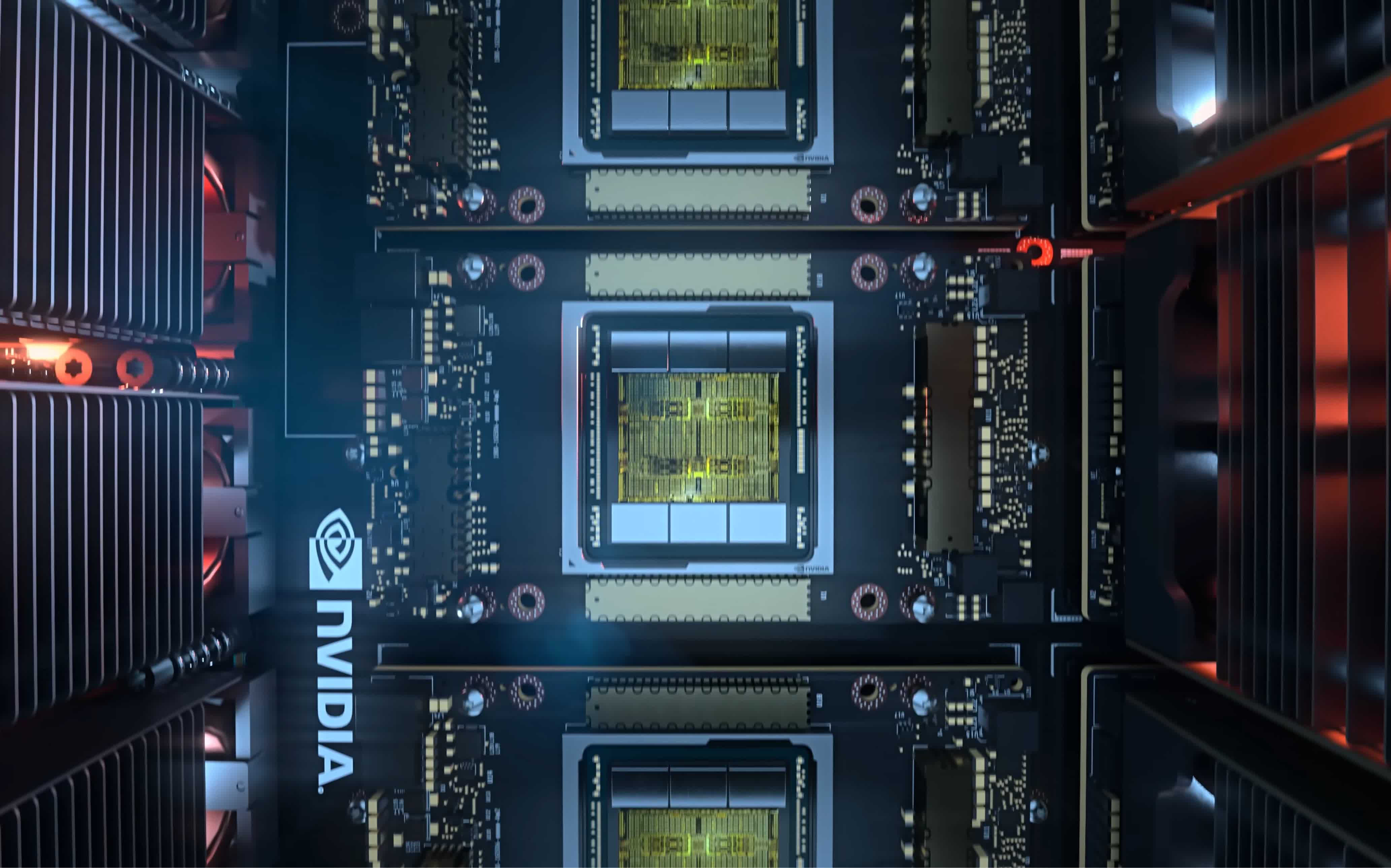

Beyond the potential announcement of the next-gen B200 GPU, GTC is not really an event about chips; it is a show for developers. This is Nvidia's flagship event for building the software ecosystem around CUDA and the other pieces of its software stack.

It is important to note that when talking about Nvidia, many people, ourselves included, tend to use "CUDA" as shorthand for all the software that Nvidia provides. This is misleading, because Nvidia's software moat extends well beyond the CUDA development layer, and that breadth is going to be critical for Nvidia in defending its position.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

At last year's GTC, the company put out 37 press releases, featuring a dizzying array of partners, software libraries and models. We expect more of the same at this year's event as Nvidia bulks up its defenses.

These partners are important because there are now hundreds of companies and millions of developers building tools on top of Nvidia's offerings. Once they have built those tools, these developers are unlikely to rebuild their models and applications to run on other companies' chips, at least not any time soon. It is worth noting that Nvidia's partners and customers span dozens of industry verticals, and while not all of them are going all-in on Nvidia, this still demonstrates immense momentum in Nvidia's favor.

Put simply, the defensibility of Nvidia's position right now rests on the inherent inertia of software ecosystems. Companies invest in software (writing the code, testing it, optimizing it, training their workforce to use it, and so on), and once that investment is made they are deeply reluctant to switch.

We saw this with the Arm ecosystem's attempt to move into the data center over the last ten years. Even as Arm-based chips started to demonstrate real power and performance advantages over x86, it still took years for software companies and their customers to move, and that transition is still underway. Nvidia appears to be in the early days of building up exactly that kind of software advantage. If it can achieve this across a wide swathe of enterprises, it is likely to hold onto that advantage for many years. This, more than anything else, is what positions Nvidia best for the future.

Nvidia has formidable barriers to entry in its software. CUDA is a big part of that, but even if alternatives to CUDA emerge, the breadth of software and libraries Nvidia provides to so many customers points to a very defensible ecosystem.

We point all this out because we are starting to see alternatives to CUDA emerge. AMD has made a lot of progress with its answer to CUDA, ROCm. However, by progress we mean that AMD now has a good, workable platform; it will still take years for ROCm to win even a fraction of CUDA's adoption. ROCm is only available on a small number of AMD products today, while CUDA has worked on all Nvidia GPUs for years.

Other alternatives, like UXL or various combinations of PyTorch and Triton, are similarly interesting but also in their early days. UXL in particular looks promising, as it is backed by some of the biggest names in the industry. Of course, that is also its greatest weakness, as those members have highly divergent interests.

We would argue that little of this will matter if Nvidia becomes entrenched. And here is where we need to distinguish between CUDA and the broader Nvidia software ecosystem. The industry will come up with alternatives to CUDA, but that does not mean those alternatives can completely erase Nvidia's software barriers to entry.

That being said, the biggest threat to Nvidia's software moat is its largest customers. The hyperscalers have no interest in being locked into Nvidia in any way, and they have the resources to build alternatives. To be fair, they still have good reasons to stay close to Nvidia: it remains the default solution and still has many advantages. But if anyone puts a dent in Nvidia's software ambitions, it is most likely to come from this corner.

And that, of course, opens up the question as to what exactly Nvidia's software ambitions are.

In past years, as Nvidia launched its software offerings, up to and including its cloud service Omniverse, the company conveyed an ambition to build software into a new revenue stream. On its latest earnings call, Nvidia pointed out that it had generated $1 billion in software revenue. More recently, however, we have gotten the sense that it may be repositioning or scaling back those ambitions a bit, with software now positioned as a service it provides to its chip customers rather than a full-blown revenue segment in its own right.

After all, selling software risks putting Nvidia in direct competition with all its biggest customers.