Rumor mill: Next week will finally see Nvidia confirm its Ampere architecture, and it seems the company has put in a massive order with TSMC for its 7nm node technology. Looking beyond Ampere to the next-generation 5nm Hopper family, Nvidia is lightening the load on TSMC by handing some of the work over to Samsung.

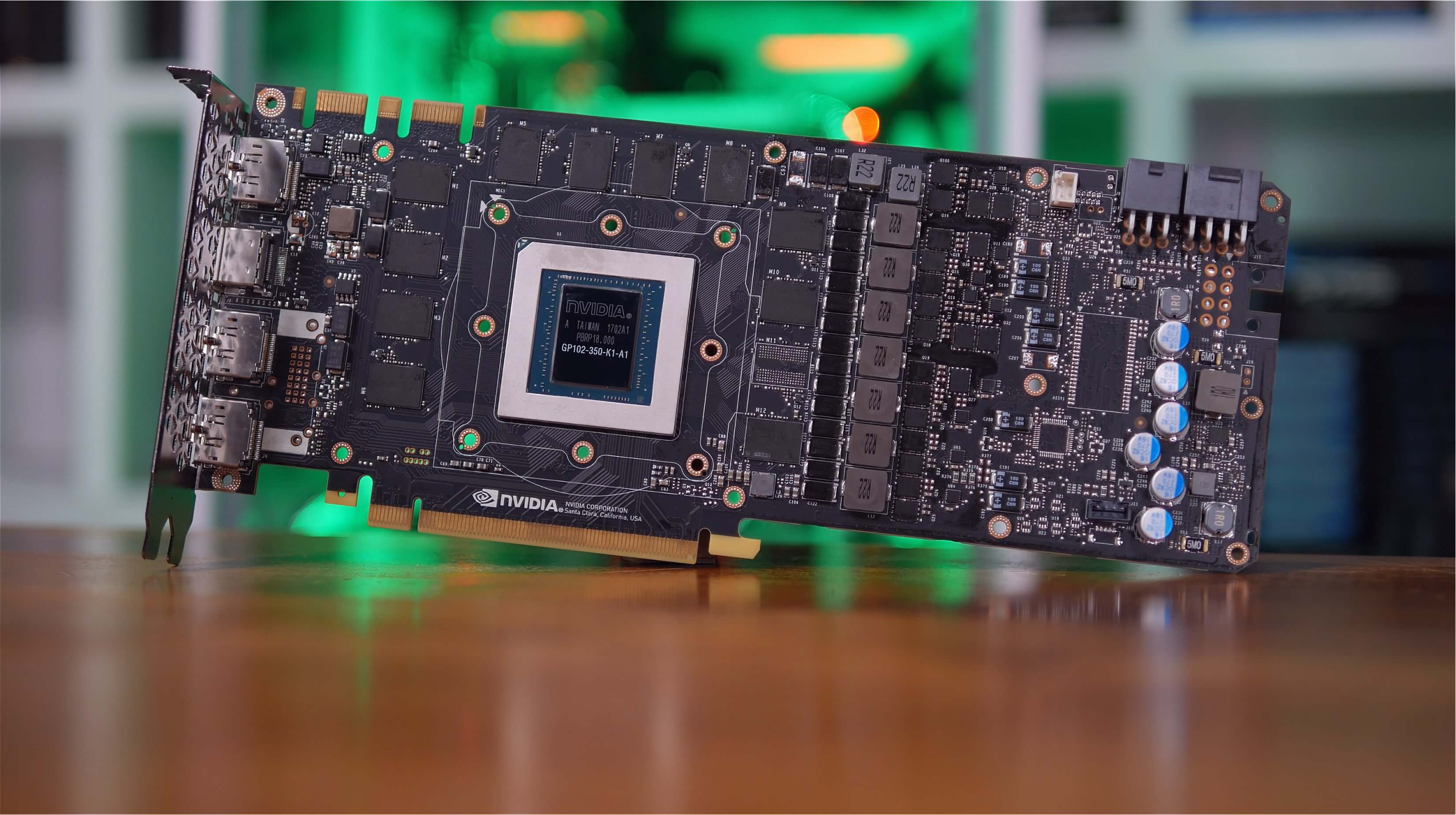

According to reports in The China Times and DigiTimes, Nvidia has been one of the main customers for TSMC's 7nm process node. This seems to confirm that Ampere GPUs, including those used in consumer graphics cards such as the RTX 3080, will be built on this manufacturing process. Considering rival AMD has been on 7nm since the launch of EPYC Rome last August, this makes sense.

Following the release of Ampere, Nvidia’s next architecture will be Hopper. As one might expect, this will see a shrink from 7nm to 5nm. But reports claim the process will not entirely be handled by the Taiwan Semiconductor Manufacturing Company.

DigiTimes writes that in addition to reserving slots in TSMC's 5nm production capacity for 2021, Nvidia will hand some of the work over to Samsung. Specifically, the Korean giant will produce the GPUs for lower-end Ampere graphics cards, which could use its 7nm EUV or 8nm process nodes.

Last month, we heard that Nvidia was placing orders with TSMC for a mystery 5nm product, which is likely based on Hopper.

Samsung completed development of its 5nm EUV process last year and said in its Q1 earnings report that production on the 5nm EUV process would begin in the second quarter of 2020. Nvidia is reportedly negotiating with Samsung over whether the company can take on some of its 5nm orders, too.

Nvidia CEO Jensen Huang will lead the company's keynote at the GPU Technology Conference (GTC) on May 14, which comes with the tagline "get amped."

Ampere's consumer graphics cards are expected to arrive in the third quarter, which lines up with rumors of Nvidia's AIB (add-in board) partners clearing stock to prepare for the RTX 3000 launch in Q3.

https://www.techspot.com/news/85117-nvidia-has-7nm-order-tsmc-ampere-5nm-hopper.html