In a nutshell: Did you know that higher refresh rates can give you a competitive edge during heated gaming sessions? That may seem like common sense to most avid PC gamers, but Nvidia has published a lengthy, in-depth article to hammer this point home even further.

The GPU maker covers quite a bit of ground in its post, but we'd like to focus on some of the more interesting points here. First, let's talk about the relationship between frame rates and refresh rates.

Also read: How Many FPS Do You Need? Frames Per Second, Explained

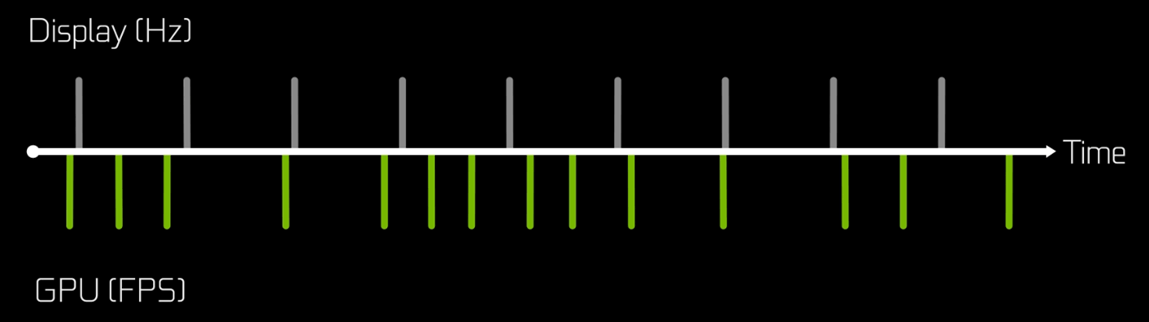

As Nvidia points out, the terms are sometimes used interchangeably, but they describe two different things: your frame rate is the rate at which your GPU can draw frames for a game or piece of software, and your refresh rate is how many times per second your monitor can redraw the image, which effectively determines how well it can keep up. If your GPU is putting out 144 frames per second, but you're gaming on a 60Hz display, you're going to see a noticeable amount of screen tearing as your monitor fails to match your video card's speed.
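To put rough numbers on that mismatch, here is a minimal sketch of the arithmetic. The function names are our own, purely for illustration, and the calculation assumes a perfectly steady frame rate, which real games rarely deliver.

```python
def frame_time_ms(rate_hz: float) -> float:
    """Time between consecutive frames (or display refreshes), in milliseconds."""
    return 1000.0 / rate_hz


def frames_per_refresh(fps: float, refresh_hz: float) -> float:
    """How many frames the GPU finishes during one display refresh.

    A value above 1 means the display cannot show every frame in full;
    without some form of sync, parts of several frames can end up on
    screen at once, which is what shows up as tearing.
    """
    return fps / refresh_hz


if __name__ == "__main__":
    # Illustrative pairings only (GPU frame rate, display refresh rate).
    for fps, hz in [(144, 60), (60, 60), (144, 144)]:
        print(
            f"{fps} FPS on a {hz}Hz display: "
            f"a new frame every {frame_time_ms(fps):.2f} ms, "
            f"a refresh every {frame_time_ms(hz):.2f} ms, "
            f"{frames_per_refresh(fps, hz):.2f} frames per refresh"
        )
```

In the 144 FPS on 60Hz case from the paragraph above, the GPU finishes 2.4 frames for every one the display can show.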

This is why many gamers with lower-end displays prefer to lock their in-game frame rates to around 60 with third-party tools like MSI Afterburner or RivaTuner Statistics Server. As Nvidia states, for optimal results and minimal "ghosting" (the trail that gets left behind by moving images on LCD displays), you generally want both your FPS and refresh rate to be high.

But how high is high enough? For most PC gamers, 60 FPS gaming is the holy grail: the target to pursue and maintain with settings tweaks and hardware upgrades. And that's a perfectly reasonable standard. It's impossible to deny that there is a massive difference in smoothness and playability between 30 and 60 FPS, and most eSports pros will tell you that the latter is the bare minimum you want to achieve before playing games like Overwatch in a competitive capacity.

And then there's 144 FPS gaming: another very noticeable improvement from 60 FPS for many people, and one that can certainly make a difference in competitive gaming. Still, this is often considered an unnecessary luxury, even among many PC gaming enthusiasts.

Speaking from experience, even with the best hardware, it's challenging to maintain upwards of 120 FPS in the latest games without making hefty sacrifices in the visual quality department. See our Red Dead Redemption 2 performance benchmarks for ample evidence of that.

However, Nvidia aims to convince you not only that hardware and display tech capable of 144 FPS are worth the investment, but that you should actually make the jump to 240 FPS for the best competitive results.

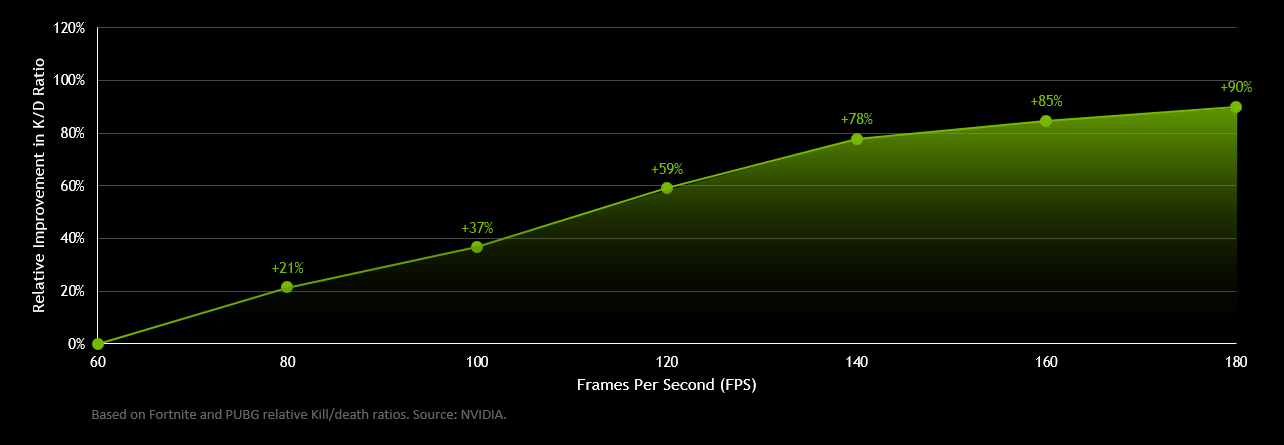

In the company's own studies, Nvidia says it found a strong, direct link between a player's FPS and their K/D ratio in PUBG and Fortnite. Compared to gaming at 60 FPS, Nvidia says users who played at 140 FPS enjoyed a relative K/D improvement of 78 percent. That number climbed all the way to 90 percent when testers hit the 180 FPS mark.
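It's worth being clear about what a "relative" improvement means here. The sketch below applies Nvidia's stated percentages to a baseline K/D of 1.0; that baseline is a hypothetical figure we picked for illustration, not a number from Nvidia's study, and the function name is ours.

```python
def apply_relative_improvement(baseline_kd: float, improvement_percent: float) -> float:
    """Scale a baseline K/D ratio by a relative (percentage) improvement."""
    return baseline_kd * (1 + improvement_percent / 100)


if __name__ == "__main__":
    baseline_kd = 1.0  # hypothetical K/D at 60 FPS, chosen only for illustration
    # Percentages as quoted from Nvidia's post: 78% at 140 FPS, 90% at 180 FPS.
    for fps, percent in [(140, 78), (180, 90)]:
        improved = apply_relative_improvement(baseline_kd, percent)
        print(f"{fps} FPS: K/D {baseline_kd:.2f} -> {improved:.2f} (+{percent}%)")
```

The gain scales with the baseline: under the same assumption, a player starting at a K/D of 0.5 would land at 0.89 with a 78 percent relative improvement, not at 1.28.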

Those results are certainly intriguing, and there's probably some merit to them, but we likely don't need to tell you that Nvidia is not exactly an unbiased third party here. The company earns revenue from the sale of high-end PC hardware (such as the outlandishly priced RTX 2080 Ti), so it has a vested interest in persuading you that the best way to achieve maximum frame rates and competitive success is to buy its products.

Further, PUBG and Fortnite are not necessarily the best candidates for this sort of experiment. No matter how skilled you are, there's almost no such thing as an "average" battle royale match. By their very nature, these games are inconsistent, and it's quite easy to lose (or win) many matches in a row through no fault of your own. Additionally, you don't need to earn many kills to win a game, and a single death will boot you back to the lobby.

We don't mean to imply that Nvidia has somehow fudged the results here. If your reaction times are fast enough, it's absolutely possible that you'd benefit from gaming at well over 60, or even 144 FPS. Even in non-competitive games, a smoother experience can only be a good thing.

Whether those benefits will be as significant as Nvidia would have you believe, though, is another matter entirely, and that's the topic of much ongoing discussion in the PC gaming community.

To that end, we'd love to hear your thoughts on this situation. Do you agree with Nvidia that very high refresh and frame rates (144 or more) will lead to much better gaming results, or do you think the 60 FPS/Hz mark is satisfactory? Let us know in the comments.

https://www.techspot.com/news/83036-nvidia-high-framerates-could-lead-significantly-better-kd.html