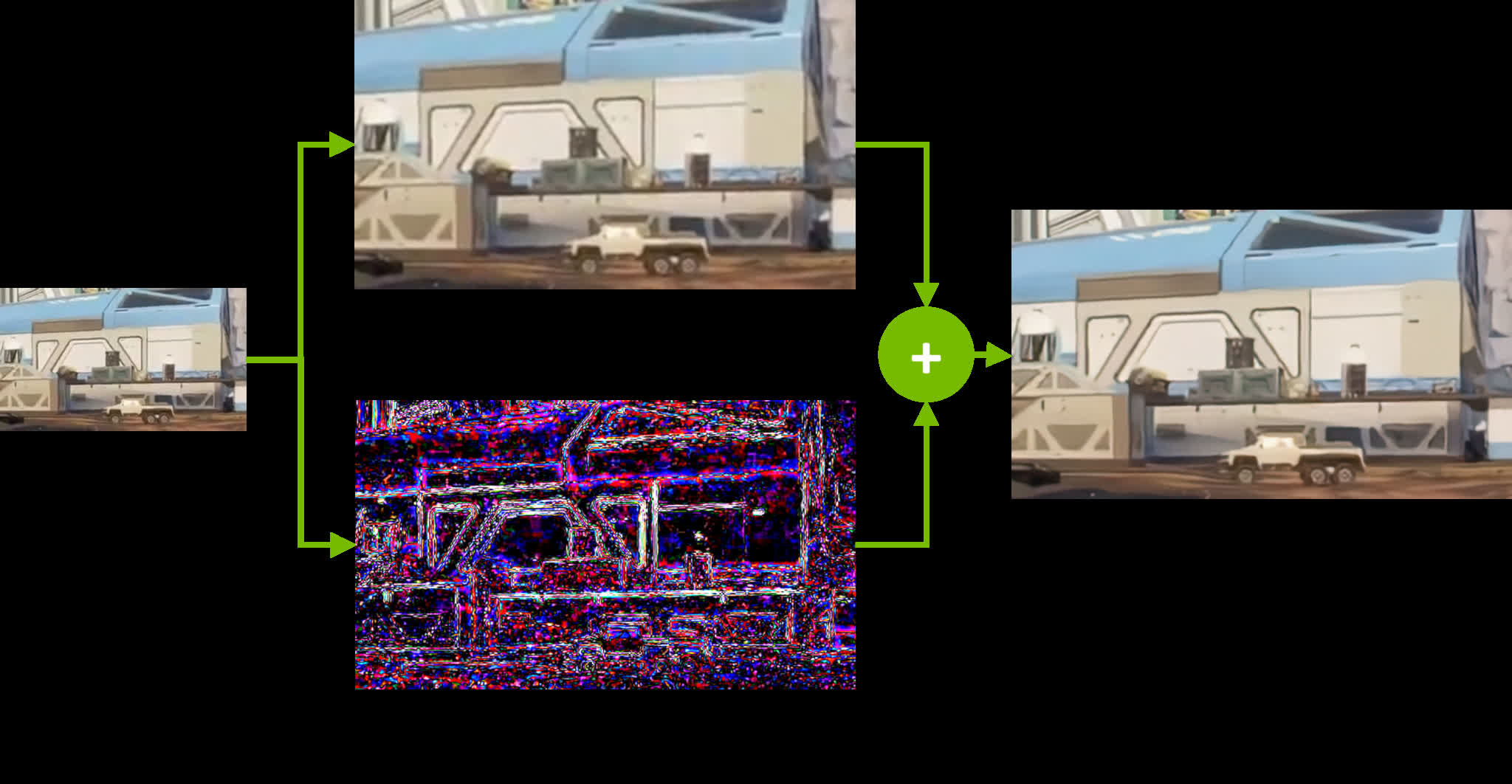

What just happened? Nvidia has launched a new AI upscaling tool designed to boost the effective resolution and overall quality of online videos. Dubbed RTX Video Super Resolution (VSR), the new tool taps into the power of GeForce RTX 40 and 30 Series GPUs to upscale lower-resolution video content up to 4K. Nvidia said the AI can remove compression artifacts such as blockiness, banding in flat areas, ringing around edges and washed-out high-frequency detail while improving overall sharpness and clarity, all in a single pass.

According to the GPU maker, streaming video accounts for nearly 80 percent of Internet bandwidth today, and 90 percent of that video is streamed at 1080p or lower. This is fine for lower-resolution devices or older TVs that don't support 4K, but when the video is viewed on a computer with a high-res display, the resulting image is often soft or blurry.

The company likened it to putting on a pair of prescription glasses to "snap the world into focus."

Nvidia's neural network model was trained on "countless" images at different resolutions and compression levels so it would know how to properly handle all types of content. An early version of the same tech debuted on the Shield TV in 2019, but it mostly targeted living room viewing. PC users sit much closer to their displays, so the tech had to be enhanced to deliver a higher level of processing and refinement.

RTX VSR is available now as part of the latest GeForce Game Ready Driver and requires an RTX 40 or 30 Series GPU. It works with most content streamed in Microsoft Edge and Google Chrome, we're told. Make sure you're running the latest version of your browser, as support for the tech was only recently added.

With everything in place, simply open the Nvidia Control Panel, head to "adjust video image settings," look for the "RTX video enhancement" section and tick the super resolution box. The feature currently offers four quality levels to choose from, ranging from the lowest performance impact to the highest level of improvement.

https://www.techspot.com/news/97765-nvidia-launches-rtx-video-super-resolution-boost-streaming.html