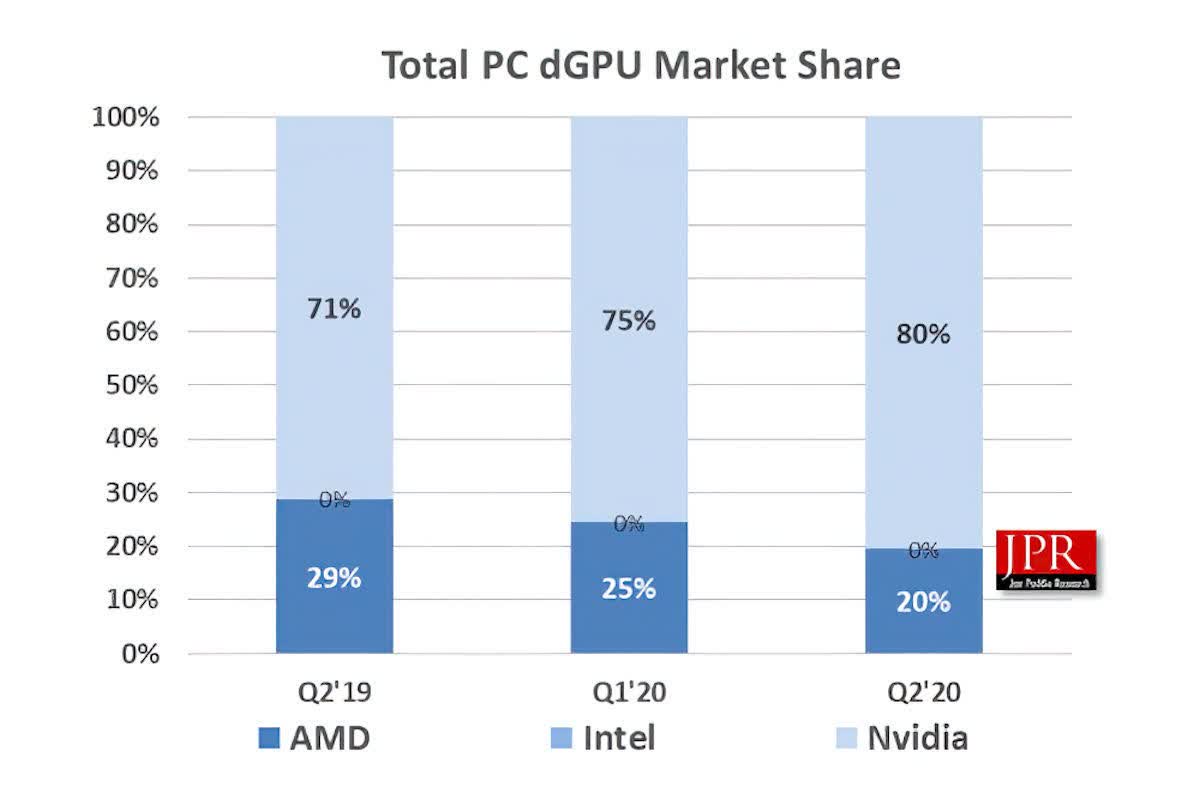

In a nutshell: Nvidia's upcoming Ampere consumer cards will help tighten its grip on the dedicated GPU industry, an area that it already dominates. According to a new report, team green has seen its dGPU market share jump from 75% in Q1 2020 to 80% in the second quarter.

Jon Peddie Research's Q2 2020 "Market Watch" report shows that desktop graphics add-in board sales increased 6.55% from Q1 2020. Intel doesn't (yet) have any product in this field, so Nvidia's five-percentage-point quarterly gain came entirely at AMD's expense, with AMD's share dropping from 25% to 20%.

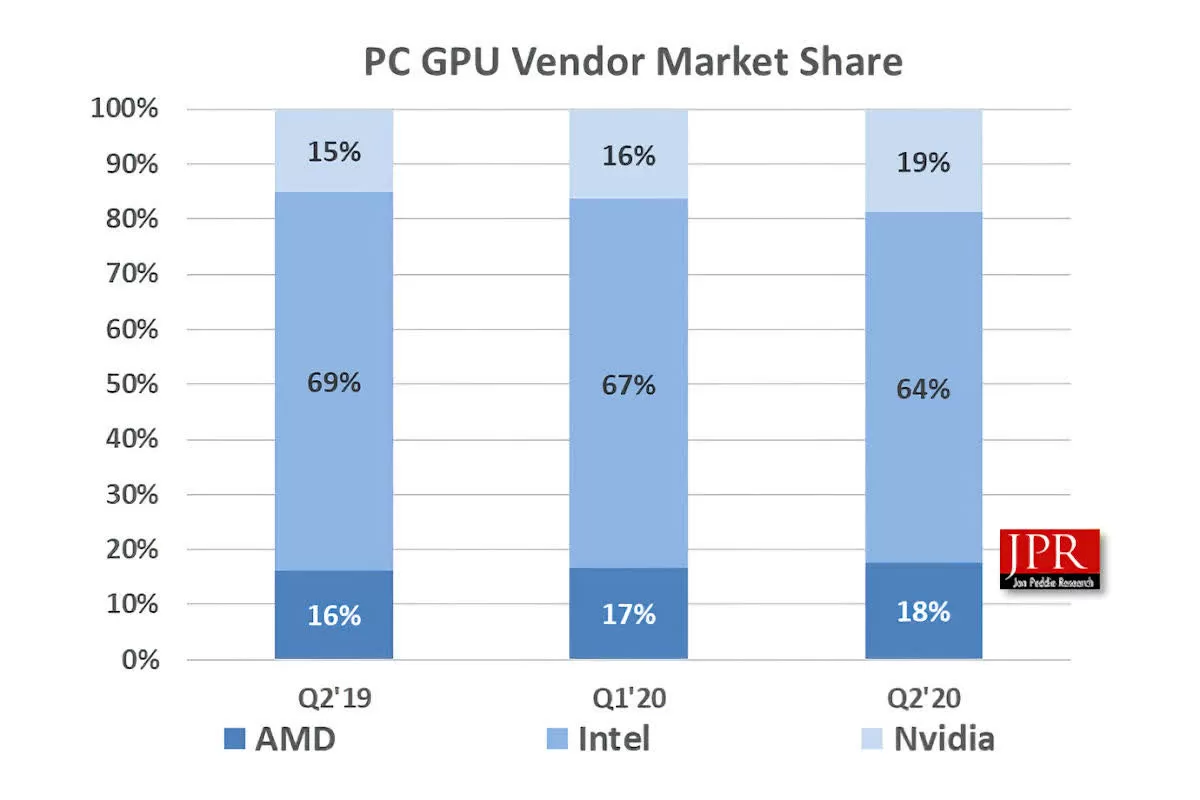

It's a different story when it comes to overall graphics, which Intel rules because of the integrated GPUs it offers in many of its CPUs. Chipzilla takes 64% of this sector, down three percentage points, while Nvidia's share jumped from 16% to 19% and AMD's rose one point to 18% QoQ.

It was good news for both gaming giants, as AMD's overall unit shipments were up 8.4% QoQ while Nvidia's jumped 17.8%. Intel, however, was down 2.7%.

Jon Peddie notes that the second quarter is usually down compared to Q1, but this quarter was up thanks to more people working and gaming from home, both a result of the pandemic.

"The pandemic has been disruptive and had varying effects on the market. Some sales that might have occurred in Q3 (such as notebooks for the back-to-school season) have been pulled in to Q2 while desktop sales declined. Intel's manufacturing challenges have also negatively affected desktop sales," wrote Jon Peddie, President of JPR.

"We believe the stay at home orders have continued to increase demand in spite of the record-setting unemployment levels. As economies open up, consumer confidence will be an important metric to watch."

Ampere already looks exciting, but Nvidia will have to contend with AMD's Big Navi release this year, while 2021 brings the launch of Intel's Xe-HPG gaming GPUs. That means more competition for Jensen Huang's firm, but more choice for consumers.

https://www.techspot.com/news/86532-nvidia-now-holds-80-dedicated-gpu-market.html