Why it matters: Nvidia has plenty to be happy about lately. One reason is an interesting new partnership under which Microsoft will bring Xbox and Activision games to GeForce Now, Nvidia's competing cloud gaming service. Renewed interest in AI-infused technologies is also a saving grace for the GPU maker as its gaming graphics revenue dries up.

The company's latest financial report suggests Nvidia is doing much better than Wall Street analysts had expected, even though its revenue is shrinking. During the fourth quarter of last year, it posted revenue of $6.05 billion, a 21 percent drop from the same period in 2021.

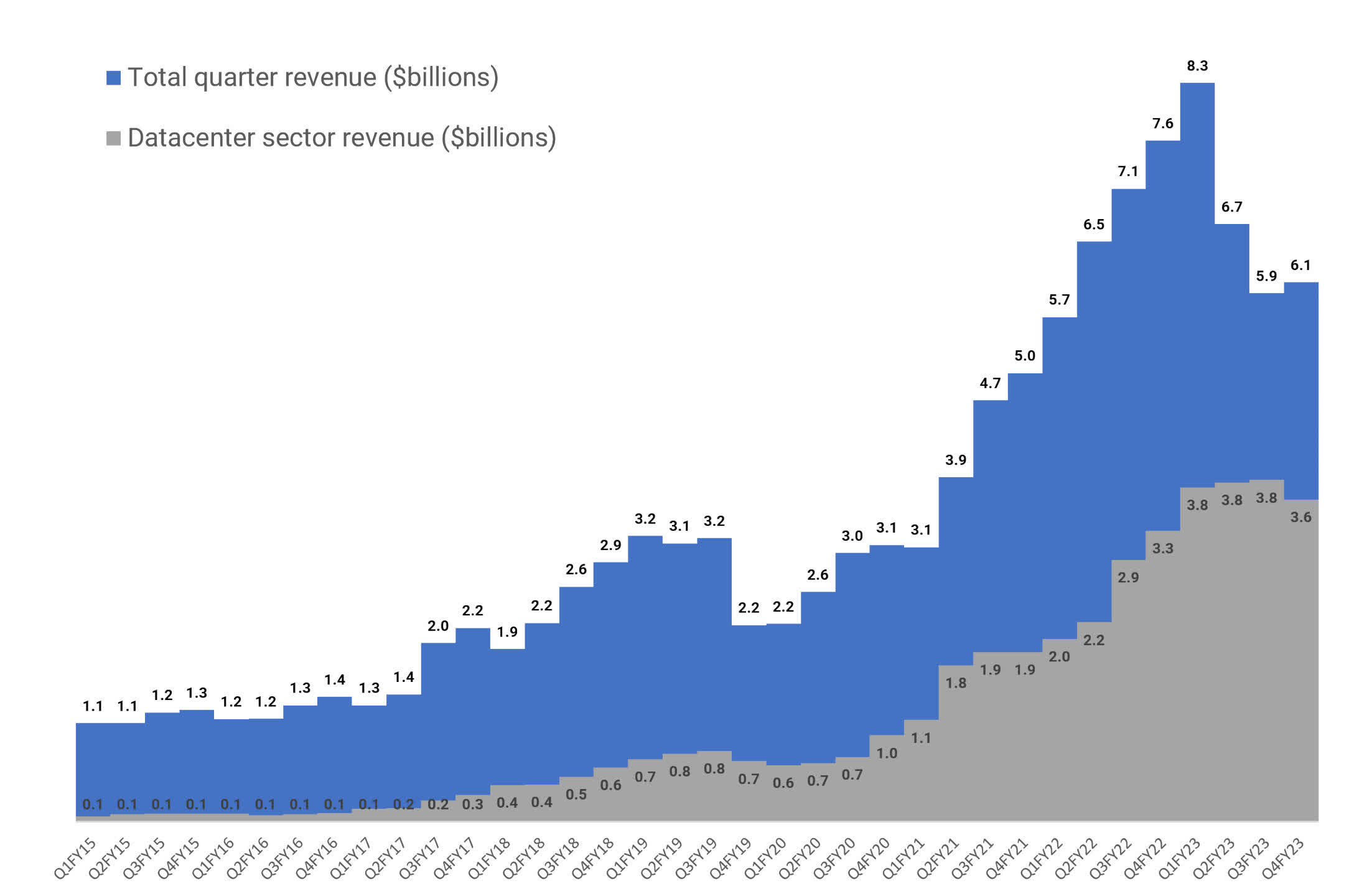

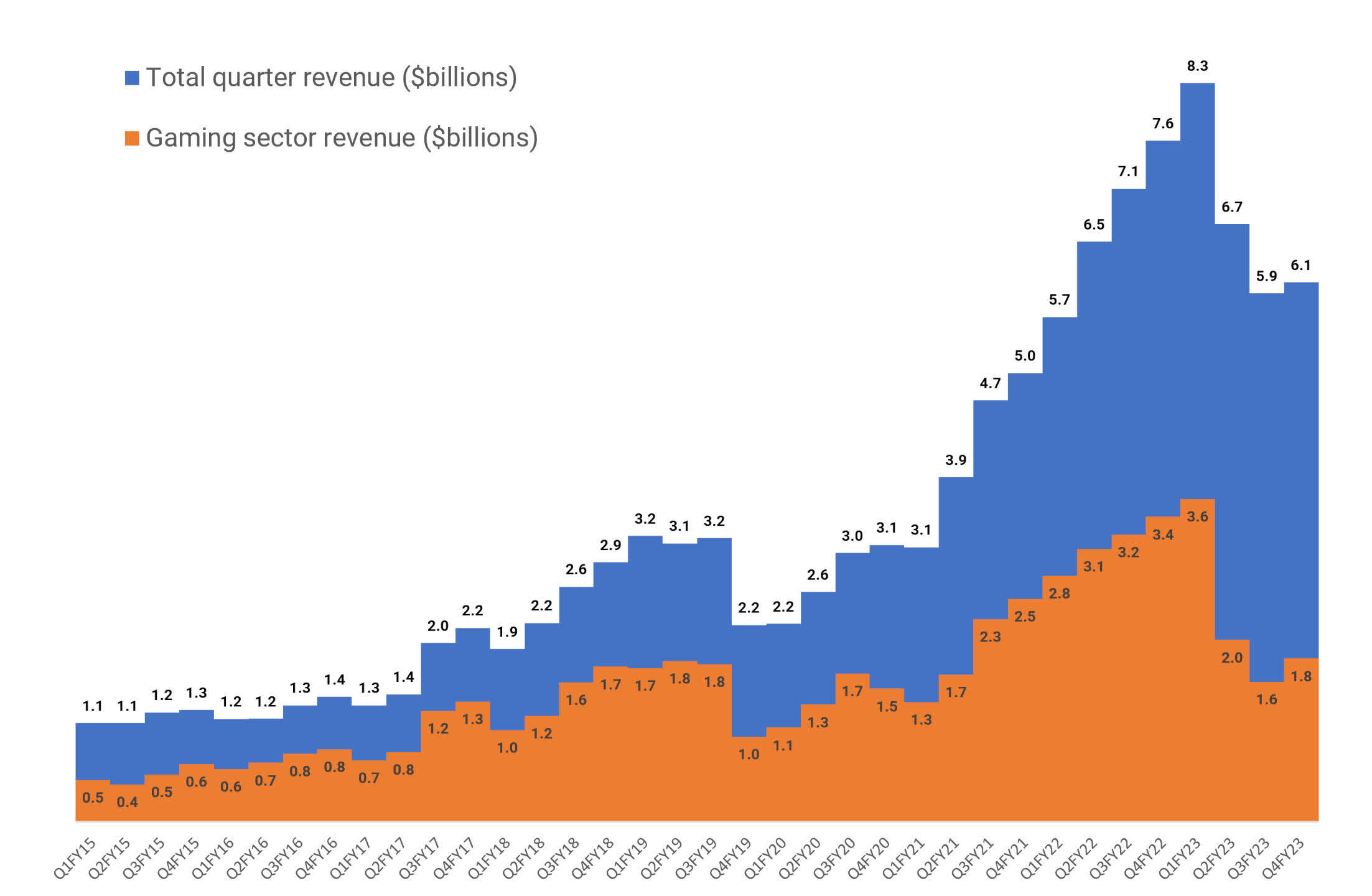

Unsurprisingly, gaming saw the steepest year-over-year drop, with revenue falling to about $1.8 billion. That's a 46 percent decline compared with Q4 2021 but a 16 percent increase over Q3 2022, and it beat Wall Street analysts' average estimate of $1.6 billion. Data center and automotive sales rose, but not enough to offset the sizable dip in consumer GPU sales and in SoCs for game consoles.

Still, Nvidia raked in almost $27 billion in revenue for the year, roughly the same as in FY 2022, although net income fell 55 percent to a little under $4.4 billion. Data center revenue, up 41 percent year-over-year to $15 billion, is now clearly the primary contributor to overall revenue.

Like other chipmakers, the company is contending with much weaker consumer demand than in previous years, in line with the broader downturn in the PC industry. The RTX 4080 had a rocky, controversy-riddled launch, cryptocurrency mining has moved away from GPUs, and overall GPU pricing hasn't improved significantly in months.


During an investor call, Nvidia CFO Colette Kress attributed the lower gaming sales to Covid-19 disruptions in China and to channel inventory issues. Specifically, Kress said retailers were buying less inventory as they worked to clear existing RTX 30-series stock. However, that doesn't explain why GPU prices have barely budged since last summer.

One thing is clear: Nvidia's future growth depends heavily on the latest AI renaissance and the race to train large language models like the one behind ChatGPT. Earlier this month, Nvidia CEO Jensen Huang called ChatGPT the "iPhone moment" for artificial intelligence, a golden opportunity for his company to meet the exploding demand for AI training hardware.

Huang told investors that gaming revenue is also poised for a recovery, but he spent most of the call hyping the possibilities of AI platforms like ChatGPT, which reached more than 100 million active users in just two months.

https://www.techspot.com/news/97706-nvidia-optimistic-about-future-even-gaming-revenue-dries.html