In context: Like Sony vs. Microsoft, Intel vs. Qualcomm, and Apple vs. everyone, Nvidia vs. AMD is one of the tech industry's big rivalries. So, it came as a surprise when team green chose its main competitor to provide the server processors for its new DGX A100 deep learning system, rather than using Intel’s Xeon platform. Now, the company has revealed the reason behind its decision.

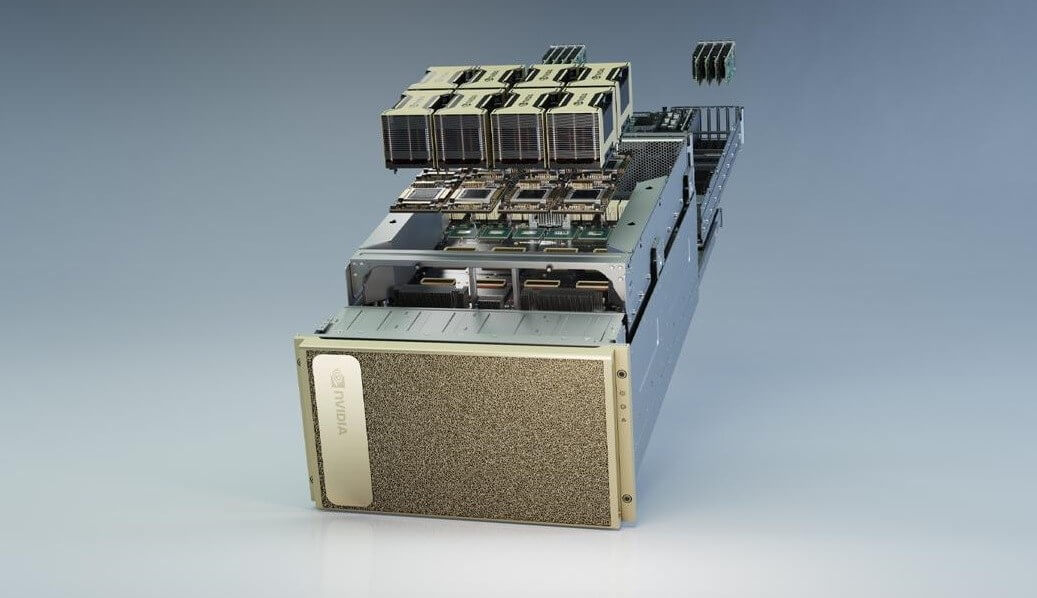

In Nvidia’s first two DGX systems, Intel’s Xeon CPUs were the processor of choice, but for the DGX A100 the company replaced them with two of AMD's 64-core, Zen 2-based Epyc 7742 CPUs. The system, which uses the new Ampere-based A100 GPUs, boasts 5 petaflops of AI compute performance and 320 GB of GPU memory with 12.4 TB per second of bandwidth.

Speaking to CRN, Nvidia’s Vice President and General Manager of DGX Systems, Charlie Boyle, said the decision came down to the extra features and performance offered by the Epyc processors. "To keep the GPUs in our system supplied with data, we needed a fast CPU with as many cores and PCI lanes as possible. The AMD CPUs we use have 64 cores each, lots of PCI lanes, and support PCIe Gen4," he explained.

In addition to having eight more cores than the Xeon Platinum 9282, the Epyc 7742 also supports eight-channel memory, whereas Intel’s Xeon Scalable processors support just six memory channels. AMD’s offering is also far cheaper ($6,950 vs. around $25,000), and it has more cache and a lower TDP.

PCIe 4.0 support was one of the major factors in choosing Epyc, as Intel’s server processors still only support PCIe 3.0. AMD's CPUs offer 128 PCIe 4.0 lanes and a peak PCIe bandwidth of 512GB/s (both directions combined). "The DGX A100 is the first accelerated system to be all PCIe Gen4, which doubles the bandwidth from PCIe Gen3. All of our IO in the system is Gen4: GPUs, Mellanox CX6 NICs, AMD CPUs, and the NVMe drives we use to stream AI data," Boyle said.
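
Where does the 512GB/s figure come from? The back-of-the-envelope sketch below reproduces it. It assumes the standard PCIe signalling rates (16 GT/s per lane for Gen4, 8 GT/s for Gen3) and 128b/130b line encoding, none of which are stated in the article. The helper name `pcie_bandwidth_gbs` is hypothetical and used only for illustration.

```python
# Rough estimate of peak PCIe bandwidth for 128 lanes.
# Assumed figures (not from the article): Gen4 = 16 GT/s per lane,
# Gen3 = 8 GT/s per lane, both using 128b/130b line encoding.
GEN4_GT_PER_S = 16
GEN3_GT_PER_S = 8
ENCODING_EFFICIENCY = 128 / 130
LANES = 128


def pcie_bandwidth_gbs(lanes, gt_per_s, efficiency=1.0, bidirectional=False):
    """Hypothetical helper: peak bandwidth in GB/s.

    Each transfer moves one bit per lane, so divide by 8 to get bytes.
    PCIe links are full duplex, so the two-way total is double one direction.
    """
    per_direction = lanes * gt_per_s * efficiency / 8
    return per_direction * 2 if bidirectional else per_direction


raw_total = pcie_bandwidth_gbs(LANES, GEN4_GT_PER_S, bidirectional=True)
effective_total = pcie_bandwidth_gbs(
    LANES, GEN4_GT_PER_S, ENCODING_EFFICIENCY, bidirectional=True
)
gen3_total = pcie_bandwidth_gbs(
    LANES, GEN3_GT_PER_S, ENCODING_EFFICIENCY, bidirectional=True
)

print(f"Gen4 raw, both directions: {raw_total:.0f} GB/s")              # 512
print(f"Gen4 after encoding overhead: {effective_total:.0f} GB/s")     # 504
print(f"Gen3 after encoding overhead: {gen3_total:.0f} GB/s")          # 252
```

Under these assumptions, the quoted 512GB/s is the raw rate summed over both directions, or roughly 256GB/s each way. Encoding overhead trims the total slightly, and the Gen3 result comes out at exactly half the Gen4 figure. That halving matches Boyle's point that Gen4 doubles the bandwidth.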

AMD, of course, has the advantage of using a 7nm manufacturing process, though Intel’s 10nm Ice Lake server CPUs, which are expected to feature PCIe 4.0 support, are due later this year.

https://www.techspot.com/news/85301-nvidia-reveals-why-chose-rival-amd-over-intel.html