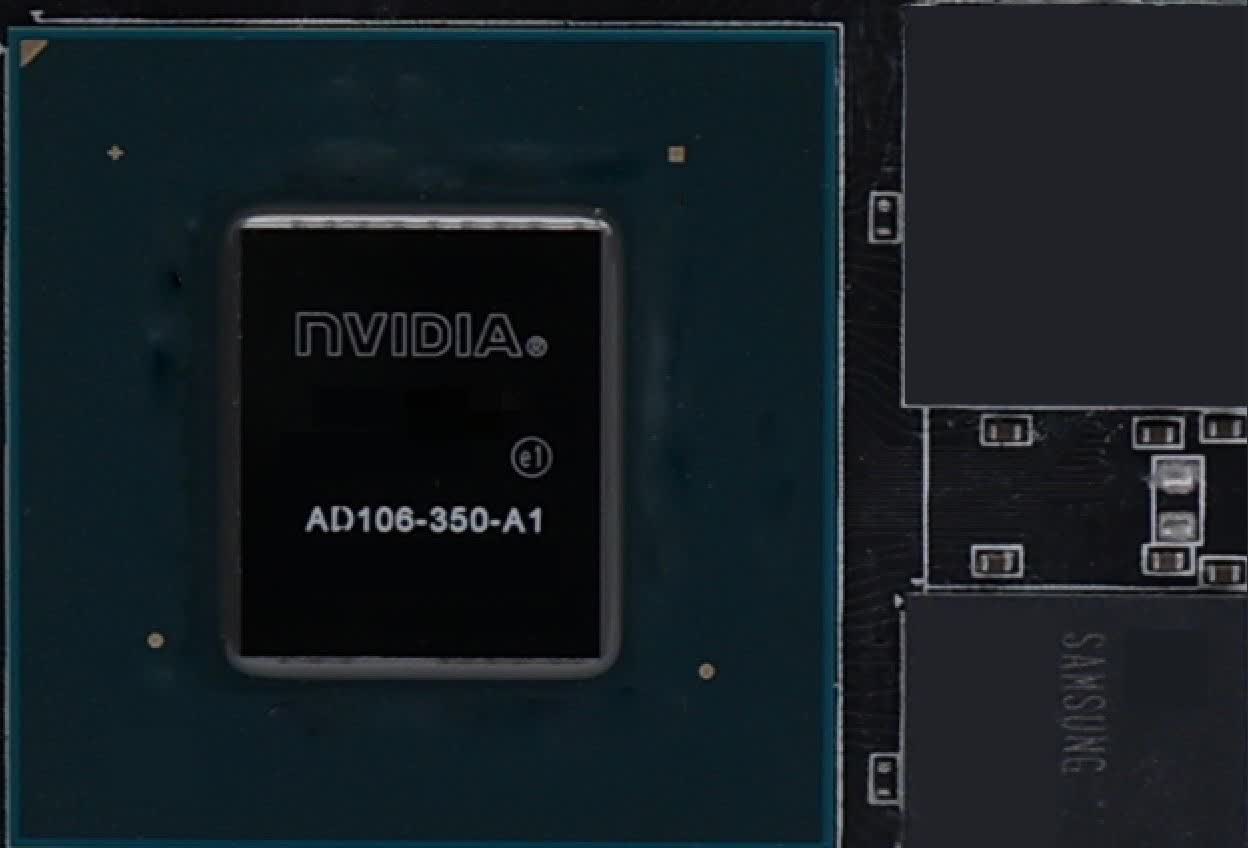

Something to look forward to: As the anticipated release dates of the GeForce RTX 4060 Ti and Radeon RX 7600 draw closer, ushering in a new generation of mainstream gaming GPUs, additional leaks seem to confirm previous rumors about Nvidia's chip. The upcoming GPU, based on the AD106 core, will controversially feature 8GB of VRAM.

Reliable leaker MEGAsizeGPU has posted a die shot of the desktop variant of the RTX 4060 Ti, confirming it is based on the AD106-350 GPU. Retail listings of MSI pre-built systems featuring the card also confirm it includes 8GB of GDDR6 VRAM, which could be a concern given the memory demands of recent high-profile PC game releases.

Previous leaks suggested that, in addition to these specs, the 4060 Ti will feature 4,352 CUDA cores, 18 Gbps memory, 32 MB of L2 cache, a 128-bit memory bus for 288 GB/s of bandwidth, and a 160W TGP. Performance may be similar to the RTX 3070 Ti if its 8GB of VRAM doesn't hold it back.
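The rumored 288 GB/s figure follows directly from the other two memory numbers. As a rough sketch, assuming the usual peak-bandwidth formula (per-pin data rate times bus width, divided by 8 bits per byte), the arithmetic can be checked as follows; the function name is hypothetical and chosen only for illustration:

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak theoretical bandwidth in GB/s.

    data_rate_gbps: per-pin memory data rate in Gbit/s
    bus_width_bits: width of the memory bus in bits
    """
    # Gbit/s per pin x number of pins = total Gbit/s; divide by 8 for GB/s.
    return data_rate_gbps * bus_width_bits / 8


# Rumored RTX 4060 Ti figures from the leaks: 18 Gbps on a 128-bit bus.
rumored_4060_ti = memory_bandwidth_gb_s(18, 128)
print(rumored_4060_ti)  # 288.0
```

This is a theoretical peak only; it says nothing about how the 32 MB of L2 cache might offset the narrow bus in practice.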

Nvidia's choice of memory capacity for the card could prove problematic, as some recent high-end PC games have consumed substantial amounts of VRAM. Titles such as Hogwarts Legacy, the Dead Space Remake, and The Last of Us Part 1 encountered performance issues and other problems on GPUs with less than 12GB of VRAM.

The recently launched Star Wars Jedi: Survivor might be the worst case of poor optimization yet. The game appears to eat so much memory that, in some situations, even the mighty 24GB RTX 4090 struggles with it. CPU issues are also to blame, and developer Respawn Entertainment has promised to improve the game's performance.

The release date for the RTX 4060 Ti remains uncertain, but it may debut at Computex 2023 at the end of May alongside the Radeon RX 7800 XT, 7700 XT, and 7600. While there is no concrete information on the 4060 Ti's price, a DigiTimes report suggests it will be $399 – the same price as its predecessor.

Details about the 4060 Ti's younger sibling, the desktop 4060, are also still under wraps. Rumors from February indicate it will also have 8GB of VRAM and be based on the same GPU as its laptop counterpart. If those specifications hold, the 4060 would have less memory and fewer CUDA cores than its predecessor, the 12GB RTX 3060, although it would feature higher clocks and faster VRAM.

https://www.techspot.com/news/98503-nvidia-rtx-4060-ti-specs-confirmed-die-shot.html