Highly anticipated: The tech industry and tech products are excellent examples of the principle that competition is good for consumers, with vigorous rivalries almost always leading to important new capabilities, lower prices, and simply better products. With that thought in mind, it will be interesting to see exactly how Intel's return to the discrete GPU market will impact PCs, as well as the progress and evolution of both AMD's and Nvidia's next offerings.

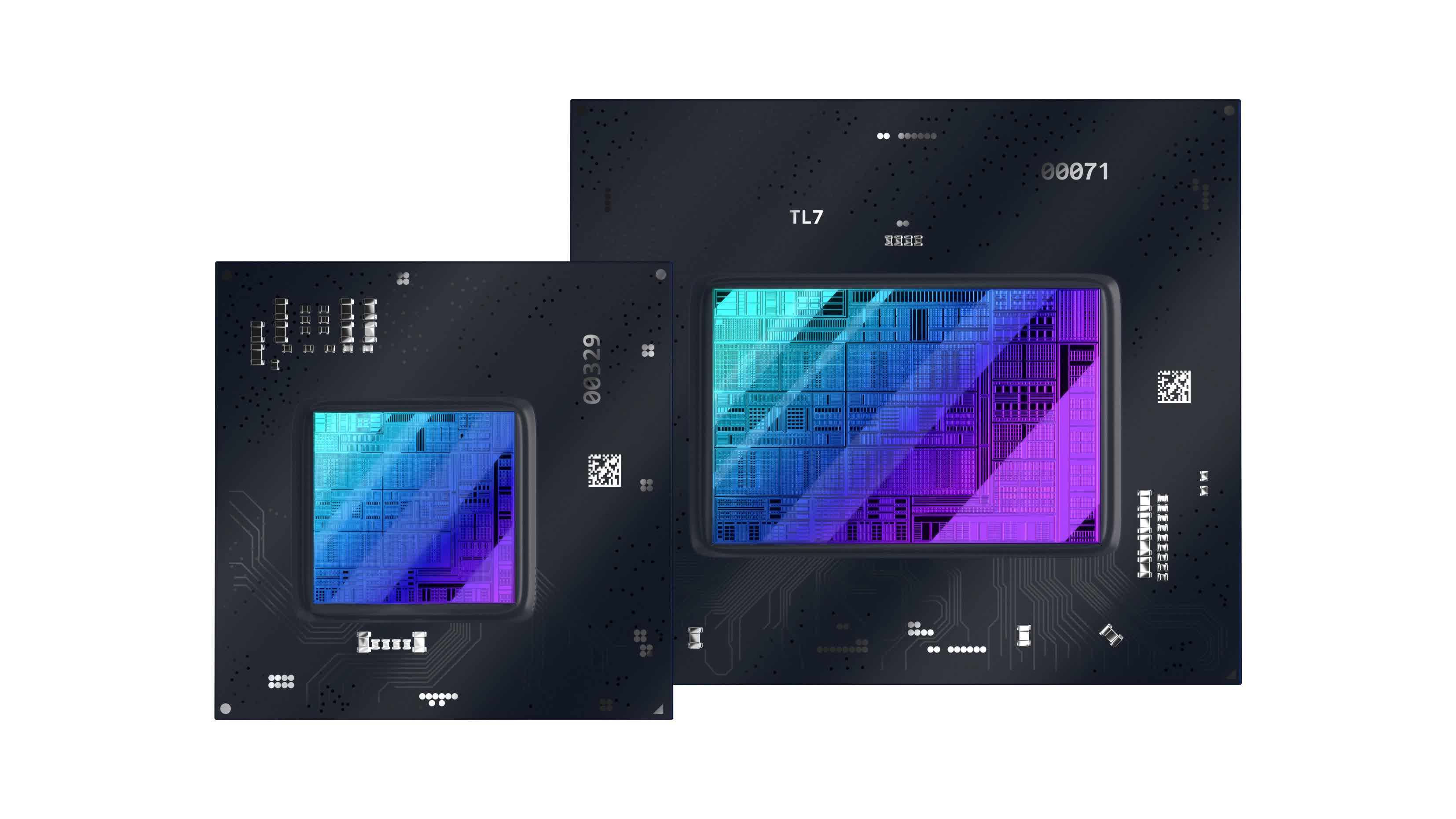

Intel today officially announced the Arc A-series, which is targeted at laptops. A desktop version of Arc has been promised for the second quarter, while a workstation version is due by the third quarter of the year. Add to that the preview Intel gave last year of its impressive-looking GPU (codenamed Ponte Vecchio) for datacenter, HPC (high-performance computing), and supercomputer applications, and it's clear that the company is getting serious about graphics and GPU acceleration.

To be sure, Intel has made previous (unsuccessful) runs at building its own high-quality graphics engines. However, this time the environment and the company's technology are both very different. First, while gaming remains an absolutely critical application for GPUs, it is no longer the only one. Content creation applications such as video editing, which depend heavily on media encoding and decoding, have become mainstream requirements.

This is especially true given the influence of YouTube, Twitch, and social media. Similarly, the use of multiple high-resolution displays has also become significantly more common. Most importantly, we're starting to see the rise of AI-powered applications on PCs, and virtually all of them are leveraging GPUs to accelerate their performance.

From photo and video editing to game physics and rendering, the rise of PC-native, AI-enhanced applications puts Intel's Arc in a very different competitive light, given how much focus the company has placed on AI acceleration features in its new chips.

Real success in the market will only be achieved if Arc delivers a great gaming experience. However, because of the many other capabilities and applications that a modern GPU architecture can enable, I don't believe it's essential that Intel start out with the best gaming experience.

Realistically, that would be extremely difficult for Intel to pull off right away, given the huge amount of time and investment that AMD and Nvidia have put into their own architectures over the last few decades. But as long as the Arc GPUs keep things close on the gaming side, I believe the market will be interested in hearing what else they have to offer. Plus, Intel is already starting to talk about its third generation of these chips -- codenamed "Celestial" -- and hinting that they could start to compete for the GPU gaming crown, clearly suggesting the company is in it for the long haul regardless.

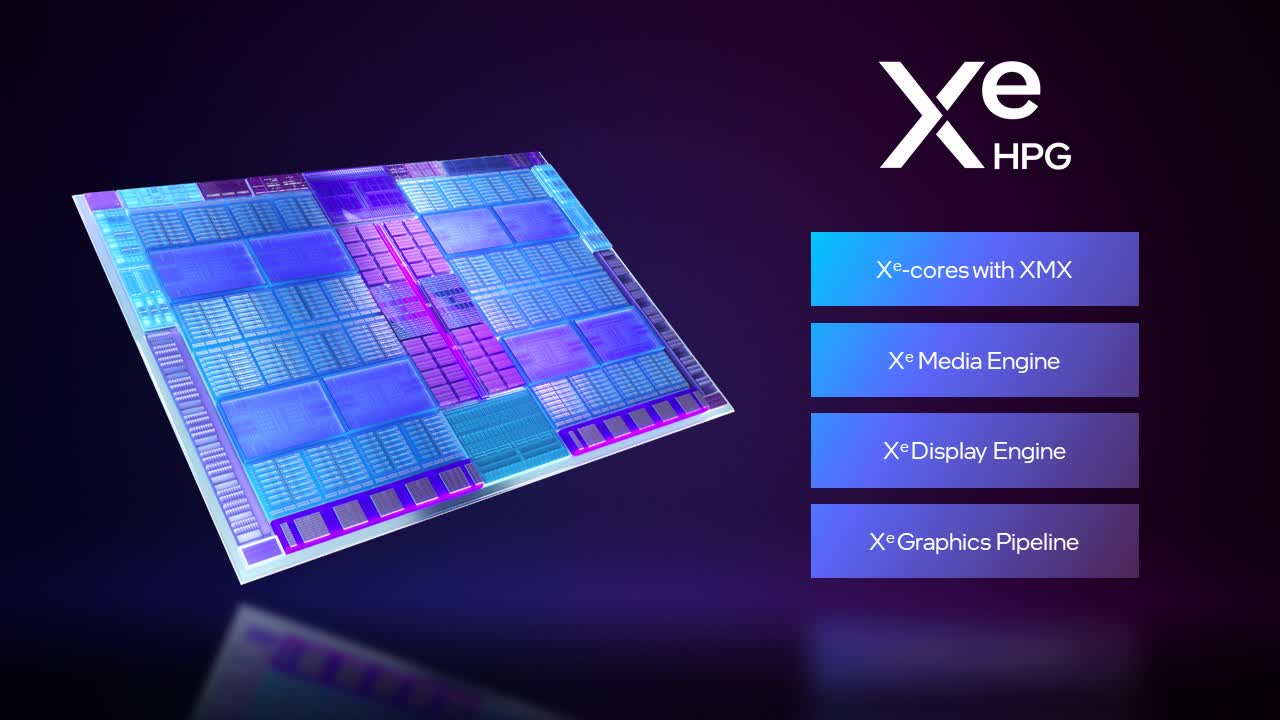

Technologically, Intel is bringing several interesting offerings to this first generation of Arc GPUs (codenamed "Alchemist"). The underlying Xe-HPG architecture features hardware-accelerated ray tracing support and up to eight render slices, with each Xe core containing sixteen 256-bit vector engines and sixteen 1,024-bit matrix engines. Intel claims that, taken together, these features give the entry-level Arc 3 series chips 2x the gaming performance of the company's Iris Xe integrated graphics and 2.4x the raw performance in creative applications.

For AI acceleration, Intel's newly architected XMX matrix engine supports a host of different data types and word sizes (from INT2 through BF16) and offers up to 16x the number of operations per clock for INT8 inferencing. One key application that benefits from this is Xe Super Sampling (XeSS), an AI-powered game resolution upscaling technology that's conceptually similar to Nvidia's DLSS.

One of Arc's unique features is that it's the first GPU to support hardware encoding of the AV1 codec. It also features a new smoothing technology Intel calls Smooth Sync, which reduces screen tearing when the frame rate output by the GPU doesn't match the laptop display's refresh rate. On the display side, Arc's Xe display engine supports up to four separate 4K 120Hz monitors over HDMI 2.0b or DisplayPort 1.4a connections.

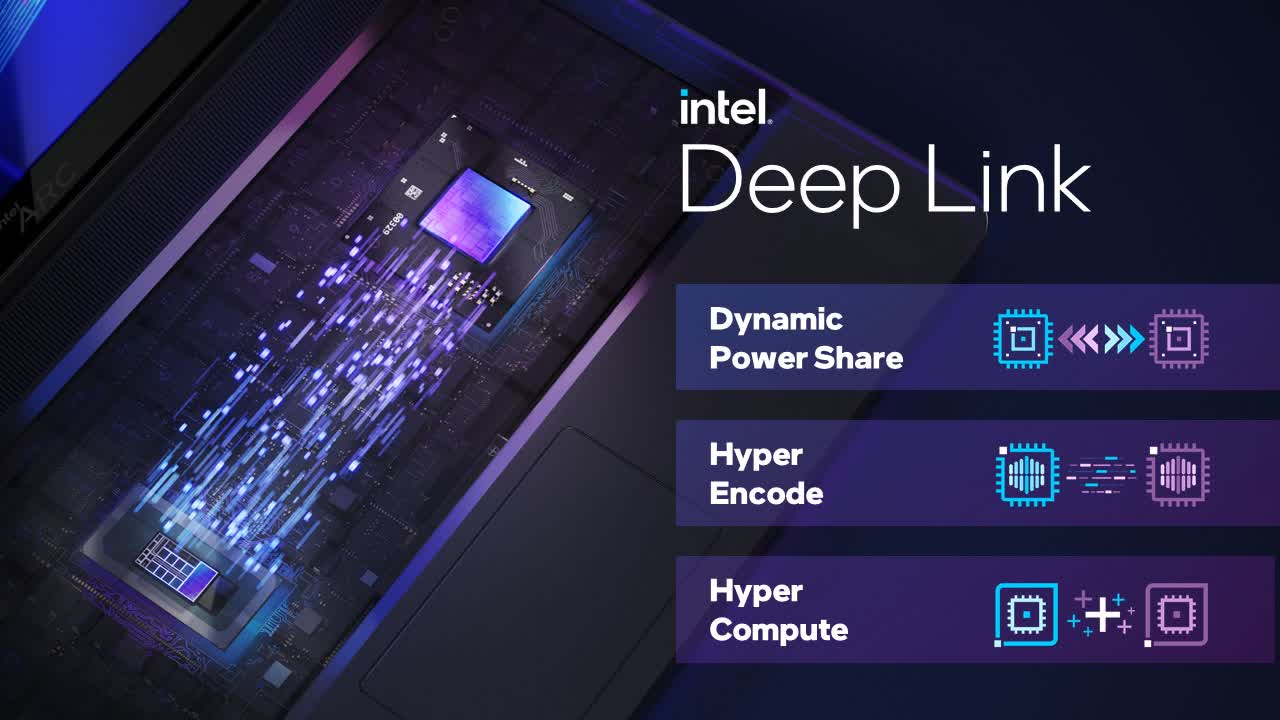

Intel has also put a great deal of thought into integration with its own 12th-gen Alder Lake CPUs, specifically through a set of technologies the company calls Deep Link. Dynamic Power Share allows the system to automatically shift power between the CPU and GPU to optimize performance for different types of workloads -- a capability similar to AMD's SmartShift. Hyper Encode and Hyper Compute allow the discrete GPU and the CPU's integrated GPU to be used simultaneously to accelerate encoding or other types of compute applications, ensuring that no silicon goes to waste.

Intel will be offering Arc chips through a wide variety of partners, with Arc 3-equipped laptops expected to start at $899. Both Arc 5 and Arc 7 notebooks will be coming out later this summer. The company's initial launch partner is Samsung with the Galaxy Book2 Pro, which will include the Arc A370M along with a 12th-gen Core i7 CPU. While Samsung may not be an obvious first choice, the company has been aggressively improving its PC product line over the last few years, and Intel clearly sees it as an important player in the PC market whose presence it wants to help grow.

Intel's return to the discrete GPU market is bound to generate a great deal of attention and scrutiny. In initial gaming benchmarks, the discrete mobile offerings from AMD and Nvidia could prove to be tough competition, and it will be interesting to see how Arc-based laptops compete against M1-based Macs on creative applications.

However, if Intel can pull together a reasonable story across all these different areas -- and highlight the AI-focused advantages it offers -- then Arc could be off to a good start. Regardless, there's no doubt it will spice up competition in the GPU market, and that's something from which we'll all benefit.

https://www.techspot.com/news/93984-opinion-intel-spices-up-pc-market-arc-gpu.html