Rumors about the release date of Nvidia's upcoming Turing-based GPUs have been wrong so far, but according to information provided to wccftech, we might finally have preliminary specifications for the company's rumored GTX 1180.

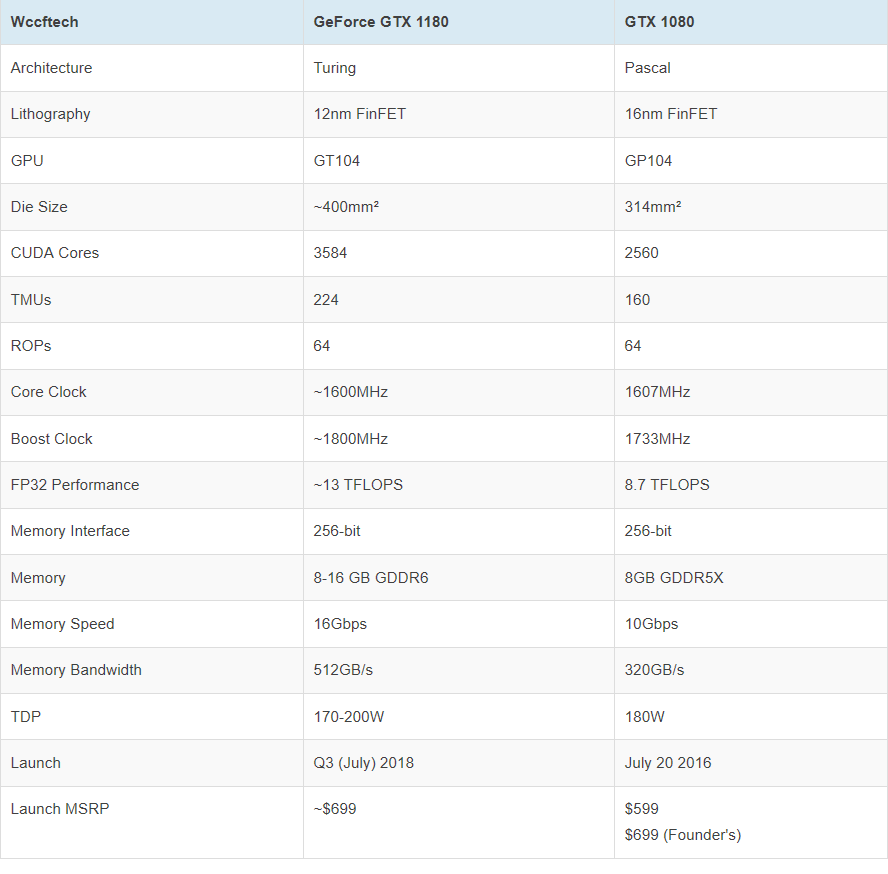

The card will reportedly launch with a core clock of 1.6GHz, boostable to about 1.8GHz. Its core configuration (shaders:TMUs:ROPs) is expected to be 3584:224:64, which is comparable to the GTX 1080 Ti's 3584:224:88 configuration.

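As far as other improvements over last-generation Nvidia cards go, the 1180 will reportedly include "8-16GB" of GDDR6 memory. The memory speed is set to be 16Gbps per pin, a sizable leap over the GTX 1080 Ti's 11Gbps memory speed. Note that these are per-pin data rates; total memory bandwidth also depends on the width of the memory bus.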
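To put the 16Gbps and 11Gbps figures in context, here is a minimal sketch of how a per-pin data rate translates into peak memory bandwidth. The GTX 1080 Ti's 352-bit bus is a published spec, but the rumored spec sheet described here does not list a bus width for the 1180, so the 256-bit value below is purely an assumption for illustration, and the function and constant names are hypothetical.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    data_rate_gbps: per-pin data rate in gigabits per second
    bus_width_bits: width of the memory bus in bits (one pin per bit)
    """
    # Total gigabits per second across the bus, divided by 8 bits per byte.
    return data_rate_gbps * bus_width_bits / 8


# GTX 1080 Ti: 11Gbps memory on a 352-bit bus (published spec).
gtx_1080_ti_bw = memory_bandwidth_gbs(11, 352)

# Rumored GTX 1180: 16Gbps GDDR6. The bus width is NOT part of the rumor
# covered in this article; 256 bits is an assumption for illustration only.
ASSUMED_1180_BUS_WIDTH_BITS = 256
gtx_1180_bw = memory_bandwidth_gbs(16, ASSUMED_1180_BUS_WIDTH_BITS)

print(f"GTX 1080 Ti: {gtx_1080_ti_bw:.0f} GB/s")
print(f"GTX 1180 (assumed 256-bit bus): {gtx_1180_bw:.0f} GB/s")
```

Under that assumption, the two cards would land at 484 GB/s and 512 GB/s respectively, so the faster memory would roughly offset a narrower bus rather than deliver a 45 percent jump in total bandwidth.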

The GTX 1180 will reportedly include a 400mm² die, which is considerably smaller than the 1080 Ti's 471mm² die but much larger than the GTX 1080's 314mm² die. The 1180's CUDA core count, on the other hand, will be identical to the GTX 1080 Ti's at 3584 but a considerable improvement over the 1080's 2560 CUDA cores.

Power consumption could either be slightly lower or slightly higher than the GTX 1080's, according to wccftech. The 1180's rumored spec sheet lists "170-200W" as the card's TDP. No matter where in that range the card's TDP lands, it should consume much less power than the GTX 1080 Ti, which has a TDP of 250W.
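For readers who want the die size, core count, and TDP comparisons as percentages, the following is a rough sketch of the arithmetic. All 1180 values are the rumored figures quoted above; the GTX 1080's 180W TDP is not stated in the rumor and is taken from Nvidia's published spec. The dictionary layout and the `pct_change` helper are hypothetical names chosen for this example.

```python
def pct_change(new: float, old: float) -> float:
    """Percentage difference of `new` relative to `old`."""
    return (new - old) / old * 100


# Rumored GTX 1180 figures (unconfirmed); TDP is a (low, high) range.
rumored_1180 = {"die_mm2": 400, "cuda_cores": 3584, "tdp_w": (170, 200)}

# Shipping cards. The GTX 1080's 180W TDP comes from Nvidia's published
# specs, not from the rumor discussed in the article.
gtx_1080 = {"die_mm2": 314, "cuda_cores": 2560, "tdp_w": 180}
gtx_1080_ti = {"die_mm2": 471, "cuda_cores": 3584, "tdp_w": 250}

for name, card in (("GTX 1080", gtx_1080), ("GTX 1080 Ti", gtx_1080_ti)):
    die = pct_change(rumored_1180["die_mm2"], card["die_mm2"])
    cores = pct_change(rumored_1180["cuda_cores"], card["cuda_cores"])
    tdp_low, tdp_high = (
        pct_change(tdp, card["tdp_w"]) for tdp in rumored_1180["tdp_w"]
    )
    print(
        f"vs {name}: die {die:+.1f}%, cores {cores:+.1f}%, "
        f"TDP {tdp_low:+.1f}% to {tdp_high:+.1f}%"
    )
```

By these numbers, the rumored die would be about 15 percent smaller than the 1080 Ti's and about 27 percent larger than the 1080's, with 40 percent more CUDA cores than the 1080 and a TDP 20 to 32 percent below the 1080 Ti's.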

If you're wondering how much the 1180 might cost, sources say customers should expect to pay around $699 for the new GPU. While this is unconfirmed, the pricing would make sense, since Nvidia's GTX 1080 Founders Edition launched with the same MSRP.

As interesting as these specs may be, a healthy degree of skepticism may be warranted. After all, none of this information has been officially confirmed. Regardless, we'll likely learn more about Nvidia's upcoming GPUs in the coming months.

https://www.techspot.com/news/74274-preliminary-specs-nvidia-rumored-gtx-1180-have-allegedly.html