Here at Puget Systems, our customers range from those needing relatively modest workstations all the way up to those who need the most powerful system possible. These extreme workstations used to be dual or quad CPU systems, but recently the shift has been to quad GPU setups. Having this much GPU power can provide incredible performance in a number of fields, including rendering and scientific computing, and it even has some use in video editing applications like DaVinci Resolve.

Editor’s Note:

Matt Bach is the head of Puget Labs, a division of Puget Systems that does research and benchmarking to test workstation-level software packages. Puget Systems is a specialized builder of gaming and workstation PCs. This article was originally published on the Puget blog. Republished with permission.

With Nvidia's latest GPUs, the GeForce RTX 3080 10GB and RTX 3090 24GB, the performance you can get from a single GPU has increased dramatically. Unfortunately, the power these cards require (and consequently the heat they generate) has also increased. In addition, almost all the GPU models available from Nvidia and third-party manufacturers are not designed for multi-GPU configurations, which in many cases limits you to only one or two cards.

However, Gigabyte has recently launched a blower-style RTX 3090 that should give us our best chance of using three or four RTX 3090s in a workstation: the GeForce RTX 3090 TURBO 24G.

This type of blower-style cooling system is much better for multi-GPU configurations as it exhausts the majority of the heat directly out the back of the chassis. And when we are dealing with four 350 watt video cards, that is 1,400 watts of heat that we certainly want out of the system as quickly as possible.

While the cooler design may help with heat output, we also have the problem of total power draw. A single 1,600 watt power supply should be able to deliver 1,400 watts to the GPUs, but that doesn't leave much room for power spikes, let alone enough to run the CPU, motherboard, RAM, storage, and other devices inside the computer.
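The budget arithmetic above can be written out as a small Python sketch. It uses only the figures quoted in this article (350W per card, a 1600W power supply) and deliberately ignores transient spikes and PSU efficiency; the function name is purely illustrative.

```python
from typing import Tuple

def gpu_headroom(psu_w: int, gpu_w: int, gpus: int) -> Tuple[int, int, float]:
    """Return (total GPU watts, watts left over, leftover as a fraction of the PSU rating)."""
    gpu_total = gpu_w * gpus
    left = psu_w - gpu_total
    return gpu_total, left, left / psu_w

# Four 350W RTX 3090s on one 1600W supply (figures from the article).
total, left, frac = gpu_headroom(1600, 350, 4)
print(f"GPUs: {total} W, left for everything else: {left} W ({frac:.1%})")
```

That 200W (12.5% of the rating) has to cover the CPU, motherboard, RAM, storage, fans, and any spikes, which is why a single 1600W unit looks marginal on paper.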

This raises the question: is running four RTX 3090s in a desktop workstation actually feasible, or are the heat and power draw too much to handle?

Test Setup

To see if quad RTX 3090 is something we even want to consider offering, we wanted to put a number of configurations to the test to look at performance, temperatures, and power draw. The main system we will be using has the following specs:

| Test Platform | |
|---|---|
| CPU | Intel Xeon W-2255 10 Core |
| CPU Cooler | Noctua NH-U12DX i4 |
| Motherboard | Asus WS C422 SAGE/10G |
| RAM | 8x DDR4-3200 16GB REG ECC (128GB total) |
| Video Card | 1-4x Gigabyte RTX 3090 TURBO 24G |
| Storage | Samsung 970 Pro 512GB |
| PSU | 1-2x EVGA SuperNOVA 1600W P2 |
| Software | Windows 10 Pro 64-bit (Ver. 2004) |

While testing with up to three RTX 3090s is fairly straightforward, we have some serious concerns about power draw once we get to four GPUs. We are going to try a single 1600W power supply with stock settings, the same single power supply with each card's power limit set to 300W (which should bring the four GPUs to 1,200W, comfortably below the 1600W maximum), and a dual PSU setup to ensure none of the cards are starved for power.
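The article does not say how the 300W limit was applied. One common way on NVIDIA cards is the `nvidia-smi` power-limit option (`-pl`, with `-i` selecting a GPU by index), which needs administrator rights and only accepts values within the range the card allows. The Python sketch below assumes the four GPUs are numbered 0 to 3; the helper names are hypothetical, and it defaults to a dry run that only prints the commands.

```python
import subprocess
from typing import List

def power_limit_commands(gpu_count: int, watts: int) -> List[List[str]]:
    """Build one nvidia-smi call per GPU index (assumed to be 0..gpu_count-1)."""
    return [["nvidia-smi", "-i", str(i), "-pl", str(watts)] for i in range(gpu_count)]

def apply_power_limits(gpu_count: int, watts: int, dry_run: bool = True) -> List[List[str]]:
    """Print (dry run) or execute the power-limit commands; returns them either way."""
    cmds = power_limit_commands(gpu_count, watts)
    for cmd in cmds:
        if dry_run:
            print(" ".join(cmd))
        else:
            # Requires administrator/root rights; check=True raises if nvidia-smi fails.
            subprocess.run(cmd, check=True)
    return cmds

if __name__ == "__main__":
    apply_power_limits(4, 300)  # dry run: prints four commands
    print("GPU budget at 300W each:", 4 * 300, "W")
```

Compared with stock settings, this caps the four cards at 1,200W instead of 1,400W on paper, leaving twice as much room for the rest of the system.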

Power draw is a bit of a concern with quad RTX 3090

For our testing, we will look at performance, power draw, and GPU temperature with OctaneBench, RedShift, V-Ray Next, and the GPU Effects portion of our PugetBench for DaVinci Resolve benchmark.

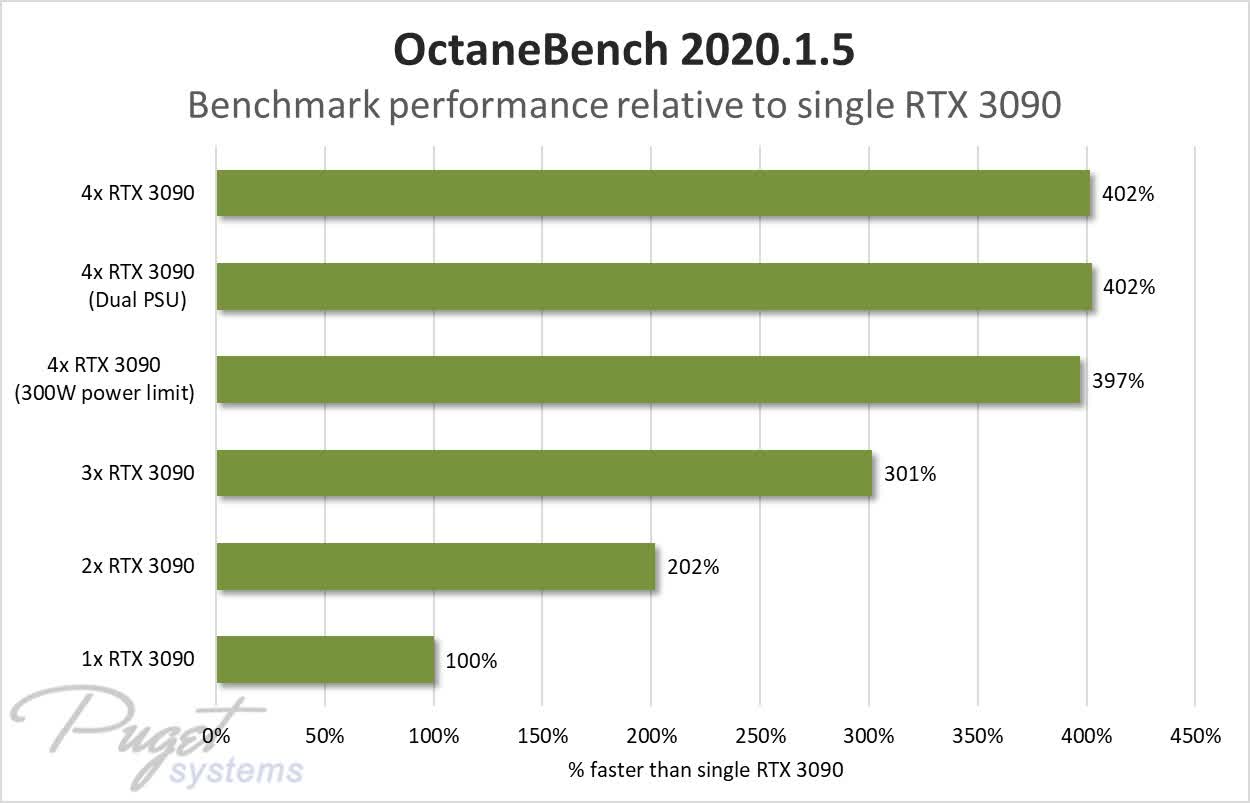

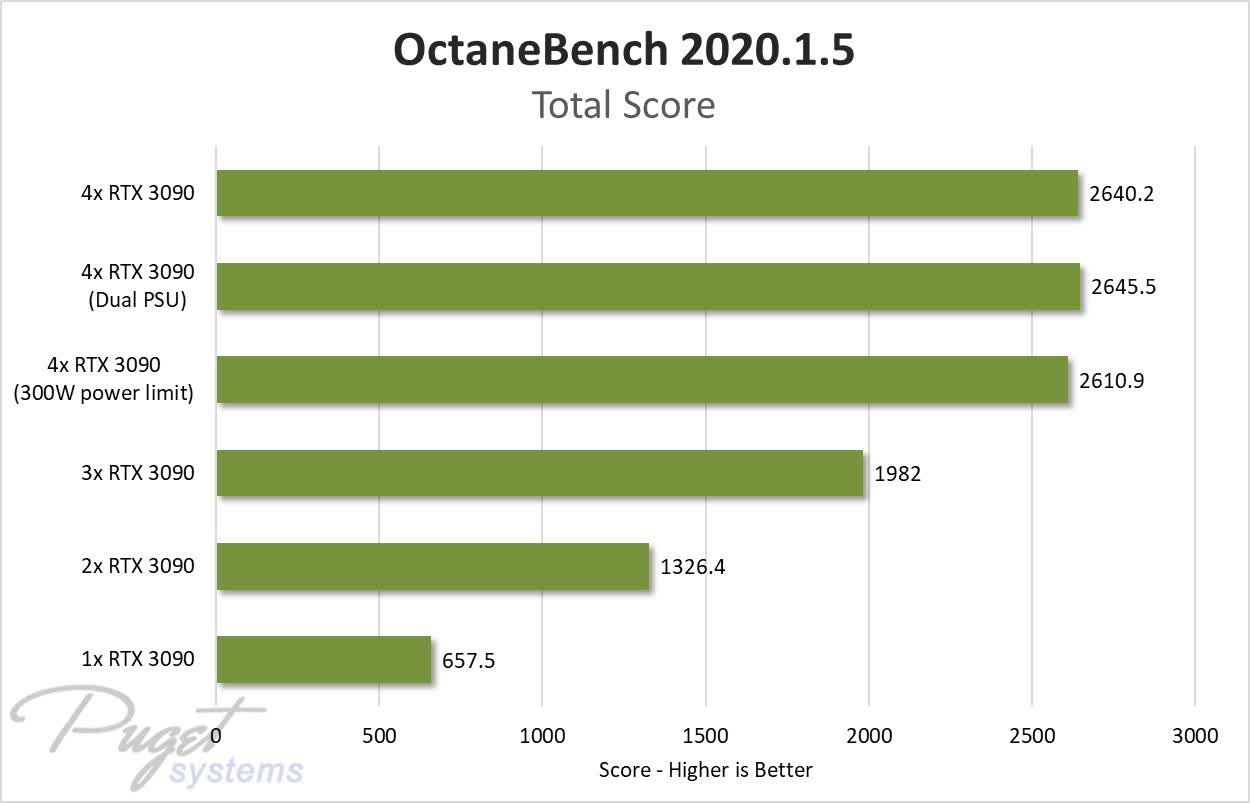

Benchmarks: OctaneBench

OctaneBench is often one of our go-to benchmarks for GPUs because it scales extremely well with multiple video cards. As you can see in the charts above, scaling from one to four RTX 3090 cards is nearly perfect, with four cards scoring almost exactly four times as high as a single RTX 3090.

In fact, the biggest surprise here was that limiting each GPU to 300W dropped performance by only a little more than 1%. We will cover power draw in more detail later, but limiting the GPUs reduced overall system power by ~16%, which is a great return for such a tiny drop in performance.
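To put that trade-off in a single number, the sketch below turns the two percentages into a change in performance per watt. The inputs are the article's approximate figures (about 1% lower score, about 16% lower system power), so the result is an estimate of whole-system efficiency, not GPU-only efficiency.

```python
def perf_per_watt_gain(perf_drop: float, power_drop: float) -> float:
    """Relative change in performance-per-watt after applying a power limit.

    Both arguments are fractions (0.01 means 1%).
    """
    return (1 - perf_drop) / (1 - power_drop) - 1

gain = perf_per_watt_gain(0.01, 0.16)
print(f"{gain:.1%}")  # 17.9%
```

In other words, giving up roughly 1% of the score buys something like an 18% improvement in work done per watt drawn from the wall.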

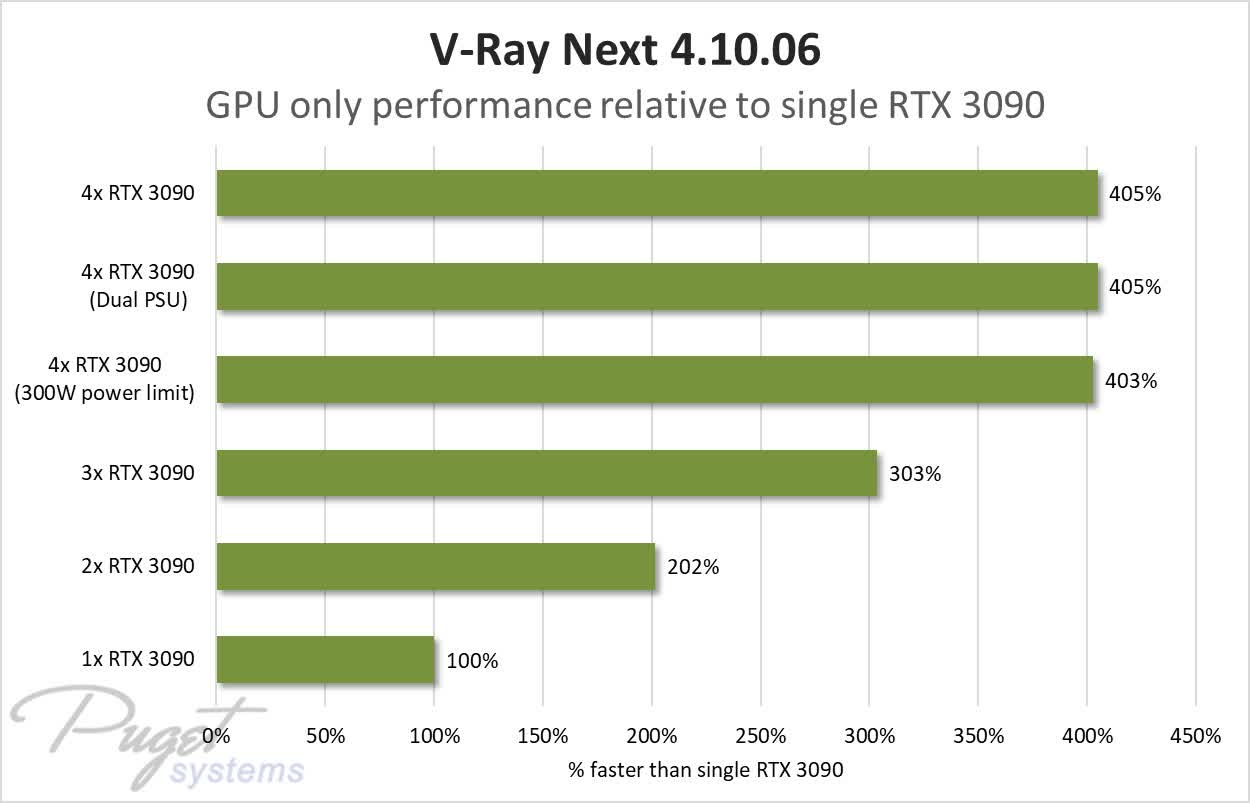

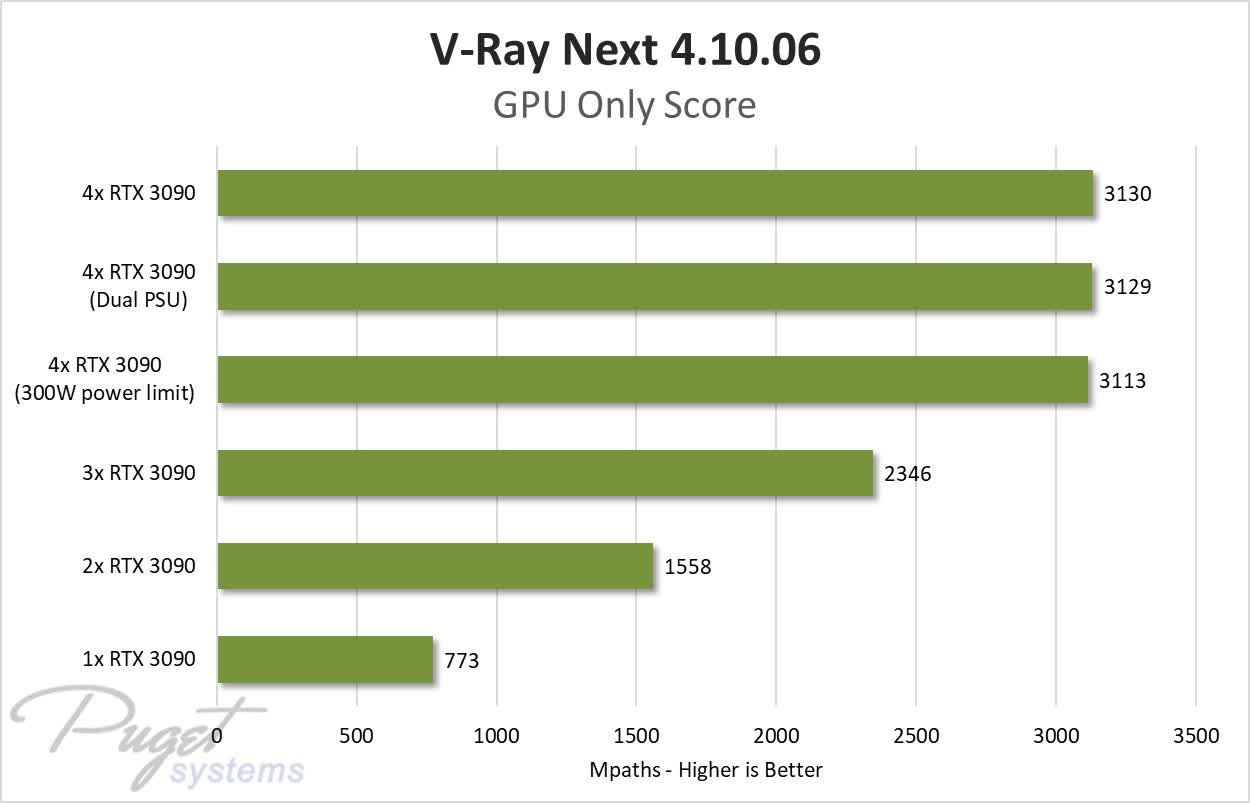

Benchmarks: V-Ray Next

V-Ray Next is quickly becoming another staple of our GPU testing because it not only scales just as well as OctaneRender, but also causes slightly higher overall system power draw, which makes it a great benchmark for stressing GPUs.

Scaling up to four RTX 3090 cards is perfect, and limiting the GPU power reduced the benchmark result by less than 1%. We also aren't seeing any increase in performance with dual power supplies, which means that so far, a single 1600W power supply appears to be doing OK.

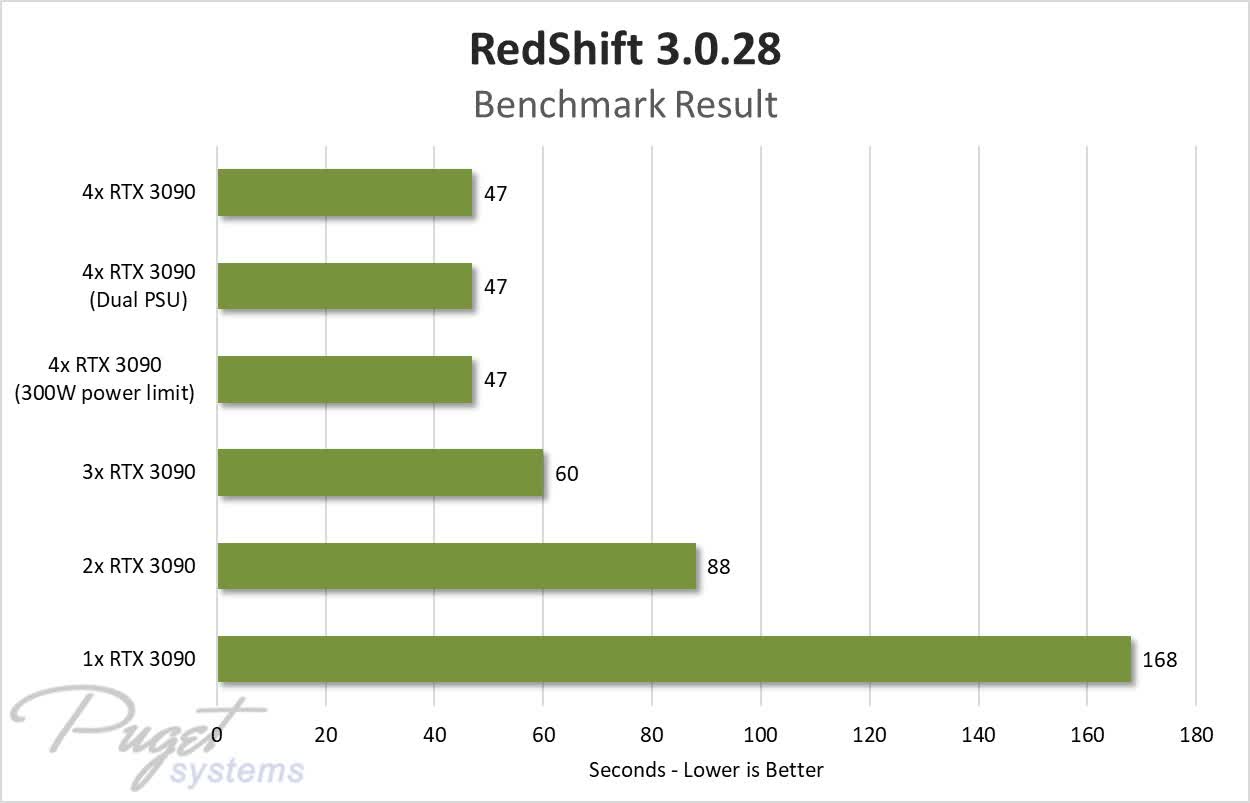

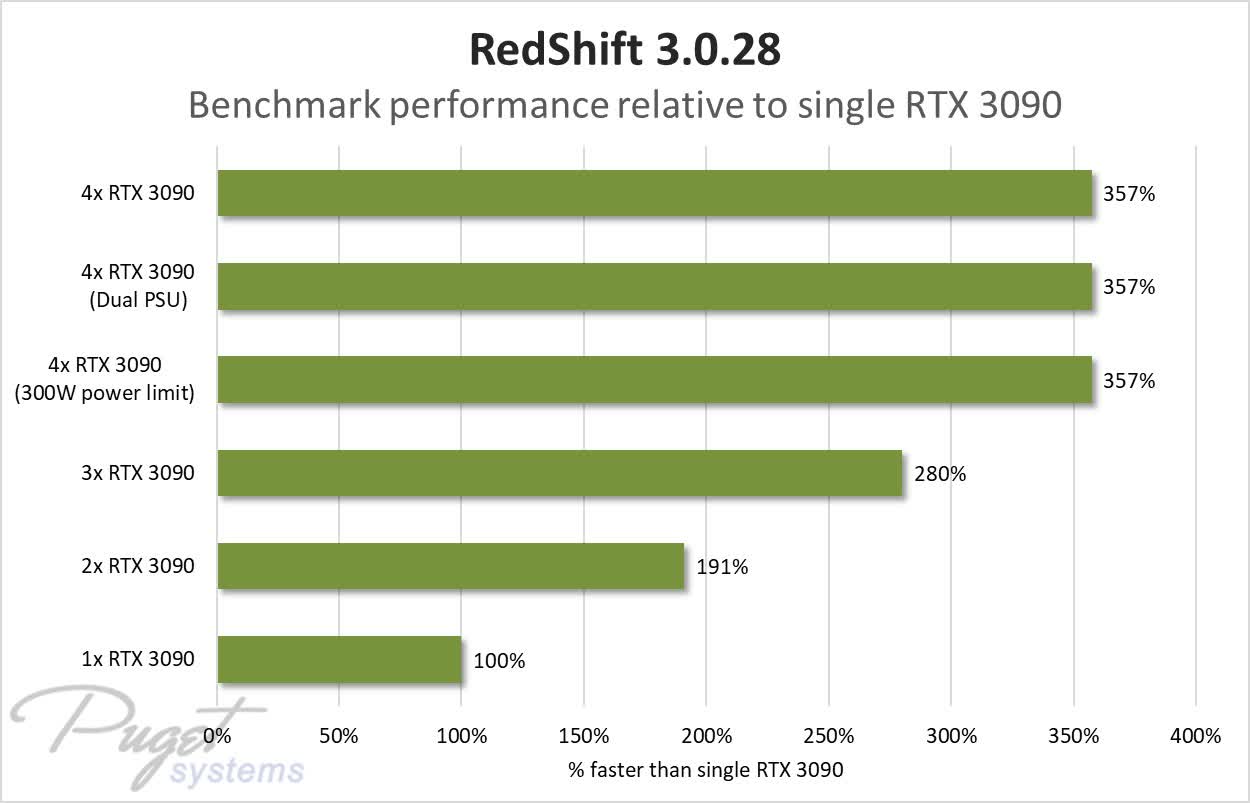

Benchmarks: RedShift

RedShift does not scale as well as OctaneRender or V-Ray, but it is still worth testing: its recent acquisition by Maxon (makers of Cinema 4D) means that we are likely to see more people using it in the near future.

One thing to note is that this benchmark returns the results in seconds, so a lower result is better (the opposite of our other tests).

In RedShift, scaling was not quite as good, but four RTX 3090 cards were still 3.6 times faster than a single card. Once again, power limiting the cards and using dual power supplies did not significantly affect performance.
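Because RedShift reports time while OctaneBench and V-Ray report scores, the speedup has to be computed differently before the results can be compared. The sketch below shows both cases plus scaling efficiency (speedup divided by GPU count, where 1.0 is perfect linear scaling). The 360 s and 100 s render times are hypothetical values chosen only to reproduce the 3.6x figure; the real times are in the chart above.

```python
def speedup_from_times(t_single: float, t_multi: float) -> float:
    """Time-based results (lower is better): speedup = old time / new time."""
    return t_single / t_multi

def speedup_from_scores(s_single: float, s_multi: float) -> float:
    """Score-based results (higher is better): speedup = new score / old score."""
    return s_multi / s_single

def scaling_efficiency(speedup: float, gpus: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 = perfect)."""
    return speedup / gpus

# Hypothetical RedShift times: 360 s on one card, 100 s on four.
redshift = speedup_from_times(360.0, 100.0)
print(redshift, scaling_efficiency(redshift, 4))  # 3.6 0.9
```

By this measure, RedShift reaches about 90% of ideal scaling on four cards, while OctaneBench and V-Ray are close to 100%.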

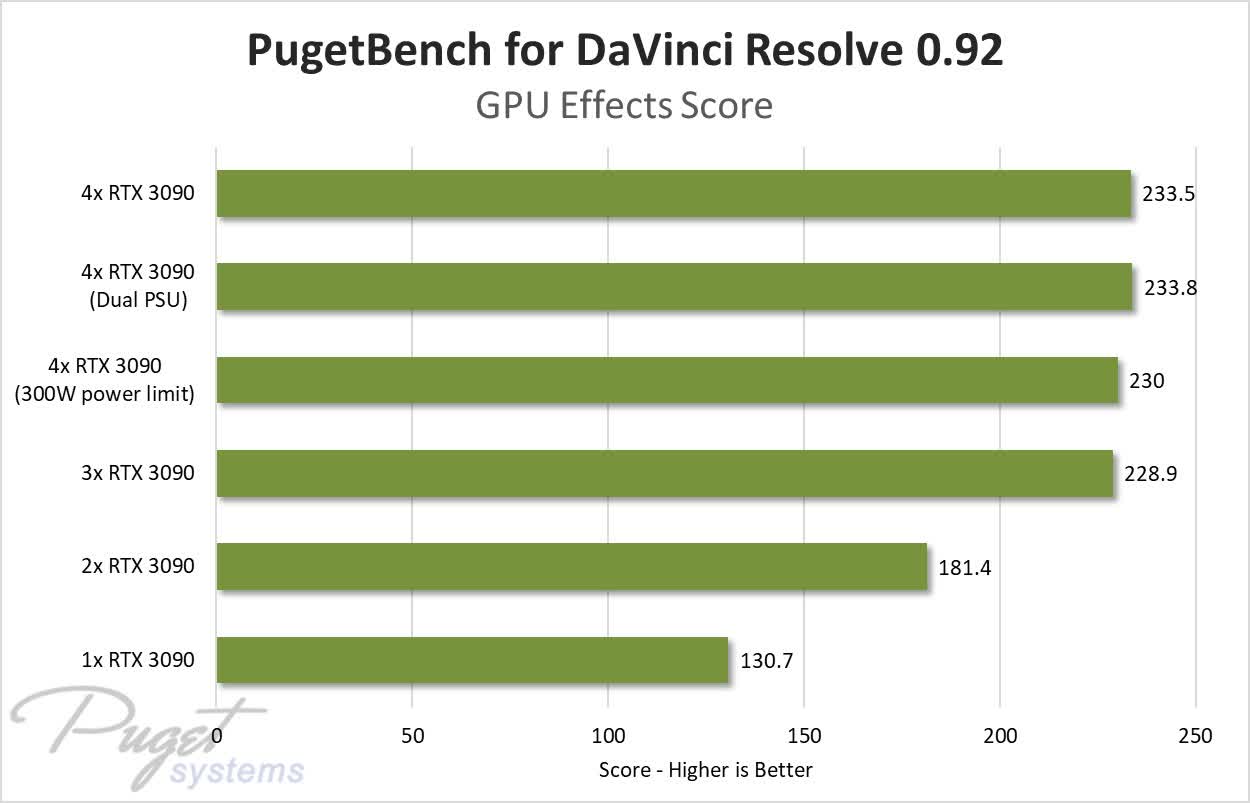

Benchmarks: DaVinci Resolve

To round out our testing, we wanted to look at something other than rendering. We had planned to include a few other tests such as NeatBench and a CUDA NBody simulation, but either they did not scale well with multiple GPUs, or we had issues running them because the RTX 3090 is so new.

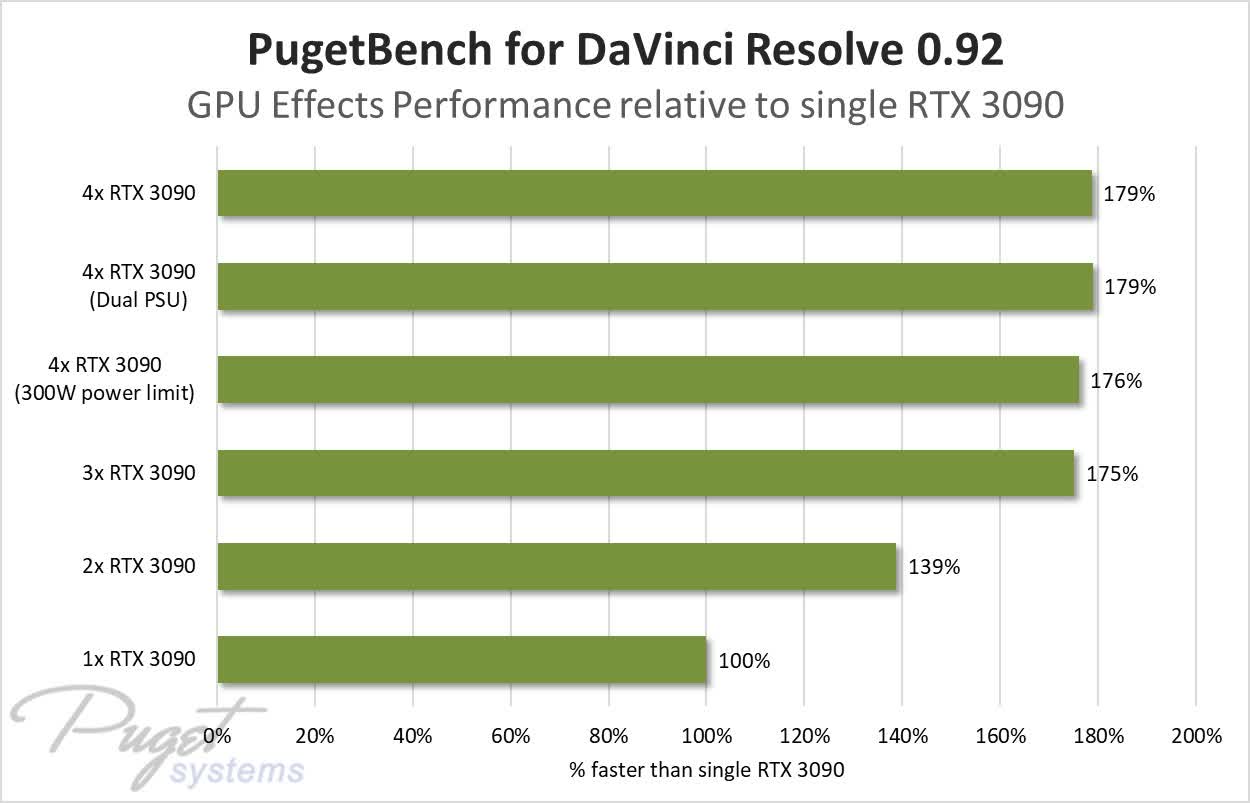

Our DaVinci Resolve benchmark, however, has support for these cards and the "GPU Effects" portion of the benchmark scales fairly well up to three GPUs.

We didn't see a significant increase in performance with four RTX 3090 cards, which may be partly due to our choice of CPU. This test loads the processor more than any of the others, and while that shouldn't entirely explain the performance wall, it may be a contributing factor.

Power Draw, Thermals, and Noise

Performance is great to look at, but one of the main reasons we wanted to do this testing was to discover whether putting four RTX 3090 cards into a desktop workstation was even feasible. The higher power draw and heat output mean that only cards with a blower-style cooler, like the Gigabyte RTX 3090 TURBO 24G, even have a chance, and even these cards are still rated for 350W each.

Power draw was one of our biggest concerns, so we decided to start there:

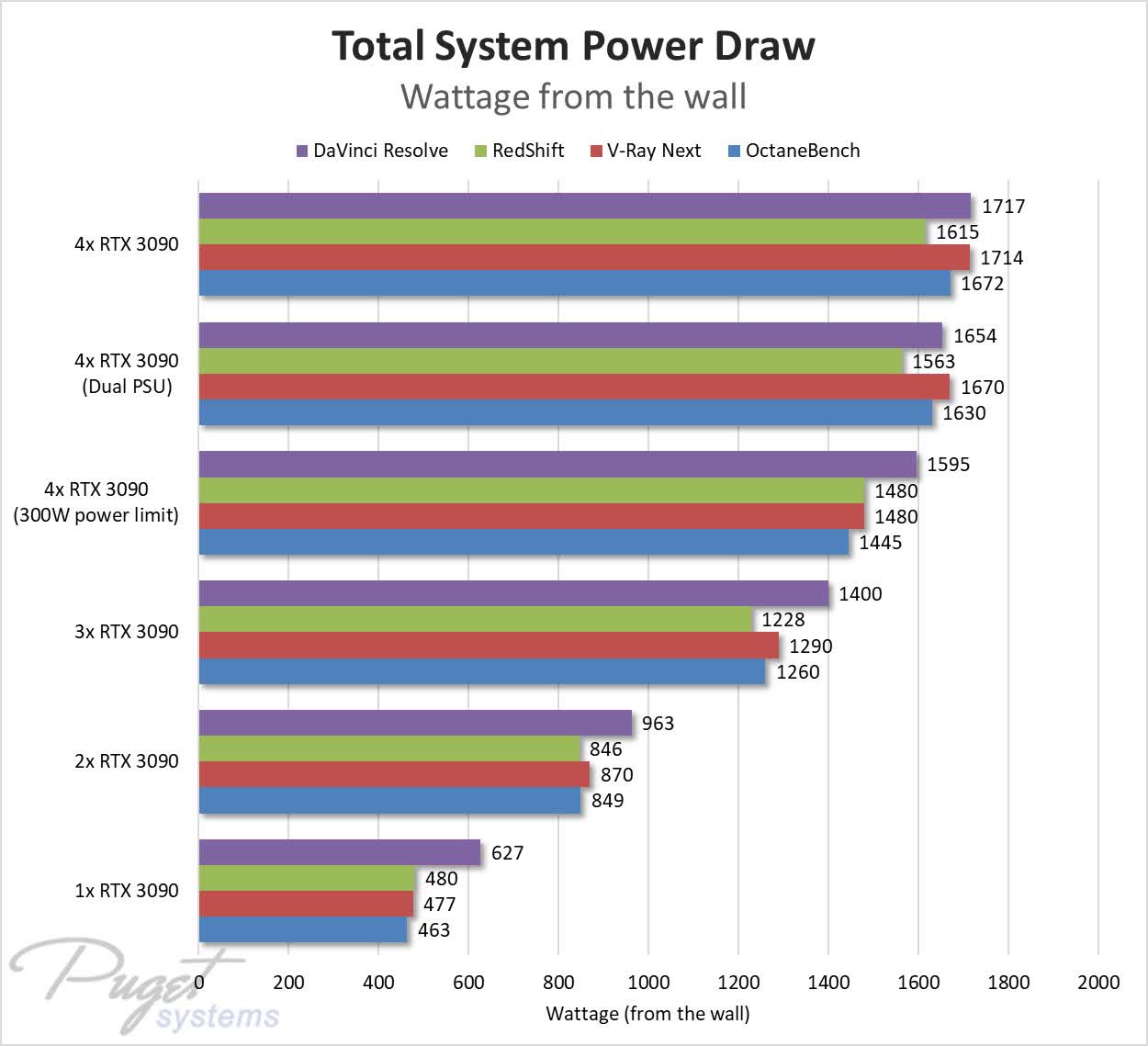

We measured power draw from the wall during each benchmark, which produced some very interesting results. First, the benchmark that pulled the most power was actually our DaVinci Resolve GPU Effects test. This is likely because it not only uses the GPUs but also puts a decent load on the CPU. As a result, this test is probably more representative of the kind of maximum load you might put on a system in an "everyday" situation.

Overall, we found that while the quad RTX 3090 setup was able to run on a single 1600W power supply, it is cutting it extremely close. Remember that this is power draw from the wall: going by the rated 92% efficiency of the EVGA unit we are using, our peak draw of 1,717 watts translates to roughly 1,580 watts delivered internally. That leaves a whole 20 watts to spare!
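The wall-to-internal conversion above is a single multiplication, sketched here in Python. It treats the 92% rated efficiency as constant; real PSU efficiency varies with load and input voltage, so this is an approximation, and the function name is illustrative.

```python
def dc_load_from_wall(wall_w: float, efficiency: float) -> float:
    """Estimate the power a PSU delivers internally from AC power measured at the wall."""
    return wall_w * efficiency

dc = dc_load_from_wall(1717, 0.92)  # peak wall draw and rated efficiency from the article
print(round(dc))         # 1580
print(round(1600 - dc))  # 20 W of headroom on a 1600W unit
```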

The system never shut down on us during testing, but this is far too close for long-term use. Had we used a more power-hungry CPU, or even just added a few more storage drives, we likely would have been pushed over the edge. So, while we technically succeeded with four RTX 3090s on a single 1600W power supply, that is definitely not something we would recommend.

There are larger power supplies that go all the way up to 2400W, but at that point you will want to bring in an electrician to make sure your outlets can handle the load. The 1,717 watts we saw translates to 14.3 amps of current on a 120V circuit, and most household and office outlets are wired to a 15 amp breaker. That leaves almost no room for your monitors, speakers, and other peripherals. If you do go the route of a 2400W PSU, you will need to use a 20 amp circuit.
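The current figure comes from I = P / V. The Python sketch below repeats that calculation and shows how many watts remain on 15 amp and 20 amp circuits at 120V. It assumes a power factor close to 1 (which active-PFC power supplies approach under heavy load) and looks only at the breaker's rated current; the helper names are illustrative.

```python
def current_amps(watts: float, volts: float = 120.0) -> float:
    """Current drawn by a load: I = P / V (assumes power factor close to 1)."""
    return watts / volts

def spare_watts(breaker_amps: float, load_w: float, volts: float = 120.0) -> float:
    """Watts left on a circuit before the breaker's rated current is reached."""
    return breaker_amps * volts - load_w

peak_w = 1717  # peak wall draw measured in the article
print(f"{current_amps(peak_w):.1f} A")  # 14.3 A
for breaker in (15, 20):
    print(f"{breaker} A circuit: about {spare_watts(breaker, peak_w):.0f} W left")
```

On a 15 amp circuit that leaves only about 83W for everything else plugged into it, compared with about 683W on a 20 amp circuit.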

But power draw aside, how did the Gigabyte cards handle the heat they generate? 1,700 watts is more heat than most electric space heaters put out, and that much heat can be very hard to manage within a computer chassis.

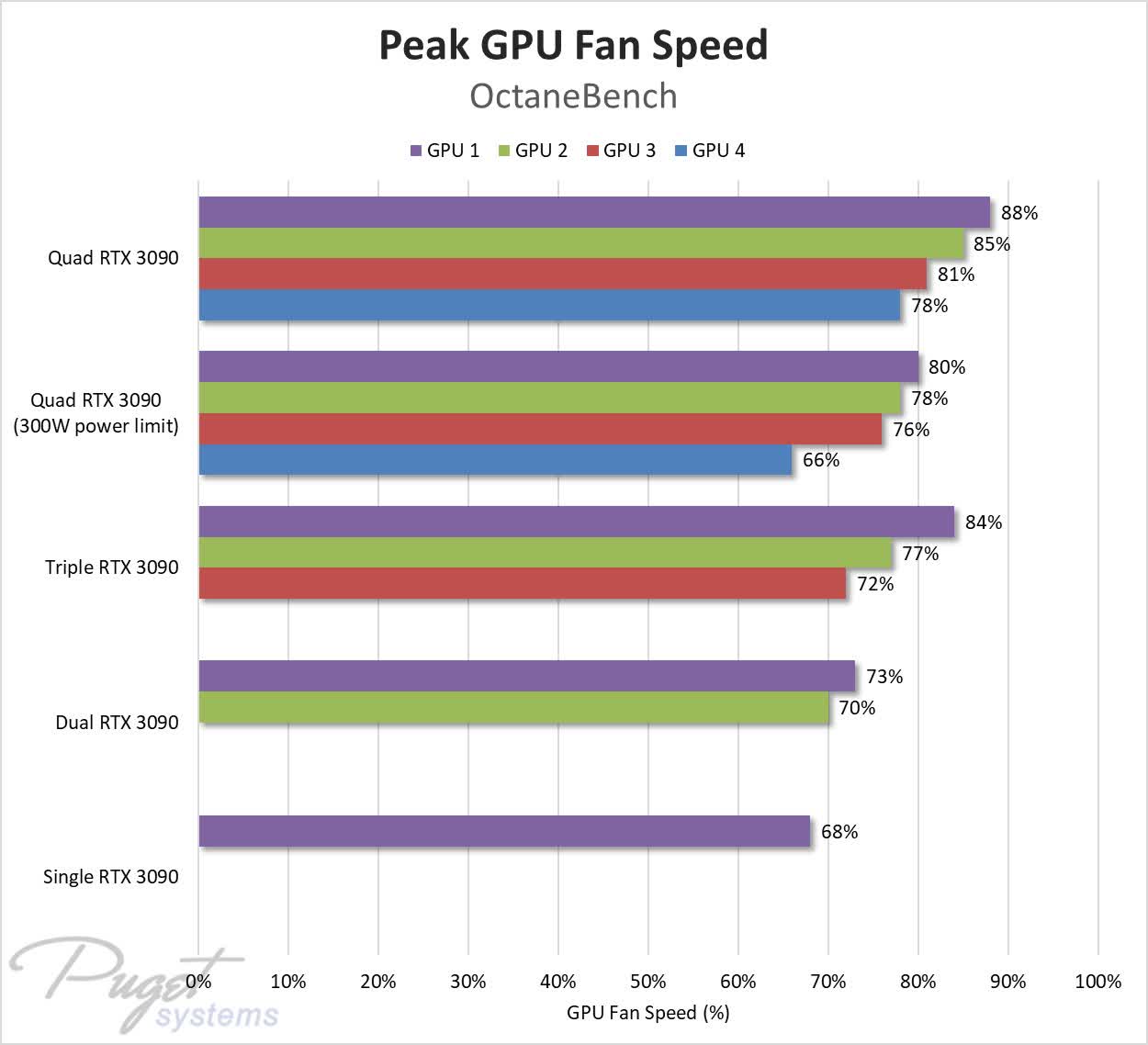

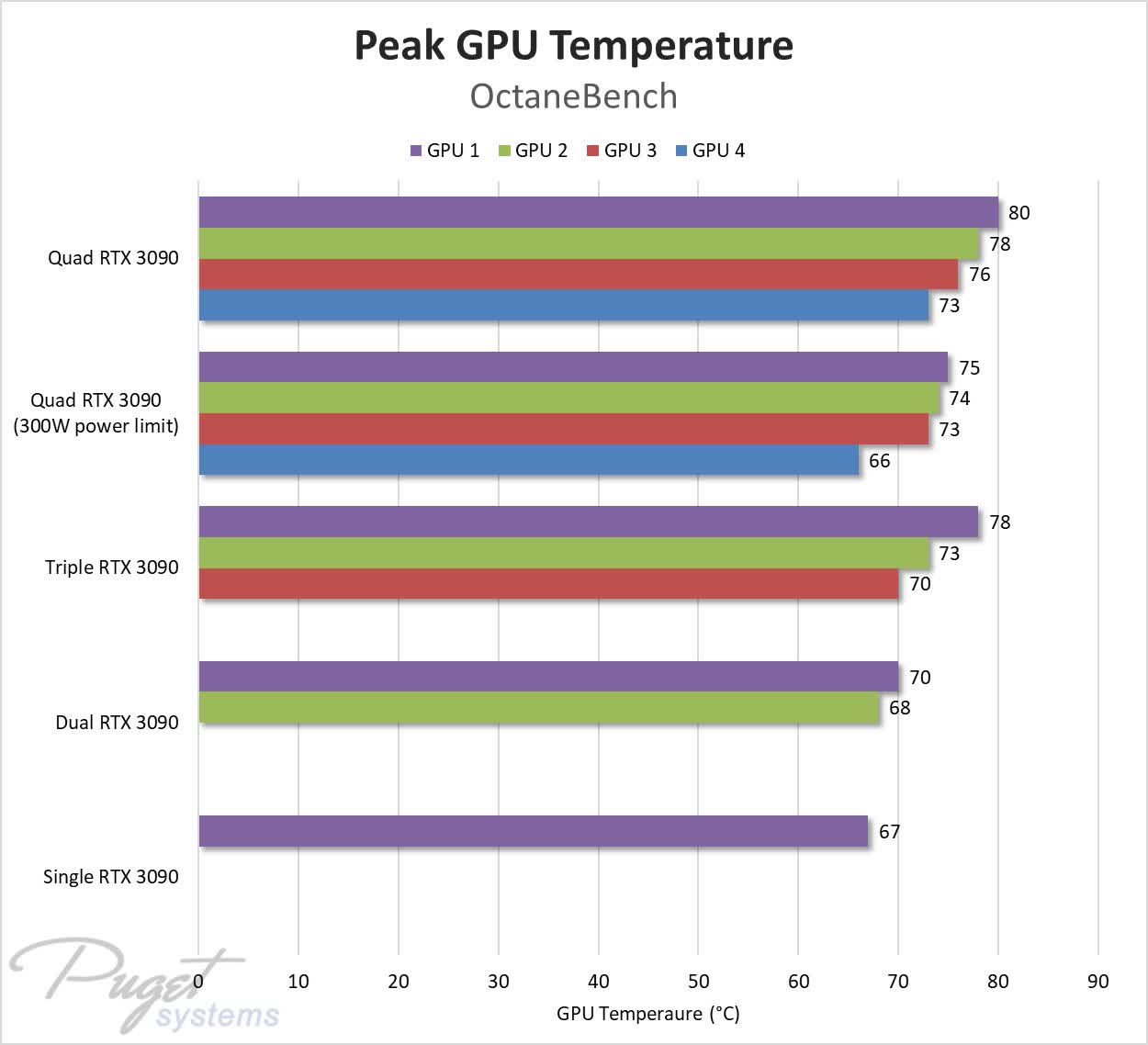

In the two charts above, we are looking at the peak GPU temperature for each card, as well as the peak GPU fan speed for each configuration in OctaneBench. We recorded results for the other tests as well, but OctaneBench was the only test that ran long enough to truly get the system up to temperature.

Surprisingly, the temperatures were not bad at all. Even with all four GPUs running at full speed, temperatures ranged from 73C on the bottom GPU to just 80C on the top card. However, GPU temperature is only half the picture. GPU coolers (and CPU coolers, for that matter) are tuned to increase fan speed gradually, so not only do the temperatures have to be acceptable, but there must also be enough fan speed headroom to account for higher ambient temperatures, longer load times, and the heat generated by other components that might be installed in the future.

In this case, quad RTX 3090s peaked at 88% of the maximum fan speed. To us, that is starting to cut it close, but technically it should be enough headroom - especially if you beefed up the chassis cooling with additional front or side fans.
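The article does not say how the temperatures and fan speeds were logged. One possible approach is to let `nvidia-smi` print CSV samples every second (`--query-gpu=index,temperature.gpu,fan.speed --format=csv,noheader,nounits -l 1`) and then reduce them to per-card peaks. The Python sketch below does that reduction. The log lines are made up rather than taken from the article, the function name is hypothetical, and it assumes every field is numeric (`nvidia-smi` can print `[N/A]` for unsupported fields, which this sketch does not handle).

```python
import csv
import io
from typing import Dict, Tuple

# Made-up sample lines in the nvidia-smi CSV format (not the article's data).
SAMPLE_LOG = """0, 65, 70
1, 62, 68
0, 69, 74
1, 61, 71
"""

def peak_temp_and_fan(log_text: str) -> Dict[int, Tuple[int, int]]:
    """Return {gpu_index: (peak temperature in C, peak fan speed in %)}."""
    peaks: Dict[int, Tuple[int, int]] = {}
    for row in csv.reader(io.StringIO(log_text), skipinitialspace=True):
        if not row:  # skip blank lines
            continue
        idx, temp, fan = (int(field) for field in row)
        old_temp, old_fan = peaks.get(idx, (temp, fan))
        peaks[idx] = (max(old_temp, temp), max(old_fan, fan))
    return peaks

peaks = peak_temp_and_fan(SAMPLE_LOG)
worst_fan = max(fan for _, fan in peaks.values())
print(peaks)                                     # {0: (69, 74), 1: (62, 71)}
print(f"fan headroom left: {100 - worst_fan}%")  # 26%
```

Applied to the article's result, a peak of 88% leaves 12% of fan speed in reserve.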

The last thing we wanted to consider was noise. The temperatures of these cards were actually fairly decent, but if the system sounds like a jet engine, that may not be acceptable for many users. Noise is very difficult to convey in writing, so we decided the best approach was to record a short video of each configuration so you can at least hear the relative difference.

All things considered, the noise level of four RTX 3090 cards is not too bad. It certainly isn't what anyone would call quiet, but for the amount of compute power these cards are able to provide, most end users would likely deem it acceptable.

Quad RTX 3090: Feasible or Fantasy?

Interestingly enough, we had very few issues getting four RTX 3090 cards to work in a desktop workstation. A blower-style card like Gigabyte's RTX 3090 TURBO 24G is certainly a must, but even under load the GPU temperatures peaked at 80C without the fans going above 90% of their maximum speed.

The only true problem we ran into was power draw. We measured a maximum of 1,717 watts from the wall, which not only exceeds what we would be comfortable with from a 1600W power supply, but also means you should run your system from a 20 amp circuit if possible. Most house and office outlets in the US are on 15 amp circuits, so if you decide to use one of the very few 2400W power supplies available, you may need to hire an electrician.

So, will we be offering quad RTX 3090 workstations? Outside of some very custom configurations, we are likely not going to offer this kind of setup to the general public due to the power draw concerns. On the other hand, triple RTX 3090 is something we are likely to pursue, although it has not yet passed our full qualification process. Even three RTX 3090 cards will give a very healthy performance boost over a quad RTX 2080 Ti setup, which is great news for users who need faster render times or who work in AI/ML development.

https://www.techspot.com/news/87264-quad-geforce-rtx-3090-tested-single-pc-running.html