At which point does the jump up from the affordable Ryzen 5600 to the relatively expensive Ryzen 7600 make sense? That's what we'll be testing in this CPU and GPU scaling benchmark.

https://www.techspot.com/review/2615-ryzen-7600-vs-ryzen-5600/

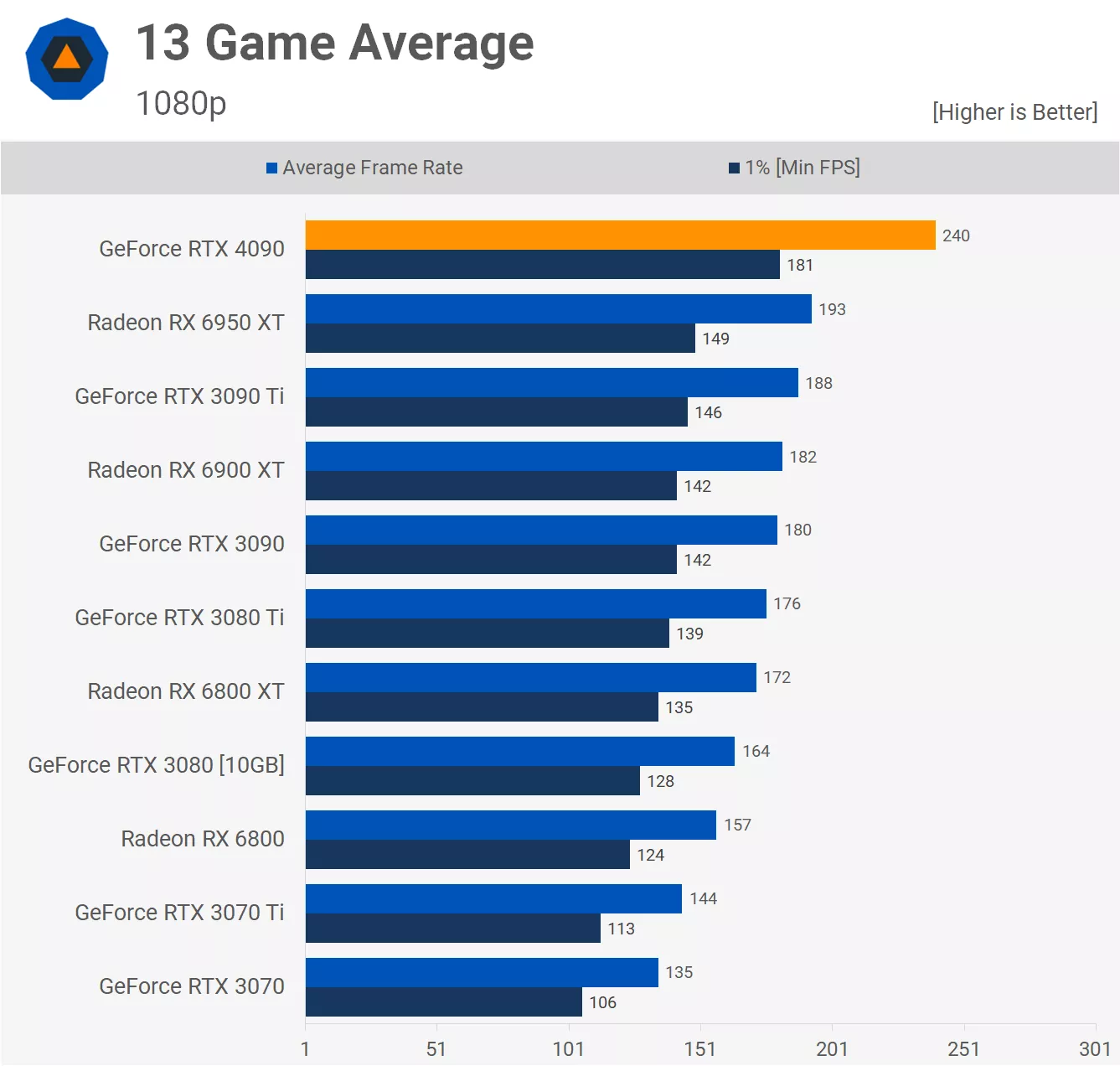

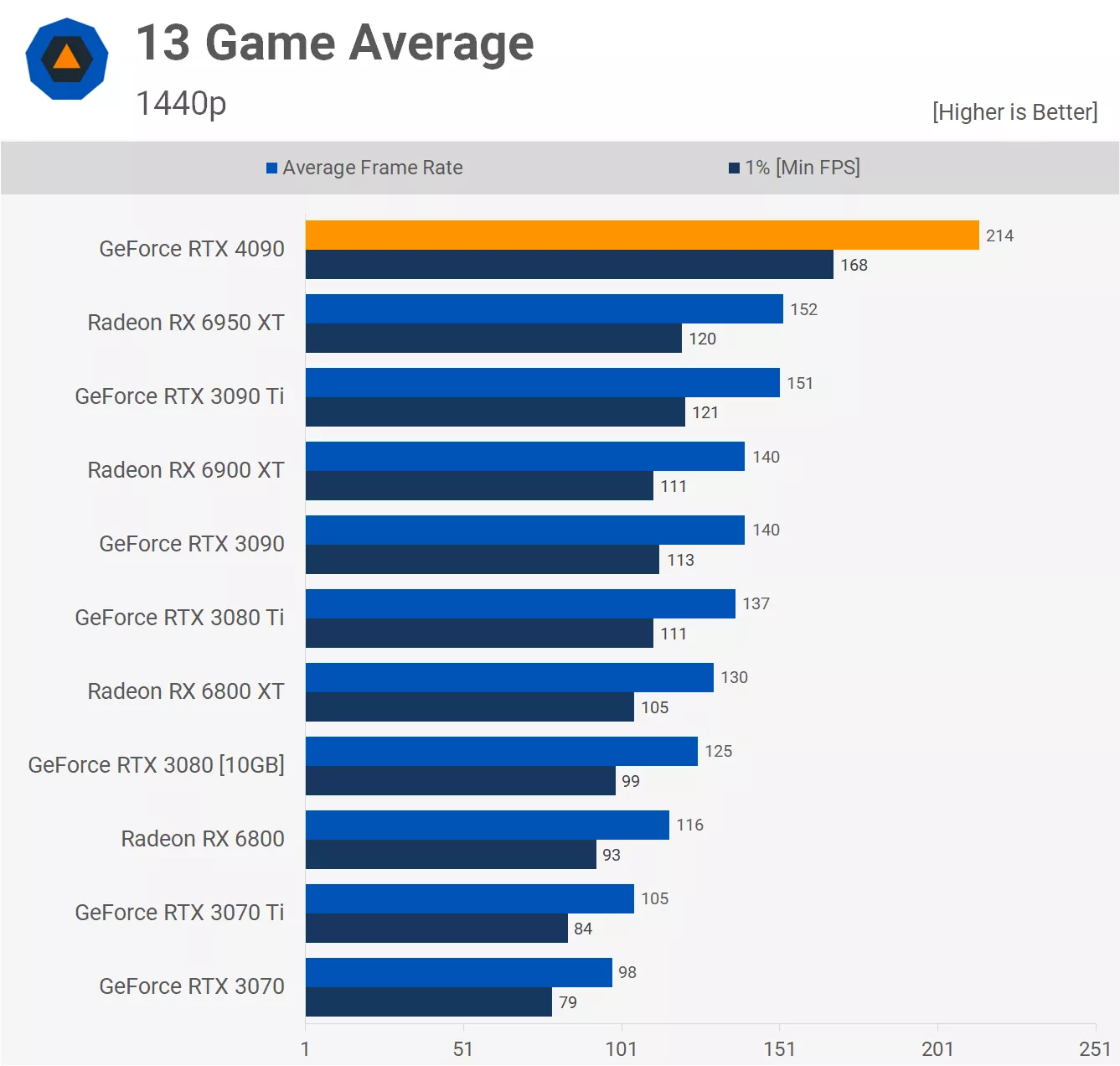

> It's clear that the 5600 is a bottleneck to the 4090, however, it would be nice to see this in a higher resolution since I think most people that would be interested in a 4090 would be playing at 1440p and hopefully 4K

The whole point of this test was to explore CPU bottlenecks, not GPU bottlenecks. Somehow people miss that point every time one of these tests is done.

> The whole point of this test was to explore CPU bottlenecks, not GPU bottlenecks. Somehow people miss that point every time one of these tests is done.

Sure but it's still nice to see actual use cases instead of a scenario only like 1%, if even, of all pc gaming users see.

> Sure but it's still nice to see actual use cases instead of a scenario only like 1%, if even, of all pc gaming users see.

You think it is more of an "actual use case" to show 4k results when testing the 5600X vs 7600X?

> You think it is more of an "actual use case" to show 4k results when testing the 5600X vs 7600X?

Yes? People who purchase 4090 aren't gonna play on a 1080p monitor unless they're going for extreme high FPS for competitive games and they will not be on 7600 then lol.

Are you aware of what this article is about?

What would the sweet spot be for a 5600X: RX 6750 XT, RX 6800 XT, or 3070 Ti? I'm curious how a 5600X and a 3080 would do. I dunno about you folks but I seem to know quite a few people with that pairing.

People who have 5600 aren't gonna be interested in extreme high FPS so they're gonna be fine with 60 and I'm sure lots are curious how it's gonna fare on higher resolutions even if 1080p is still ubiquitous today.

I understand these articles and benchmarking take a long time, but it would have been better to have had the Ryzen 3600 in the list too, as I imagine it would be a better representative of a worthwhile upgrade to the 7600 with a faster GPU. My favourite article Steve did was the 4 years of Ryzen, which helped me with my decision to upgrade my R5 1600 to the 3600 as I was buying a 3060 Ti.

I'm using a 5600 and a 6800XT and game at 1440p/144Hz at Hi-Ultra graphical settings and these make for a good combination. Even the trashpile that is Forspoken (the Demo) runs at 65-90 fps with FSR off (it's on by default in all game presets) and settings one step down from Ultra. CPU use runs at 30-70%. For more normal games like CP2077 and SotTR, I'm not 100% GPU-limited but majority of the time am at Hi-Ultra, with over 100FPS in both.

Now, if you were to ask which would I upgrade to get more performance, I'd next upgrade the 5600 as a meaningful CPU upgrade (5900X/5800X3D) will be like 1/4 the price of a meaningful GPU upgrade (4080) and the 6800XT has a little more to give in some circumstances.

But for gaming the 5600 and the 6800XT are a high end gaming system made in heaven as I got the 5600 for $160 and the 6800 XT for $560. Not their lowest but pretty damn affordable for great performance.

It's clear that the 5600 is a bottleneck to the 4090. However, it would be nice to see this at a higher resolution, since I think most people who would be interested in a 4090 would be playing at 1440p and hopefully 4K if they are going to part with that kind of money for a GPU. The 5600 is a huge bottleneck compared to the 7600 at 1080p, but I'm guessing that it is significantly closer at 4K. In any case, I think you would want a bare minimum of a 5800X if you are going to go with a 4090.
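One rough way to picture why the gap might shrink at 4K is to treat the delivered frame rate as the lower of a CPU-limited ceiling and a GPU-limited ceiling. The sketch below assumes that simplified model. The function names and every frame rate in it are made-up placeholders for illustration, not figures from the review.

```python
def delivered_fps(cpu_cap: float, gpu_cap: float) -> float:
    """Simplified model (an assumption): the lower ceiling sets the frame rate."""
    return min(cpu_cap, gpu_cap)


def cpu_gap_percent(slow_cpu_cap: float, fast_cpu_cap: float, gpu_cap: float) -> float:
    """How much slower the slow-CPU system is, as a percentage of the fast one."""
    slow = delivered_fps(slow_cpu_cap, gpu_cap)
    fast = delivered_fps(fast_cpu_cap, gpu_cap)
    return (fast - slow) / fast * 100.0


# Hypothetical ceilings, not measurements: two CPUs paired with the same GPU.
SLOW_CPU_CAP = 120.0   # fps the slower CPU could feed to the GPU
FAST_CPU_CAP = 160.0   # fps the faster CPU could feed to the GPU
GPU_CAP_1080P = 300.0  # fps the GPU could render at a low resolution
GPU_CAP_4K = 100.0     # fps the same GPU could render at a high resolution

gap_1080p = cpu_gap_percent(SLOW_CPU_CAP, FAST_CPU_CAP, GPU_CAP_1080P)
gap_4k = cpu_gap_percent(SLOW_CPU_CAP, FAST_CPU_CAP, GPU_CAP_4K)
print(f"1080p gap: {gap_1080p:.1f}%  4K gap: {gap_4k:.1f}%")
```

With these placeholder numbers the CPU gap is visible when the GPU ceiling is high and disappears once the GPU ceiling drops below both CPU ceilings. Real games will not follow a clean `min()` rule, so only actual 1440p and 4K results can say how close the two CPUs end up.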

I hear that. I have a 3800X, but my best bud gifted me his 5600X and I picked up an RX 6750 XT for $510 Cdn. Best deal I could find in months, so I jumped on it. Seems like a good combo. I don't expect a giant leap over the 3800X, but I'm hoping to see less of a bottleneck, and my temps will certainly be lower, which is always a bonus in the quest for better 1% lows and such.

I can't remember for sure, but I think the Tomb Raider benchmark tells you how badly you're bottlenecked, so I'll compare before and after the swap.

> LOL, the 5600 is good for way more than 60fps. It's fine for 1440p/144, depending on your GPU and graphical settings of course.

I mean yeah I know but I don't think any tech sites have done a specific benchmark on it. I get that it won't paint a pretty picture on all these newer CPUs however, and that's probably something they want to avoid for their sponsors.

> Totally agree. I do appreciate these tests and find them very interesting, but a 1080p benchmark is kinda pointless for a 4090.

I don't agree that dismissing the most common resolution, especially one that is widely used for e-sports, makes sense.

> The whole point of this test was to explore CPU bottlenecks, not GPU bottlenecks. Somehow people miss that point every time one of these tests is done.

The 4090 is clearly bottlenecked at 1080p by both these processors, but if you are buying a 4090 to play at 4k, which is very reasonable, will the 5600x still slow you down enough to count? It's relevant because 40/RDNA3 series cards could still be bottlenecked even at higher resolutions, but likely by much lower percentages. If at 4k the 5600x is less than 5% slower, that might be relevant for someone not ready for a platform upgrade.
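The 5% rule of thumb above is easy to check once average frame rates for both CPUs at the same resolution are in hand. A minimal sketch, assuming you supply your own measured averages; the helper names are hypothetical and the sample inputs are placeholders, not results from the article.

```python
def percent_slower(slower_fps: float, faster_fps: float) -> float:
    """Deficit of the slower system, as a percentage of the faster one."""
    return (faster_fps - slower_fps) / faster_fps * 100.0


def can_skip_upgrade(slower_fps: float, faster_fps: float,
                     threshold_percent: float = 5.0) -> bool:
    """True when the deficit stays under the chosen threshold (5% by default)."""
    return percent_slower(slower_fps, faster_fps) < threshold_percent


# Placeholder averages for illustration only.
print(can_skip_upgrade(97.0, 100.0))  # small deficit, under the threshold
print(can_skip_upgrade(80.0, 100.0))  # large deficit, over the threshold
```

The threshold is a personal call. Someone chasing 1% lows might apply the same check to those figures instead of averages.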